AI Policy

What is an AI Policy?

An AI policy refers to the laws, regulations, and organizational guidelines that govern the development and deployment of AI systems. These policies aim to keep AI technologies aligned with ethical standards and regulatory requirements, addressing key issues such as fairness, privacy, security, and economic impact.

With the rapid adoption of generative AI, companies, schools, and governments must implement structured AI policies to regulate its usage effectively. Organizations can use an AI policy template to set clear guidelines on AI governance, risk management, and compliance.

Why is an AI Policy Important?

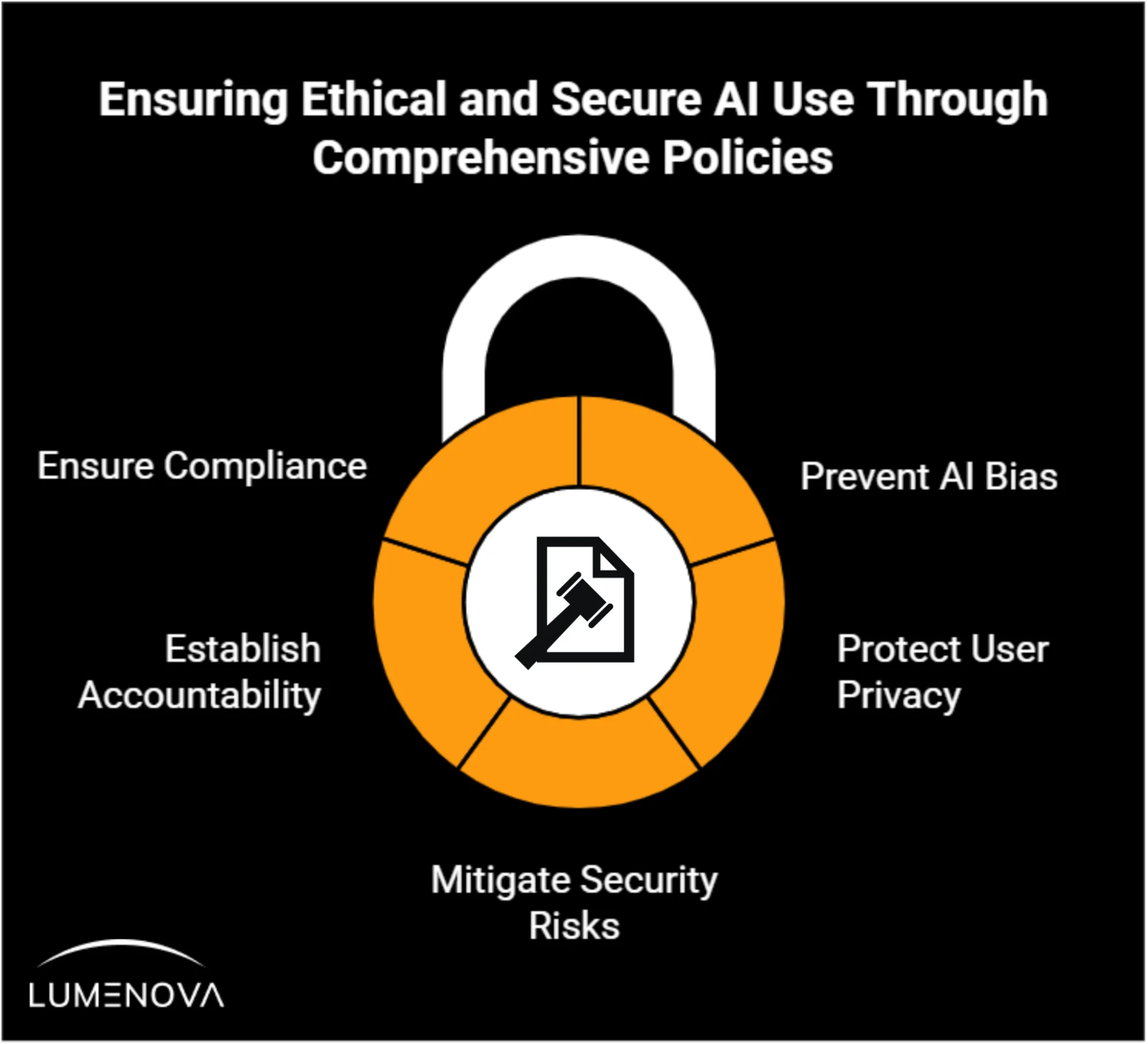

A well-defined AI use policy helps organizations:

- Reduce AI bias and promote fairness in AI-driven decisions.

- Protect user privacy by complying with data protection laws such as GDPR and CCPA.

- Mitigate AI security risks, including cyber threats and unauthorized AI applications.

- Establish accountability for AI-generated content in organizational contexts.

- Ensure compliance with national and international AI governance and safety standards.

Key Elements of an Effective AI Policy

1. AI Fairness & Non-Discrimination

AI algorithms must be designed to avoid bias and unfair treatment. Organizations should implement policies for monitoring algorithmic fairness and ensuring adherence to responsible AI practices.

2. AI Privacy & Data Protection

When AI systems process sensitive data, companies need a clear data protection policy that aligns with regulations such as the GDPR, HIPAA, and the CCPA. The policy should define how that data is collected, stored, accessed, and secured.

3. AI Security & Ethical Use

Organizations should develop policies that include security protocols to prevent AI misuse, such as deepfakes, disinformation, or AI-powered fraud. AI usage policies should outline ethical AI practices and ensure accountability in AI-driven decisions.

4. AI’s Economic & Workplace Impact

The rise of AI automation raises concerns about job displacement. AI policies should define AI’s role in decision-making, employee monitoring, and workplace automation while ensuring ethical AI use.

Developing an AI Policy for Companies & Schools

Companies

An effective policy should include:

- Organization-specific AI governance framework for compliance with AI laws.

- GenAI policy covering the responsible use of AI-generated content.

- Tailored guidelines for business operations, which policy generator tools can help draft.

Schools & Universities

A proper policy for schools must outline:

- AI use in education, both for students and educators.

- Security measures ensuring safe and ethical use of AI applications in classrooms.

- University policies guiding research institutions on AI governance and compliance.

Final Thoughts

An effective AI governance policy helps ensure that AI systems are transparent, accountable, and ethically designed. Whether a policy addresses corporate regulation, general AI usage, or responsible AI practices, organizations need clear frameworks to mitigate AI risks while realizing AI’s potential.

Frequently Asked Questions

An AI policy is a set of rules, laws, or usage protocols that dictate how AI can be developed and used responsibly. It aims to keep AI systems aligned with ethical principles, legal standards, and security requirements while reducing risks like bias, misinformation, and data breaches. AI policies are becoming more critical as businesses, schools, and governments increasingly rely on AI-driven tools. Organizations adopt AI governance frameworks to keep automated decisions transparent and accountable, while universities craft policies that encourage responsible use of generative tools and safeguard academic integrity. Governments are also introducing national and regional AI regulations to promote responsible AI development, such as US AI policy, UK AI policy, and the EU AI Act.

AI policies in companies help regulate AI’s role in decision-making, data processing, and automation. A corporate AI policy sets out how AI is to be used transparently, ethically, and securely while complying with applicable regulations. Businesses implement acceptable AI use policies to set guidelines for AI-generated content, HR automation, and AI marketing tools. Many organizations use an AI policy generator to customize their frameworks and align them with laws like the GDPR and CCPA. Without clear policies, companies risk security breaches, lawsuits over biased AI outcomes, and regulatory fines.

An AI policy for schools must define how AI can be used in learning, research, and student evaluations. With generative AI tools widely accessible, universities are adopting acceptable AI use policies to regulate AI-assisted assignments and prevent academic dishonesty. An effective education-sector AI policy should center on teaching students to use generative tools safely and effectively, require educators to disclose any AI-assisted grading, and bar research applications that could facilitate harmful or unethical outcomes. Schools also need a responsible AI policy to ensure that AI-driven tools like chatbots and automated grading systems are fair and unbiased.

AI policies must address cybersecurity risks, ensuring that AI systems do not expose sensitive information. A strong AI security policy protects against cyber threats, unauthorized access, and data leaks. AI-driven fraud and deepfake scams are growing concerns, making it crucial for organizations to establish guidelines on AI-generated media and misinformation. A responsible use AI policy ensures that AI does not manipulate or deceive users, while companies develop AI governance policies to maintain transparency in AI decision-making.

Governments worldwide are introducing AI regulations to govern AI use responsibly. US AI policy has included executive orders on AI safety, ethics, and workplace automation. The UK’s AI policy emphasizes AI innovation while addressing security risks. The EU AI Act classifies AI applications by risk level and imposes strict requirements on high-risk AI systems. Businesses and institutions must stay updated on AI policy news to remain compliant with these evolving laws.

Organizations can start by identifying their AI use cases, assessing risks, and defining ethical guidelines. Many companies use an AI policy template or an AI policy generator to structure their governance framework. A sample AI policy or a generative AI policy template provides a foundation, covering essential elements like bias mitigation, transparency, and data security. A corporate AI policy example can help businesses align their AI governance with legal requirements. By implementing a responsible AI policy, organizations ensure AI serves ethical, secure, and transparent purposes.