Algorithmic Discrimination

Algorithmic discrimination happens when AI systems make decisions that treat people unfairly based on attributes like race, gender, age, income level, or other personal characteristics. It often arises when the data the system was trained on reflects historical bias or inequality.

This kind of bias isn’t always easy to see, and it doesn’t always stem from bad intentions. But it has real consequences. People may be denied loans, jobs, healthcare, or public services (not because of their actual qualifications or needs, but because of the patterns a model learned from its data).

At its core, algorithmic discrimination is a failure of oversight. And fixing it is not just a technical task. It’s a governance issue.

How Algorithmic Discrimination Happens

Let’s be direct: most AI systems aren’t designed to discriminate. The problem lies in how they’re built: the data they’re trained on and the choices made during development. Here are a few of the most common ways algorithmic discrimination takes hold:

Biased Training Data

If the historical data used to train a model encodes biased decisions, the model will learn to reproduce them. For example, if past hiring decisions favored one group over others, a model trained on those decisions will likely do the same. The model isn’t deliberately copying anyone. It’s fitting the statistical patterns in its data (and often, those patterns are skewed).
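To make the mechanism concrete, here is a minimal Python sketch, assuming a toy set of hypothetical hiring records and a deliberately simple “model” that predicts the most common historical outcome for each combination of group and qualification. It is not a real hiring system; the names `HISTORY` and `fit_majority_model` are illustrative.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, qualified, hired).
# In this made-up history, qualified candidates from group "B" were hired
# far less often than equally qualified candidates from group "A".
HISTORY = (
    [("A", True, True)] * 8 + [("A", True, False)] * 2
    + [("B", True, True)] * 3 + [("B", True, False)] * 7
    + [("A", False, False)] * 5 + [("B", False, False)] * 5
)


def fit_majority_model(records):
    """Learn the most common past outcome for each (group, qualified) pair."""
    counts = defaultdict(lambda: [0, 0])  # (group, qualified) -> [not hired, hired]
    for group, qualified, hired in records:
        counts[(group, qualified)][int(hired)] += 1
    return {key: hired > not_hired for key, (not_hired, hired) in counts.items()}


model = fit_majority_model(HISTORY)
print(model[("A", True)])  # True: qualified A candidates are predicted "hire"
print(model[("B", True)])  # False: qualified B candidates are predicted "no hire"
```

The model never sees anyone’s intent. It simply fits the skewed outcomes it was given and hands them back as predictions.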

Incomplete Representation

If a group is underrepresented in the training data, the system is likely to perform worse for that group. That could mean a facial recognition tool that is less accurate for some faces than others, or a medical model that misclassifies patients from certain backgrounds more often.
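One simple way to surface this is to break evaluation results down by group instead of reporting a single aggregate score. The sketch below assumes you already have each record’s group, true label, and model prediction; the data is made up for illustration, and `accuracy_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_group(groups, y_true, y_pred):
    """Return accuracy computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation set: group "B" has far fewer examples than group "A".
groups = ["A"] * 8 + ["B"] * 4
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall)                                    # 0.75
print(accuracy_by_group(groups, y_true, y_pred))  # {'A': 0.875, 'B': 0.5}
```

An overall accuracy of 75% looks acceptable, but it hides the fact that the model is right only half the time for the smaller group.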

Proxy Features

Even when you remove protected attributes like race or gender, the model can still learn from other features that act as proxies for them. ZIP code, income, and education level can all correlate with sensitive attributes, which lets the model effectively reconstruct those attributes and produce biased outcomes.
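A quick way to check whether a feature is acting as a proxy is to ask how well it alone predicts the protected attribute you removed. The sketch below assumes a toy dataset with two made-up ZIP code values: it guesses each person’s group from the most common group in their ZIP code and compares that with the naive baseline of always guessing the largest group. Both `proxy_strength` and `majority_baseline` are illustrative helpers, not a standard API.

```python
from collections import Counter, defaultdict


def proxy_strength(proxy_values, protected_values):
    """Share of records whose protected attribute is correctly guessed from the
    proxy alone, by predicting the most common group for each proxy value."""
    by_proxy = defaultdict(Counter)
    for proxy, protected in zip(proxy_values, protected_values):
        by_proxy[proxy][protected] += 1
    hits = sum(counter.most_common(1)[0][1] for counter in by_proxy.values())
    return hits / len(protected_values)


def majority_baseline(protected_values):
    """Share of records correctly guessed by always predicting the largest group."""
    return Counter(protected_values).most_common(1)[0][1] / len(protected_values)


# Hypothetical data: the protected attribute has been dropped from the model's
# inputs, but ZIP code is still there, and the two are closely linked.
zip_codes = ["Z1"] * 5 + ["Z2"] * 5
groups = ["A"] * 5 + ["B"] * 4 + ["A"]

print(majority_baseline(groups))          # 0.6
print(proxy_strength(zip_codes, groups))  # 0.9
```

If the proxy recovers the protected attribute much better than the baseline, removing the attribute itself has not removed the information.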

Subjective Labeling

Where humans label data, bias can easily creep in. Labels may reflect stereotypes or unchecked assumptions, which the model then learns as if they were ground truth.

Optimization Without Fairness

Most systems are optimized for accuracy, efficiency, or profit. But if fairness isn’t part of that equation, discrimination can happen even when everything looks like it’s working as expected.
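Here is a minimal sketch of how that can happen, assuming a handful of hypothetical applicants with model scores and known outcomes. It picks the decision threshold that maximizes overall accuracy, with no fairness constraint, and then checks the true positive rate (the share of genuinely qualified applicants who are approved) for each group. All names and numbers are illustrative.

```python
# Hypothetical scored applicants: (group, model_score, truly_qualified).
APPLICANTS = [
    ("A", 0.90, 1), ("A", 0.80, 1), ("A", 0.70, 1), ("A", 0.60, 0), ("A", 0.30, 0),
    ("B", 0.65, 1), ("B", 0.50, 0), ("B", 0.40, 0), ("B", 0.35, 1), ("B", 0.20, 0),
]


def accuracy(rows, threshold):
    """Share of applicants whose approve/deny decision matches their true label."""
    return sum((score >= threshold) == bool(label) for _, score, label in rows) / len(rows)


def true_positive_rate(rows, threshold, group):
    """Share of truly qualified applicants in `group` who are approved."""
    qualified = [score for g, score, label in rows if g == group and label == 1]
    return sum(score >= threshold for score in qualified) / len(qualified)


# Optimize for accuracy alone by trying every observed score as a threshold.
candidates = sorted({score for _, score, _ in APPLICANTS})
best = max(candidates, key=lambda t: accuracy(APPLICANTS, t))

print(best, accuracy(APPLICANTS, best))           # 0.65 0.9
print(true_positive_rate(APPLICANTS, best, "A"))  # 1.0
print(true_positive_rate(APPLICANTS, best, "B"))  # 0.5
```

The tuned model is 90% accurate, yet a qualified applicant from group B is half as likely to be approved as one from group A. Nothing in the objective asked the optimizer to notice.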

Why Algorithmic Discrimination Matters

This isn’t just about bad press or compliance checklists. When algorithmic discrimination goes unchecked, it leads to real harm. The impact is financial, legal, reputational, and ethical.

Legal Risk

Anti-discrimination laws apply whether a decision is made by a human or an AI. If an algorithm treats people unfairly based on protected traits, it can put your organization at serious legal risk.

Trust

People need to trust that AI systems are fair. If a model is biased, that trust erodes. That affects your product, your brand, and your long-term ability to scale responsibly.

Performance

A biased system is a broken system. It’s making decisions based on incomplete or skewed logic. This reduces the quality and consistency of AI outcomes while limiting AI’s utility.

Ethics

Bias in AI reinforces the very problems we’re trying to solve with technology. If your system is perpetuating inequality, you’re not a victim of the problem; you’re part of it.

How We Advise Teams to Prevent Algorithmic Discrimination

At Lumenova AI, we’ve worked with organizations building and deploying AI at scale, and we’ve seen how easily bias can slip through. Our view is simple: if you don’t have structured governance in place, you’re not mitigating discrimination. You’re just hoping it doesn’t happen.

Here’s how we recommend teams approach it, based on what we know works.

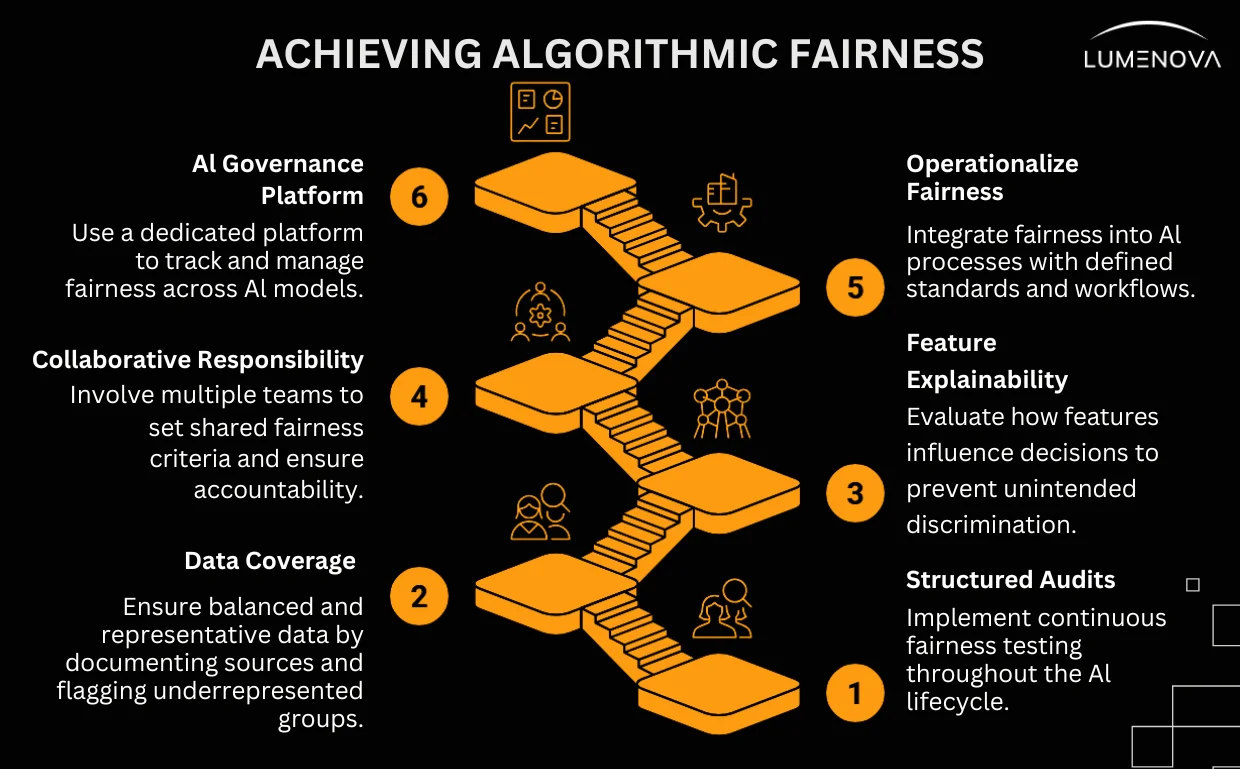

1. Start With Structured Audits, Not One-Off Checks

Bias isn’t something you look for once. It’s something you manage continuously. We advise teams to run fairness testing throughout the AI lifecycle (not just pre-launch). That means testing for disparities in outcomes, understanding which groups are most affected, and tracking fairness metrics over time. If your model is live, your audit process should be too.

We also push for full transparency. Audits that happen in a spreadsheet no one sees don’t count. Documenting what was tested, when, and how is just as important as the results.
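As an illustration of what a repeatable, documented audit step might look like, here is a minimal Python sketch. It assumes you have each person’s group and the model’s yes/no decision, computes per-group selection rates and the ratio of the lowest to the highest rate (often called the disparate impact ratio), and appends a dated record to an audit log. The 0.8 flag threshold follows the widely cited “four-fifths” rule of thumb from US employment guidance; treat it as an assumption for your own policy to set, and treat `AuditRecord` and `run_audit` as hypothetical names.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AuditRecord:
    model_version: str
    run_date: date
    selection_rates: dict
    disparate_impact: float
    flagged: bool


def selection_rates(groups, decisions):
    """Share of positive decisions (e.g. approvals) within each group."""
    totals, positives = {}, {}
    for group, decision in zip(groups, decisions):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def run_audit(model_version, run_date, groups, decisions, threshold=0.8):
    """Compute selection rates and the min/max ratio, and flag low ratios."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    # If nobody was selected in any group, treat the rates as equal (ratio 1.0).
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return AuditRecord(model_version, run_date, rates, ratio, ratio < threshold)


audit_log = []  # in practice, persist this somewhere reviewers can see it

# Hypothetical decisions from one scheduled audit run.
groups = ["A"] * 4 + ["B"] * 4
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
audit_log.append(run_audit("v1.3", date(2024, 1, 15), groups, decisions))

print(audit_log[-1].selection_rates)  # {'A': 0.75, 'B': 0.25}
print(audit_log[-1].flagged)          # True
```

Running the same function on a schedule, and keeping every record rather than only the latest, is what turns a one-off check into a trend you can track.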

2. Use Training Data You Can Actually Stand Behind

Bias often starts with the data. We advise teams to go beyond dataset “cleanliness” and start asking who’s represented. Is your data balanced? Does it reflect the real population that your model will affect? If you’re relying on historical data, are you reproducing past exclusions?

Our recommendation is to document sources, flag underrepresented groups, and apply coverage thresholds where needed. You can’t fix what you don’t track, and when it comes to fairness, data coverage is everything.
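Coverage thresholds can be as simple as comparing each group’s share of the training data with its share of the population the model will serve. The sketch below assumes you have reference population shares from some external source (the figures here are invented) and uses two hypothetical thresholds: a group is flagged if its data share falls below 80% of its population share, or if it has fewer than 30 examples. The real thresholds are a governance decision, not a constant.

```python
from collections import Counter


def coverage_report(groups, population_shares, min_ratio=0.8, min_count=30):
    """Compare each group's share of the data with its expected population share.

    Assumes every value in `population_shares` is greater than zero.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, pop_share in population_shares.items():
        count = counts.get(group, 0)
        data_share = count / total
        ratio = data_share / pop_share
        report[group] = {
            "count": count,
            "data_share": data_share,
            "coverage_ratio": ratio,
            "flagged": ratio < min_ratio or count < min_count,
        }
    return report


# Hypothetical training set and reference population shares.
training_groups = ["A"] * 700 + ["B"] * 250 + ["C"] * 50
population = {"A": 0.60, "B": 0.25, "C": 0.15}

report = coverage_report(training_groups, population)
print([group for group, row in report.items() if row["flagged"]])  # ['C']
```

Group C makes up 5% of the data but an assumed 15% of the population, so it is flagged even though 50 examples clears the minimum count.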

3. Know What Your Features Are Doing (Not Just What They Are)

Feature explainability needs to be standard. We advise every team to evaluate how their model makes decisions: not just which features it uses, but how much weight each one carries.

A feature that looks harmless on the surface (like location, education level, or browser history) can introduce discrimination when it’s used as a proxy for something sensitive. You don’t need to remove every risky input, but you need to understand its influence. And you need to justify it.
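One model-agnostic way to see how much a feature actually drives decisions is permutation-based: shuffle that feature’s values across rows and count how often the model’s decisions change. The sketch below is a label-free variant of permutation importance, written against a hypothetical approval rule and made-up applicant rows; in practice you would call your real model’s prediction function, and you might prefer an established implementation such as scikit-learn’s `sklearn.inspection.permutation_importance`.

```python
import random


def model(row):
    """Hypothetical approval rule that leans heavily on a location-derived score."""
    score = 0.2 * min(row["years_experience"] / 10, 1.0) + 0.8 * row["zip_score"]
    return score >= 0.5


def permutation_sensitivity(predict, rows, feature, repeats=20, seed=0):
    """Fraction of decisions that flip when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    baseline = [predict(row) for row in rows]
    flips = 0
    for _ in range(repeats):
        shuffled = [row[feature] for row in rows]
        rng.shuffle(shuffled)
        for row, value, before in zip(rows, shuffled, baseline):
            if predict({**row, feature: value}) != before:
                flips += 1
    return flips / (repeats * len(rows))


# Hypothetical applicants: zip_score is a location-derived feature.
years = [1, 3, 5, 8, 12, 2, 4, 6, 9, 15]
zip_scores = [0.9] * 5 + [0.1] * 5
browsers = ["chrome", "firefox"] * 5
rows = [
    {"years_experience": y, "zip_score": z, "browser": b}
    for y, z, b in zip(years, zip_scores, browsers)
]

for feature in ("zip_score", "years_experience", "browser"):
    print(feature, permutation_sensitivity(model, rows, feature))
```

In this toy setup, shuffling the location-derived score flips decisions while shuffling years of experience never changes an outcome. That is exactly the kind of influence a team should be able to see, explain, and justify.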

4. Make Fairness Everyone’s Responsibility

One of the biggest risks we see is when fairness gets siloed, pushed off to a single ML engineer or compliance reviewer. That doesn’t work. Bias prevention has to be collaborative.

Our advice is simple: make sure legal, risk, compliance, data science, and product teams are all involved early. Set shared criteria for fairness. Agree on what’s acceptable, and what’s not. Put decisions in writing. And give each team access to the tools and visibility they need to spot problems.

5. Operationalize It (Don’t Improvise)

Policies don’t matter if no one enforces them. Risk logs don’t offer any value if no one reads them. That’s why we built our platform the way we did, to help teams make fairness a working part of their AI process, not a one-time fix.

We advise every organization to define standards for fairness, document mitigation steps, and assign ownership for bias testing and approval. Put concrete, structured workflows in place that don’t rely on memory or good intentions. If bias mitigation isn’t baked into how you build, review, and ship AI, it isn’t real governance; it’s risk waiting to scale and, potentially, cascade.
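To show what a structured workflow can mean in practice, here is a minimal sketch of a release gate that refuses to pass a model until the agreed fairness threshold is met, an owner for bias testing is named, mitigation steps are written down, and the required teams have signed off. The class names, the 0.8 threshold, and the set of required sign-offs are all hypothetical placeholders for whatever your organization actually agrees on; this is not a description of any particular platform.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FairnessPolicy:
    # Hypothetical values; in practice these come from a written, cross-team agreement.
    min_disparate_impact: float = 0.8
    required_signoffs: frozenset = frozenset({"legal", "risk", "data_science"})


@dataclass
class ReleaseCandidate:
    model_version: str
    disparate_impact: float
    bias_test_owner: str
    mitigation_notes: str
    signoffs: set = field(default_factory=set)


def release_gate(candidate, policy):
    """Return blocking issues; an empty list means the release may proceed."""
    issues = []
    if candidate.disparate_impact < policy.min_disparate_impact:
        issues.append(
            f"disparate impact {candidate.disparate_impact:.2f} "
            f"is below {policy.min_disparate_impact:.2f}"
        )
    if not candidate.bias_test_owner:
        issues.append("no owner assigned for bias testing")
    if not candidate.mitigation_notes.strip():
        issues.append("mitigation steps are not documented")
    missing = policy.required_signoffs - candidate.signoffs
    if missing:
        issues.append("missing sign-off from: " + ", ".join(sorted(missing)))
    return issues


policy = FairnessPolicy()
candidate = ReleaseCandidate(
    model_version="v2.0",
    disparate_impact=0.72,
    bias_test_owner="fairness-review-team",
    mitigation_notes="Reweighted training data for underrepresented groups.",
    signoffs={"legal", "data_science"},
)
for issue in release_gate(candidate, policy):
    print(issue)
# disparate impact 0.72 is below 0.80
# missing sign-off from: risk
```

Because the gate returns reasons rather than a bare pass/fail, its output can go straight into the written record of why a release was held.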

6. Use an AI Governance Platform

Governance needs infrastructure. You can’t track fairness across dozens of models, multiple data pipelines, and overlapping teams without a platform built for it.

What Lumenova AI Brings to the Table

At Lumenova AI, we support Responsible AI by giving organizations the control they need to govern AI (with structure, traceability, and purpose). Algorithmic discrimination is one of the most urgent risks in AI today, and we don’t approach it as a theoretical problem. We treat it as a real, measurable, and addressable risk that deserves system-level governance.

Our Responsible AI software helps organizations identify bias early, understand how it enters the system, and apply policies to reduce its impact before models go live. We provide tools to:

- Run structured fairness audits across demographic groups

- Trace training data and feature-level contributions

- Document model decisions and justification logic

- Apply policy guardrails and risk thresholds to sensitive use cases

- Monitor performance and fairness post-deployment

We don’t believe fairness is a checkbox. We believe it’s part of the system architecture.

Final Thought About Algorithmic Discrimination

Discrimination in AI systems doesn’t always show up in logs or dashboards. But that doesn’t mean it’s not happening. If you’re not actively managing it, you’re allowing it to scale (invisibly, and at speed). Preventing it starts with visibility. It takes cross-functional alignment, clear policies, and systems that are built for oversight.

That’s the kind of governance we deliver. Building AI responsibly is not just about what the model can do; it’s about what the organization chooses to allow.

And we believe accountability should be built in, not added later.