The Responsible AI Blog

Get the latest news and insights about Responsible AI and its transformative impact on today’s business landscape.

Featured

July 1, 2025

Why Traditional Security Tools Fail to Manage Generative AI Risks

Traditional tools miss GenAI risks like prompt injection and data leaks. Lumenova AI ensures security, compliance, and trust with smart risk governance.

June 26, 2025

The Hidden Dangers of AI Bias in Healthcare Decision-Making

AI bias in healthcare can lead to dangerous outcomes. Only a robust AI governance platform can prevent a catastrophe, if it has the right capabilities.

June 24, 2025

AI Best Practices for Cross-Functional Teams: Getting Legal, Compliance, and Data Science on the Same Page

Read our AI best practices. Learn how to build bridges between legal, compliance & data science so your team can create AI that’s responsible from day one.

June 19, 2025

Generative AI Security: How to Protect Your Organization

Unlock innovation safely with strong Generative AI security. Discover critical risks and proactive mitigation strategies while harnessing GenAI benefits.

June 18, 2025

AI Agents: Navigating the Risks and Why Governance is Non-Negotiable

Discover how AI agents challenge traditional governance. Learn risk strategies for autonomous systems, agent oversight, and multi-agent alignment.

June 17, 2025

7 Common Types of AI Bias and How They Affect Different Industries

Learn how AI bias emerges, impacts different industries, and can be mitigated through responsible AI practices and effective AI risk management.

June 12, 2025

Do You Need an AI Consultant? Signs Your Business Requires Expert Guidance

Does your business need an AI consultant? Learn six signs it's time to bring in expert guidance for AI compliance, governance, and effective implementation.

June 10, 2025

AI in Healthcare Compliance: How to Identify and Manage Risk

Learn how healthcare organizations can identify, assess, and mitigate AI-related risks to ensure regulatory compliance and safeguard patient outcomes.

June 5, 2025

AI Audit Tools and Scenarios for Insurance: Claims, Underwriting, and More

Discover essential AI audit tools, scenarios, and best practices for insurance. Learn how to ensure compliance, fairness & trust in AI-driven operations.

June 4, 2025

The AI Revolution is Here: Investigating Capabilities and Risks

Explore the power and risks of AI agents and agentic AI systems. Learn what they can do, where they fail, and why AI governance must evolve to keep up.

June 3, 2025

Understanding AI Security Tools: Safeguarding Your AI Systems

Learn how AI security tools detect threats, prevent attacks, and ensure model integrity to support responsible AI and effective AI risk management.

May 29, 2025

Responsible AI (RAI) Principles

Read our blog and understand the core principles of Responsible AI! Learn how to implement Responsible AI best practices and respect these AI principles.

May 27, 2025

What Is Responsible AI (RAI)?

Curious to discover what Responsible AI really means, why it matters in 2025 & how your organization can implement it the right way? Read this blog post!

May 22, 2025

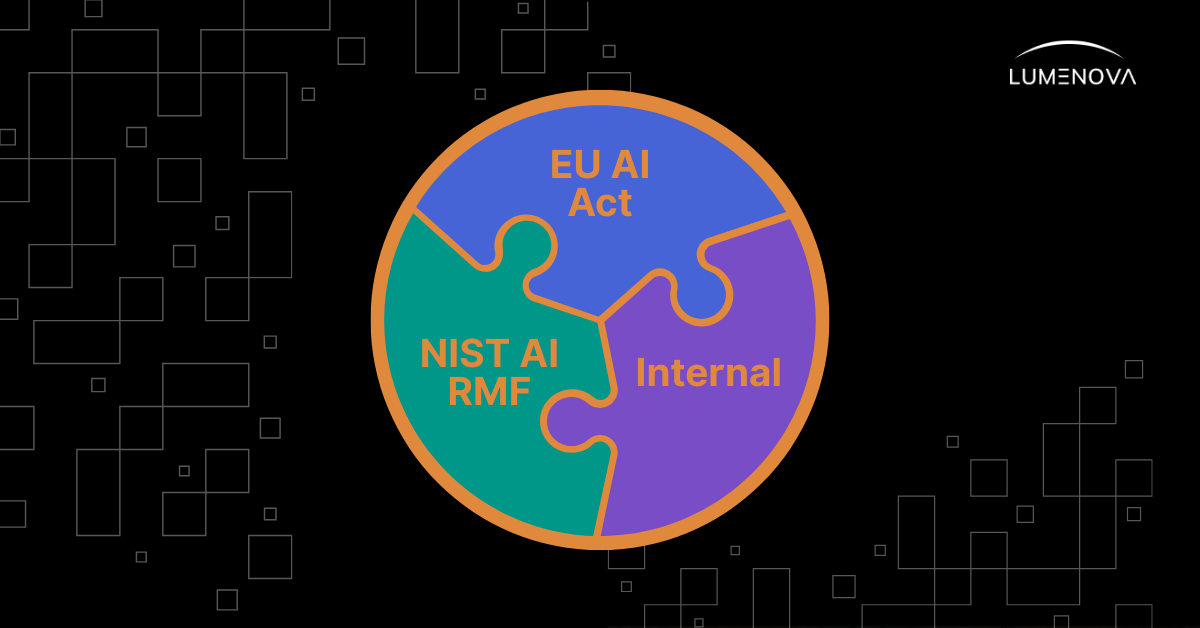

AI Governance Frameworks Explained: Comparing NIST RMF, EU AI Act, and Internal Approaches

Compare NIST AI RMF, EU AI Act, & internal governance. Learn how these frameworks guide organizations in building trustworthy, responsible AI.

May 21, 2025

The AI Revolution is Here: Understanding the Power of AI Agents

Explore the rise of AI agents, how they differ from traditional AI, their use cases & what their deployment means for the future of enterprise AI systems.

May 20, 2025

With Great Efficiency Comes Great Risk: Why AI and Risk Management Go Hand-in-Hand

AI drives efficiency but brings risks like regulation, drift, security threats & bias. Discover why risk management is essential in any AI strategy.

May 15, 2025

Can Your AI Be Hacked? What to Know About AI and Data Security

AI and data security must go hand-in-hand to protect your organization & manage your AI risk. Read about key AI attack vectors & protect your organization!

May 13, 2025

The Pathway to AI Governance

Discover the pathway to effective AI governance. Learn the key steps, best practices, and how to manage AI risk and compliance across your organization.

May 8, 2025

Why Explainable AI in Banking and Finance Is Critical for Compliance

Explainable AI in banking and finance is a key component of regulatory compliance, ensuring fairness, better decision-making, and stakeholder trust.

May 5, 2025

Weighing the Benefits and Risks of Artificial Intelligence in Finance

Explore the benefits and risks of artificial intelligence in finance, with a focus on the U.S. market. Understand the potential impacts of AI technologies.

May 1, 2025

A Closer Look at AI Regulations Shaping the Finance Industry in April 2025

Discover the top AI regulations shaping the finance industry in April 2025. Stay informed on the legal changes you need to know to navigate AI in finance.

April 29, 2025

Does Your AI Monitoring System Follow These Best Practices?

Is your AI monitoring system keeping up? Discover best practices for avoiding drift, ensuring compliance, and protecting performance. Read the full guide.

April 24, 2025

AI Governance Platform vs AI Risk Management Tool: You Need Both

An AI governance platform provides the framework; risk management tools manage threats. Learn why a holistic approach is vital for your organization.

April 22, 2025

How to Audit Your AI Policy for Compliance and Security Risks

Regular audits of your AI policy allow you to prioritize severe AI risks and effectively protect your brand.

April 17, 2025

AI Agent Index: Governance & Business Implications

Read to uncover how the AI Agent Index reveals key business and governance implications of AI agents, insights that redefine risk, compliance, & strategy.

April 15, 2025

What You Should Know: The AI Agent Index

Find out how the AI Agent Index (AIAI) documents AI systems, tracks risks & reveals gaps in safety testing. See why transparency in AI governance matters.

April 10, 2025

Is It Time to Prioritize That AI Risk Assessment?

Understand the urgency of AI risk assessments to protect your organization from regulatory and reputational fallout.

April 8, 2025

How to Build an AI Use Policy to Safeguard Against AI Risk

Discover how to build a strong AI use policy that manages risk, ensures compliance, and supports growth. Learn the steps and best practices. Read more!

April 3, 2025

3 Questions to Ask Before Purchasing an AI Data Governance Solution

Choosing the right AI data governance solution is critical to ensure your AI builds trust instead of destroying it. Ask these 3 questions before investing.

April 1, 2025

The Role of AI Due Diligence in Mergers & Acquisitions: What Investors Need to Know

Learn how to assess AI risks in Mergers & Acquisitions deals (from bias to compliance) and protect your investment with smarter, structured due diligence.

March 27, 2025

Hey MSPs - Do You Offer AI Consulting Services Yet?

As AI adoption grows, MSPs have a major opportunity to offer AI consulting services for their clients. Learn more!

March 25, 2025

How to Make Sure Your AI Monitoring Solution Isn't Holding You Back

Is your AI monitoring slowing you down? Learn to spot inefficiencies, reduce their impact, and boost productivity with Lumenova’s solution. Read more!

March 20, 2025

How to Build an Artificial Intelligence Governance Framework

An artificial intelligence governance framework can help you proactively mitigate AI risk and comply with local regulations.

March 18, 2025

Enterprise AI Governance: Ensuring Trust and Compliance

Discover how enterprise AI governance mitigates risks, ensures compliance, and fosters trust. Learn best practices for regulatory-ready AI governance.

March 13, 2025

3 Hidden Risks of AI for Banks and Insurance Companies

Risks of AI use can be particularly dangerous for regulated industries like banking & insurance. Be sure to protect your company against these 3 AI risks.

March 11, 2025

How to Choose the Best AI Governance Software

Read our complete guide to selecting AI governance software that ensures compliance, scalability & security while mitigating risks for your organization.

March 4, 2025

AI in the Insurance Industry: What to Know About Managing AI Risk

AI is helping insurers to bolster their bottom line, but it also comes with risk. Learn how to manage risk when implementing AI in the insurance industry.

February 25, 2025

Model Drift: Detecting, Preventing and Managing Model Drift

AI model drift affects accuracy over time, leading to unreliable predictions. Discover how to spot, prevent, and fix ML data drift for optimal performance.

February 18, 2025

Model Drift: Types, Causes and Early Detection

Understand what model drift is & how it impacts AI performance. AI model drift can reduce accuracy & reliability. Learn how to detect ML data drift early.

February 11, 2025

CAG vs RAG: Which One Drives Better AI Performance?

Explore the differences between CAG and RAG. Find the best AI approach for your company's needs: speed, simplicity, or adaptability. Learn more today!

February 4, 2025

CAG: What Is Cache-Augmented Generation and How to Use It

Discover what Cache-Augmented Generation (CAG) is & how it boosts AI speed, reliability, and security. Learn how it can transform your business. Read now!

January 28, 2025

Texas Responsible AI Governance Act: Analysis

Discover how TRAIGA could redefine AI governance with bold policies, key strengths, and critical gaps shaping the future of innovation and compliance.

January 23, 2025

Texas Responsible AI Governance Act: Detailed Breakdown

Discover how Texas’ Responsible AI Act (TRAIGA or HB 1709) tackles data misuse and bias with strict rules, transparency and penalties. Learn more now!

January 21, 2025

Black Swan Events in AI: Preparing for the Unknown

Black Swan ML: Prepare for AI-driven disruptions with strategies to build resilience, anticipate risks & turn black swan events into opportunities for innovation.

January 16, 2025

The Path to AGI: Timeline Considerations and Impacts

Artificial General Intelligence is evolving. How soon before general AI reshapes society? Explore AGI timelines, deployment factors, and potential impacts.

January 14, 2025

Black Swan Events in AI: Understanding the Unpredictable

Black Swan ML: Read our blog post to discover the definition of black swan events & learn how to prepare, adapt, and thrive amidst any unexpected black swan event.

January 9, 2025

The Path to AGI: How Do We Know When We’re There?

Artificial General Intelligence: How will we know when we’ve reached AGI? Explore key frameworks, thought experiments & challenges in measuring general AI.

January 7, 2025

AI in Finance: The Rise and Risks of AI Washing

AI in Finance: Uncover the risks of AI washing in finance, misleading AI claims that erode trust. Learn to spot red flags, & ensure AI transparency.

December 20, 2024

The Path to AGI: Mapping the Territory

Artificial General Intelligence: Read about AGI meaning & general AI info. Discover AGI's potential and challenges in reshaping the future of AI.

December 17, 2024

AI in Finance: The Promise and Risks of RAG

AI in Finance: Learn how to safely implement RAG in finance by ensuring data privacy, compliance, and accurate AI outputs for better decision-making.

December 12, 2024

Multi-Agent Systems: Cooperation and Competition

Multi-Agent Systems: Explore the dynamics of cooperation and competition in multi-agent systems. Discover how AI agents balance cooperation & competition.

December 10, 2024

AI in Healthcare: Redefining Consumer-Centric Care

Artificial Intelligence in Healthcare: Learn about the use of AI in healthcare. Discover how AI in medicine and healthcare is transforming consumer care!

December 5, 2024

AI Policy Predictions for 2025

Artificial Intelligence Policy: Discover AI governance policy insights and frameworks for responsible AI use. Learn how to stay compliant with Lumenova AI!

November 28, 2024

California AI Regulation: Additional Updates

California AI Regulation: From transparency (AB 2013) to accountability (AB 2885) and AI literacy (AB 2876), explore key insights and impacts. Read more!

November 26, 2024

Making Generative AI Models Work: Cost Management in GenAI

Machine Learning models: Explore strategies to manage costs, from cloud optimization to scaling, ensuring innovation aligns with sustainable budgeting!

November 21, 2024

California AI Regulation: Updates

California AI Regulation: Discover California’s proactive steps in AI governance and their impacts on transparency, accountability, and public safety.

November 19, 2024

Mastering Generative AI Models: Trust & Transparency

Machine Learning models: Explore building trust in generative AI with techniques for generative model explainability and managing hallucinations. Learn how!

November 14, 2024

Driving Responsible AI Practices: AI Literacy at Work

Discover how AI literacy empowers organizations in AI governance, risk management, and innovation; plus bold predictions on its future in responsible AI.

November 7, 2024

Managing AI Risks Responsibly: Why the Key is AI Literacy

AI literacy can mitigate the dangers of artificial intelligence. Explore how to manage the risks of AI and ensure safe, responsible AI adoption. Read more!

November 5, 2024

Mastering Generative AI Models: Tackling Core Challenges

Machine Learning models: Learn key challenges and solutions in data privacy, ethical & societal implications to unlock Gen AI's full potential. Read more!

October 31, 2024

Generative AI: Human’s Role in 2024 and Beyond

Explore the role of humans in generative AI, human and AI collaboration, and how generative AI tools reshape skills, ethics, and the future of work.

October 24, 2024

Generative AI: Predictions for 2024 and Beyond

Generative AI is transforming industries. Discover key predictions for 2024 and beyond to stay ahead of trends. Read more & drive future innovation!

October 17, 2024

Generative AI: Creating Value in 2024 and Beyond

Generative AI value is reshaping industries with real world applications. Discover strategies for leveraging AI to drive business innovation. Explore more!

October 3, 2024

AI Innovation and Awareness: A Growing Disconnect

Explore how the gap between AI innovation and awareness can lead to risks, and discover ways to bridge this divide for a responsible AI-driven future.

September 30, 2024

Governing Generative AI in Finance: A Balanced Approach

Gen AI in finance: Discover how generative AI in finance enhances forecasts, automates reports, streamlines contract management & detects fraud. Read more!

September 26, 2024

AI Agents: Future Evolution

Discover the captivating future of AI agents & the impact their evolution could have across industries. Prepare for the transformative possibilities ahead.

September 19, 2024

AI Agents: Potential Risks

Uncover the risks tied to AI agents, from decision-making flaws to misuse. Learn strategies to manage these challenges effectively. Explore today!

September 17, 2024

The Strategic Necessity of Human Oversight in AI Systems

Explore the critical role of human oversight in AI systems. Learn how effective oversight prevents biases & ensures AI aligns with ethical standards.

September 12, 2024

AI Agents: AI Agents at Work

AI Agents at work: an exploration of several use cases in which AI agents can prove useful in real-world business settings.

September 10, 2024

AI Accountability: Stakeholders in Responsible AI Practices

Learn more about the role of responsible AI team collaboration and its influence on AI accountability.

September 6, 2024

Lumenova AI Achieves ISO/IEC 27001:2022 Certification

Lumenova AI achieves ISO/IEC 27001:2022 certification, reinforcing our commitment to data security. Learn more about this milestone on our blog.

September 5, 2024

AI Agents: Introduction to Agentic AI Models

AI Agents: Dive deep into AI agents and agentic AI models with Lumenova AI. Learn how they advance governance, automation, and ethical AI. Read more!

August 29, 2024

Existential and Systemic AI Risks: Prevention and Evaluation

Our latest blog explores high-level tactics for preventing and evaluating systemic and existential AI risks. Read more!

August 27, 2024

Overreliance on AI: Addressing Automation Bias Today

Prevent overreliance on AI and combat automation bias in your organization. Learn actionable strategies to strengthen decision-making processes now!

August 22, 2024

SOC 2 Type II successfully completed, for the second year

Request a demo to discover how Lumenova AI’s enhanced security & compliance measures can benefit your organization. Let's build a more secure AI future!

August 15, 2024

Existential and Systemic AI Risks: Existential Risks

Discover AI-driven existential risks, including threats from malicious AI actors, AI agents, and loss of control scenarios. Read more!

August 13, 2024

Existential and Systemic AI Risks: Systemic Risks

Explore the systemic risks AI inspires across societal, organizational, and environmental domains. Join Lumenova in exploring these complex scenarios.

August 8, 2024

Existential and Systemic AI Risks: A Brief Introduction

Explore the critical issues surrounding existential and systemic AI risks in our brief introduction. Join us now, as we delve into these complex scenarios.

August 1, 2024

What is Retrieval-Augmented Generation (RAG)?

Discover how Retrieval-Augmented Generation (RAG) can enhance Large Language Models and add real value to your business.

July 23, 2024

Fairness and Bias in Machine Learning: Mitigation Strategies

Reducing bias and ensuring fairness in machine learning can lead to equitable outcomes where technology benefits everyone.

July 16, 2024

AI Risk Management: Ensuring Data Privacy & Security

Discover proven strategies for AI risk management focusing on data privacy, security, and compliance. Protect your data and stay proactive today!

July 11, 2024

Connecticut Senate Bill 2: An Act Concerning AI

Connecticut Senate Bill 2: Learn how this AI regulation ensures transparency, accountability, and consumer protection in high-risk AI deployment.

July 5, 2024

What Might Your AI Risk Management Radar Miss?

Explore hidden AI risks and proactive strategies for comprehensive AI risk management. Stay ahead with insights on safeguarding your AI systems.

July 2, 2024

Adversarial Attacks in ML: Detection & Defense Strategies

Learn how adversarial machine learning exploits vulnerabilities. Explore seven cutting-edge defensive strategies for mitigating AML-driven threats.

June 27, 2024

California Senate Bill 1047

Understand the regulatory landscape for Frontier AI models under California’s Senate Bill 1047. Learn how these regulations promote ethical AI practices.

June 25, 2024

EU AI Act Compliance with Lumenova AI Risk Management Tools

Achieve EU AI Act compliance and manage risks with certainty using Lumenova AI’s risk management platform for transparent and responsible AI deployment.

June 20, 2024

AI Policy Analysis: European Union vs. United States

Discover the policy comparison between the European Union and US. Find out how each entity's policies impact AI innovation & governance on a global scale.

June 18, 2024

Managing AI Generated Content: Legal & Ethical Complexities

Learn what AI-generated content is & why AI-generated content can harm your organization! Find out if you can copyright AI-generated content. Read more.

June 13, 2024

The Intersection Between AI Ethics and AI Governance

Delve into the fusion of AI ethics and governance. Understand the necessity of an 'ethics-first' approach for robust AI systems. Read more now!

June 6, 2024

Perspectives on AI Governance: Do's and Don'ts

Explore essential do's and don'ts for building effective AI governance practices. Learn how to proactively manage AI risks and unlock its potential.

June 4, 2024

Understand Your AI: Compliance & Regulatory Insights for AI in Banking

Our Responsible AI platform helps banks improve efficiency and enhance customer experience. Explore how Responsible AI can transform financial services today.

May 30, 2024

Perspectives on AI Governance: AI Governance Frameworks

Find out how to implement AI Governance Frameworks for compliance and success. Stay informed with the latest insights to future-proof your business!

May 28, 2024

AI Risk Management: Transparency & Accountability

Curious to learn more about AI risk management? Stay with us and discover AI and machine learning challenges & AI models and data risk management.

May 23, 2024

Colorado Senate Bill 24-205: Algorithmic Discrimination Protection

Discover Colorado’s Senate Bill 24-205 promoting fairness in AI. Learn how this regulation ensures equity and what you can do to meet compliance today.

May 21, 2024

Harnessing Trust with Responsible Generative AI

Explore the responsible AI definition with us. Understand responsible AI use and why responsible AI practices are important to an organization.

May 16, 2024

NYC Local Law 144 and Bias Audit Regulation

Dive into our blog post to learn everything you need to know about NYC Local Law 144 and its impact on algorithmic hiring.

May 15, 2024

Colorado 10-1-1 - 1st milestone: June 1, 2024

Learn about Colorado’s new regulation mandating insurers to prevent unfair discrimination with ECDIS, algorithms, and predictive models. Stay compliant!

May 10, 2024

Analysis: Canada's Artificial Intelligence and Data Act

Dive into our blog post to learn more about Canada's AI and Data Act, including the extent to which it aligns with other major AI legislation.

May 7, 2024

Benefits of a Responsible AI Platform for Governance

Read this blog post to find out more about the benefits of using a Responsible AI platform to facilitate AI Governance.

May 2, 2024

Decoding the EU AI Act: Future Provisions

Read this blog post to learn how the AI Act will be applied once it takes effect, and to gain exclusive insights on possible future provisions.

April 30, 2024

The AI Revolution in Insurance: Risks & Solutions

AI is transforming insurance, streamlining claims, personalizing policies, detecting fraud, and enhancing customer experiences.

April 25, 2024

Canada’s AI and Data Act

Dive into our blog post to learn more about Canada's AI and Data Act, including key actors, targeted technologies and core objectives.

April 23, 2024

Risks of Skimping on AI-Specific Risk Management

Did you know that neglecting AI-specific risk management could spell trouble for your organization? Learn why in our latest blog post.

April 19, 2024

Decoding the EU AI Act: Influence on Market Dynamics

Learn more about the EU AI Act and how its provisions are set to generate widespread effects throughout the EU AI market in our latest blog post.

April 12, 2024

Choosing a Responsible AI Platform

Responsible AI platforms offer comprehensive assessments and resilient strategies to align AI technology with legal standards and ethical principles.

April 11, 2024

California's Generative AI Procurement Guidelines

Learn about California’s Generative AI procurement guidelines for public sectors. Stay informed on AI regulatory changes shaping industry practices now.

April 10, 2024

Lumenova AI: Now Featured on GOV.UK

We're thrilled to announce a significant milestone in our journey – Lumenova AI has been included in GOV.UK for AI Assurance Techniques.

April 4, 2024

Decoding the EU AI Act: Standardizing AI Legislation

Learn more about how the EU AI Act is laying the groundwork for standardized AI regulation in our latest blog post.

April 2, 2024

2024: The Year of Responsible Generative AI

In 2024, new Generative AI innovations will shape industries, emphasizing responsibility and ethical use. Stay updated with Lumenova AI.

March 29, 2024

What You Should Know About ISO 42001

Learn about ISO 42001 and its importance in standardizing AI risk management practices across industries. Discover more in our comprehensive blog post.

March 26, 2024

4 Types of AI Cyberattacks Identified by NIST

NIST identifies four major types of cyberattacks and offers mitigation strategies to protect, detect, respond and recover.

March 14, 2024

Decoding the EU AI Act: Transparency and Governance

Learn more about the transparency obligations, governance roles and structures, as well as post-market monitoring procedures proposed by the EU AI Act.

March 11, 2024

Trustworthy AI: Connecting Innovation and Integrity

Our Responsible AI platform offers comprehensive assessments and resilient strategies to achieve Trustworthy AI through innovation and integrity.

March 7, 2024

NIST's Cybersecurity Framework 2.0

Learn how NIST’s AI Risk Management Framework supports organizations in identifying and addressing AI-related risks while ensuring compliance.

March 4, 2024

Decoding the EU AI Act: Regulatory Sandboxes and GPAI Systems

Learn about the EU AI Act’s regulatory sandboxes and AI law, promoting innovation and safety in AI applications. Explore more on Lumenova’s blog.

February 26, 2024

How to Get Started with Generative AI Governance

Learn the basics of Generative AI governance and how to implement it effectively. Get started with insights & strategies from Lumenova AI.

February 22, 2024

Regulatory Insights for AI in Insurance

Dive into our latest blog post to find out more about the latest regulatory insights for AI in the insurance industry.

February 20, 2024

AI Opportunities and Risks in Insurance

Dive into the opportunities and risks of using AI in insurance. Explore how AI can transform the industry with Lumenova AI insights and updates.

February 16, 2024

Decoding the EU AI Act: Scope and Impact

Read our latest blog post to find out more about the regulatory scope, purpose, and impact of the EU AI Act.

February 9, 2024

Lumenova AI Joins NIST's AI Safety Institute Consortium

Lumenova AI joins NIST AISIC, supporting initiatives for secure and ethical AI standards and practices. Learn more with Lumenova AI updates and insights.

February 8, 2024

Progress of AI Policy in 2023 and Predictions for 2024

2023 was an exciting and ambitious year for AI policymaking. Find out more about the progress of AI policy in 2023 and our predictions for 2024.

January 29, 2024

NIST's AI Risk Management Framework

Explore the core elements of effective AI risk management. Understand how to reduce AI-related risks & ensure safe implementation across your organization.

January 23, 2024

Responsible AI Governance: Debunking Myths

Read our blog post to uncover the truth behind Responsible AI Governance. Explore common myths, gain insights, and shape an ethical AI strategy for 2024.

January 19, 2024

AI in 2023: A Year in Review

As we gear up to face the challenges and opportunities of 2024, let's reflect on the most noteworthy AI advancements of 2023.

January 17, 2024

California AI Regulation: ADMT & Gen AI

Dive into our latest blog post to explore the key changes in the AI landscape driven by recent California state regulations.

January 16, 2024

Managing Risks of LLMs in Finance

Learn how to manage the risks associated with Large Language Models (LLMs) in financial services. Uncover strategies through Lumenova AI insights.

January 12, 2024

Case Study: Leveraging Generative AI in Banking

This case study examines how a retail bank can safely scale generative AI initiatives by implementing AI governance platform Lumenova AI.

January 10, 2024

Colorado Senate Bill 21-169: AI Life Insurance Regulation

Read more about Colorado's new AI regulation in our latest blog post. Stay informed on Responsible AI practices in the evolving landscape of AI Governance.

December 20, 2023

Navigating the AI safety landscape in Generative AI

Understand the evolving AI safety landscape for generative AI, highlighting best practices and key strategies. Read more on Lumenova’s blog.

December 11, 2023

Landmark Agreement on EU AI Act

Read our comprehensive guide to the EU AI Act, detailing regulatory requirements and impacts on AI development. Explore more on our blog.

December 1, 2023

Responsible Generative AI Principles

In this article, we explore key principles inspired by various industry-leading approaches to building responsible generative AI solutions.

November 28, 2023

White House AI Executive Order

Dive into President Biden’s AI executive order. Learn its strategic steps and impact on building a secure, ethical AI future for all. Read more now!

November 17, 2023

Transparency in AI Companies: Stanford Study

A new Stanford study reveals the lack of transparency from major AI companies, highlighting the urgent need for improved accountability.

November 10, 2023

AI Opportunities and Risks in Healthcare

Explore the future of AI in healthcare, focusing on innovations and applications transforming medical services. Discover more about medical AI in our post.

October 18, 2023

Joining Responsible AI Innovators in EAIDB

Lumenova AI joins EAIDB, reinforcing our commitment to responsible AI innovation and ethical AI practices. Read more about this achievement.

October 3, 2023

Lumenova AI: A Proud Addition to the OECD.AI Database

We're thrilled to announce a significant milestone in our journey – Lumenova AI has been included in OECD.AI’s Trustworthy AI Tools.

September 15, 2023

Ethics of Generative AI in Finance

Discover key ethical considerations for implementing generative AI in finance. Learn more about AI ethics & AI for financial services in our latest blog.

August 15, 2023

Lumenova AI Is Now SOC 2 Type II Compliant

We’re proud to announce our SOC 2 Type II attestation, setting new benchmarks in Responsible AI. Learn how this strengthens our commitment to security!

July 6, 2023

How Businesses Can Prepare for the EU AI Act

Understanding the EU AI Act: Read our blog post for insights and best practices regarding Europe's AI Act.

June 29, 2023

Europe's AI Act: Key Takeaways

Discover the essential takeaways from the European Union's AI Act. Read our blog post to find out more.

June 10, 2023

What Businesses Need to Know About the EU AI Act

Navigate the EU AI Act with confidence. Learn how AI regulations impact businesses, compliance strategies, and the future of responsible AI. Read more!

May 10, 2023

Putting Generative AI to Work Responsibly

Leverage generative AI responsibly! Discover best practices for ethical AI use, risk mitigation, and compliance. Read our guide to responsible AI adoption.

May 2, 2023

Model Building with ChatGPT: Myth or Reality?

Can ChatGPT build machine learning models? We test its capabilities, limitations, and impact. Discover the truth behind AI-powered model building!

April 12, 2023

The Risks & Rewards of Generative AI

Generative AI is reshaping industries, but at what cost? Explore the risks, rewards & key considerations for ethical AI adoption. Read the full insights!

March 2, 2023

Adversarial Attacks vs Counterfactual Explanations

How resilient is your ML model? We tested adversarial attacks vs. counterfactual explanations to uncover vulnerabilities & improve AI robustness. Read now!

February 27, 2023

Counterfactual Explanations in Machine Learning

Counterfactual explanations: AI's black box nature can hinder trust. Learn how counterfactual explanations in ML boost transparency, trust and compliance.

February 22, 2023

Types of Adversarial Attacks and How To Overcome Them

Explore types of adversarial attacks in machine learning, including data poisoning, evasion, and model extraction, plus strategies to defend AI models.

February 3, 2023

NIST Releases New AI Risk Management Framework

NIST's AI Risk Management Framework (NIST RMF) sets the standard for AI governance. Discover how the RMF framework helps manage risks & ensure compliance.

January 26, 2023

Understanding Adversarial Attacks in Machine Learning

Adversarial machine learning: Explore adversarial examples that reveal AI weaknesses. Learn how data poisoning works & why securing ML models is critical.

September 21, 2022

Why Business Leaders Should Care About AI Transparency

AI transparency matters. Discover how transparency in AI builds trust, boosts performance, enhances security, and ensures compliance with regulations.

September 14, 2022

Why AI Transparency Is Essential to Building Trust

Discover how AI transparency enhances trust, fairness & compliance. Learn why transparent AI is key to ethical AI adoption & performance. Read more!

September 7, 2022

Bias and Unfairness in Machine Learning Models

Explore AI bias & fairness in machine learning. Learn real-world cases, de-biasing techniques & ethical AI solutions. Read more about algorithmic bias!

August 31, 2022

Group vs. Individual Fairness in AI

Explore group and individual fairness metrics in machine learning research. Learn how to balance algorithmic fairness in AI systems. Read more now!

July 29, 2022

The Nuances of Fairness in Machine Learning

AI fairness is key to ethical AI. Learn how to tackle AI bias, ensure fairness in AI, and build transparent, responsible, and trustworthy AI models.

July 15, 2022

The Benefits of Responsible AI for Today's Businesses

Discover how Responsible AI ensures ethical, transparent, and fair AI systems. Learn the importance of the responsible use of AI for businesses.

July 1, 2022

Explainable AI in Machine Learning

Explainable AI enhances trust in AI models. Learn how to explain artificial intelligence decisions with XAI techniques for transparency & compliance.