June 24, 2025

AI Best Practices for Cross-Functional Teams: Getting Legal, Compliance, and Data Science on the Same Page


AI best practices start with people, not just technology. Most problems show up when teams aren’t aligned. That’s why strong AI practices should go beyond code and data; they depend on how teams work together.

We’ve worked with clients in finance, tech, and consulting to help steer their AI systems back on course. And what did we learn? The real risks aren’t always embedded in the models themselves, but in the disconnects between departments.

You might have legal asking the right questions about privacy and liability. Compliance is scanning for gaps in audit trails or fairness. And data science is driving full speed toward innovation. But if they’re not aligned early (and often), your AI gets stuck, becomes risky, or ends up unusable.

That disconnect is what we call a silo. And silos can quietly sabotage even the smartest ideas.

At Lumenova AI, we’ve seen how breaking down these walls leads to better, faster, safer outcomes. In this guide, we’ll unpack what silos are, why they form, and how to fix them using proven AI best practices. We’ll give you real tips that work inside high-stakes environments.

Let’s start with the root cause: silos.

What Are Silos in AI Projects?

The definition and danger of silos

- A silo is when teams operate in isolation. In AI projects, it means legal, compliance, and data science are each working hard (but not together). And in a domain where decisions about fairness, risk, and explainability overlap constantly, isolation causes breakdowns.

Why is AI uniquely vulnerable to siloed thinking?

- AI touches on rules (legal), process (compliance), and code (data science) all at once. That overlap means you can’t afford to treat those responsibilities as separate buckets. If a model is biased, is it a technical issue? A compliance issue? A legal risk? Frequently, the answer is yes (to all of them). Which is why working in silos doesn’t work.

How do silos lead to delays, risk, and lost trust?

When teams aren’t aligned, the same problems tend to recur:

- Legal gets involved too late

- Compliance misses critical context

- Data science faces sudden blockers after weeks of work

The cost? Delays, rework, regulatory exposure, and ultimately, less trust from leadership and users alike.

The View from Each Team

Every team in an AI initiative has a different focus, and none of them are wrong. The challenge is that these priorities often clash when they aren’t aligned early. To break silos, we first need to understand what’s happening inside each one.

Legal: Focused on risk, liability, and long-term protection

Legal teams are the company’s internal defense system. Their job is to assess and reduce exposure, whether that’s to lawsuits, regulatory violations, or reputational fallout. In the context of AI, this means asking critical (and often tough) questions like:

- Is this AI model compliant with evolving laws like GDPR, CCPA, or the EU AI Act?

- Are we using any sensitive personal data? If so, are we doing it with proper consent?

- Can this system’s decisions be explained in plain language if challenged in court?

- If something goes wrong (bias, discrimination, data misuse), who’s liable?

Legal isn’t just signing off on contracts. They’re anticipating risks that may not surface until months or years later. The challenge? Legal often gets brought in at the end, when models are already built. At that point, making changes is harder, costlier, and sometimes impossible.

From our work, we’ve seen that when legal is part of the conversation from the beginning, they shift from gatekeepers to strategic partners. They’re not there to say “no”. They’re there to help you build an AI system that can stand up to public, legal, and regulatory scrutiny without panic.

Our advice: Invite legal counsel to participate in early model scoping and policy development. This proactive involvement reduces rework and accelerates project timelines.

Compliance: Guarding process, structure, and operational integrity

Compliance teams are the protectors of the way things are done. They’re less focused on the “what” of the AI model and more concerned with the “how” behind it:

- Was the model trained using approved datasets?

- Are we logging key decisions and changes for auditability?

- Have we evaluated bias and fairness using standard metrics?

- Can we trace every change made to the algorithm over time?
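To make the "standard metrics" question concrete, here is a minimal sketch of one widely used fairness check, the demographic parity difference (the gap between the highest and lowest rate of positive predictions across groups). The function names, the toy predictions, and the group labels are all illustrative assumptions; which metric and threshold your organization adopts is something legal, compliance, and data science would need to agree on together.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved, 0 = declined.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(preds, groups)
gap = demographic_parity_difference(preds, groups)
print(rates, gap)
```

A single number like this won't settle whether a model is fair, but it gives all three teams the same figure to discuss.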

Compliance isn’t trying to shut projects down; it’s trying to make sure that whatever you build can survive an audit. They’re your internal regulators, making sure that your house is in order before external regulators or the media come knocking.

Compliance often gets looped in too late, usually after the model is already built and ready to go live. That’s when they’re asked to check the documentation. And suddenly, problems show up. There’s no record of how decisions were made. No one ran bias tests. Changes weren’t tracked. There’s no clear trail showing who approved what.

Our advice: Integrate compliance checkpoints into each AI sprint or development milestone. Use automation to track approvals, validations, and test results across projects.
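As a rough illustration of what automated tracking could look like, the sketch below keeps an append-only log of approvals, validations, and test results, and chains each entry to the previous one with a SHA-256 hash so later edits are detectable. The `AuditLog` class, its field names, and the example model name are hypothetical; this is a simplified sketch under those assumptions, not a description of any particular product.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body):
    """Hash an entry's content deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, model, event, actor, when, details):
        """Append an entry linked to the previous entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"model": model, "event": event, "actor": actor,
                "when": when, "details": details, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("credit-model-v2", "bias_test", "compliance", "2025-06-02", {"result": "pass"})
log.record("credit-model-v2", "approval", "legal", "2025-06-03", {"decision": "approved"})
print(log.verify())
```

Recording one entry per sprint checkpoint means the trail of who approved what already exists by the time an audit begins.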

Data Science: Building, optimizing, and pushing the envelope

Data scientists (including Machine Learning Engineers, AI Product Leads, Data Analysts, AI Research Scientists, NLP Engineers, AI Ethics Officers, Data Visualization Specialists, and Model Owners) are the engine of AI progress. Their focus is innovation, speed, and performance. They care about questions like:

- Can we build a better model?

- Is this algorithm delivering accurate predictions?

- Can we reduce latency or improve real-time performance?

- How fast can we go from experiment to production?

This mindset is critical, and it’s how companies stay competitive. But it can also clash with the slower, documentation-heavy processes that legal and compliance require. It’s not that data scientists don’t care about ethics or fairness. It’s that their incentives are usually tied to performance, not governance. And if they aren’t aware of policy requirements upfront, they may unknowingly build systems that are brilliant technically (but noncompliant legally).

We frequently encounter scenarios where data science teams deploy high-performing models (such as deep neural networks or other black-box architectures) without accounting for explainability requirements. While the model may meet technical benchmarks, it fails to satisfy regulatory or internal standards for transparency. It works great, but no one can explain how. That becomes a blocker.

Our advice: Collaborate with legal, compliance, and data science leaders to co-develop model development checklists that integrate technical performance criteria alongside policy, regulatory, and ethical requirements. Equip teams with governance-enabled tools that embed these checks into their existing workflows (ensuring compliance is continuous, not disruptive).

At Lumenova AI, we help data science teams work smarter by embedding lightweight, governance-aware tooling into their workflows.
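One way to picture a co-developed checklist is as a single list in which technical and policy criteria sit side by side, each with an owning team. The sketch below is a hypothetical example: the `ChecklistItem` structure and the four sample criteria are assumptions for illustration, and a real checklist would be written by your own legal, compliance, and data science leads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChecklistItem:
    name: str
    owner: str    # team responsible for signing off on this item
    passed: bool


def release_blockers(items):
    """List every unmet criterion, labeled with the team that owns it."""
    return [f"{item.owner}: {item.name}" for item in items if not item.passed]


# Hypothetical checklist mixing performance and governance criteria.
checklist = [
    ChecklistItem("Accuracy meets agreed benchmark", "data science", True),
    ChecklistItem("Explanation available for individual decisions", "data science", False),
    ChecklistItem("Bias evaluation documented", "compliance", True),
    ChecklistItem("Lawful basis for personal data confirmed", "legal", True),
]

blockers = release_blockers(checklist)
ready = not blockers
print(ready, blockers)
```

In this example the model meets its accuracy benchmark but is still not ready, which is exactly the explainability gap described above, surfaced before launch rather than after.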

Why Shared Ownership Is the Foundation

From isolated input to collective impact

When AI projects are built through hand-offs, no one owns the full outcome. That’s dangerous. Shared ownership flips the script. Each team sees their role not just in isolation, but in support of a shared mission.

Making AI a team sport

When teams build together:

- Legal asks better questions

- Compliance spots risks sooner

- Data scientists make smarter design choices

Language Matters: Building a Shared Vocabulary

Words can move a project forward (or stop it in its tracks). When cross-functional teams use the same word to mean different things, misalignment creeps in. That’s especially true in AI. Terms like bias, explainability, transparency, and model drift may seem straightforward, but ask legal, compliance, and data science to define them, and you’ll likely get three different answers.

This isn’t a minor issue. It’s often the root of deeper conflict: delays, miscommunication, and models that don’t meet expectations because no one fully agreed on what those expectations were.

If you want smoother collaboration, start by aligning language.

Don’t assume shared understanding (create it)

One of the simplest but most powerful steps your team can take is to agree on key terms before the technical work begins. That might mean gathering everyone (legal, compliance, data science) in one meeting, and walking through what your organization means when it says:

- “Auditable”

- “Responsible AI”

- “Consent”

- “High-risk model”

- “Data minimization”

- “Model drift”

It doesn’t take long, but it changes everything.

Create a living AI glossary (or use resources that already exist)

To help with this, we’ve published a general-purpose AI governance glossary on our website, a reference resource designed to support teams having exactly these conversations.

Whether you’re launching a new model or just updating internal policies, it’s a great place to start. Use it to check your definitions, align your teams, or spark that first cross-functional meeting.

Language shapes how your teams think and how they act. Don’t leave it to interpretation. Define it, together.

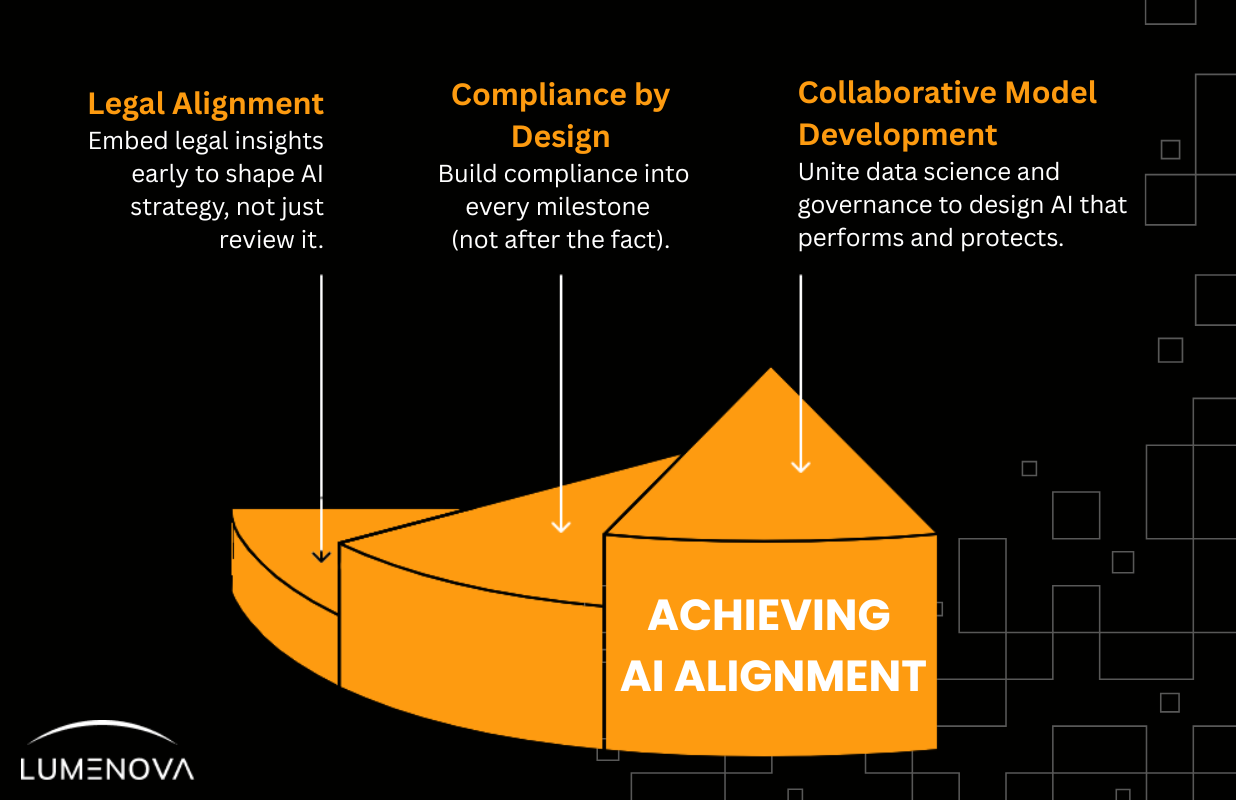

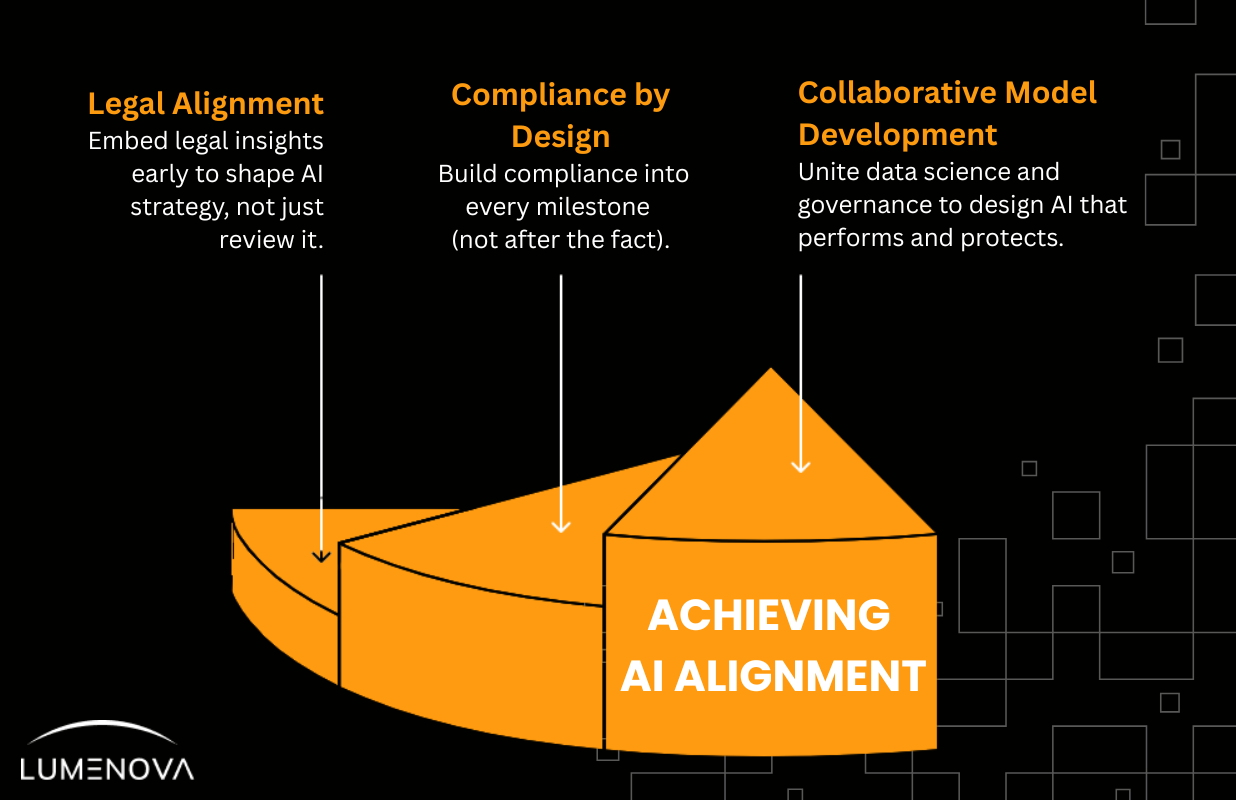

4 AI Best Practices for Cross-Functional Teams

1. Co-write governance plans

When governance requirements are written together and built into the app, they are easier to follow: no crucial detail gets missed, and no one wastes time searching for requirements.

That means:

- Creating approval workflows directly in the app

- Writing checklists as a team and embedding them in processes

- Designing what model validation should look like

When everyone helps write the plan, everyone buys into it.

2. Check in often – in the app (even when things seem fine)

Don’t wait for problems to check in. Build a habit of regular, lightweight touchpoints right inside the Responsible AI (RAI) platform:

- Drop quick updates where the work is happening

- Leave comments to surface blockers or decisions

- Review progress together (briefly and often)

These moments don’t need to be formal or scheduled. What matters is staying visible and connected as work evolves.

Set up cross-functional training sessions

Organize regular workshops where legal, compliance, and data science teams take turns educating one another on the core principles, challenges, and priorities of their respective fields. Fostering this kind of mutual understanding not only strengthens collaboration across departments but also helps preempt misunderstandings and streamline joint decision-making.

3. Create shared goals and success metrics

Align on outcomes like:

- “How many models passed full audit review?”

- “What’s our model documentation score?”

- “How fast did we respond to flagged risks?”

Use KPIs that matter to more than one team.
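The three example questions above can each be turned into a number that all teams track. Here is a minimal sketch, assuming hypothetical record formats: a list of models with audit and documentation fields, and a list of flagged risks with timestamps. The field names and the way the documentation score is defined (completed artifacts divided by required artifacts) are assumptions, not an established standard.

```python
from datetime import datetime
from statistics import mean

# Hypothetical records; in practice these would come from your governance tooling.
models = [
    {"name": "model-a", "audit_passed": True, "docs_complete": 9, "docs_required": 10},
    {"name": "model-b", "audit_passed": False, "docs_complete": 6, "docs_required": 10},
]
flagged_risks = [
    {"flagged": datetime(2025, 6, 2, 9, 0), "responded": datetime(2025, 6, 2, 15, 0)},
    {"flagged": datetime(2025, 6, 3, 9, 0), "responded": datetime(2025, 6, 4, 9, 0)},
]


def audit_pass_rate(models):
    """Share of models that passed full audit review."""
    return sum(m["audit_passed"] for m in models) / len(models)


def documentation_score(models):
    """Completed documentation artifacts as a share of those required."""
    complete = sum(m["docs_complete"] for m in models)
    required = sum(m["docs_required"] for m in models)
    return complete / required


def mean_response_hours(risks):
    """Average time, in hours, between a risk being flagged and a response."""
    return mean((r["responded"] - r["flagged"]).total_seconds() / 3600 for r in risks)


print(audit_pass_rate(models), documentation_score(models), mean_response_hours(flagged_risks))
```

Because each figure depends on work from more than one team, none of them can be improved by a single department acting alone.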

4. Keep your docs in one spot

Use a single platform or folder where everything lives:

- Risk assessments

- Model testing reports

- Compliance notes

- Legal feedback

This reduces confusion and helps when audits or questions come up later. On top of that shared space, we suggest building a simple dashboard that shows:

- What models are being worked on

- What stage they’re in

- What risks have been flagged

- Who’s reviewing what

This stops people from being surprised. It also helps senior leadership get visibility without micromanaging.
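A dashboard like this does not need to be elaborate. The sketch below shows the underlying idea with a small in-memory list: one record per model, holding its stage, open risks, and current reviewer. The model names, stages, and field names are hypothetical placeholders.

```python
from collections import Counter

# Hypothetical portfolio; each record answers the four dashboard questions.
portfolio = [
    {"model": "credit-scoring", "stage": "validation",
     "open_risks": ["missing bias test"], "reviewer": "compliance"},
    {"model": "churn-forecast", "stage": "development",
     "open_risks": [], "reviewer": "data science"},
    {"model": "claims-triage", "stage": "validation",
     "open_risks": ["consent basis unclear", "no explainability report"], "reviewer": "legal"},
]


def dashboard_rows(portfolio):
    """One plain-text line per model: name, stage, open risk count, reviewer."""
    return [
        f"{m['model']:<16} {m['stage']:<12} "
        f"{len(m['open_risks'])} open risk(s)   reviewer: {m['reviewer']}"
        for m in portfolio
    ]


def stage_counts(portfolio):
    """How many models sit in each stage."""
    return Counter(m["stage"] for m in portfolio)


def models_needing_attention(portfolio):
    """Models that still have at least one flagged risk open."""
    return [m["model"] for m in portfolio if m["open_risks"]]


for row in dashboard_rows(portfolio):
    print(row)
print(dict(stage_counts(portfolio)), models_needing_attention(portfolio))
```

Even a plain table like this gives leadership a view of the whole portfolio without asking anyone for a status update.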

Now Let’s Turn the Lens Inward: Is Your AI Collaboration Where It Should Be?

Before wrapping up, let’s pause and look inward.

We often talk about AI strategy, innovation, and compliance as separate conversations. But the truth is, they’re deeply connected, and the quality of our collaboration across teams often determines whether our AI efforts lead to measurable success or unintended risk. So now is a good time to ask the harder questions. Not just about tools or policies, but about structure, culture, and alignment.

Below are questions we invite you to sit with. Gather your leadership team (across AI, compliance, legal, risk, and data) and talk through these together. The answers won’t just shape your enterprise AI governance approach. They’ll shape your outcomes.

Strategic Alignment & Shared Ownership

- Are legal, compliance, and data science actually collaborating from day one, or still working in sequence?

- Does your AI governance framework clearly outline who owns what, when, and why?

- Is governance treated as a shared goal or as a handoff between departments?

- Can your cross-functional teams make joint decisions without getting stuck in hierarchy?

Risk, Compliance & Legal Integration

- Are legal and compliance actively involved during AI development (or reacting to decisions already made)?

- How are you tracking your obligations under evolving laws like GDPR or the EU AI Act?

- Do you have a reliable way to audit your AI systems and act on what you find?

- Are you confident your team is balancing innovation with risk management, or is one outpacing the other?

Data, Models & Explainability

- Is your data governance solid across functions, or does each team play by different rules?

- How do you handle black-box models when regulators or stakeholders ask, “Can you explain this?”

- Are you proactively addressing model bias and making those efforts visible?

- Do your data scientists have the tools, support, and direction to embed governance into their work?

Culture, Communication & Capability Building

- Are your legal, risk, and compliance teams equipped with the AI literacy they need?

- How often do your technical and non-technical teams sit down to share what they’re seeing?

- Is there space in your culture to raise tough questions about AI systems (and do those questions go anywhere)?

- How do you measure the quality of your collaboration? Are you improving, or standing still?

Conclusion

You’ve seen how silos hold AI back (not because people aren’t doing their jobs, but because they’re not doing them together). Legal is managing risk. Compliance is tracking integrity. Data science is building fast and pushing boundaries. All three are essential. But when they’re out of sync, your smartest work ends up slowed down or diluted on arrival. And that’s the core of what this guide has laid out. Not just how silos form, but how to break through them. How to shift from handoffs to shared ownership. From “let me know when it’s done” to “let’s build it together from the start.”

We’ve talked about what each team brings to the table and why alignment isn’t optional when the stakes are high. We’ve unpacked what real collaboration looks like (from shared glossaries and co-designed processes to joint KPIs and centralized documentation). And we’ve asked you to look inward: to ask the questions that surface the gaps before they become blockers.

So if you’re ready to move from best intentions to best practices (and get legal, compliance, and data science working as one team), Lumenova AI can help.

Book a demo today and see how our Responsible AI platform makes alignment real, governance scalable, and silos a thing of the past.

Frequently Asked Questions

The top AI best practices include co-writing governance plans, sharing success metrics transparently, maintaining centralized documentation, and fostering real-time collaboration across teams. These methods reduce silos and support alignment between legal, compliance, and data science teams.

AI projects fail when legal, compliance, and data science operate in silos—leading to misaligned priorities, missed risks, and unusable models. Cross-functional collaboration ensures AI systems are not only innovative but also compliant, explainable, and audit-ready.

Co-ownership of AI governance starts with joint planning—creating shared approval workflows, model validation checklists, and collaborative documentation processes. This helps shift from reactive to proactive risk management and streamlines AI deployment.

A shared vocabulary prevents misunderstandings by aligning definitions of critical terms like “bias,” “explainability,” or “high-risk model.” Establishing a common glossary enables smoother collaboration and helps prevent delays caused by miscommunication.

To ensure continuous compliance, teams should embed governance into development workflows using automated checkpoints, audit logs, and risk tracking. This makes compliance seamless and ensures issues are addressed early, not after deployment.