
AI has moved from experiment to infrastructure. What started as a niche capability is now embedded in how decisions are made, how products are shaped, and how operations run. It’s no longer only a question of whether companies are using AI. It’s about whether they are governing it well.

When governance is missing, AI introduces more risk than value. When governance is strong, AI becomes an asset (trusted, explainable, and fit for purpose).

In this article, we’ll explore the pathway to effective AI governance, providing insights on key steps, best practices, and how to manage AI risk and compliance across your organization.

Why AI Governance Matters Now

AI systems can be brilliant, but they can also be brittle. They make predictions, not promises. And when those predictions go unchecked, the fallout is real: biased hiring tools, unexplainable credit decisions, chatbots generating inappropriate content, sensitive data showing up where it shouldn’t.

Regulators have noticed. So have customers. So have boards. That’s why leading organizations are not waiting for a crisis to start governing AI. They’re building structured programs that define risk, assign responsibility, and monitor models with discipline.

Good AI governance is about:

- Reducing exposure to legal and ethical risks

- Ensuring model quality over time

- Aligning AI use with business goals and public expectations

- Creating explainable and auditable practices

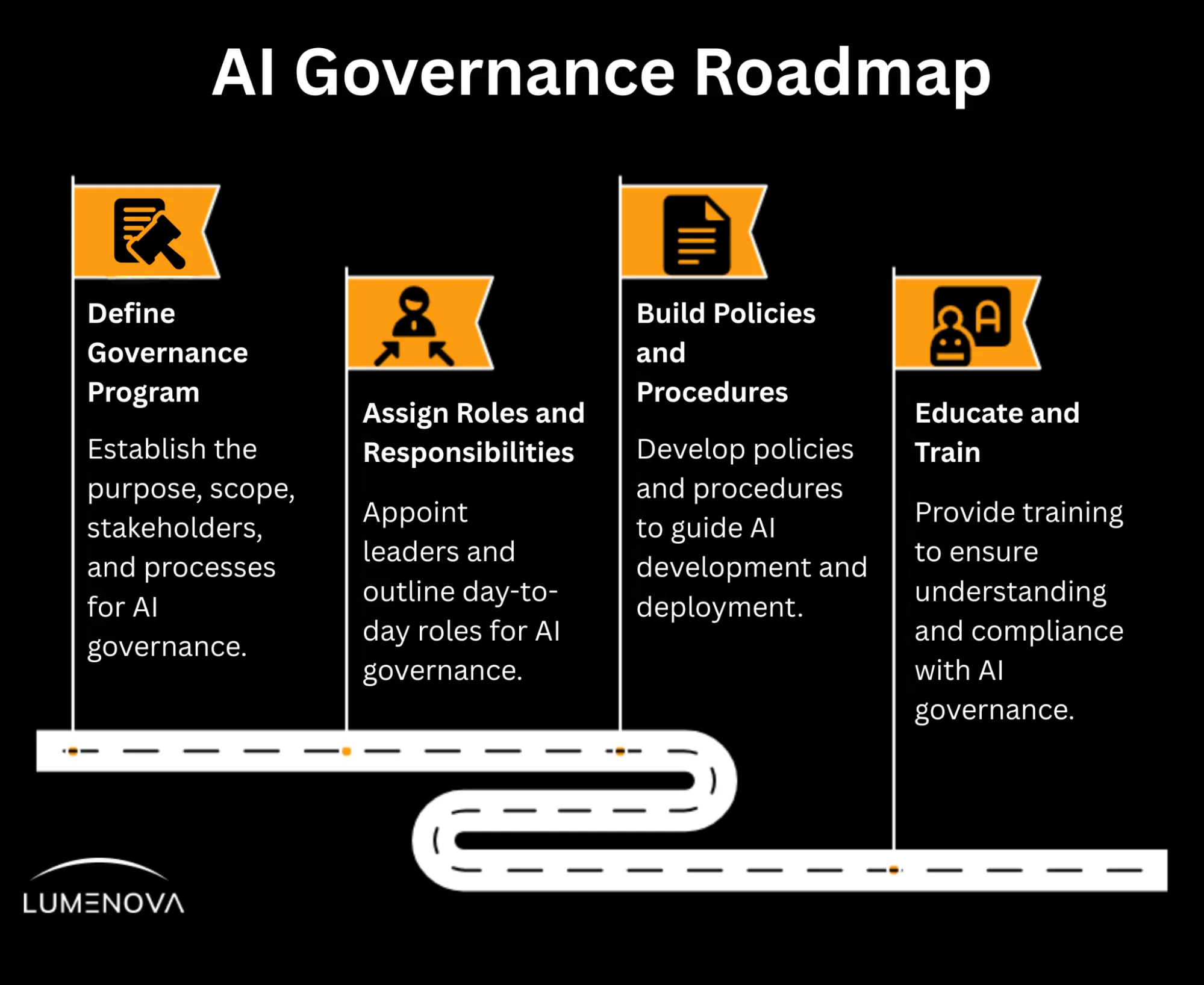

4 Steps To AI Governance

Step 1: Define Your Governance Program

Start with clarity. Every strong enterprise AI governance effort begins by defining its purpose and scope.

That means drafting a charter or mandate that answers four questions:

- Why are we doing this? (Mission)

- What does it cover? (Scope)

- Who is involved? (Stakeholders)

- How will it work? (Responsible AI (RAI) principles and decision-making processes)

Your charter doesn’t need to be fancy. But it must be clear. It should define key terms so everyone speaks the same language, and it should draw lines between what’s allowed, what’s restricted, and what needs review.

Good governance starts with intention.

Step 2: Assign Roles and Responsibilities

AI can be a powerful cross-functional tool, which means it needs cross-functional governance. That governance starts with defining who does what.

Leadership Roles

Start at the top. Appoint a Head of AI Governance or designate an existing leader with clear accountability. Then establish a governance board or council with members from:

- Legal and compliance

- Risk and audit

- Data science and engineering

- Business units

- Privacy and security

Day-to-Day Roles

Next, outline who will do the work. This includes:

- Application Owners: Build or procure AI tools, and ensure they are properly reviewed and documented before deployment.

- Reviewers: Independently assess AI systems before and after deployment.

- Accountable Executives: Senior leaders who oversee AI-related business impacts and ensure risks are properly escalated and addressed.

Every AI model should have someone clearly responsible for it. No exceptions.
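One way to make "no exceptions" checkable is to keep a model inventory that flags any model missing one of the roles above. The Python sketch below is a minimal illustration under that assumption; the `ModelRecord` fields and the example model names are hypothetical and do not reflect any particular tool's schema.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ModelRecord:
    """One entry in a hypothetical AI model inventory."""
    model_id: str
    application_owner: Optional[str] = None
    reviewer: Optional[str] = None
    accountable_executive: Optional[str] = None

# Roles every model must have assigned (mirrors the day-to-day roles above).
REQUIRED_ROLES = ("application_owner", "reviewer", "accountable_executive")

def missing_roles(record: ModelRecord) -> list[str]:
    """Return the required roles that are unassigned for this model."""
    return [role for role in REQUIRED_ROLES if not getattr(record, role)]

def ownership_gaps(inventory: list[ModelRecord]) -> dict[str, list[str]]:
    """Map each model with at least one unassigned role to the roles it lacks."""
    gaps = {}
    for record in inventory:
        missing = missing_roles(record)
        if missing:
            gaps[record.model_id] = missing
    return gaps

# Hypothetical inventory: the chatbot has an owner but no reviewer or executive.
inventory = [
    ModelRecord("credit-scoring-v3", "a.chen", "risk-team", "cro"),
    ModelRecord("support-chatbot", "j.ortiz"),
]
print(ownership_gaps(inventory))
```

Running a check like this on a schedule, or as a gate before deployment, turns the ownership rule into something you can verify rather than assume.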

Step 3: Build Policies and Procedures

Once people are in place, they need rules to follow. Now is the time to create clear AI policies and procedures that guide AI development and deployment.

Your Core Documents

AI Governance Policy

- Scope and definitions

- Ethical principles

- Risk appetite and thresholds

- Regulatory standards

AI Principles Statement

- A shorter, public-facing AI mission statement and value proposition

Operational Procedures

- How to assess risk levels

- How to report impacts

- How to protect AI systems and training data

- How to document AI systems

- How to approve new tools and policies

- How to monitor performance and retrain models when needed

These documents should mirror your existing policy structures, but must be tailored for AI’s unique risks. Use simple language. Make them easy to find and follow.
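To illustrate what a procedure for assessing risk levels might look like, the sketch below turns answers from a short intake questionnaire into a risk tier that decides who must sign off. The factors, weights, and thresholds are hypothetical placeholders; in practice they would come from the risk appetite and thresholds set in your AI Governance Policy.

```python
from dataclasses import dataclass
from enum import Enum

class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

@dataclass
class UseCase:
    """Answers to a hypothetical intake questionnaire for a proposed AI use case."""
    affects_individuals: bool  # e.g., hiring, lending, or other consequential decisions
    uses_personal_data: bool
    fully_automated: bool      # no human reviews outputs before they take effect
    customer_facing: bool

# Illustrative weights and thresholds, not recommended values.
WEIGHTS = {
    "affects_individuals": 3,
    "uses_personal_data": 2,
    "fully_automated": 2,
    "customer_facing": 1,
}
HIGH_THRESHOLD = 5
MEDIUM_THRESHOLD = 2

# Sign-offs required before deployment at each tier (hypothetical policy).
REQUIRED_APPROVALS = {
    RiskTier.LOW: ["application_owner"],
    RiskTier.MEDIUM: ["application_owner", "reviewer"],
    RiskTier.HIGH: ["application_owner", "reviewer", "accountable_executive"],
}

def assess(use_case: UseCase) -> RiskTier:
    """Sum the weights of the risk factors that apply and map the total to a tier."""
    score = sum(weight for factor, weight in WEIGHTS.items() if getattr(use_case, factor))
    if score >= HIGH_THRESHOLD:
        return RiskTier.HIGH
    if score >= MEDIUM_THRESHOLD:
        return RiskTier.MEDIUM
    return RiskTier.LOW

resume_screener = UseCase(affects_individuals=True, uses_personal_data=True,
                          fully_automated=False, customer_facing=False)
tier = assess(resume_screener)
print(tier, REQUIRED_APPROVALS[tier])
```

In this example, a resume-screening tool that affects individuals and uses personal data scores 5 and lands in the high tier, so it needs approval from all three roles. Keeping the weights and thresholds in one place makes them easy to review and adjust as the policy changes.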

Want to learn how to build AI policies that mitigate risk and support regulatory compliance? Read our article “How to Build an AI Use Policy to Safeguard Against AI Risk”.

Step 4: Educate and Train Your People

Governance only works when people understand it. This means building and maintaining AI literacy across the organization.

- General Staff: Understand what AI is, how it’s used, and what to watch out for

- Model Builders: Learn design and development standards, including data hygiene and risk controls

- AI Users: Know how to use tools responsibly and spot poor outputs

- Reviewers and Risk Leads: Stay current with regulation, policy, and technical assessments

Best Practices for Long-Term Success

As your governance program matures, these habits will keep it strong:

- Centralize human oversight to avoid silos and inconsistent reviews

- Align with regulations early, not after problems arise

- Document decisions so you can show your work to auditors and leadership

- Automate where you can to reduce human error and save time

- Review and improve your governance regularly as tech and AI laws evolve

Governance isn’t a one-and-done project. It’s a system that adapts as AI changes.
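Documenting decisions and automating where you can often meet in a simple decision log. The sketch below appends each governance decision to a JSON Lines file; the file layout and field names are assumptions for illustration, and a production system would more likely use a database or a dedicated audit store with access controls.

```python
import json
from datetime import datetime, timezone
from pathlib import Path

def log_decision(log_path: Path, model_id: str, decision: str,
                 approver: str, rationale: str) -> dict:
    """Append one governance decision as a JSON line and return the stored record."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "decision": decision,  # e.g., "approved", "rejected", "retrain"
        "approver": approver,
        "rationale": rationale,
    }
    # Open in append mode so earlier entries are never overwritten.
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record

def decisions_for(log_path: Path, model_id: str) -> list[dict]:
    """Read back every logged decision for one model, oldest first."""
    if not log_path.exists():
        return []
    with log_path.open(encoding="utf-8") as f:
        return [r for r in map(json.loads, f) if r["model_id"] == model_id]
```

Because records are only ever appended, the log preserves the history of each model's approvals, which is the kind of trail auditors and leadership ask for.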

How Lumenova AI Supports AI Governance

Many companies know they need governance. Fewer know how to scale it without burying their teams in manual work. We offer:

A Single Platform for AI Risk Management & AI Governance

Lumenova AI combines deep technical evaluation with enterprise-grade risk controls. With it, you can:

- Centralize all AI models in one inventory

- Assign roles, responsibilities, and ownership clearly

- Set and track compliance across regions and industries

- Automate documentation and approvals

End-to-End Oversight

We evaluate every model across five critical risk areas:

- Fairness

- Explainability and interpretability

- Validity and reliability

- Security and resilience

- Data integrity

You get clear visibility into what your models are doing, where they might go off track, and what to do about it.
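To give a sense of what a fairness check can involve, the sketch below computes a disparate impact ratio: the lowest group's selection rate divided by the highest. It is a generic illustration, not Lumenova AI's evaluation method. The 0.8 cutoff follows the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures, a rule of thumb for flagging potential adverse impact rather than a definitive finding; the group labels and counts are hypothetical.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """Selection rate per group from (group, was_selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest

def flags_adverse_impact(outcomes, threshold=0.8):
    """True when the ratio falls below the four-fifths rule of thumb."""
    return disparate_impact_ratio(outcomes) < threshold

# Hypothetical screening results: group A selected 50 of 100, group B 30 of 100.
outcomes = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
print(selection_rates(outcomes))
print(disparate_impact_ratio(outcomes), flags_adverse_impact(outcomes))
```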

Monitoring That Actually Monitors

AI doesn’t stop changing after launch. We track live model behavior to catch:

- Data drift

- Model drift

- Shifts in accuracy or decision quality

When something looks off, the right people get notified. Fast.
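Data drift is often quantified with the Population Stability Index (PSI), which compares how a feature's values are spread across bins in a baseline sample versus live traffic: PSI is the sum over bins of (actual share minus expected share) times ln(actual share / expected share). The sketch below is a generic, standard-library illustration, not a description of how Lumenova AI's monitoring works; the bin edges, the small floor for empty bins, and the 0.25 alert threshold (a commonly cited rule of thumb for a significant shift) are assumptions you would tune.

```python
import math
from bisect import bisect_right

def bin_proportions(values, edges):
    """Share of values per bin; sorted interior cut points give len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def psi(baseline, live, edges, eps=1e-6):
    """Population Stability Index of `live` relative to `baseline` for one feature."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(live, edges)
    total = 0.0
    for e, a in zip(expected, actual):
        # Floor empty bins at eps so the log and the division stay defined.
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

def drift_alert(baseline, live, edges, threshold=0.25):
    """Return (psi_score, should_alert) for one feature."""
    score = psi(baseline, live, edges)
    return score, score >= threshold

baseline = list(range(1, 11)) * 10  # hypothetical training-time feature values
live = list(range(6, 16)) * 10      # hypothetical production values, shifted upward
edges = [3, 5, 7, 9]
score, alert = drift_alert(baseline, live, edges)
print(f"PSI={score:.2f}, alert={alert}")
```

With the floor in place, a bin that is empty in one sample contributes a large term, which is why the shifted example scores far above the threshold; some teams merge sparse bins instead. In a real setup, `should_alert` would route a notification to the model's owner and reviewer.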

To learn more about model drift, including its types, its causes, and strategies for detecting, preventing, and managing it, explore our comprehensive two-part series, AI Deep Dives:

Ready for the Future

Our RAI platform is built to adapt as new laws emerge. It stays aligned with:

- Global standards for AI management, risk, security, and privacy (such as ISO/IEC 42001)

- U.S. state-level AI policies (such as California AB 1008, California SB 942, GAIAA, Colorado SB21-169, Colorado SB24-205, and the Utah Artificial Intelligence Policy Act)

- The EU AI Act

And it provides reports and dashboards you can share with auditors, execs, or regulators.

Conclusion

AI governance can feel complex. But the core idea is simple: create structure around systems that can impact people’s lives.

You don’t have to solve everything on day one. Start with a charter. Assign clear roles. Write policies that make sense. Teach your people what matters. Then improve as you go.

And if you want support building an AI governance framework that works at scale, we’re here to help.

Book a demo. See how governance becomes simpler, smarter, and stronger with the right platform.