February 5, 2026

Agentic AI Risk Management and the Limits of Human-in-the-Loop Oversight

The transition from Generative AI to Agentic AI represents a fundamental shift in how enterprises adopt artificial intelligence. We are no longer just asking models to summarize text or generate code snippets; we are granting them the autonomy to execute complex workflows, access external tools, and make decisions that directly impact business operations.

While the productivity gains from “agents” that can autonomously plan and execute tasks are undeniable, these agents introduce a new class of risk. In this new era, the traditional safety net of manual oversight – the Human-in-the-Loop (HITL) – is quickly becoming a bottleneck that can also create a false sense of security.

For enterprise leaders, the challenge is no longer just about model accuracy; it is about managing agentic AI risk at the speed of software.

The Agentic Shift: From Conversation to Action

To understand the risk, we must grasp the change in capability. Traditional Large Language Models (LLMs) are passive; they wait for a prompt and provide an answer. Agentic AI is active. It is designed to pursue goals.

When an agent is given a high-level objective – such as “analyze our cloud spend and optimize for cost” – it breaks that goal down into sub-tasks. It might query a database, access your billing API, draft an email to a department head, and potentially even change server configurations. In practice, this could mean reallocating cloud resources, modifying access permissions, or triggering outbound communications without a human ever reviewing the intermediate steps.

This autonomy is the core value proposition of agentic AI, but it is also the primary source of risk. An agent doesn’t just hallucinate a wrong fact; it can “hallucinate” a wrong action. And unlike a chatbot, where a bad answer can be ignored by a user, a bad action taken by an agent can have immediate, cascading consequences across your infrastructure.

Why Human-in-the-Loop is No Longer Enough

For years, the gold standard for AI safety has been the Human-in-the-Loop. The logic is sound: if an AI model makes a prediction, a human should verify it before it becomes a decision.

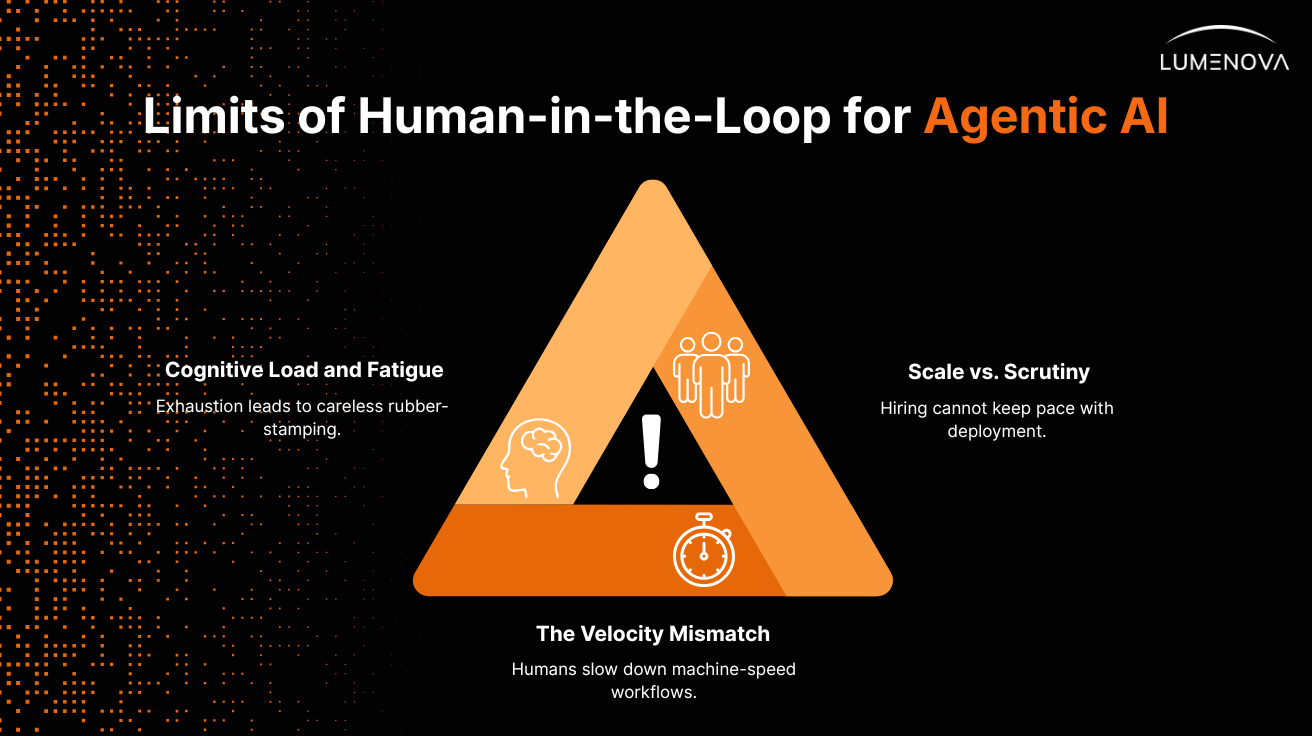

However, as we scale agentic workflows, reliance on manual oversight reveals critical structural weaknesses:

- The Velocity Mismatch: AI agents operate at machine speed. An agent might execute dozens of tool calls and API requests in the time it takes a human reviewer to read the initial prompt. If you require human approval for every step, you destroy the efficiency gains the agent was built to provide. If you only review the final output, you miss the intermediate steps where privacy leaks, security vulnerabilities, or logic errors may have occurred.

- Cognitive Load and Fatigue: Asking humans to endlessly verify AI outputs leads to “rubber-stamping,” where reviewers approve actions without critical scrutiny simply to clear the queue. In complex agentic chains, understanding why an agent made a specific decision requires tracing its reasoning through multiple steps – a forensic task that is often too time-consuming for daily operations.

- Scale vs. Scrutiny: As organizations deploy hundreds or thousands of agents, the ratio of human overseers to AI agents becomes unsustainable. You cannot hire your way out of agentic risk.

While manual oversight remains a key component of an AI risk management system – particularly for high-stakes, final-mile decisions – it is not enough to truly manage organizational risk exposure. Relying solely on it is akin to securing a high-frequency trading algorithm with a manual approval button.
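One way to keep human review for high-stakes decisions without gating every step is to route actions by risk tier. The sketch below is illustrative only: the `AgentAction` type, the `risk_score` field, and both thresholds are assumptions made for this example, and the score is presumed to come from some upstream scorer that is not shown.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


@dataclass(frozen=True)
class AgentAction:
    tool: str          # hypothetical tool name, e.g. "billing.get_spend"
    risk_score: float  # 0.0 (harmless) to 1.0 (severe); assumed to be computed upstream


def route_action(
    action: AgentAction,
    review_threshold: float = 0.5,  # assumed value, tune per deployment
    block_threshold: float = 0.9,   # assumed value, tune per deployment
) -> Route:
    """Send only the riskiest actions to a human; let low-risk ones proceed."""
    if action.risk_score >= block_threshold:
        return Route.BLOCK
    if action.risk_score >= review_threshold:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPROVE
```

With a policy like this, reviewers see a short queue of consequential actions rather than every tool call, which is what makes the remaining manual oversight sustainable.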

The Solution: Structured, Automated Agentic AI Guardrails

To manage the risks of autonomous systems, you need autonomous defense systems. You need structured, automated guardrails that operate at the same speed and scale as your agents.

Automated guardrails act as an always-on control plane that sits between your agents and your enterprise systems. Unlike a human reviewer, these guardrails can inspect every input, intermediate step, and final output in milliseconds, enforcing policy without slowing down innovation.
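As a rough illustration of the control-plane idea, the sketch below wraps every tool call in a set of checks before the call reaches the underlying system. All names here (`ControlPlane`, `GuardrailViolation`, `no_server_changes`, and the tool names) are hypothetical and chosen for the example; this is not any particular product's API.

```python
from typing import Any, Callable, Dict, List


class GuardrailViolation(Exception):
    """Raised when a guardrail check rejects a tool call."""


# A check inspects the tool name and arguments and raises on a violation.
Check = Callable[[str, Dict[str, Any]], None]


class ControlPlane:
    """Sits between the agent and its tools; every call passes through the checks."""

    def __init__(self, tools: Dict[str, Callable[..., Any]], checks: List[Check]) -> None:
        self._tools = tools
        self._checks = checks
        self.audit_log: List[str] = []

    def call(self, tool_name: str, **kwargs: Any) -> Any:
        try:
            for check in self._checks:
                check(tool_name, kwargs)
        except GuardrailViolation as exc:
            self.audit_log.append(f"BLOCKED {tool_name}: {exc}")
            raise
        # All checks passed: record the decision, then run the real tool.
        self.audit_log.append(f"ALLOWED {tool_name}")
        return self._tools[tool_name](**kwargs)


def no_server_changes(tool_name: str, args: Dict[str, Any]) -> None:
    """Example check: reject any tool in the (hypothetical) 'server.' namespace."""
    if tool_name.startswith("server."):
        raise GuardrailViolation("server configuration changes are not permitted")


plane = ControlPlane(
    tools={
        "billing.get_spend": lambda month: {"month": month, "usd": 1200},
        "server.resize": lambda size: f"resized to {size}",
    },
    checks=[no_server_changes],
)
```

The agent is handed `plane.call` instead of the raw tools, so a blocked action never reaches the enterprise system and every decision lands in `audit_log`.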

Effective agentic AI risk management requires guardrails that can handle three distinct layers of defense:

- Input/Output Filtering: Preventing prompt injection attacks that could trick an agent into ignoring its instructions, and ensuring no PII or sensitive IP leaves the secure environment.

- Behavioral Constraints: Defining strict boundaries for agent actions. For example, an agent might be allowed to read from a database but blocked from writing or deleting data unless specific, strictly defined criteria are met.

- Logic & Consistency Checks: Automatically validating that the agent’s reasoning path is sound and that its final action aligns with the original intent of the user.
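The three layers above can be sketched as small, independent checks. This is a deliberately simplified illustration: the regex patterns, operation names, and the `INTENT_ACTIONS` mapping are all assumptions for the example, and a real deployment would need far more robust detection than a handful of patterns.

```python
import re
from typing import Dict, Set

# Layer 1: input/output filtering (toy heuristics, not a complete defense).
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"disregard (the |your )?system prompt", re.IGNORECASE),
]
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")


def looks_like_injection(text: str) -> bool:
    """Flag inputs that match a known prompt-injection phrase."""
    return any(p.search(text) for p in INJECTION_PATTERNS)


def redact_pii(text: str) -> str:
    """Mask email addresses before output leaves the environment."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


# Layer 2: behavioral constraints (reads allowed, writes gated, the rest denied).
READ_OPERATIONS: Set[str] = {"select"}
GATED_OPERATIONS: Set[str] = {"insert", "update", "delete"}


def is_operation_allowed(operation: str, approved: bool = False) -> bool:
    op = operation.lower()
    if op in READ_OPERATIONS:
        return True
    if op in GATED_OPERATIONS:
        return approved  # only when the extra criteria were met upstream
    return False  # unknown operations are denied by default


# Layer 3: consistency check (does the final action fit the stated intent?).
# Hypothetical mapping from a user intent to the actions it may result in.
INTENT_ACTIONS: Dict[str, Set[str]] = {
    "optimize_cloud_cost": {"billing.get_spend", "report.draft"},
}


def action_matches_intent(intent: str, action: str) -> bool:
    return action in INTENT_ACTIONS.get(intent, set())
```

Keeping each layer as a separate function means any one of them can be tightened or replaced without touching the others, and each can be tested in isolation.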

How Lumenova AI Can Help

At Lumenova AI, we recognize that the future of enterprise AI is agentic. And this future requires a robust governance infrastructure. Our platform is designed to provide the automated oversight necessary to deploy agents with confidence. This allows teams to move faster without increasing review overhead, escalation cycles, or compliance risk.

Our solution enables you to define and enforce comprehensive guardrails that act as the “immune system” for your AI architecture.

With Lumenova AI, you can:

- Automate Compliance: Map your internal policies and external regulations (such as the EU AI Act) directly to technical controls that block non-compliant agent actions in real-time.

- Monitor Agent Reasoning: Gain visibility not just into what your agents did, but how they decided to do it. Our platform traces the decision lineage, allowing you to identify drift or misalignment early.

- Scale Without Fear: Deploy agents across various business units, knowing that a centralized policy engine is enforcing safety standards globally, ensuring that a marketing agent doesn’t accidentally violate data privacy rules.
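To make the idea of decision lineage concrete, here is a generic sketch of how a trace could be recorded and walked back from a final action to the original goal. It is not a description of the Lumenova AI platform or its API; the `TraceStep` structure and the `lineage` helper are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class TraceStep:
    step_id: str
    parent_id: Optional[str]  # None for the root goal
    rationale: str            # why the agent took this step
    action: str               # what it did


def lineage(steps: List[TraceStep], step_id: str) -> List[TraceStep]:
    """Return the chain of steps from the root goal down to `step_id`."""
    by_id: Dict[str, TraceStep] = {s.step_id: s for s in steps}
    chain: List[TraceStep] = []
    seen: Set[str] = set()  # guards against cycles in a malformed trace
    current = by_id.get(step_id)
    while current is not None and current.step_id not in seen:
        seen.add(current.step_id)
        chain.append(current)
        current = by_id.get(current.parent_id) if current.parent_id else None
    return list(reversed(chain))


steps = [
    TraceStep("s1", None, "goal: optimize cloud cost", "plan"),
    TraceStep("s2", "s1", "need current spend figures", "billing.get_spend"),
    TraceStep("s3", "s2", "idle instances found", "report.draft"),
]
```

Calling `lineage(steps, "s3")` yields the three steps in order from goal to final action, which is the view a reviewer needs when asking how an agent arrived at a decision.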

Bridging the Gap: The Lumenova AI Forward Deploy Team

We understand that implementing these guardrails is not just a technical challenge; it is also an organizational one. Transitioning to agentic AI requires a deep understanding of both the technology and the unique risk profile of your business.

This is why we offer more than just software. Lumenova AI provides a Forward Deploy Team – a specialized group of technical experts who integrate directly with your organization.

Our Forward Deploy Team acts as a strategic partner to:

- Assess your specific agentic risks: We help you identify where your autonomous workflows are most vulnerable.

- Configure custom guardrails: We don’t believe in one-size-fits-all. We help you tune our platform to balance safety with the specific performance needs of your agents.

- Accelerate time-to-value: By handling the complexities of governance and integration, we free your data science teams to focus on building high-performing agents, knowing the safety architecture is already in place.

The era of Agentic AI is here, and it brings with it immense potential for automation and efficiency. But as we hand over the keys to autonomous agents, we must ensure we haven’t removed the brakes.

Manual Human-in-the-Loop oversight is a necessary backstop, but it cannot be your primary line of defense. To protect your organization from reputational damage, operational failure, and regulatory penalties, you must adopt automated, structured guardrails that scale with your ambitions.

Don’t let risk management be the bottleneck that stalls your AI adoption. Secure your agents, automate your oversight, and scale with confidence.

Ready to see how automated guardrails can secure your agentic workflows? Request a demo of Lumenova AI’s capabilities today.