
For the last few years, the story of enterprise AI has been defined by high expectations and a traditional mindset. Leaders invested in pilots, teams built impressive proofs of concept, and everyone expected a quick path to productivity and ROI.

Yet the reality inside many organizations looks different. According to McKinsey’s 2025 State of AI report, many companies have adopted AI, especially generative AI, but only a minority report meaningful enterprise-level value from those deployments.

This pattern is often described as “Pilot Purgatory”: a state where most use cases remain stuck in experimentation and very few reach meaningful scale. When you look closely at why this happens, the problem is rarely the model architecture or the quality of the technology. It is the lack of a structured, trustworthy way to deploy AI responsibly, consistently, and with confidence. This is where AI governance comes in: not as a blocker or a checklist, but as the strategic foundation that helps companies finally cross the finish line.

The Shift: From “Move Fast” to “Move Smart”

For a long time, the mantra in tech was “move fast,” and innovation teams faced immense pressure to adopt generative AI quickly. But in regulated enterprise environments such as finance, healthcare, and insurance, speed cannot be the only priority.

Moving from an AI prototype to enterprise-wide deployment without a defined framework is where many initiatives break down. Instead of scaling, teams encounter predictable barriers that prevent models from reaching production.

- Legal may halt progress over copyright and liability concerns.

- Security may flag undefined data risks and potential leakage.

- Business leaders may freeze, fearing “hallucinations” could damage brand reputation.

- Engineering may lack visibility into model changes after launch.

Without a unified way to make decisions, every promising initiative slows down. This is the moment AI governance becomes essential. It creates clarity and defines who is responsible for what. It brings technical teams and executives into the same conversation, reducing uncertainty so everyone can move forward with confidence.

Governance as an Enabler, Not a Bottleneck

When people hear “governance,” they often imagine bureaucracy or barriers. In reality, effective governance speeds things up and prevents rework: it surfaces legal issues before they arise and gives teams a clear set of guardrails, so they can innovate without guessing.

A robust governance framework answers key questions before they become roadblocks. For example:

- Risk Tiering: Is this a low-risk internal chatbot or a high-risk customer loan algorithm? (This aligns with risk-based approaches such as the EU AI Act and the NIST AI RMF.)

- Data Lineage: What data is allowed to be used, and do we have the rights to it?

- Oversight: Who approves high-impact use cases before they go live?

- Resolution: How are risks detected, escalated, and resolved?

When these answers are consistent and transparent, teams stop operating in the dark. They stop waiting for approvals that never come and stop worrying about breaking rules they cannot see. Instead, they bring ideas forward faster because the path is clearly defined.

Why Most Use Cases Stall Before Deployment

In our experience, including conversations with enterprises across finance, insurance, and healthcare, the same four barriers stop projects cold. These aren’t technology failures; they are process failures.

1. Lack of Documented Processes (The “Reinventing the Wheel” Trap)

Without a standardized playbook, every new AI idea triggers a chaotic, ad-hoc scramble for permission.

Example: Imagine a marketing team wants to use a generative AI tool to draft emails, while a developer wants to use an AI coding assistant. These are two different risks, but without a process, they both get thrown into the same generic “Legal Review” bucket. Legal doesn’t have a specific policy for “generative AI,” so they freeze the request to research it.

The Result: A simple approval takes four months. By the time it is approved, the tool is obsolete or the team has moved on.

How Governance Fixes It: It creates a “Triage System.” Low-risk internal tools get auto-approved via a standard policy; high-risk customer tools go to a review board.
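As an illustration only, the triage idea can be sketched in a few lines of Python. The two tiers, the three yes/no questions, and the routing labels are hypothetical simplifications; a real policy would define its own criteria and might map them onto a regulatory risk classification.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass(frozen=True)
class UseCase:
    name: str
    customer_facing: bool      # does the output reach customers directly?
    affects_individuals: bool  # does it influence decisions about people (credit, claims)?
    uses_personal_data: bool   # does it process personal or sensitive data?


def triage(use_case: UseCase) -> tuple[RiskTier, str]:
    """Route a use case using hypothetical, deliberately simple rules."""
    if use_case.customer_facing or use_case.affects_individuals or use_case.uses_personal_data:
        return RiskTier.HIGH, "review board"
    # Nothing in the request triggers a high-risk criterion.
    return RiskTier.LOW, "auto-approved under standard policy"


if __name__ == "__main__":
    coding_assistant = UseCase("AI coding assistant", False, False, False)
    credit_model = UseCase("Credit increase model", True, True, True)
    for case in (coding_assistant, credit_model):
        tier, route = triage(case)
        print(f"{case.name}: {tier.value} -> {route}")
```

The specific rules matter less than the fact that they are written down once, so a new request is routed immediately instead of waiting for an ad-hoc review.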

2. Unclear Ownership (The “Liability Gap”)

In many organizations, there is a dangerous gap between who builds the model and who is responsible for its mistakes.

Example: A bank deploys an AI model to approve credit increases. Six months later, an audit reveals the model is denying credit to women at a higher rate than men (a bias issue).

- The Data Science team says: “The math is optimized for accuracy; we didn’t define the ‘fairness’ policy.”

- The Legal team says: “We reviewed the contract, not the training data.”

- The Business Owner says: “I just wanted to automate approvals; I don’t understand how the model works.”

The Result: Finger-pointing ensues, and the model is shut down.

How Governance Fixes It: Governance requires a clear RACI matrix before any code is written. This explicitly defines who is responsible for the work, who is accountable for the outcome, who must be consulted, and who needs to be kept informed. It also requires the business owner to formally sign off on the fairness metrics that data science teams are expected to meet.
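A RACI matrix is essentially a small table, so it can also be checked automatically. The sketch below assumes a hypothetical matrix for the credit model example and applies the common RACI convention that every activity has exactly one Accountable role and at least one Responsible role; the activities and role names are illustrative.

```python
# Each activity maps roles to RACI codes; "AR" means a role is both Accountable and Responsible.
RACI = {
    "Set fairness thresholds": {"Business Owner": "A", "Data Science": "R", "Legal": "C", "Compliance": "I"},
    "Build and validate model": {"Data Science": "AR", "IT Security": "C", "Business Owner": "I"},
    # Deliberately incomplete: nobody is accountable for post-launch bias monitoring.
    "Monitor for bias after launch": {"Data Science": "R", "Legal": "C"},
}


def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of ownership gaps; an empty list means every activity is properly owned."""
    problems = []
    for activity, roles in matrix.items():
        accountable = [role for role, codes in roles.items() if "A" in codes]
        responsible = [role for role, codes in roles.items() if "R" in codes]
        if len(accountable) != 1:
            problems.append(f"{activity}: needs exactly one Accountable role, found {len(accountable)}")
        if not responsible:
            problems.append(f"{activity}: no Responsible role assigned")
    return problems


if __name__ == "__main__":
    for problem in validate_raci(RACI):
        print(problem)
```

Run against this matrix, the check flags only the bias-monitoring activity, which is exactly the liability gap from the bank example.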

3. Limited Visibility After Deployment (The “Silent Failure”)

Most teams treat AI like traditional software: once you ship it, it works until you break it. But AI is different: it degrades over time, even if no one touches the code. This is known as model drift.

Example: A retailer trains a demand forecasting model using data from 2020–2022. It works perfectly at launch.

- The Drift: In 2020, people bought comfortable home goods (sweatpants, candles). By 2025, purchasing behavior has shifted back to office wear and travel gear.

- The Failure: The model keeps predicting high demand for sweatpants because its “knowledge” is stuck in the past. It is still “working” technically (no bugs), but it is failing commercially.

How Governance Fixes It: It mandates continuous monitoring dashboards that trigger an alert if the input data shifts significantly from the training data.
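One common way to implement such an alert is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in the training data versus live data. The sketch below uses only the Python standard library; the bin edges, the small epsilon for empty bins, and the 0.25 alert threshold (a widely used rule of thumb, not a standard) are assumptions to tune per model.

```python
import math
from bisect import bisect_right

ALERT_THRESHOLD = 0.25  # common rule of thumb, not a formal standard; tune per model


def bin_shares(values, edges):
    """Share of values in each bin defined by sorted interior edges (values must be non-empty)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """PSI = sum over bins of (actual_share - expected_share) * ln(actual_share / expected_share)."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_alert(expected, actual, edges):
    """Return the PSI score and whether it exceeds the alert threshold."""
    score = psi(expected, actual, edges)
    return score, score > ALERT_THRESHOLD


if __name__ == "__main__":
    edges = [2.5, 5.0, 7.5]              # hypothetical bin boundaries
    training = [1, 2, 3, 4, 6, 7, 8, 9]  # feature values seen during training
    live = [8, 9, 9, 8, 9, 8, 7, 9]      # recent production values
    score, alert = drift_alert(training, live, edges)
    print(f"PSI={score:.2f}, alert={alert}")
```

For the retailer, `expected` could be a sales feature from the 2020–2022 training period and `actual` the same feature over recent weeks. Identical distributions give a PSI of 0, and the further live data moves away from the training bins, the larger the score.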

4. Fear of Regulatory Exposure (The “Paralysis by Analysis”)

New regulations like the EU AI Act introduce massive penalties for non-compliance: up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious violations. This terrifies executives, leading to blanket bans on innovation.

Example: The fear stems from the “Black Box” problem. If you cannot explain how your AI made a decision, you cannot defend it in court. Companies avoid deploying useful tools because they fear those tools will be classified as “High Risk” under the law, even when they are not.

How Governance Fixes It: It aligns the company with standards like ISO/IEC 42001, which turns the abstract, intimidating concept of “compliance” into a concrete set of requirements. It allows a company to confidently say, “We have documented our risks, we have tested for bias, and we have a human in the loop.” This turns compliance from a gamble into a defensible process.

AI governance directly addresses all four of these barriers, which is why it is a strategic enabler rather than just a risk function.

What Good Governance Actually Looks Like

To move from theory to practice, enterprises must operationalize governance across three pillars:

1. People: The Cross-Functional Council

Decisions cannot sit with Data Science alone. Successful organizations establish an AI Council involving Legal, Compliance, IT Security, and Business stakeholders. This ensures that a model isn’t just technically sound, but legally safe and commercially viable.

2. Process: Risk-Based Evaluation

Not all AI is equal. Governance allows you to categorize use cases. A “High Risk” model requires deep auditing and human-in-the-loop validation. A “Low Risk” tool might only require automated scanning. This creates a “fast lane” for low-risk innovation.

3. Technology: Continuous Monitoring

Models are not “set and forget.” Governance requires software that tracks performance after deployment. This software monitors for data drift (inputs changing over time) and fairness (checking that no bias creeps in), keeping models reliable throughout their lifecycle.
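For the fairness side, one simple monitoring signal is the ratio between the lowest and highest approval rates across groups. The sketch below assumes decisions are logged as (group, approved) pairs. The 0.8 floor borrows the “four-fifths” rule of thumb from US employment-selection guidance and is only an illustrative threshold, not a legal test for any particular domain; real fairness monitoring usually tracks several metrics chosen and signed off by the business owner.

```python
from collections import defaultdict

FAIRNESS_FLOOR = 0.8  # illustrative threshold; the business owner would set the real one


def approval_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def fairness_alert(decisions):
    """Return (lowest / highest approval rate, whether it breaches the floor).

    Expects at least one logged decision.
    """
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0, False  # nobody was approved: rates are equal, so no disparity to flag
    ratio = min(rates.values()) / highest
    return ratio, ratio < FAIRNESS_FLOOR


if __name__ == "__main__":
    log = [("women", True)] * 6 + [("women", False)] * 4 + [("men", True)] * 8 + [("men", False)] * 2
    ratio, alert = fairness_alert(log)
    print(f"ratio={ratio:.2f}, alert={alert}")
```

In this made-up log, women are approved 60% of the time and men 80%, giving a ratio of 0.75, which falls below the floor and raises an alert.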

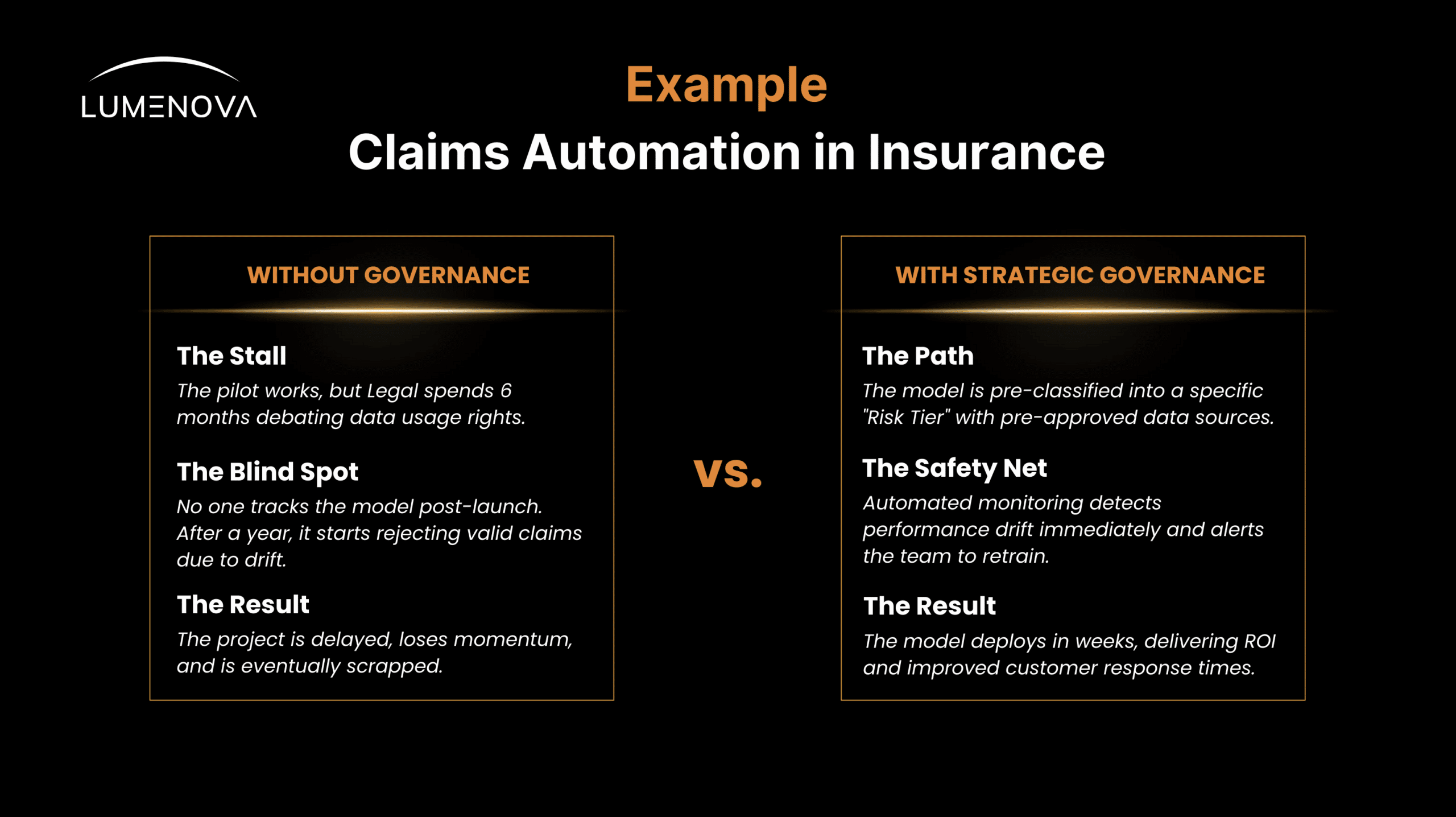

Example: Claims Automation in Insurance

To understand the tangible value of governance, let’s consider an insurer deploying an AI model for claims triage.

Why Most Use Cases Fail (And How Governance Fixes It)

In our work with enterprises across finance, telecom, and services, the same patterns of failure emerge. Governance directly addresses them:

- The Issue: Unclear Ownership.

- The Fix: Governance assigns clear roles: who owns the model, who owns the risk, and who owns the outcome.

- The Issue: Fear of Regulatory Exposure.

- The Fix: By aligning with standards like ISO/IEC 42001, companies transform compliance from a terrifying unknown into a managed process.

- The Issue: Limited Visibility.

- The Fix: Centralized model registries ensure that leadership knows exactly what AI is running, where it is running, and how it is performing.
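A model registry can start as little more than a structured inventory. In the minimal sketch below, the fields, the example entries, and the `needs_attention` rule are hypothetical; production registries typically also track model versions, training data references, and approval records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegistryEntry:
    model: str
    owner: str         # accountable business owner
    risk_tier: str     # e.g. "low" or "high", from the triage step
    deployed_to: str   # system or environment where the model runs
    drift_alert: bool  # latest result from post-deployment monitoring


def inventory(registry):
    """Map each deployment location to the models running there."""
    locations = {}
    for entry in registry:
        locations.setdefault(entry.deployed_to, []).append(entry.model)
    return locations


def needs_attention(registry):
    """High-risk models with an open monitoring alert, for a leadership view."""
    return [e.model for e in registry if e.risk_tier == "high" and e.drift_alert]


if __name__ == "__main__":
    registry = [
        RegistryEntry("Claims triage", "Head of Claims", "high", "claims-platform", True),
        RegistryEntry("Email drafting assistant", "Marketing Lead", "low", "marketing-suite", False),
        RegistryEntry("Fraud scoring", "Head of Fraud", "high", "claims-platform", False),
    ]
    print(inventory(registry))
    print(needs_attention(registry))
```

Even this simple structure answers the three leadership questions: what is running, where it runs, and which high-risk models currently need a look.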

2026 and Beyond: The Foundation for Scale

When enterprises stop treating governance as an obstacle and start seeing it as a strategic capability, everything changes. Deployment timelines shorten, AI systems deliver value sooner, and organizations are better protected from risks that could undo years of progress.

Every company wants to scale AI, but very few have done it successfully. Those that have did not win because they moved the fastest. They succeeded because they built the strongest foundation.

AI governance is that foundation. It provides the structure that allows innovation to grow, adapt, and thrive inside complex enterprises. As you plan for 2026, now is the time to put that foundation in place.

Are you ready to set your foundation for 2026? Book a demo or a chat with one of our consultants.