
Artificial intelligence is transforming industries at a rapid pace, unlocking innovation and driving new levels of efficiency. Yet this progress also brings new risks and responsibilities. As businesses embrace AI to automate and optimize their operations, one critical question arises: who is responsible for governing AI itself? To answer this question, many organizations adopt an AI governance framework that provides clear guidance on how to design, deploy, and oversee AI systems with accountability in mind.

An AI governance framework is a comprehensive system of rules, practices, and processes that an organization puts in place to ensure its AI initiatives are developed and used responsibly and ethically. It’s the strategic blueprint that aligns AI development with business values, stakeholder expectations, and legal requirements. Think of it as the constitution for your company’s AI-powered future.

In this article, we’ll break down the key components of an effective AI governance framework, providing organizations with a blueprint that aligns innovation with accountability.

Why an AI Governance Framework Is Essential

With AI systems making decisions that affect customers, employees, and society at large, the stakes are high.

Poorly managed AI that lacks a structured governance approach can lead to:

- Bias and discrimination in hiring, lending, or healthcare decisions.

- Lack of transparency, leaving stakeholders uncertain about how decisions are made.

- Regulatory penalties, as regulations such as the EU AI Act and GDPR, along with emerging frameworks in the U.S. and Asia, take hold.

- Erosion of trust, damaging a company’s reputation and business prospects.

This is where an AI governance framework becomes not just a nice-to-have but an absolute necessity for businesses of any size. This is especially true (though not only) for those operating in highly regulated sectors like finance, healthcare, or insurance. Such a framework serves one main purpose: to ensure that responsible practices are a foundational requirement of the AI lifecycle rather than an afterthought.

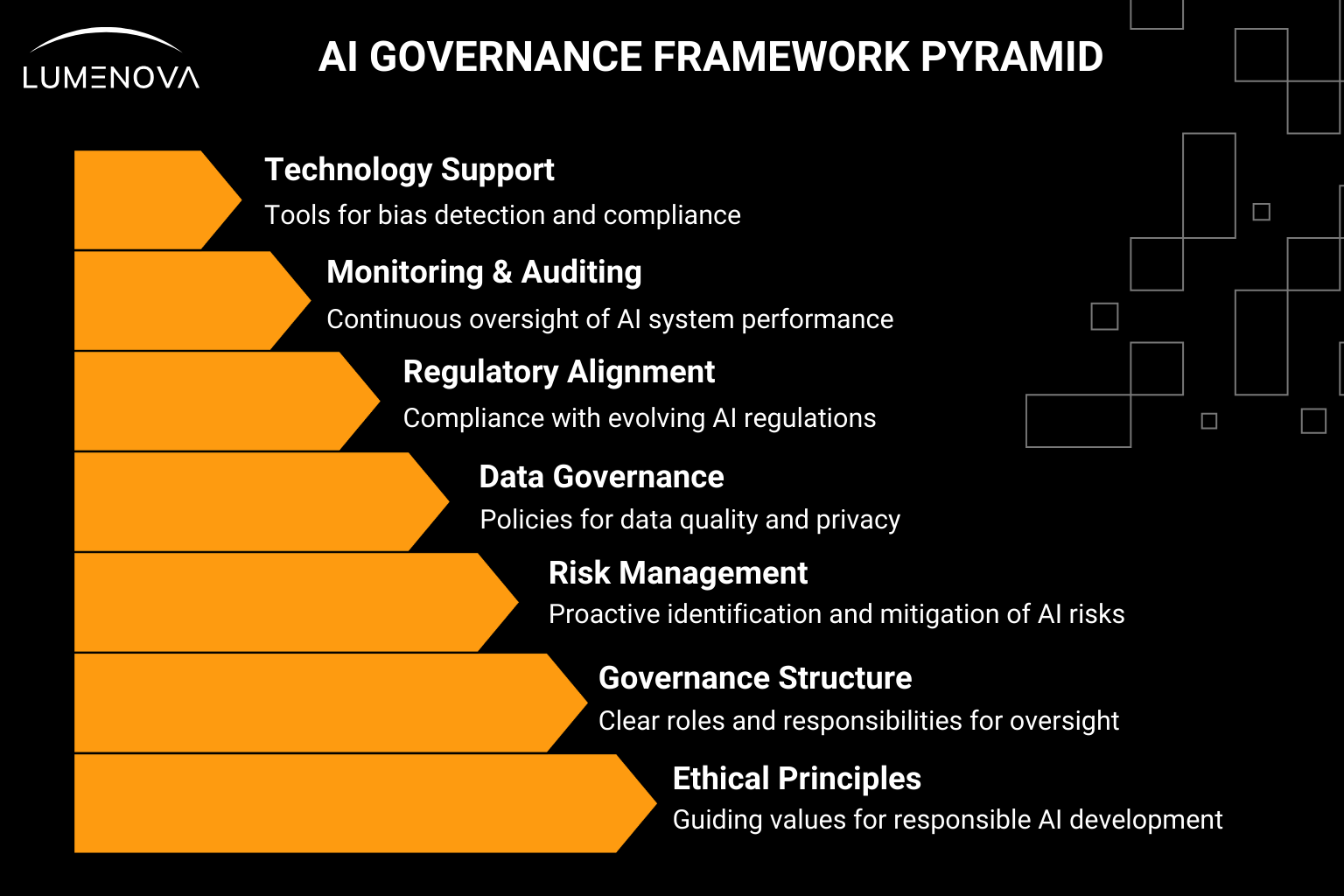

Core Components of an AI Governance Framework

While each organization will adapt governance structures according to size, industry, and regulatory environment, most frameworks share several foundational components.

1. Ethical and Responsible AI Principles

The foundation of a robust AI governance framework is a set of clearly defined ethical principles. This isn’t just about abstract ideals; it’s about codifying your organization’s values into actionable guidelines for your AI systems.

Key principles typically include:

- Fairness and equity: Mitigating bias and ensuring AI systems do not lead to discriminatory outcomes.

- Transparency and explainability: Making AI decision-making processes understandable to developers, users, and regulators.

- Accountability and responsibility: Clearly defining who is responsible for the outcomes of an AI system.

- Privacy and security: Protecting personal data and handling it lawfully, and making the system robust against malicious attacks.

- Human centricity (or human-in-the-loop): Guaranteeing meaningful human oversight in critical decision-making loops, to prevent errors and ensure ethical alignment.

- Sustainability: Considering energy use and long-term societal impacts.

Organizations should formalize these principles in an AI Ethics Charter that serves as the north star for every AI project, from conception to retirement.

2. A Clear Governance Structure, With Well-Defined Roles and Responsibilities

A framework is useless without people to implement and oversee it. Establishing a clear governance structure with dedicated roles within the organization is essential for accountability. Once the roles are defined, communication between them matters just as much; without it, governance often becomes fragmented or siloed.

This structure often includes:

- An AI governance committee or ethics board: A cross-functional team (including legal, tech, ethics, and business leaders) responsible for setting policies, reviewing high-risk projects, and guiding the overall AI strategy.

- Chief AI Officer (CAIO) or Head of AI: A senior leader who champions the AI strategy and ensures the governance framework is effectively implemented across the organization.

- AI Product Owners and developers: The frontline individuals responsible for building and deploying AI systems in accordance with the established guidelines.

- Data stewards: Professionals who manage the quality, integrity, and security of the data used to train and run AI models.

By assigning clear ownership, you ensure that someone is always accountable for the ethical and operational performance of your AI systems. The decision rights of each role should also be defined, including clear escalation paths for approving, modifying, or suspending AI systems.

AI governance cannot succeed as a purely top-down initiative. An effective framework requires buy-in across all stakeholders:

- Employee training: Equipping teams with the skills to build and manage ethical AI.

- Customer transparency: Informing users when they interact with AI and how their data is used.

- Multidisciplinary collaboration: Legal, technical, and business units must collaborate rather than work in silos.

Embedding governance in company culture ensures adoption beyond checklists and audits.

3. Robust Risk Management Practices

Like enterprise information security risk management, AI risk management should be proactive and ongoing. AI introduces a new spectrum of risks, from biased algorithms causing reputational damage to data privacy breaches resulting in regulatory fines.

An effective AI governance framework must include a systematic process for identifying, assessing, and mitigating these risks. Key practices include:

- Risk assessment at design stage: Conducting AI impact assessments to identify potential ethical, security, or operational risks before a project begins (similar to data protection impact assessments, or DPIAs, under GDPR).

- Continuous monitoring: Assessing AI outputs for drift, anomalies, and potential harms over time, and keeping compliance up to date as the patchwork of AI regulations grows.

- Impact assessment tools: Structured frameworks for evaluating social, legal, and ethical implications of new AI products.

Staying ahead of the regulatory curve is not just about avoiding penalties; it’s about building trust and demonstrating corporate responsibility. In practice, risk management might also mean conducting algorithmic audits or using bias-detection tools to surface hidden inequities.
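As one illustration of a bias-detection check, the sketch below computes each group's selection rate and compares it with the most-favored group's rate. It rests on a few assumptions:

- Decisions are binary (approved or not), and a group attribute is recorded alongside them.
- The helper names `selection_rates` and `adverse_impact_flags` are hypothetical.
- The default 0.8 threshold borrows the "four-fifths" rule of thumb from U.S. employment guidance.

Treat a flag as a prompt for human review, not a verdict on fairness.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the favorable-outcome rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True for a favorable outcome (hypothetical format).
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def adverse_impact_flags(decisions, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (impact_ratio, flagged)}. A group is flagged when its
    ratio falls below `threshold`; 0.8 mirrors the four-fifths rule of thumb.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    result = {}
    for group, rate in rates.items():
        # If no group receives favorable outcomes, report no disparity.
        ratio = rate / best if best > 0 else 1.0
        result[group] = (ratio, ratio < threshold)
    return result


# Usage: group A is approved 60% of the time, group B 40% of the time.
decisions = ([("A", True)] * 60 + [("A", False)] * 40
             + [("B", True)] * 40 + [("B", False)] * 60)
for group, (ratio, flagged) in sorted(adverse_impact_flags(decisions).items()):
    print(group, round(ratio, 2), flagged)
# A 1.0 False
# B 0.67 True
```

Here group B's impact ratio is about 0.67, so it is flagged for review. Real fairness assessments usually examine several metrics, because different definitions of fairness can conflict with one another.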

4. Comprehensive Data Governance and Quality Management

AI models are only as good as the data they are trained on. Therefore, strong data governance is a non-negotiable component of AI governance. This means having clear policies and controls for the entire data lifecycle.

An AI governance framework must establish:

- Data provenance controls: Tracking the origin, collection methods, and transformations applied to datasets.

- Data quality standards: Ensuring data is accurate, complete, and representative.

- Privacy and consent controls: Implementing robust measures to protect sensitive information and manage consent.

- Access management: Restricting sensitive data to authorized users only.

Without meticulous data governance, you risk building “garbage in, garbage out” models that create bias, fail to perform, and even break the law.
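Provenance tracking can start with something as simple as a structured record per dataset version. The sketch below shows a hypothetical `ProvenanceRecord`, not tied to any particular tool. It does three things:

- Stores the data's source and collection method.
- Fingerprints the exact bytes with SHA-256.
- Logs each transformation together with the hash of the data it replaced.

With this trail, a model's training data can be traced back to its origin. All field names and the example dataset are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def fingerprint(content: bytes) -> str:
    """SHA-256 hex digest identifying the exact bytes of a dataset."""
    return hashlib.sha256(content).hexdigest()


@dataclass
class ProvenanceRecord:
    """Hypothetical lineage entry for one dataset."""
    dataset_name: str
    source: str               # where the data came from
    collection_method: str    # how it was collected
    content_sha256: str       # fingerprint of the current version
    transformations: list = field(default_factory=list)

    def add_transformation(self, description: str, new_content: bytes) -> None:
        """Log a processing step, then re-fingerprint the resulting data."""
        self.transformations.append({
            "step": description,
            "at": datetime.now(timezone.utc).isoformat(),
            "previous_sha256": self.content_sha256,
        })
        self.content_sha256 = fingerprint(new_content)

    def to_json(self) -> str:
        """Serialize the full record, including its transformation log."""
        return json.dumps(asdict(self), indent=2)


# Usage with a tiny, made-up CSV payload.
raw = b"id,income\n1,52000\n2,61000\n"
record = ProvenanceRecord("loan_applications", "core-banking export",
                          "batch export", fingerprint(raw))
cleaned = raw.replace(b"2,61000\n", b"")
record.add_transformation("dropped row that failed validation", cleaned)
print(record.to_json())
```

In practice, such records would more likely live in a data catalog or lineage system than in application code. The design point is that every transformation leaves a verifiable trail linking one version of the data to the next.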

5. Regulatory and Compliance Alignment

The global landscape for AI regulation is evolving rapidly. An effective AI governance framework must ensure compliance with the laws, and alignment with the standards, that apply across jurisdictions and industries, such as:

- EU AI Act (risk-based classification of AI systems)

- NIST AI Risk Management Framework (voluntary U.S. guidance for managing AI risks and building trustworthy AI)

- Sector-specific requirements (e.g., HIPAA for U.S. healthcare data, PCI DSS for payment card data)

Governance teams should build compliance checklists and monitoring systems that can adapt quickly as new rules are adopted.

6. Monitoring, Auditing, and Reporting

AI governance is not static; it requires continuous oversight. This involves:

- Automated monitoring of model performance, bias, and data drift.

- Audits, conducted internally or by independent third parties, to validate compliance.

- Incident reporting processes for when AI systems fail or cause unintended harm.

This feedback loop transforms AI governance from a compliance tool into an enabler of responsible innovation.
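Automated drift monitoring often compares the distribution of a model input or score in production against a baseline. The sketch below computes the Population Stability Index (PSI), one widely used drift measure, using equal-width bins over the baseline's range. Several details are illustrative assumptions:

- The function name, bin count, and smoothing constant.
- The common rule of thumb that a PSI above roughly 0.25 signals a significant shift worth investigating.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a current sample.

    Bins are equal-width over the baseline's range; values outside that
    range are clipped into the first or last bin. `eps` replaces empty-bin
    shares so the logarithm is always defined.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        n = len(values)
        return [max(c / n, eps) for c in counts]

    e, a = shares(expected), shares(actual)
    # Each term is non-negative, so PSI is 0 only when the shares match.
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Usage: a baseline versus itself, and versus a copy shifted upward.
baseline = [i / 100 for i in range(1000)]   # 0.00 .. 9.99
shifted = [x + 3 for x in baseline]         # same shape, moved right
print(population_stability_index(baseline, baseline))         # 0.0
print(population_stability_index(baseline, shifted) > 0.25)   # True
```

A monitoring job could run a check like this on a schedule and open an incident when the index crosses the agreed threshold. This connects drift detection to the incident reporting process described above.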

7. Technology and Tooling Support

Finally, organizations should reinforce governance with the appropriate technical infrastructure.

Common tools include:

- Bias detection and fairness evaluation frameworks.

- Model monitoring platforms to track drift and accuracy in real time.

- Automated compliance reporting tools aligned with regulations.

- Secure cloud platforms with built-in auditing and role-based permissions.

These tools make governance scalable as AI adoption expands across multiple products and services.

The Way Forward: From Policy to Practice

An AI governance framework is not a one-size-fits-all solution. It must be tailored to organizational contexts, industry requirements, and regional regulations. However, the core building blocks (accountability, ethical principles, regulatory alignment, risk management, data governance, transparency, auditing, stakeholder engagement, and technical enablers) are universally applicable.

When implemented effectively, such a framework doesn’t just protect against risks; it creates a foundation of trust that is critical for AI adoption at scale. Customers, regulators, and employees alike need confidence that AI systems are fair, transparent, and aligned with human values.

Organizations that get AI governance right will not only comply with regulations but also differentiate themselves in an increasingly competitive and trust-sensitive market.

For businesses looking to streamline this process, Lumenova AI’s Responsible AI (RAI) platform is an all-in-one solution for managing the risk and compliance of AI models. Its library of pre-built, customizable frameworks is based on major regulatory and industry standards. Lumenova AI provides tools to detect and track AI risks, ensure up-to-date compliance, and perform technical evaluations of models across key risk verticals like fairness, explainability, and security.

Request a demo today, and see real-life examples of how we can help bridge the gap between technical teams and business executives to foster a culture of responsible AI innovation for your company.