November 18, 2025

Beyond the Audit: Operationalizing AI Model Risk Management Across the Enterprise


Let’s be honest. For many organizations, managing AI risk feels like a last-minute scramble. It’s often treated as a final tollbooth before deployment, a one-time validation by a separate team, or a backward-glancing audit after something has already gone wrong.

This old-school approach just doesn’t work for the dynamic, complex, and high-stakes world of AI.

When an AI model is making critical decisions, you can’t just “check it for bias” once and hope for the best. We’re not in Kansas anymore. The era of simple, predictable input-output programs is long gone, replaced by sophisticated AI black boxes where, given questionable training practices and no ongoing model monitoring, just about anything can go wrong.

Effective AI model risk management (MRM) isn’t a single event; it’s a continuous, collaborative discipline. It must be woven into the entire AI lifecycle, from the first line of code to the model’s eventual retirement.

To build and scale AI you can actually trust, you need a fundamental shift. Sustainable AI adoption requires unified workflows, clear roles, and a shared “single source of truth” that both your technical and risk-focused teams can rely on.

It’s time to get everyone on the same page.

Why Operationalizing AI Model Risk Management Matters

If your current process feels like a game of “pass the hot potato” between data science and compliance, you’re not alone. But the stakes are getting too high to continue this way. Operationalizing AI model risk management is no longer a “nice to have”; it’s a business necessity.


The Regulators Are Catching Up

The days of the AI “wild west” are over. Regulators are no longer giving AI a pass. Landmark rules like the EU AI Act, along with established guidance like SR 11-7 (the U.S. Federal Reserve’s supervisory guidance on model risk management for banks), are setting the bar. They don’t just ask whether you thought about risk; they demand proof that you are actively managing it with ongoing controls. A dusty report from six months ago simply won’t cut it when an auditor comes knocking.

AI Failures: A Failure of Process, Not Just Code

When an AI model fails in the wild, our first instinct is often to blame the algorithm or “bad data.” But if you look closer, the root cause is usually a human process gap.

- A data scientist builds a model on one set of assumptions.

- The business team, lacking documentation, deploys it for a completely different use case.

- Post-deployment monitoring tracks the wrong metrics.

A sobering 2025 report from MIT found that 95% of corporate generative AI initiatives were delivering zero return. The researchers were clear: the culprit isn’t the technology or the algorithms, but the human approach. The report highlights that technology doesn’t fix organizational misalignment; it amplifies it. When you apply AI to a flawed or siloed process, you don’t fix the process; you just “do the wrong thing faster,” institutionalizing the very gaps you were trying to close.

These are the cracks where risk seeps in. An isolated audit can’t fix a misaligned assumption between teams. Effective AI model risk management closes these gaps by creating a continuous, shared feedback loop.

From Risk Bottleneck to Responsible Accelerator

We’ve all seen this movie before. The data science team, eager to innovate, views the risk team as a roadblock. The risk team, lacking technical visibility, sees data science as an uncontrolled liability.

When you operationalize MRM, you build a bridge between these two worlds. By embedding risk and compliance “guardrails” directly into the AI development pipeline, you’re not slowing things down. You’re actually accelerating responsible innovation. Teams can build and deploy faster, with confidence, knowing that safety and fairness are built in from the start.

The Core Components of an Operational AI Model Risk Management Framework

So, how do we build this bridge?

An operational framework is a structure built on four connected pillars, each one feeding into the next.

1. The AI Inventory: Your Central Hub

You can’t manage what you can’t see. The first step is creating a comprehensive, dynamic AI inventory. This isn’t a static spreadsheet that’s outdated the moment you save it. It’s a living, centralized registry of every model in your organization, from early experiments to critical production systems.

Think of your AI Inventory as the air traffic control tower for your AI. You can’t possibly manage your airspace if you don’t know what planes are flying, where they’re going, and what they’re carrying. A spreadsheet is like a flight plan printed yesterday morning (it’s useless in a real-time, dynamic environment). Your inventory needs to be a live, 360-degree view for all your stakeholders.
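To make the idea concrete, here is a minimal Python sketch of what an inventory record and registry might hold, assuming a simple in-memory store. The class names, fields, risk tiers, and the 365-day revalidation window are hypothetical illustrations, not part of any specific product or regulation.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from enum import Enum
from typing import Dict, List, Optional, Tuple


class Stage(Enum):
    EXPERIMENT = "experiment"
    VALIDATION = "validation"
    PRODUCTION = "production"
    RETIRED = "retired"


@dataclass
class ModelRecord:
    model_id: str
    owner: str                 # accountable person or team
    intended_use: str          # documented use case, so a model isn't silently repurposed
    risk_tier: str             # illustrative scale, e.g. "high" / "medium" / "low"
    stage: Stage = Stage.EXPERIMENT
    last_validated: Optional[date] = None
    history: List[Tuple[date, str]] = field(default_factory=list)


class ModelInventory:
    """Hypothetical in-memory registry; a real one would live in a shared, access-controlled store."""

    def __init__(self) -> None:
        self._records: Dict[str, ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        if record.model_id in self._records:
            raise ValueError(f"{record.model_id} is already registered")
        record.history.append((date.today(), f"registered at stage {record.stage.value}"))
        self._records[record.model_id] = record

    def set_stage(self, model_id: str, stage: Stage) -> None:
        record = self._records[model_id]  # KeyError surfaces models that were never registered
        record.history.append((date.today(), f"{record.stage.value} -> {stage.value}"))
        record.stage = stage

    def overdue_for_review(self, today: date, max_age_days: int = 365) -> List[str]:
        """Production models never validated, or not validated within max_age_days."""
        cutoff = today - timedelta(days=max_age_days)
        return [
            r.model_id
            for r in self._records.values()
            if r.stage is Stage.PRODUCTION
            and (r.last_validated is None or r.last_validated < cutoff)
        ]


# Usage with made-up models
inventory = ModelInventory()
inventory.register(ModelRecord("credit-scoring-v3", "risk-analytics", "consumer loan approval",
                               "high", Stage.PRODUCTION, date(2024, 1, 15)))
inventory.register(ModelRecord("churn-exp-7", "growth-ds", "churn experiment", "low"))
inventory.set_stage("churn-exp-7", Stage.VALIDATION)
overdue = inventory.overdue_for_review(today=date(2025, 11, 18))
print(overdue)  # ['credit-scoring-v3']
```

The design choice that matters is that stage changes go through the registry and leave a history behind, so the “flight plan” updates itself instead of depending on someone remembering to edit a spreadsheet.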

2. Pre-Deployment AI Evaluation: The Flight Check

Before a model ever makes a decision affecting a customer or your business, it needs a thorough and standardized evaluation. This goes far beyond just checking for accuracy. It needs to be a repeatable, collaborative workflow.

This is like the pre-launch systems check for a rocket. You wouldn’t just check the fuel level and call it good; you’d run thousands of simulations. Likewise, a model evaluation should test for robustness, fairness, and explainability, not just accuracy.
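A repeatable evaluation can start as a simple gate that runs the same checks on every candidate model. The sketch below assumes a binary classifier exposed as a plain `predict` function, a labeled holdout set, and a group label per row; the function names and thresholds (80% accuracy, a four-fifths disparate impact ratio, a 5% prediction flip rate under a small feature shift) are hypothetical, illustrative defaults rather than recommendations. Explainability checks are out of scope for this sketch.

```python
from typing import Callable, Dict, List, Sequence


def selection_rates(preds: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: Dict[str, int] = {}
    positives: Dict[str, int] = {}
    for p, g in zip(preds, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + int(p == 1)
    return {g: positives[g] / totals[g] for g in totals}


def pre_deployment_gate(
    predict: Callable[[List[float]], int],
    X: Sequence[List[float]],
    y: Sequence[int],
    groups: Sequence[str],
    min_accuracy: float = 0.80,
    min_di_ratio: float = 0.80,   # "four-fifths" rule of thumb
    noise: float = 0.01,
    max_flip_rate: float = 0.05,
) -> List[str]:
    """Return the failed checks; an empty list means the model can move on to human review."""
    failures: List[str] = []
    preds = [predict(x) for x in X]

    # 1. Performance: plain accuracy on the holdout set.
    accuracy = sum(int(p == t) for p, t in zip(preds, y)) / len(y)
    if accuracy < min_accuracy:
        failures.append(f"accuracy {accuracy:.2f} < {min_accuracy}")

    # 2. Fairness: disparate impact ratio = lowest group selection rate / highest.
    rates = selection_rates(preds, groups)
    top = max(rates.values())
    di_ratio = min(rates.values()) / top if top > 0 else 1.0
    if di_ratio < min_di_ratio:
        failures.append(f"disparate impact ratio {di_ratio:.2f} < {min_di_ratio}")

    # 3. Robustness (crude probe): shift every feature by `noise` and count flipped predictions.
    shifted = [predict([v + noise for v in x]) for x in X]
    flip_rate = sum(int(a != b) for a, b in zip(preds, shifted)) / len(X)
    if flip_rate > max_flip_rate:
        failures.append(f"flip rate under perturbation {flip_rate:.2f} > {max_flip_rate}")

    return failures


# Usage with a toy threshold "model" and made-up data
def toy_model(x: List[float]) -> int:
    return int(x[0] > 0.5)


X = [[0.2], [0.7], [0.9], [0.4], [0.6], [0.3]]
y = [0, 1, 1, 0, 1, 0]
groups = ["a", "a", "a", "b", "b", "b"]
failures = pre_deployment_gate(toy_model, X, y, groups)
print(failures)  # ['disparate impact ratio 0.50 < 0.8']
```

Returning a list of named failures instead of a single pass/fail flag gives the risk team something concrete to review, discuss, and sign off on.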

3. Post-Deployment Monitoring & Observability: The Live Dashboard

This is where so many well-intentioned risk programs fall apart. A model is not “done” at launch. It’s a dynamic system interacting with a constantly changing world. Customer behavior shifts, data patterns change, and the model that was fair and accurate on day one can become a major liability by day 90. This is model drift, and it’s a real-time problem.

This is like your car’s dashboard while you’re driving. The car may have been in perfect condition when you drove it off the lot. But the real world has potholes, bad weather, and traffic. Continuous monitoring is your check engine light, your fuel gauge, and your tire pressure sensor all in one. And crucially, when that “check engine light” (e.g., a bias alert) flashes, it must automatically trigger a service ticket for the right mechanic.
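Here is one way the “check engine light” could be wired up, as a hedged sketch. It uses the Population Stability Index (PSI), a common drift statistic, to compare a live window of one feature against its training baseline, plus a disparate impact ratio for the bias check. The 0.25 PSI threshold is a widely used rule of thumb; the `ROUTES` table, team names, and feature name are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical routing table: which team owns which kind of alert.
ROUTES = {"data_drift": "ml-engineering", "fairness": "model-risk-management"}


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index over equal-width bins fitted to the baseline (expected) data."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clamp outliers into edge bins
            counts[idx] += 1
        # eps avoids log(0) when a bin is empty in one of the samples
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def monitor_window(
    feature: str,
    baseline: Sequence[float],
    live: Sequence[float],
    live_selection_rates: Dict[str, float],
    psi_threshold: float = 0.25,
    min_di_ratio: float = 0.80,
) -> List[Dict[str, str]]:
    """Check one monitoring window and return alerts that already have an owner attached."""
    alerts: List[Dict[str, str]] = []

    score = psi(baseline, live)
    if score > psi_threshold:
        alerts.append({"type": "data_drift", "detail": f"{feature} PSI={score:.2f}",
                       "assignee": ROUTES["data_drift"]})

    top = max(live_selection_rates.values())
    di_ratio = min(live_selection_rates.values()) / top if top > 0 else 1.0
    if di_ratio < min_di_ratio:
        alerts.append({"type": "fairness", "detail": f"disparate impact ratio={di_ratio:.2f}",
                       "assignee": ROUTES["fairness"]})
    return alerts


# Usage with synthetic data: the live window has shifted into the upper half of the range.
baseline = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]
alerts = monitor_window("applicant_income", baseline, live, {"group_a": 0.30, "group_b": 0.18})
for alert in alerts:
    print(alert["type"], "->", alert["assignee"])
```

In practice the returned alerts would be posted to a ticketing or paging system; the key idea is that each alert arrives with an owner already assigned, so a bias signal doesn’t sit unread on an engineering dashboard.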

4. Documentation & Auditability: The Ship’s Log

Picture this: An auditor or regulator asks you to justify why your AI model denied a customer’s loan application six months ago. “I’m not sure” or “Let me find that email” are not good answers. The final pillar is a system of automated documentation that captures the entire lifecycle.

This part is like the ship’s logbook in a storm. When you’re being audited (or navigating a crisis), you need a detailed, unchangeable record of your journey: every course correction, every new heading, and who was at the helm. Relying on people’s memory or scattered notes is a recipe for disaster. This logbook needs to be automated, because manual documentation is the first task skipped under a tight deadline.
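One common way to make a log hard to rewrite quietly is hash chaining, where each entry includes the hash of the entry before it. The sketch below uses only Python’s standard `hashlib`, `json`, and `datetime` modules; the `AuditLog` class, its event fields, and the example events are hypothetical. It makes tampering detectable, but a production system would still need write-once storage and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


class AuditLog:
    """Append-only, hash-chained log. Each entry commits to the previous entry's hash,
    so editing or deleting a past entry breaks verification (tamper-evident, not tamper-proof)."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(entry: Dict[str, Any]) -> str:
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True)  # stable key order -> stable hash
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, model_id: str, actor: str, action: str, details: Dict[str, Any]) -> Dict[str, Any]:
        entry: Dict[str, Any] = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_id": model_id,
            "actor": actor,          # who was at the helm
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that each entry points at its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

    def history(self, model_id: str) -> List[Dict[str, Any]]:
        return [e for e in self.entries if e["model_id"] == model_id]


# Usage with made-up events
log = AuditLog()
log.append("credit-scoring-v3", "j.doe", "approved_for_production", {"checklist": "high-risk"})
log.append("credit-scoring-v3", "monitoring", "fairness_alert", {"di_ratio": 0.71})
print(log.verify())  # True
log.entries[0]["details"]["checklist"] = "skipped"  # simulate a quiet retroactive edit
print(log.verify())  # False
```

When the auditor asks about a decision from six months ago, `history()` returns the full trail for that model, and `verify()` shows the trail hasn’t been rewritten since.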

The Lumenova AI Difference: Unifying AI Model Risk Management

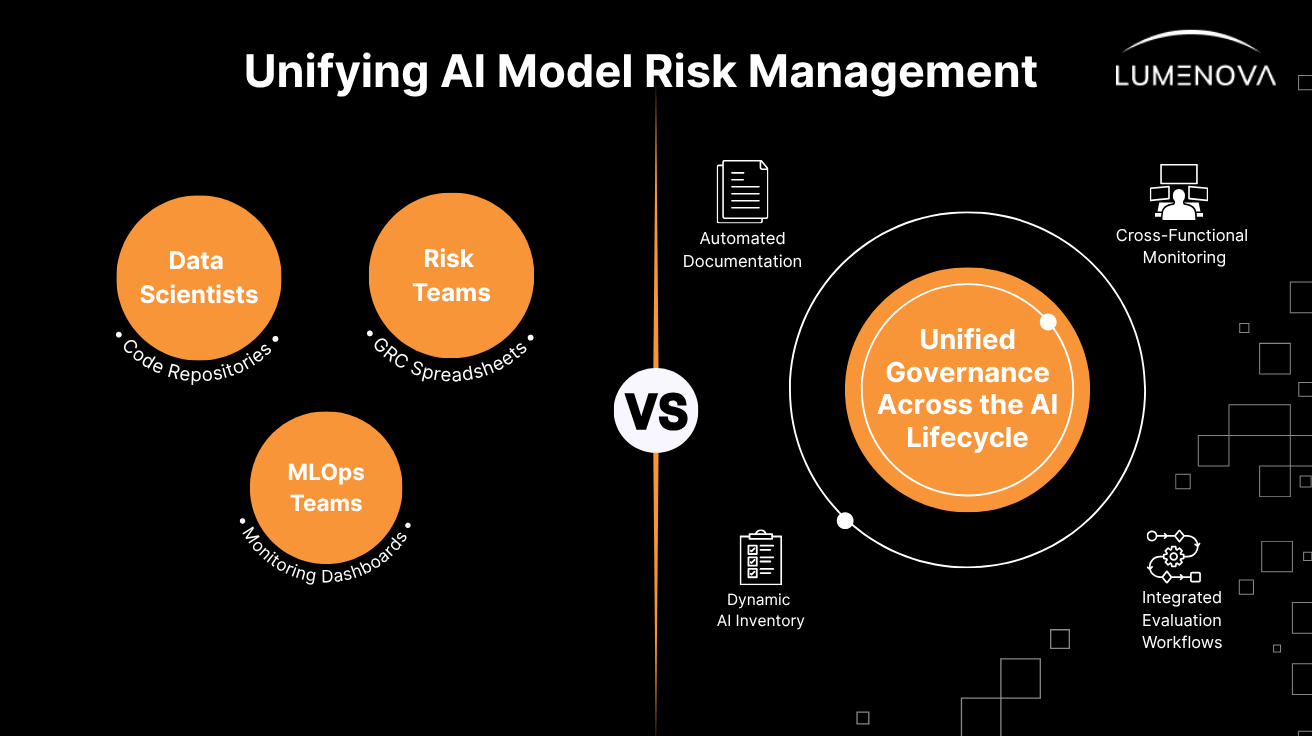

The framework described above (Inventory, Evaluation, Monitoring, and Documentation) is powerful, but it fragments if teams are disconnected. Data scientists live in code repositories, MLOps teams live in monitoring dashboards, and risk teams live in spreadsheets and GRC platforms. This is the central challenge of operationalizing AI model risk management.

This is precisely the gap the Lumenova AI platform is built to close.

Lumenova AI provides a single, unified platform that connects every stakeholder across the entire AI lifecycle, transforming MRM from a series of disconnected handoffs into a seamless, collaborative workflow.

- A dynamic AI inventory: Lumenova AI serves as your single source of truth, continuously registering models and metrics to provide a real-time, 360-degree view of your entire AI landscape.

- Integrated evaluation workflows: Our platform embeds rigorous pre-deployment testing for bias, fairness, and robustness directly into your existing MLOps pipeline. Risk teams can build custom, risk-based checklists and review policies, ensuring no high-risk model is deployed without proper oversight.

- Cross-functional monitoring: Lumenova AI’s post-deployment monitoring bridges the gap between technical metrics (like data drift) and business-level risk (like fairness degradation). Alerts are automatically routed to the right stakeholders, from data scientists to compliance officers.

- Audit-ready, automated documentation: Lumenova AI automatically generates comprehensive model cards and captures an immutable, time-stamped log of every test, alert, and human-in-the-loop decision. When auditors arrive, your proof of compliance is ready with the click of a button.

By creating a common language and a shared source of visibility, Lumenova AI empowers technical and risk-focused teams to finally collaborate, breaking down the silos that put organizations at risk.

Our Conclusion

AI model risk management has matured. It is no longer an academic exercise or a compliance task to be handled by an isolated committee. It is a dynamic, continuous, and operational discipline that is essential for sustainable business innovation.

The future of AI will be defined not just by the most powerful models, but by the most trustworthy ones. Building that trust requires a new approach, one that operationalizes risk management across the full lifecycle, unifies disparate teams, and provides a single pane of glass for all AI governance.

Ready to move beyond the audit? Schedule a demo of Lumenova AI today to see how you can operationalize your AI model risk management and accelerate responsible AI adoption.