September 3, 2025

Enterprise AI Governance: What Leaders Are Doing, What’s Working, and Where It’s Still Breaking in 2025

A Lumenova AI case study report on the State of Enterprise AI Risk, Oversight, and Operational Maturity

In Q2 2025, Lumenova AI conducted a targeted poll series to better understand how enterprise organizations are tackling the practical challenges of AI governance. The goal was to move beyond broad Responsible AI theory and uncover what’s actually shaping decision-making across technical, legal, and executive teams.

Over five polls, we gathered responses from a small set of enterprise leaders across sectors, focusing on governance implementation, deployment challenges, and risk perception. The results reveal early indicators of a directional shift: organizations are prioritizing embedded governance practices, but many remain stalled in the transition from strategy to execution.

While we continue to collect data, this report summarizes the key early findings, paired with an analysis of what these trends signal for the future of AI oversight.

1. Governance Is Moving Into the Risk Function

Poll question: Which AI governance trend will shape enterprise AI the most by 2026?

- Two-thirds of respondents selected Integrated risk and compliance

- One-third selected Explainability tools and systems

- Zero selected Automated evaluations or “Other”

Key insight:

There is a dominant trend emerging: governance is no longer being treated as an ethical layer or innovation strategy. It’s becoming a risk capability, embedded into the same operational systems that manage compliance, auditability, and controls.

Organizations are no longer asking, “How do we explain this?”

They’re asking, “Where does this fit inside our risk stack?”

2. The Blocker to AI Deployment Speed Is Structural, Not Technical

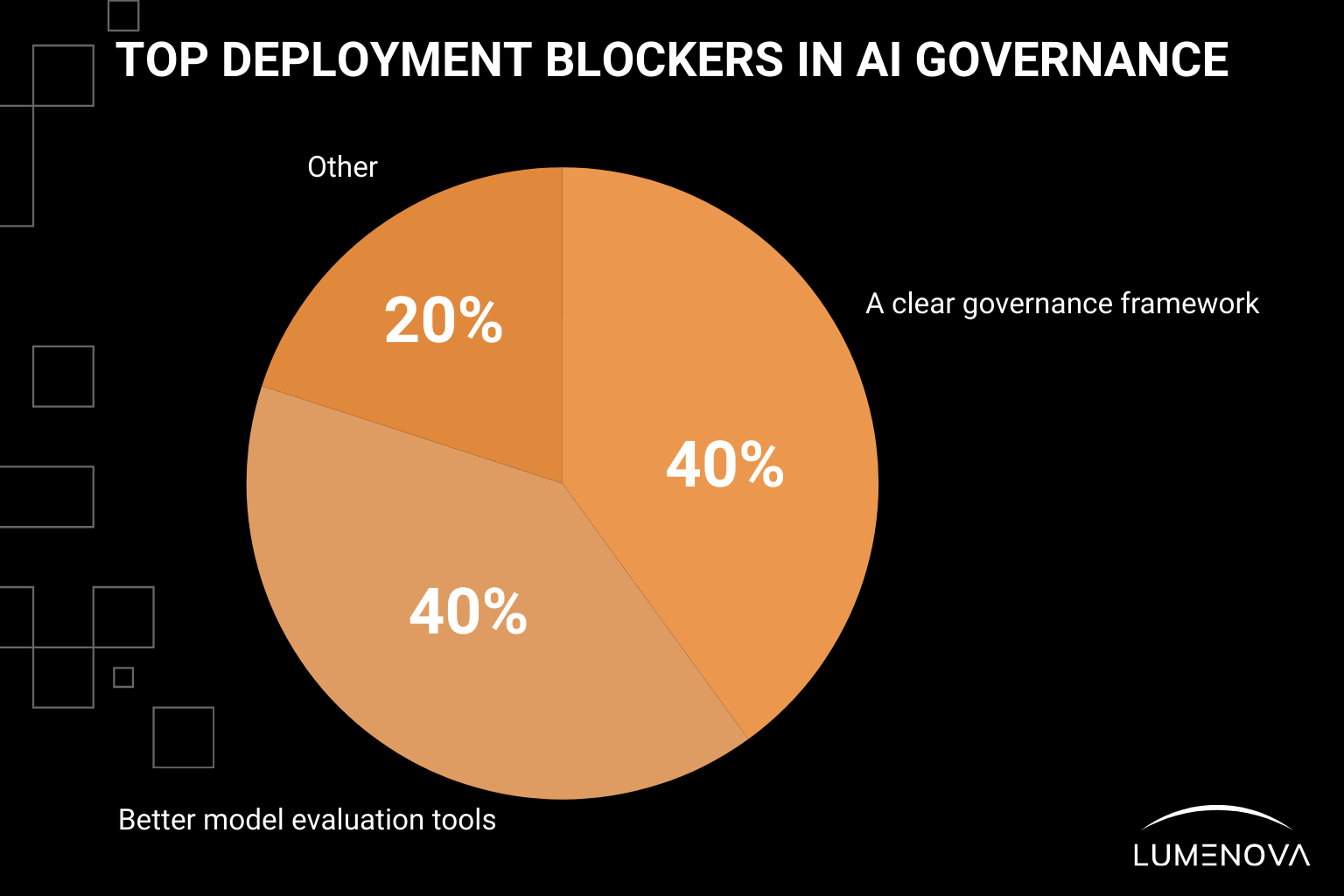

Poll question: What would reduce your AI deployment timeline the most?

- 40% said A clear governance framework

- 40% said Better model evaluation tools

- 20% said Other

- Zero respondents selected Streamlined governance flow

Key insight:

Tooling is important. But without shared expectations (about ownership, oversight, and standards), speed stalls.

Teams aren’t slowed down by regulation. They’re slowed down by ambiguity.

This tells us that governance needs to start with structure, not just software. Otherwise, automation simply accelerates misalignment.

3. Responsible AI Fails Where Execution Begins

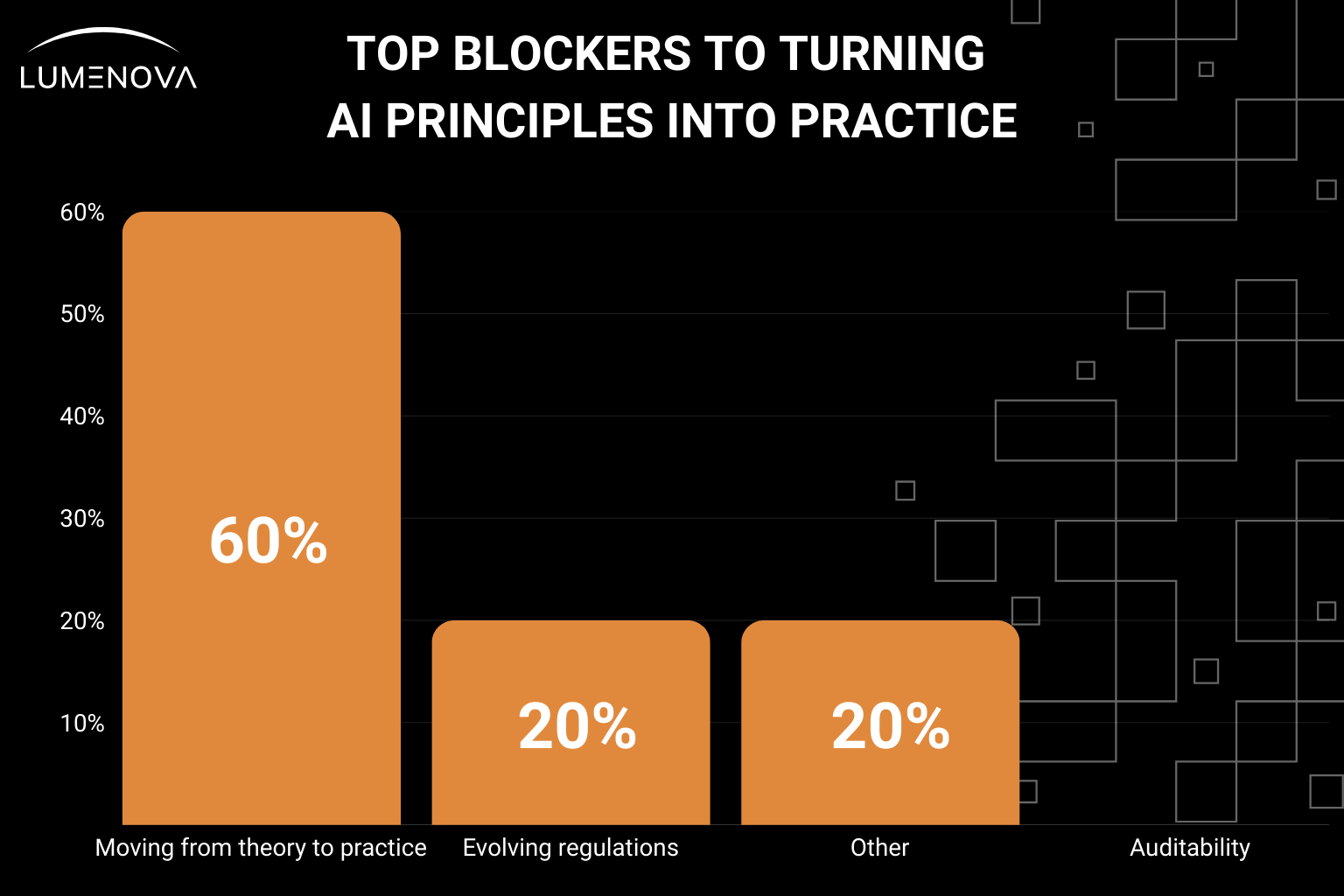

Poll question: What is your organization’s biggest Responsible AI challenge?

- 60% said Moving from theory to practice

- 20% said Evolving regulations

- 20% said Other

- Zero respondents selected Auditability

Key insight:

Most teams understand the “why” of Responsible AI. The challenge is the “how.”

The biggest gap isn’t technical debt or lack of values. It’s the failure to translate those values into embedded processes, documented workflows, and accountable teams.

Governance doesn’t work unless it runs on real infrastructure. And most organizations still don’t have it.

If you want to see what real governance looks like (not just policy slides), start here: The Pathway to AI Governance

4. Trust Is a Multi-Metric Problem

Poll question: What matters most when evaluating AI system performance?

- One-third selected Explainability and fairness

- One-third selected Real-time, actionable metrics

- One-third selected Ethics and alignment

- Zero selected “Other”

Key insight:

No single trust signal dominates.

Leaders are no longer looking for “one metric to rule them all.” They’re triangulating across behavior, transparency, and alignment.

This suggests trust in AI is maturing. It’s not being treated as a static number, but being evaluated as a pattern, influenced by model output, governance context, and business risk.

The tooling that supports this can’t be one-dimensional either.

Trust needs visibility across how the model performs, why it behaves that way, and whether that behavior is aligned.
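To make the “pattern, not a single number” idea concrete, here is a minimal sketch. It assumes three hypothetical trust signals, each with its own illustrative threshold; the names, scores, and thresholds are invented for the example and are not drawn from the poll or from any Lumenova AI product. It shows how an average can look healthy while one dimension quietly fails.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrustSignal:
    name: str        # hypothetical signal name
    value: float     # observed score in [0, 1] (illustrative)
    minimum: float   # assumed acceptance threshold for this signal


def failing_signals(signals):
    """Return the names of signals that fall below their own threshold."""
    return [s.name for s in signals if s.value < s.minimum]


# Illustrative values only.
signals = [
    TrustSignal("performance", 0.93, 0.90),
    TrustSignal("explainability", 0.71, 0.75),
    TrustSignal("alignment", 0.88, 0.80),
]

# A single blended score hides the weak dimension...
average = sum(s.value for s in signals) / len(signals)

# ...while a per-signal check surfaces it.
failures = failing_signals(signals)

print(f"average={average:.2f}, failing={failures}")
```

In this sketch the blended score is comfortably high, yet the explainability signal misses its threshold. That is the kind of gap a one-dimensional metric would not show.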

5. Risk Is Broader Than Security (and Harder to Contain)

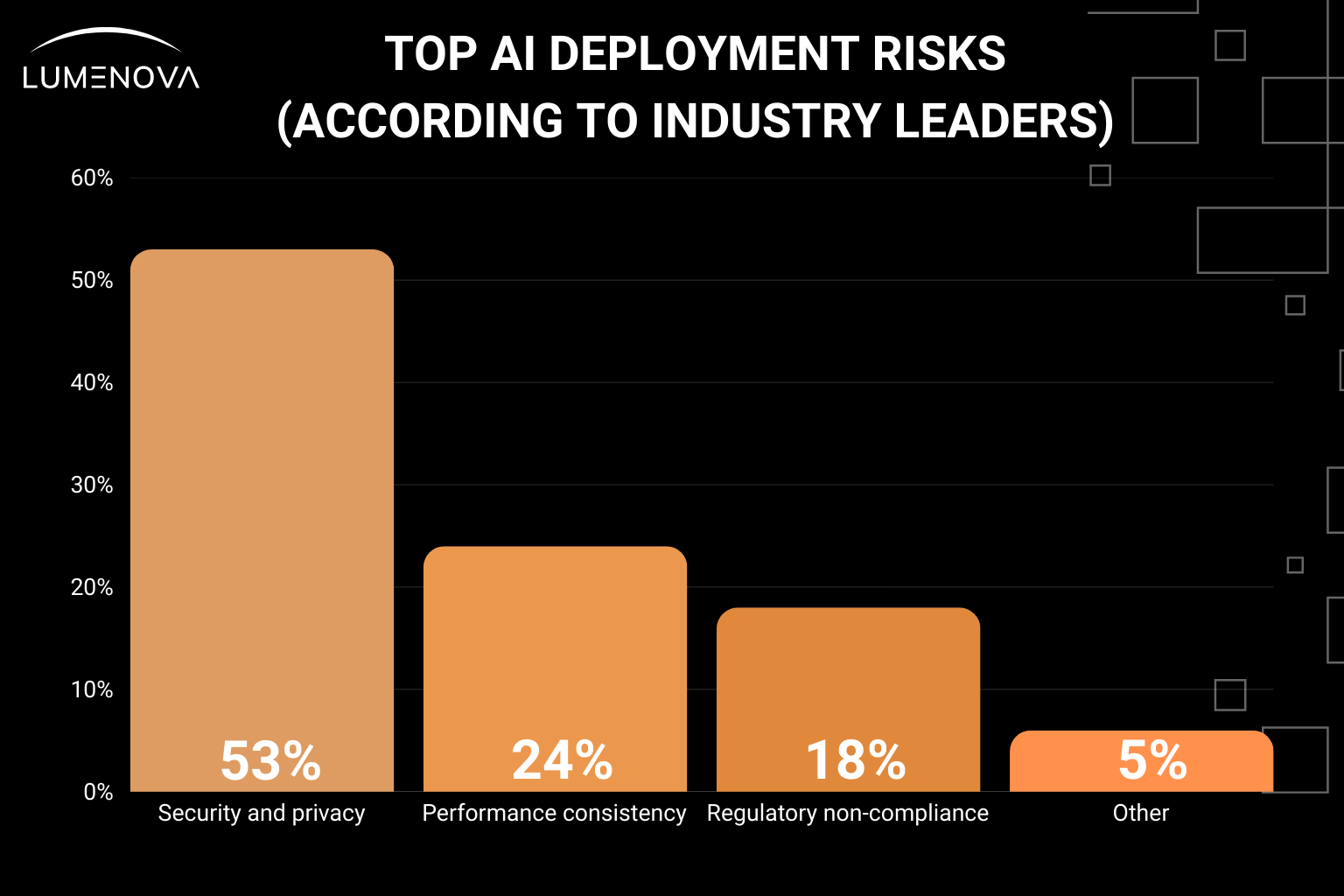

Poll question: What concerns you most when deploying AI at scale?

- 53% selected Security and privacy

- 24% selected Performance consistency

- 18% selected Regulatory non-compliance

- 5% selected Other

Key insight:

Privacy and security remain the primary concern. But the diversity of responses shows that AI risk is multidimensional and operational.

Security failures don’t always come from bad actors. They come from weak controls, unreviewed models, misaligned data pipelines, and fragmented ownership.

Performance issues don’t always come from undertrained models. They come from unmanaged drift, unlabeled edge cases, and broken handoffs between teams.

This is why AI governance must expand beyond model evaluation and fairness.

It has to account for what happens before, during, and after deployment.
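As one illustration of an “after deployment” control, the sketch below computes a population stability index (PSI), a common way to flag drift between a training-time distribution and production data. The bin proportions are invented for the example. The 0.2 alert level is a widely cited rule of thumb, not a standard and not something reported in the poll.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions.

    `eps` guards against log(0) when a bin is empty.
    """
    if len(expected) != len(actual):
        raise ValueError("both distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Illustrative bin proportions for one feature (assumed values).
baseline = [0.25, 0.25, 0.25, 0.25]    # at training time
production = [0.10, 0.20, 0.30, 0.40]  # observed after deployment

ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff

psi = population_stability_index(baseline, production)
needs_review = psi > ALERT_THRESHOLD

print(f"PSI={psi:.3f}, needs_review={needs_review}")
```

A check like this only helps if someone owns the alert and a documented workflow says what happens next, which is the structural point the poll results make.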

If you’re serious about turning AI security from a checklist into a system, this breakdown will help: Understanding AI Security Tools: Safeguarding Your AI Systems

One of the Most Valuable Insights Came From Outside the Poll

During the discussion, Dr. Steven A. Davis, SVP of Operations & Strategy at Wells Fargo, a leading U.S. financial services provider, commented:

“Consistent business processes and representative datasets could also be additions to the list. Junk in, junk out.”

This cuts to the core of what most governance frameworks miss.

You can monitor models, log outputs, and write up principles. But if your business processes are inconsistent, or your data is poorly sourced or unrepresentative, governance doesn’t hold.

Human oversight has to begin before a model is trained (when requirements are defined, data is collected, and workflows are created).

Otherwise, the best governance tooling in the world will still fail quietly.

Final Reflection: The Next Phase of Governance Is Infrastructure, Not Ideology

This poll series revealed a simple but powerful pattern:

- Enterprise leaders know governance is essential

- They’re aligning it with risk and compliance systems

- But they’re struggling to implement it consistently

- Not because they lack conviction, but because they lack coordination

If you’re ready to move beyond principles and actually embed governance into the model lifecycle, this is where to begin: Implementing Responsible AI Governance in Modern Enterprises

You can view the original poll questions, response data, and executive discussion directly on LinkedIn (either on the Lumenova AI company page or via Avron Anstey, Global Director of Business Development at Lumenova AI).

Here at Lumenova AI, we’re working with enterprises that are already past the “why.”

They’re solving for the “how.”

Our responsible AI platform brings governance, risk, and compliance into a single operational system, with model reviews, audit trails, explainability, role-based signoff, and continuous monitoring built in from day one.

Because governance can’t just be something you define.

It has to be something you run.