August 26, 2025

Preventing AI Data Breaches: How AI Data Governance Strengthens Security


Artificial intelligence is transforming how organizations handle sensitive data, from managing customer records to powering smart decision-making systems. But with this power comes a serious responsibility: ensuring AI systems do not become gateways for data breaches. AI data governance for security offers a proactive layer of protection, detecting threats early and reinforcing safety measures across the AI lifecycle.

Let us guide you through how AI data governance not only lowers the risk of breaches but also builds trust and regulatory confidence.

A New Front in Security Risk: AI as a Breach Vector

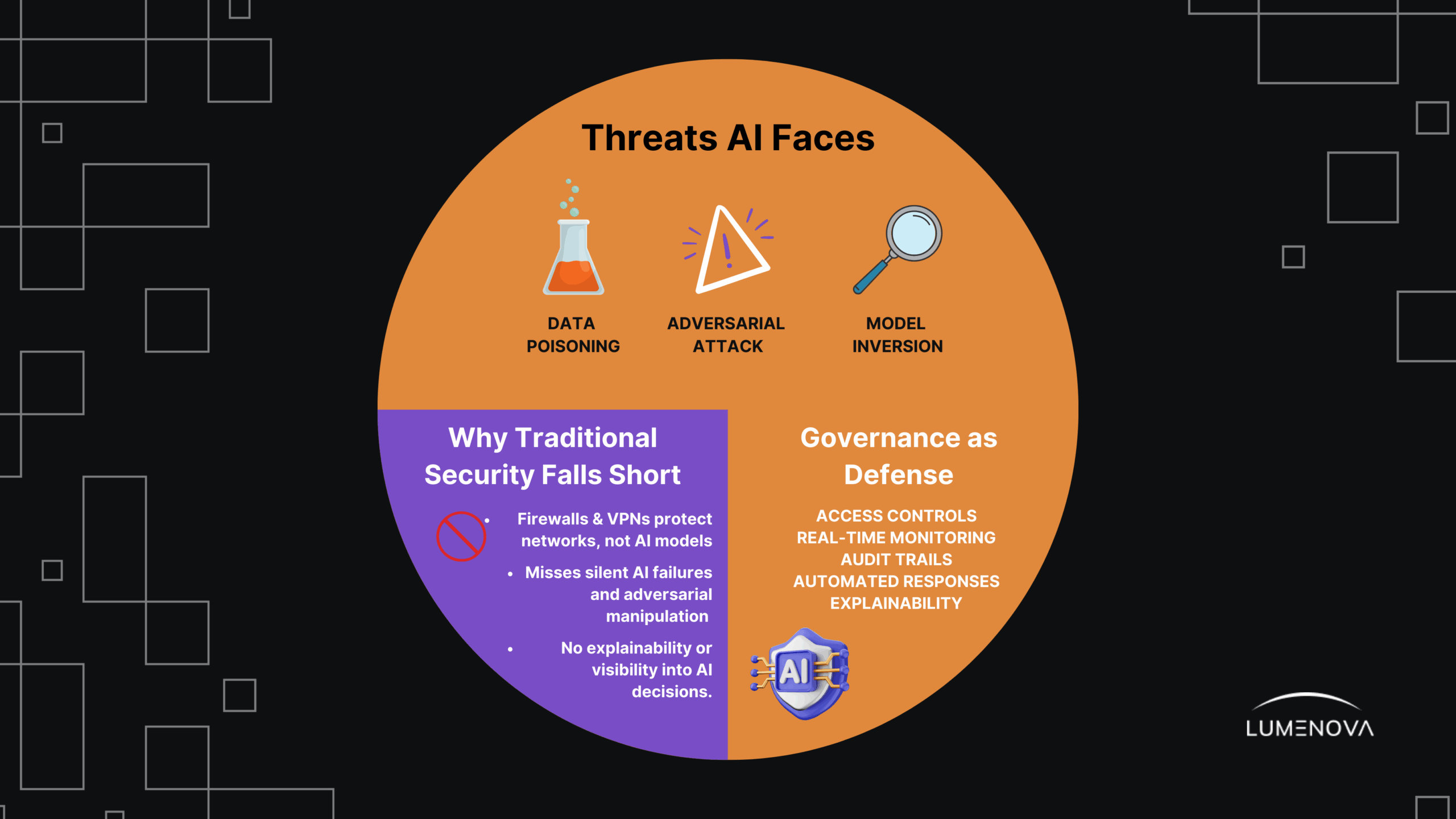

AI systems process vast volumes of data, making them attractive targets for cyber threats. Several attacks can jeopardize system integrity and leak confidential information: data poisoning, where attackers feed false or manipulated information into training data to corrupt AI decisions; adversarial manipulation, where inputs are crafted to intentionally confuse AI models; and model inversion, where sensitive inputs are extracted from AI outputs. Without clear governance, these attacks can go undetected and undermine both security and stakeholder trust.

If you want to explore how advanced threats corrupt AI models and how to defend against them, Lumenova’s article on data poisoning attacks explains how malicious inputs can manipulate training data and lead to real-world harm. These threats are increasingly common, especially in high-stakes sectors like finance and healthcare.

Embedding Security Through Governance

AI governance isn’t just about policies or compliance checkboxes; it’s an active defense layer. Combined with AI-specific security tools, governance platforms enforce strict access controls, provide real-time data and model monitoring, maintain audit logs, detect anomalies, and enable explainability to trace suspicious actions.
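To make the pairing of access controls and audit logs concrete, here is a minimal Python sketch. It is illustrative only and does not represent any particular platform's API: the `POLICY` table, the role and action names, and the `request_access` helper are all hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission policy; a real deployment would load this
# from a governed policy store rather than hard-coding it.
POLICY = {
    "data_scientist": {"read_features"},
    "ml_engineer": {"read_features", "update_model"},
}

# In-memory audit trail for illustration; production logs should be
# append-only and stored outside the application process.
audit_log = []


def request_access(user, role, action):
    """Check the action against the policy and record the attempt either way."""
    allowed = action in POLICY.get(role, set())
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        }
    )
    return allowed


# A data scientist may read features but may not update the model.
read_ok = request_access("alice", "data_scientist", "read_features")
update_ok = request_access("alice", "data_scientist", "update_model")
```

Denied attempts are logged as well as granted ones, so a later review can trace who tried to do what, not only who succeeded.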

AI models often evolve continuously in production, which means security must evolve with them. Static defenses won’t catch silent failures or sophisticated attacks. As explored in Can Your AI Be Hacked? What to Know About AI and Data Security, organizations need dynamic AI governance workflows that can detect threats, respond quickly, and prevent future incidents before they escalate.

Why Traditional Cybersecurity Falls Short for AI

While firewalls, VPNs, and endpoint protection remain essential, they focus on network infrastructure and not on the unique vulnerabilities of AI models and data pipelines. AI systems introduce risks at every stage, from training data ingestion to live decision-making.

That is why a dedicated governance layer is critical. As Lumenova outlines in Understanding AI Security Tools, many existing tools fail to detect silent AI failures, adversarial attacks, or logic corruption. Organizations relying solely on legacy systems are often unaware of where and how their AI can be compromised.

Governance in Action: From Detection to Defense

Effective AI governance systems do more than observe; they enable fast, intelligent response. For example, audit trails can help trace who accessed or modified sensitive datasets. Monitoring tools can flag sudden shifts in model output that signal potential attacks. And when anomalies are confirmed, governance systems can roll back to a known-good version, quarantine affected models, or start human review workflows.
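As a rough sketch of how a monitor might flag a sudden shift in model output and map it to a response, consider the Python example below. It assumes a simple mean-shift check against a baseline window. The function names, thresholds, and sample scores are hypothetical, and real monitoring would typically use more robust drift statistics.

```python
from statistics import mean, stdev


def output_shift_score(baseline, recent):
    """Return how many baseline standard deviations the recent mean has moved."""
    base_sd = stdev(baseline)
    if base_sd == 0:
        raise ValueError("baseline has no variance; cannot score a shift")
    return abs(mean(recent) - mean(baseline)) / base_sd


def choose_response(shift, review_at=2.0, quarantine_at=4.0):
    """Map a shift score to a governance action (thresholds are illustrative)."""
    if shift >= quarantine_at:
        return "quarantine_model"
    if shift >= review_at:
        return "human_review"
    return "no_action"


# Made-up model scores: a stable baseline window and a suspicious recent window.
baseline_scores = [0.48, 0.52, 0.50, 0.49, 0.51, 0.47, 0.53, 0.50]
recent_scores = [0.71, 0.69, 0.74, 0.70]

shift = output_shift_score(baseline_scores, recent_scores)
action = choose_response(shift)
print(action)
```

With these sample values the recent mean sits far outside the baseline range, so the sketch selects quarantine. A smaller shift would route the model to human review instead.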

If you’re evaluating solutions, ‘3 Questions to Ask Before Purchasing an AI Data Governance Solution’ offers practical advice to help organizations prioritize functionality, ease of integration, and long-term resilience. These considerations are especially important in regulated industries where oversight and traceability are non-negotiable.

Strengthening Governance with Lumenova AI

At Lumenova AI, we help enterprises close the security gaps that traditional tools overlook. Our platform enables you to define granular data policies, monitor model and data integrity in real time, trace model lineage for compliance, and automate actions when threats are detected.

With Lumenova’s integrated governance workflows, you gain a continuous feedback loop between security, compliance, and operations. Explainability is built-in, so security teams, data scientists, and business leaders can work together to understand and mitigate risks before they impact customers or compliance.

Governance Is the Best Defense

Preventing AI data breaches is not just about securing infrastructure; it is about proactively managing the entire AI lifecycle. Data governance frameworks give organizations the visibility, controls, and responsiveness needed to keep sensitive data safe and AI systems trustworthy.

As AI becomes more deeply embedded in critical business systems, governance must evolve from a checkbox to a strategic function. If you’re ready to turn AI governance into a true security asset, we are here to help. Book your demo now.