October 1, 2025

6 Steps to Embed Responsible AI Guidelines into Your AI Development Lifecycle


Most companies say they care about responsible AI principles. You’ll see it on their websites, tucked into mission statements, and mentioned on stage at big tech events.

But when it’s time to actually build something (a model, a pipeline, a product), that commitment often fades into the background. Deadlines move fast. Teams prioritize speed. And the idea of “doing it right” turns into something people promise to handle later.

Responsible AI falls short because most teams haven’t seen what it looks like in real life. The blockers aren’t dramatic ethical debates. They’re more basic: unclear goals, fuzzy roles, and a development process that treats governance like an afterthought.

Below, you’ll find six clear steps to help bring fairness, transparency, privacy, and accountability into your everyday workflow. These aren’t vague ideas or box-ticking exercises. They’re practical actions you can use right away.

When you build with these in mind, your models do more than just meet basic standards. They’re easier to explain, easier to improve, and much stronger under pressure (whether you’re talking to users, leadership, or regulators).

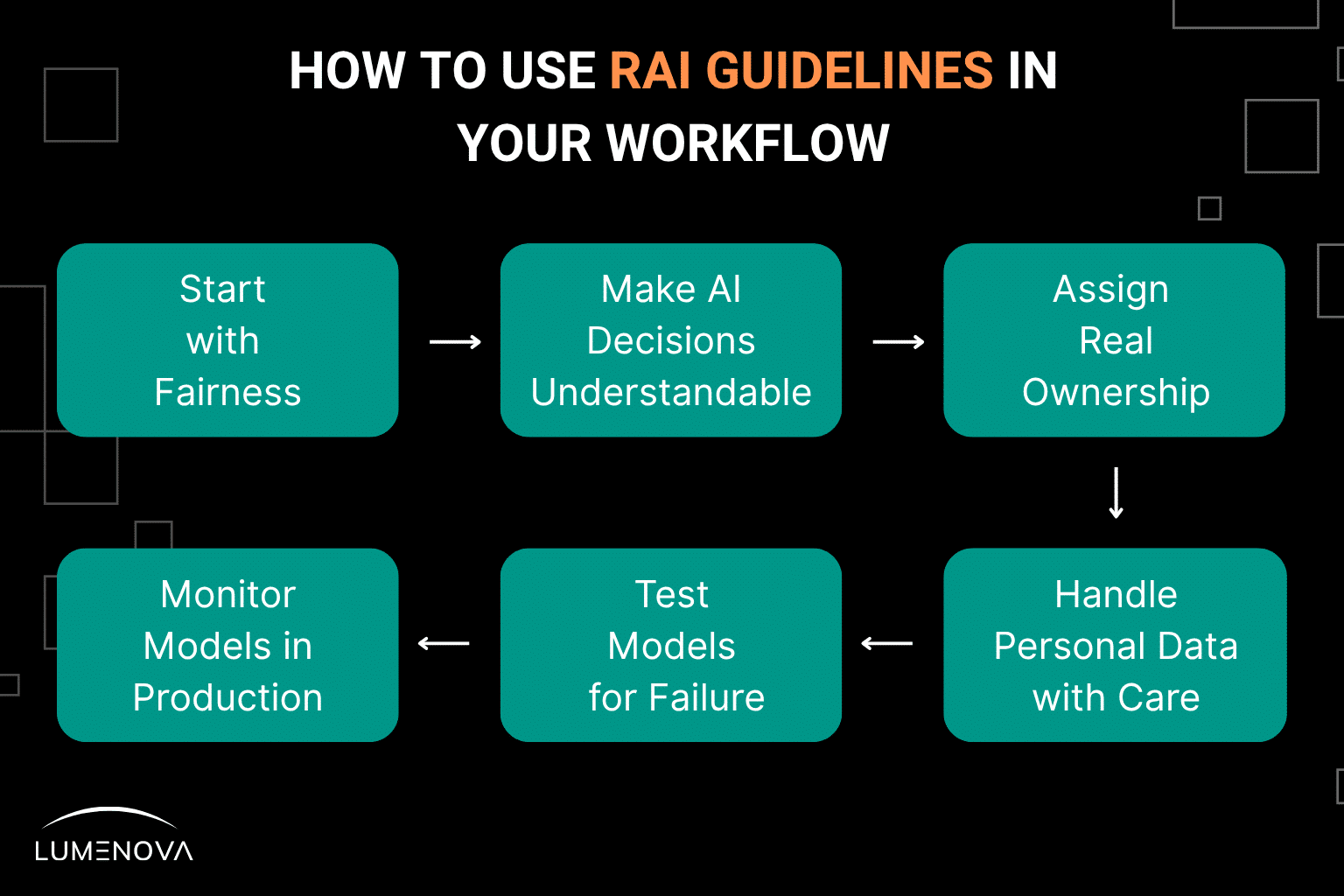

What are the 6 steps to embed responsible AI guidelines into development?

- Define fairness metrics before launch

- Create transparent documentation (e.g., model cards)

- Assign individual ownership to each model

- Enforce data privacy with consent and access controls

- Run robustness tests and prepare incident plans

- Monitor models continuously after deployment

6 Steps to Apply Responsible AI in Your Development Process

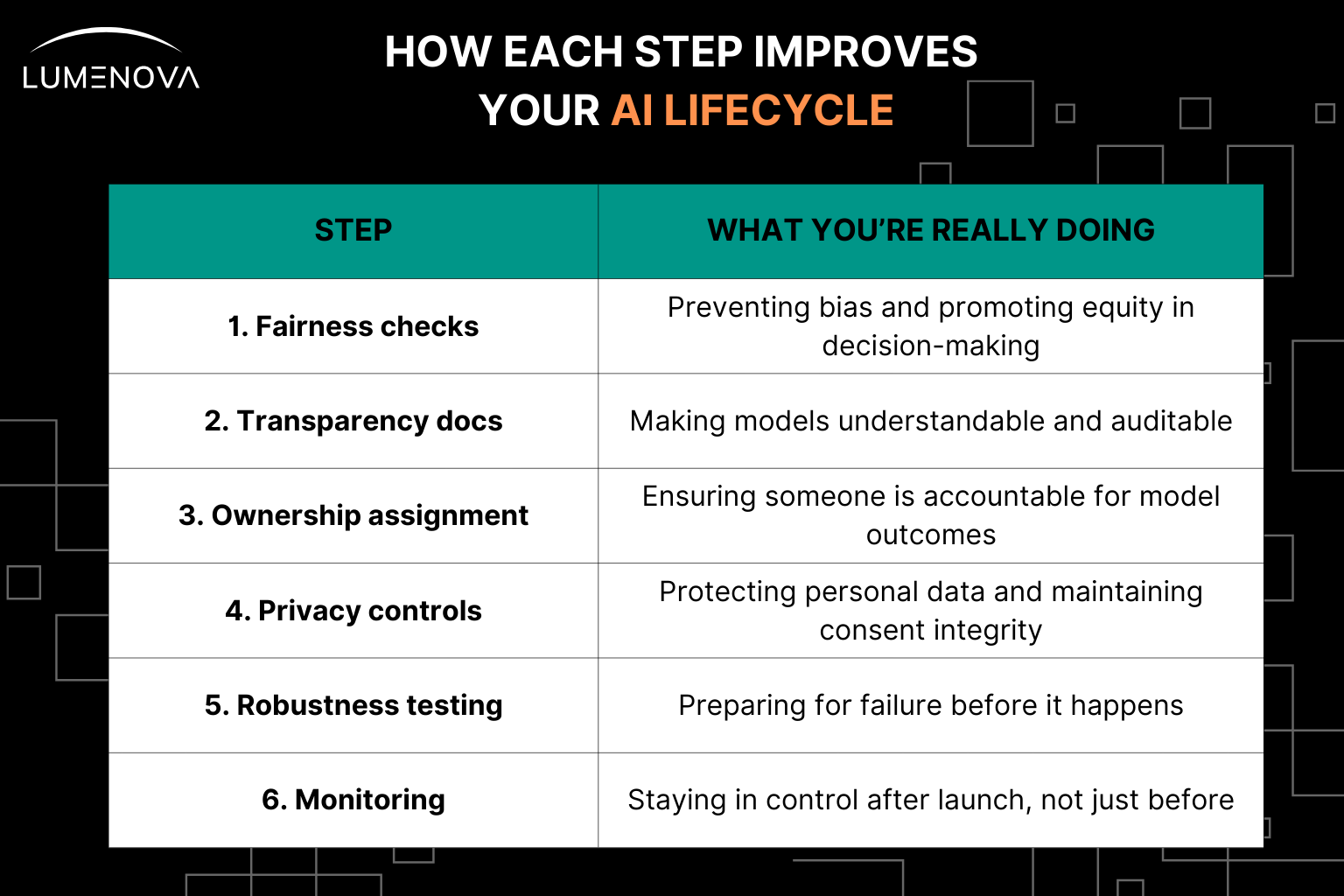

Step 1: Start with Fairness You Can Actually Measure

Imagine your team is about to launch an AI model for credit approvals. The performance looks good. But then someone asks a crucial question: Does this model treat all applicants equally, regardless of gender, race, or age?

You don’t want to rely on assumptions. You want to back your answer with real data.

Fairness doesn’t mean every model will perform identically across all groups. It does mean setting measurable expectations before the model goes live. For example: “False positive rates should not differ by more than 5 percentage points between demographic groups.”

That’s the kind of rule you can actually test for. And you should, because bias can show up in any domain where AI is used, from hiring and lending to law enforcement.

To operationalize fairness:

- Define fairness metrics and thresholds before deployment

- Audit your models regularly (quarterly is a good minimum)

- Document what happens when a fairness check fails: who owns the resolution, and what the next steps are
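A threshold like the 5-point rule above is concrete enough to automate. Here is a minimal Python sketch, assuming binary labels and predictions (1 = positive prediction) and reading “5%” as an absolute gap of 5 percentage points. The function names are hypothetical and not taken from any specific fairness library.

```python
from collections import defaultdict


def false_positive_rates(y_true, y_pred, groups):
    """Per-group false positive rate: FP / (FP + TN), counted over actual negatives only."""
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:  # only actual negatives can turn into false positives
            negatives[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


def check_fpr_parity(y_true, y_pred, groups, max_gap=0.05):
    """Pass if the largest FPR gap between any two groups is at most max_gap.

    max_gap is an absolute difference in rates, so 0.05 means 5 percentage points.
    """
    rates = false_positive_rates(y_true, y_pred, groups)
    if len(rates) < 2:
        raise ValueError("need actual negatives from at least two groups to compare")
    gap = max(rates.values()) - min(rates.values())
    return gap <= max_gap, gap, rates


# Toy data: group A gets one false positive out of four negatives, group B gets none.
y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 0, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5
passed, gap, rates = check_fpr_parity(y_true, y_pred, groups)
print(passed, gap, rates)  # False 0.25 {'A': 0.25, 'B': 0.0}
```

A failing check like this one is exactly where the documented resolution path (owner plus next steps) should kick in.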

According to ITPro, only 28% of companies test their models for bias at all. So if you start here, you’re already ahead of the curve.

Step 2: Make AI Decisions Easy to Understand

Let’s say a customer gets denied a loan. They want to know why. But when your team digs into the model’s output, no one can explain the decision clearly. It just returned a “no.”

That’s not acceptable (not to the customer, not to regulators, and not to your internal teams).

Transparency starts with documentation that stays connected to the model throughout its lifecycle. At a minimum, you should know:

- What data the model was trained on

- What it’s designed to do

- What its known limitations are

- Who reviewed and approved it for use

This is the purpose of a model card. It’s not a formality; it’s your foundation when someone asks, “How does this model work?”
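One way to keep a model card connected to the model is to store it as structured data, so missing sections are caught automatically. The sketch below is a hypothetical layout: the class and field names simply mirror the four questions listed above and are not a formal model card schema.

```python
import json
from dataclasses import asdict, dataclass, field

# Hypothetical list of sections that must be filled in before approval.
REQUIRED_SECTIONS = ("training_data", "intended_use", "known_limitations", "approved_by")


@dataclass
class ModelCard:
    model_name: str
    version: str
    training_data: str = ""  # what data the model was trained on
    intended_use: str = ""  # what it is designed to do
    known_limitations: list[str] = field(default_factory=list)  # what it should not be trusted for
    approved_by: list[str] = field(default_factory=list)  # who reviewed and approved it

    def missing_sections(self) -> list[str]:
        """Names of required sections that are still empty."""
        return [name for name in REQUIRED_SECTIONS if not getattr(self, name)]

    def to_json(self) -> str:
        """Serialize the card so it can be versioned next to the model artifact."""
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="credit-approval",  # hypothetical model
    version="1.2.0",
    training_data="2019-2023 loan applications (hypothetical internal dataset)",
    intended_use="Rank consumer credit applications for human review",
)
print(card.missing_sections())  # ['known_limitations', 'approved_by']
```

A deployment pipeline could refuse to ship any model whose card still reports missing sections.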

Beyond documentation, clear, human-readable explanations are critical. If a model impacts someone’s life, they deserve to understand why that decision was made. Responsible AI guidelines help to make this happen.

Make AI transparency a non-negotiable part of your workflow, just like testing or deployment. And if you operate in regions with regulations like the EU AI Act, robust documentation is imperative. The Act already requires disclosures around purpose, energy use, and human oversight, with more to come.

Step 3: Assign Real Ownership to Every Model

Here’s a simple but surprisingly tough question: Who owns this model?

Not a team. Not a department. A specific person.

Ownership doesn’t just mean keeping the model running. It means taking responsibility for how the model performs, how it impacts people, and how it aligns with regulatory requirements.

Right now, that kind of accountability is rare. A 2024 report in the Financial Times noted that even organizations deploying AI in sensitive domains like audits often fail to track model impact beyond access logs.

To shift this:

- Assign a Responsible AI Owner to every production model (someone who is publicly accountable)

- Log any decisions made by the model that affect people’s rights (e.g., hiring, loans, medical care)

- Require sign-off from Legal, Risk, and Compliance before launching or modifying the model
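These rules are easier to enforce when a deployment gate checks them. The sketch below assumes a hypothetical in-memory registry record; in practice the same logic would live in whatever model registry or CI pipeline you already use. It blocks deployment until a named owner exists and Legal, Risk, and Compliance have all signed off, clears sign-offs after any change, and keeps an append-only log of rights-affecting decisions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical gate: these functions must approve every launch or change.
REQUIRED_SIGNOFFS = {"Legal", "Risk", "Compliance"}


@dataclass
class ModelRecord:
    model_id: str
    owner: str  # a specific, named person, not a team
    signoffs: set[str] = field(default_factory=set)
    decision_log: list[dict] = field(default_factory=list)

    def sign_off(self, function: str) -> None:
        self.signoffs.add(function)

    def record_change(self) -> None:
        """Any modification invalidates earlier approvals, so sign-off must be repeated."""
        self.signoffs.clear()

    def can_deploy(self) -> bool:
        """True only if an owner is named and every required function has signed off."""
        return bool(self.owner.strip()) and REQUIRED_SIGNOFFS <= self.signoffs

    def log_decision(self, subject_id: str, outcome: str, reason: str) -> None:
        """Append a record of a decision that affects a person's rights."""
        self.decision_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_id": self.model_id,
            "owner": self.owner,
            "subject_id": subject_id,
            "outcome": outcome,
            "reason": reason,
        })


record = ModelRecord(model_id="credit-approval-v2", owner="Jane Doe")  # hypothetical names
for team in ("Legal", "Risk", "Compliance"):
    record.sign_off(team)
print(record.can_deploy())  # True
record.record_change()
print(record.can_deploy())  # False until all three sign off again
```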

When no one owns a model, no one feels responsible when it fails. That’s when avoidable risks start slipping through.

Step 4: Handle Personal Data with Care and with Proof

Privacy isn’t just a legal requirement. It’s a trust signal. And if you get it wrong, the damage is fast and lasting.

If your AI systems use personal data, you need two things at a minimum:

- Documented consent to use that data

- Technical controls that restrict access to only those who need it

Too often, organizations approve datasets without tracking who gave permission or what exactly was included. Developers frequently have access to far more than necessary, including sensitive fields like health data or income.

To build real safeguards:

- Don’t train models on personal data unless you have traceable, auditable consent

- Use de-identification or anonymization techniques wherever possible

- Implement role-based access so sensitive data is only visible to the right people
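Here is a minimal sketch of these three safeguards working together. It assumes records arrive as dictionaries, consent is stored as a hypothetical mapping from subject ID to consent record ID, and access rules are a simple role-to-field table. Note that the salted SHA-256 hash is pseudonymization, not anonymization: anyone holding the salt and a candidate ID can re-link a record, so it reduces exposure rather than eliminating it.

```python
import hashlib

# Hypothetical access policy: the fields each role is allowed to see.
ROLE_FIELDS = {
    "data_scientist": {"subject_key", "age_band", "loan_amount"},
    "privacy_officer": {"subject_key", "age_band", "loan_amount", "income", "consent_id"},
}
DIRECT_IDENTIFIERS = {"subject_id", "name", "email"}


def pseudonymize(subject_id: str, salt: str) -> str:
    """Salted SHA-256 of the raw ID. Pseudonymization, not true anonymization."""
    return hashlib.sha256((salt + subject_id).encode("utf-8")).hexdigest()


def prepare_training_rows(rows, consent_records, salt):
    """Keep only rows with traceable consent, strip direct identifiers, pseudonymize the ID."""
    prepared = []
    for row in rows:
        consent_id = consent_records.get(row["subject_id"])
        if consent_id is None:
            continue  # no recorded consent, so this person's data is not used
        clean = {k: v for k, v in row.items() if k not in DIRECT_IDENTIFIERS}
        clean["subject_key"] = pseudonymize(row["subject_id"], salt)
        clean["consent_id"] = consent_id  # audit trail back to the consent record
        prepared.append(clean)
    return prepared


def view_for_role(row, role):
    """Return only the fields this role may see; unknown roles see nothing."""
    allowed = ROLE_FIELDS.get(role, set())
    return {k: v for k, v in row.items() if k in allowed}


rows = [
    {"subject_id": "u1", "name": "Ana", "email": "ana@example.com",
     "age_band": "30-39", "income": 52000, "loan_amount": 10000},
    {"subject_id": "u2", "name": "Ben", "email": "ben@example.com",
     "age_band": "40-49", "income": 61000, "loan_amount": 15000},
]
consent_records = {"u1": "consent-001"}  # only u1 has a recorded consent
training = prepare_training_rows(rows, consent_records, salt="rotate-me")  # keep the real salt secret
print(len(training), sorted(view_for_role(training[0], "data_scientist")))
# 1 ['age_band', 'loan_amount', 'subject_key']
```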

People are increasingly skeptical about how their data is used in AI. Surveys show growing discomfort, especially when they feel uninformed or powerless. By taking privacy seriously, you earn trust and reduce exposure.

Step 5: Test Models Like You Expect Them to Fail

Plenty of models look great during testing (until something changes).

Maybe the data shifts. Maybe a new use case emerges. Maybe a bug that wasn’t caught starts to affect decisions. The point is, you can’t assume that post-deployment performance will stay consistent.

This is where robustness testing and incident response planning come in.

Before launch, ask your team: What happens when this model behaves unpredictably?

Build in processes to:

- Run stress tests and edge case simulations

- Define what constitutes a critical failure

- Develop an incident response plan with clear roles: Who gets notified? Who can shut the model down? Can it be rolled back quickly?
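One way to turn stress tests and edge cases into a repeatable check is sketched below. It assumes a model exposed as a function that returns 0 or 1. The perturbation size, trial count, and the 2% flip-rate limit that defines a “critical failure” are hypothetical values to tune for your own use case, and `toy_predict` is a stand-in for a real model.

```python
import random

MAX_FLIP_RATE = 0.02  # hypothetical limit: above this, the model counts as critically unstable


def flip_rate(predict, inputs, noise=0.05, trials=20, seed=0):
    """Share of perturbed copies whose prediction differs from the unperturbed input.

    Each numeric feature is scaled by a random factor in [1 - noise, 1 + noise].
    """
    rng = random.Random(seed)
    flips = total = 0
    for x in inputs:
        baseline = predict(x)
        for _ in range(trials):
            perturbed = [v * (1 + rng.uniform(-noise, noise)) for v in x]
            flips += predict(perturbed) != baseline
            total += 1
    return flips / total


def edge_case_failures(predict, cases):
    """Edge cases that raise an exception or return something other than 0 or 1."""
    failures = []
    for case in cases:
        try:
            out = predict(case)
        except Exception as exc:  # any crash on an edge case counts as a failure
            failures.append((case, repr(exc)))
            continue
        if out not in (0, 1):
            failures.append((case, f"unexpected output {out!r}"))
    return failures


def is_critical_failure(rate, failures):
    """Hypothetical definition of a critical failure: unstable outputs or any edge-case failure."""
    return rate > MAX_FLIP_RATE or bool(failures)


def toy_predict(features):
    """Stand-in model: approve (1) when the feature sum exceeds 1.0."""
    return int(sum(features) > 1.0)


rate = flip_rate(toy_predict, [[0.1, 0.2], [2.0, 3.0], [0.5, 0.5]])
failures = edge_case_failures(toy_predict, [[], [1e9, 1e9], None])
print(rate, failures, is_critical_failure(rate, failures))
```

Here the input sitting right on the decision boundary flips often under tiny perturbations, and the `None` case crashes, so the run would trigger the incident plan.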

Take inspiration from Sasha Cadariu, our AI Researcher and Strategy Leader. In the AI Experiments section, his work probes the boundaries of frontier models, using complex prompts to evaluate reasoning depth, emotional nuance, and perceptual acuity, and uncovering both the emergence and disruption of patterns in AI.

Step 6: Monitor Models Like They’re in Production (Because They Are)

AI models drift. They degrade. Small issues become major problems over time.

That’s why post-launch monitoring matters as much as pre-launch testing.

Stay in control with:

- Drift detection for input data and outputs

- Ongoing fairness and accuracy monitoring

- Alerting and routing for issues that affect users or compliance
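Drift detection can start with a simple statistic per feature. The sketch below uses the Population Stability Index (PSI), one common choice that the steps above don’t prescribe, with widely used rule-of-thumb cut-offs of 0.1 (warn) and 0.25 (alert). Treat those thresholds as assumptions, and note this is a generic illustration, not how any particular monitoring platform computes drift.

```python
import math


def psi(reference, live, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature, binned on the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid dividing by zero for a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_status(score, warn=0.1, alert=0.25):
    """Map a PSI score to an action using rule-of-thumb cut-offs (assumed, not a standard)."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "ok"


reference = [i / 100 for i in range(100)]  # training-time distribution
live = [0.9 + i / 1000 for i in range(100)]  # production inputs bunched at the top of the range
print(drift_status(psi(reference, reference)), drift_status(psi(reference, live)))  # ok alert
```

Running the same check on model outputs, and on the fairness metric from Step 1, covers the other two monitoring items.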

Lumenova AI makes this part easy. Our RAI platform gives you real-time visibility into model behavior with built-in drift detection, fairness and accuracy monitoring, and automated alerting for anything that could affect users or regulatory expectations.

Monitoring isn’t a task. It’s an operational mindset.

What These Six Steps Really Do

When you build these six steps into your development process, you’re not just “doing responsible AI.” You’re creating systems that are safer, more explainable, and easier to defend.

Final Thoughts: Responsible AI Starts with Habits, Not Headlines

You don’t need a 100-page responsible AI policy to do this right. What you need are stronger habits, better visibility, and tools that help your teams make the right decisions without slowing them down. Responsible AI is not a barrier. It is a safeguard. It makes your systems more resilient, more transparent, and more capable of standing up to long-term scrutiny.

Book a demo to see how we help organizations embed accountability into every model from initial design to production deployment.

Questions to Take Back to Your Team

- Which of these six steps is already in place at your organization, and which still needs work?

- Who owns your production AI models, and how is that ownership recorded and communicated?

- How are you tracking fairness, bias, and performance issues in already live models?