January 29, 2026

The ROI of AI Model Validation and Safety Platforms: More Than Risk Reduction

AI model validation and safety platforms are often positioned as cost centers. They are funded under compliance, justified by regulation, and evaluated primarily on how much risk they help avoid. That framing understates their impact.

In practice, these platforms change how quickly AI reaches production, how reliably it performs at scale, and how confidently leaders approve its use. The return shows up not just in avoided downside, but in measurable gains across speed, efficiency, and adoption.

Faster Time to Production, Measured in Weeks, Not Narratives

According to a survey of enterprise teams, 75% of organizations working without streamlined validation and governance processes take anywhere from a few months to more than a year to bring machine learning models into production, largely because of fragmented workflows and the lack of standardized deployment pipelines. In similar contexts, centralized validation and automated governance solutions have been shown to reduce deployment timelines by 30–50%, chiefly by automating evidence collection and standardizing approval workflows.

Instead of recreating documentation for every stakeholder, validation artifacts are generated continuously as models evolve. Risk, legal, and compliance teams review the same live data, rather than requesting separate reports late in the process.

The result is faster approvals without relaxing standards, because validation is embedded rather than retrofitted.

Preventing Incidents That Quietly Drain Value

Most AI failures do not start as major incidents. They begin as small blind spots: untested edge cases, data drift, or model behavior that changes once exposed to real-world conditions.

In financial services, AI-driven fraud detection systems paired with proactive monitoring have demonstrated a significant impact. Industry research shows that modern, real-time AI fraud platforms can reduce fraud losses by 40 to 60% compared to traditional rule-based systems, largely because model weaknesses and emerging patterns are detected early, before exposure scales.

This is also where external validation becomes critical. Internal teams are often too close to the model to see certain risks clearly, especially in high-stakes or regulated environments. An independent validation layer helps surface blind spots that internal testing may miss.

We explore this dynamic in more depth in our article Avoiding Costly Mistakes: How External Validation of AI Models Minimizes AI Risk Exposure, which looks at how external validation strengthens trust, accountability, and resilience in production AI systems.

Operational Efficiency That Compounds Over Time

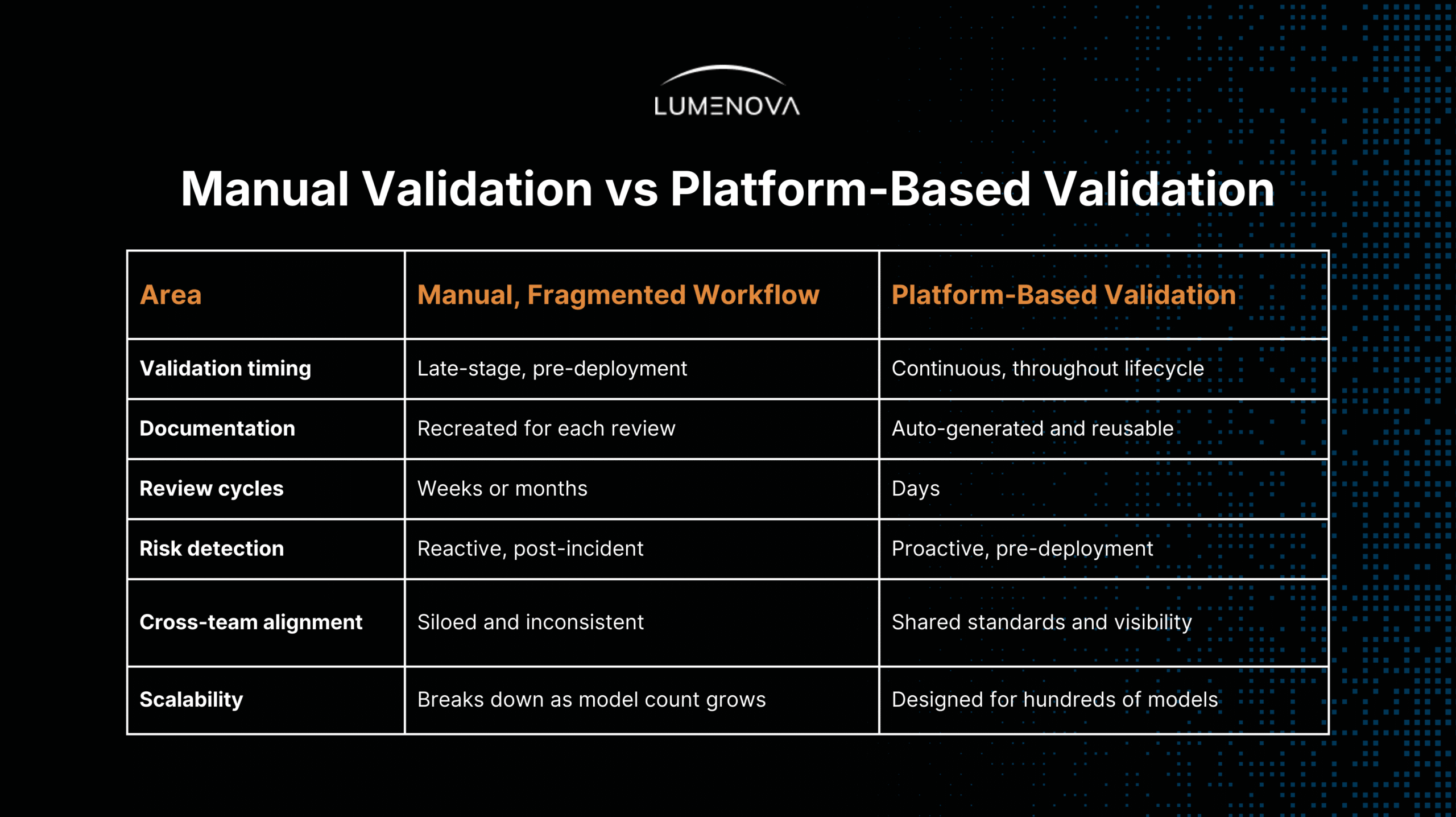

Without a platform, validation work is duplicated across teams. One group builds bias checks, another maintains explainability templates, and a third creates its own approval checklist. Each effort is valid on its own, but together they are inefficient.

Organizations that move from manual, team-specific model validation to centralized, automated platforms consistently report less validation effort and shorter review cycles, driven by reusable checks, standardized documentation, and shared workflows. Industry research on MLOps maturity points the same way: compared with fragmented, manual approaches, teams that adopt automation and standardization deploy models faster, carry less operational overhead, and stop duplicating validation work across functions.

This efficiency compounds as AI portfolios grow. What feels manageable at ten models becomes unsustainable at one hundred without shared infrastructure.
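To make the idea of reusable checks more concrete, here is a minimal Python sketch of a shared check registry, assuming model metrics have already been computed and are passed in as a dictionary. The names (`register_check`, `run_all_checks`, `ValidationReport`), the metric keys, and the thresholds are hypothetical and purely illustrative; they are not Lumenova AI's API or any specific product's. The point is structural: a check is written once, registered centrally, and every team's model runs against the same set.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical shared registry: checks are defined once and reused by every team.
CHECKS: Dict[str, Callable[[dict], bool]] = {}


def register_check(name: str):
    """Decorator that adds a check function to the shared registry."""
    def wrapper(fn: Callable[[dict], bool]) -> Callable[[dict], bool]:
        CHECKS[name] = fn
        return fn
    return wrapper


@register_check("min_accuracy")
def min_accuracy(metrics: dict) -> bool:
    # Illustrative threshold; in practice this would come from policy.
    return metrics["accuracy"] >= 0.90


@register_check("parity_gap")
def parity_gap(metrics: dict) -> bool:
    # Simple bias check: gap in positive-prediction rates between two groups.
    return abs(metrics["positive_rate_a"] - metrics["positive_rate_b"]) <= 0.05


@dataclass
class ValidationReport:
    model_id: str
    results: Dict[str, bool]

    @property
    def passed(self) -> bool:
        # A model passes only if every registered check passes.
        return all(self.results.values())


def run_all_checks(model_id: str, metrics: dict) -> ValidationReport:
    """Run every registered check and collect the results in one report."""
    return ValidationReport(model_id, {name: check(metrics) for name, check in CHECKS.items()})


# Example: any team's model goes through the same checks.
report = run_all_checks(
    "fraud-detector-v3",
    {"accuracy": 0.93, "positive_rate_a": 0.12, "positive_rate_b": 0.15},
)
print(report.results)  # {'min_accuracy': True, 'parity_gap': True}
print(report.passed)   # True
```

Because a new check is registered once and then runs for every model, teams no longer maintain parallel copies of the same logic, and the resulting report is a single artifact that risk, legal, and compliance reviewers can all read.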

Curious about the competitive edge of continuous AI model evaluation? Then head over to our article, which outlines why ongoing validation is essential for scaling AI safely and efficiently across the enterprise.

Executive Confidence Is an ROI Multiplier

One of the most underestimated returns of AI validation platforms shows up in leadership conversations.

When executives cannot clearly see how models behave in the real world, who owns them, or how risks are being managed, decisions slow down. Not because leaders are opposed to AI, but because approving what feels opaque carries personal and organizational risk. As a result, promising, high-impact use cases often stall, and AI remains stuck in pilot mode.

Centralized oversight changes that dynamic. With clear audit trails, live performance signals, and real-time alerts, leaders are no longer relying on assumptions or after-the-fact reports. They can see what is running, how it is behaving, and where controls are actively in place.

That level of visibility does not eliminate risk, but it makes it legible. And once risk becomes something leaders can understand and govern, AI stops feeling like a leap of faith and starts feeling like a decision they can stand behind.

Scaling Governance Without Slowing Innovation

AI programs rarely fail at the pilot stage; they fail when scaling introduces inconsistency.

Different teams apply different standards, models proliferate without clear ownership, and oversight becomes reactive.

That is why a platform approach matters: it enables consistent governance across business units and geographies. Policies are enforced uniformly, validation travels with the model, and scaling does not require reinventing governance every time AI expands into a new domain.

This is where long-term ROI becomes structural rather than incremental.
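One way to picture uniform enforcement is policy-as-code: a single rule set evaluated against every model record, whichever business unit or geography it belongs to. The Python sketch below is a hypothetical illustration; the `ModelRecord` fields, the two risk tiers, and the required evidence types are assumptions chosen for the example, not a prescribed standard or a product schema. Attaching evidence directly to the model record is one simple way for validation to travel with the model.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical organization-wide policy: required evidence depends only on the
# risk tier, so the same rules apply in every business unit and geography.
REQUIRED_EVIDENCE = {
    "low": {"performance_report"},
    "high": {"performance_report", "bias_assessment", "external_review"},
}


@dataclass
class ModelRecord:
    name: str
    owner: Optional[str]
    business_unit: str
    risk_tier: str  # "low" or "high" in this sketch
    # Validation artifacts attached to the model record itself.
    evidence: List[str] = field(default_factory=list)


def policy_violations(model: ModelRecord) -> List[str]:
    """Return every policy violation for a model; an empty list means compliant."""
    violations = []
    if not model.owner:
        violations.append("missing owner")
    missing = REQUIRED_EVIDENCE[model.risk_tier] - set(model.evidence)
    violations.extend(f"missing evidence: {item}" for item in sorted(missing))
    return violations


# Two models from different business units, checked against the same policy.
models = [
    ModelRecord("churn-eu", "ana", "EU retail", "low", ["performance_report"]),
    ModelRecord("credit-us", None, "US lending", "high", ["performance_report"]),
]
for m in models:
    print(m.name, m.business_unit, policy_violations(m))
```

Here the high-risk lending model is flagged for a missing owner and missing evidence, while the low-risk retail model passes; neither unit needed its own rulebook.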

Acknowledging the Real Challenges

No platform delivers value if it cannot integrate with real-world environments. Data silos, legacy systems, and fragmented tooling are common barriers. Without observability, even the best validation framework becomes blind once models are deployed.

This is why platforms like Lumenova AI look beyond static checks. With integrated observability and real-time alerts, validation does not stop at deployment. Model behavior is continuously monitored across data shifts, usage patterns, and emerging risks. Issues surface early and with context, rather than as late surprises that tend to cost more to fix down the road.

The goal is not to achieve perfect foresight, but to have faster detection and clearer response when conditions change.
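As an illustration of how a data shift can trigger an alert, the following Python sketch computes the Population Stability Index (PSI), a common measure of how far a live feature distribution has moved from its training-time baseline. It assumes equal-width bins derived from the baseline sample and uses a 0.2 alert threshold, which is a widely cited rule of thumb rather than a universal standard. It is not how Lumenova AI or any particular platform implements monitoring; production systems typically track many features and metrics at once.

```python
import bisect
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], cuts: List[float]) -> List[float]:
    """Share of values in each bin; the outermost bins are open-ended."""
    counts = [0] * (len(cuts) + 1)
    for v in values:
        counts[bisect.bisect_right(cuts, v)] += 1
    eps = 1e-6  # floor so empty bins do not produce log(0)
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], live: Sequence[float], n_bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and live data."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins
    cuts = [lo + width * i for i in range(1, n_bins)]  # equal-width cut points from the baseline
    expected = bin_shares(baseline, cuts)
    actual = bin_shares(live, cuts)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_alert(baseline: Sequence[float], live: Sequence[float], threshold: float = 0.2) -> bool:
    # 0.2 is a commonly cited rule of thumb, not a universal standard.
    return psi(baseline, live) > threshold


baseline = [i / 100 for i in range(100)]       # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # live values concentrated in the upper half
print(round(psi(baseline, baseline), 3))  # 0.0
print(drift_alert(baseline, shifted))     # True
```

The threshold, bin count, and baseline window are tuning choices that depend on the feature and on how costly false alarms are compared with missed shifts.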

Where Lumenova AI Fits In

Lumenova AI approaches AI model validation and safety as core infrastructure for modern AI programs. By automating validation, embedding risk checks early, and providing continuous observability, the platform helps organizations deploy AI faster without sacrificing control. Teams spend less time negotiating approvals and more time delivering value, while leaders gain the visibility needed to scale AI with confidence.

If your AI initiatives are growing and governance is starting to slow progress, it may be time to move beyond manual validation.

Explore how Lumenova AI enables safer, faster, and more scalable AI deployment across the enterprise. Request a demo today.