October 16, 2025

Top 7 AI Adoption Challenges Enterprises Face in 2025 (and How to Overcome Them)


Even though the US has crossed $40B in enterprise spend on GenAI, MIT reports that 95% of companies are seeing no real return. None.

So what’s really going wrong?

The gap is in the execution. More specifically, in how teams adopt, govern, and scale AI across the enterprise.

In many cases, companies drop AI onto broken workflows, ship models before thinking through how they will be used, automate without understanding the risk, and celebrate speed over results. Fast looks impressive. Results matter more.

The consequences follow naturally. AI adoption stalls. Compliance drags. Models gather dust. ROI stays invisible. Enterprise scaling turns into chaos. What starts as a breakthrough quickly becomes a headache.

That’s why we’re highlighting the main blockers slowing teams down in 2025 (even when everything seems to be working). We’ll also share practical ways to overcome them and avoid the common traps that keep AI pilots from delivering real business impact.

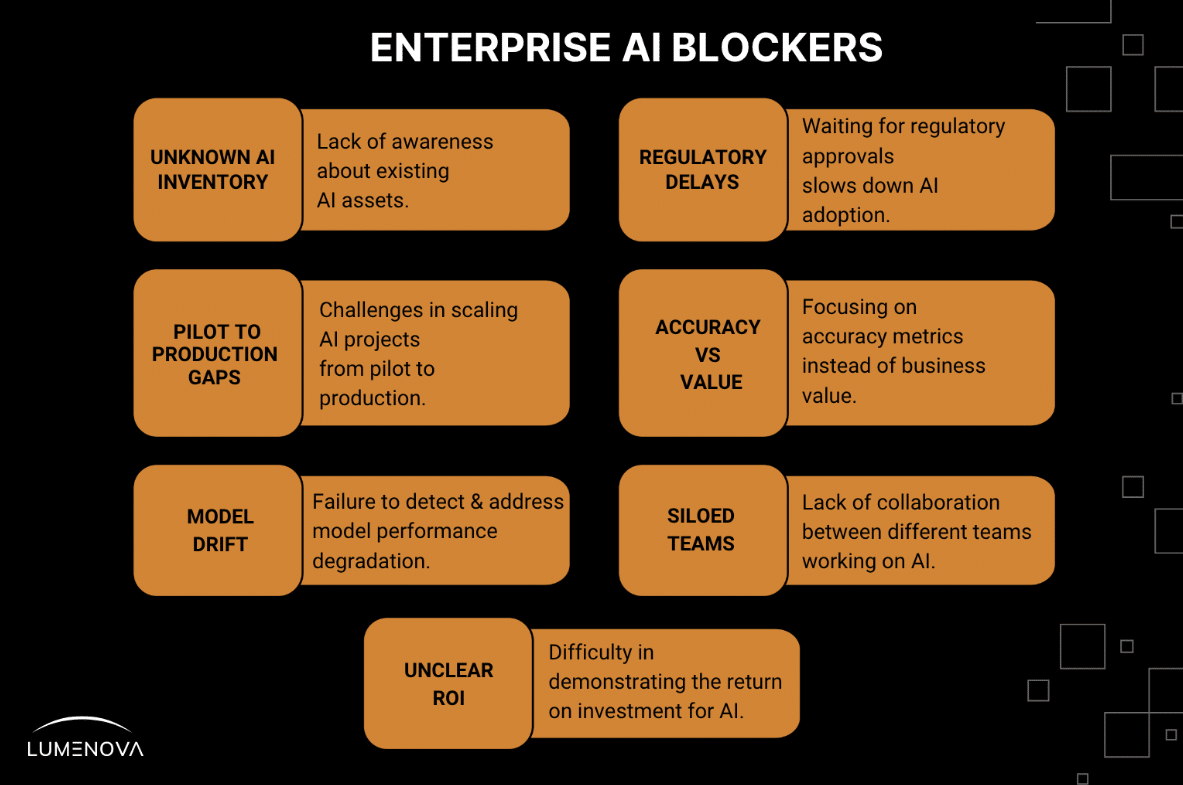

7 Biggest Enterprise AI Blockers

- Unknown AI inventory

- Waiting on regulators

- Pilot to production gaps

- Measuring accuracy, not value

- Model drift goes unnoticed

- Teams working in silos

- ROI isn’t clear

1. You Don’t Know Where Your AI Is (Or What It’s Doing)

This one sounds basic, but it’s everywhere: Most enterprises don’t know how many models they’ve got running, let alone who owns them or what they’re doing.

Some started as shadow IT projects. Others came from vendor integrations. A few might’ve been built in-house for narrow use cases. Nobody’s keeping track. And that’s a problem.

Because when you don’t have a handle on your AI inventory (models, datasets, workflows, endpoints), you can’t manage risk, performance, or compliance. You’re flying blind.

Want to fix it?

Start by mapping what’s already live. Then build an AI registry that records, for each model:

- What model it is (open-source, vendor, custom?)

- Who owns it (tech team, ops, marketing?)

- What it touches (data types, customers, processes)

- Whether it’s been assessed for risk (spoiler: probably not)
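A registry doesn’t need special tooling to get started. Here is a minimal Python sketch that assumes a hypothetical in-memory schema. `RegistryEntry`, `Origin`, and the helper functions are our own illustrative names, not any product’s API. It captures the four questions above and surfaces the two gaps that matter most: models nobody has risk-assessed, and models with no owner.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    OPEN_SOURCE = "open-source"
    VENDOR = "vendor"
    CUSTOM = "custom"


@dataclass
class RegistryEntry:
    """One row in a hypothetical AI registry."""
    name: str
    origin: Origin              # what model it is
    owner: str                  # accountable team ("" if nobody claims it)
    touches: list               # data types, customers, processes
    risk_assessed: bool = False


def unassessed(registry: list) -> list:
    """Names of models that have never been risk-assessed."""
    return [e.name for e in registry if not e.risk_assessed]


def unowned(registry: list) -> list:
    """Names of models with no accountable owner."""
    return [e.name for e in registry if not e.owner.strip()]


registry = [
    RegistryEntry("support-chatbot", Origin.VENDOR, "customer-ops", ["customer PII", "support tickets"]),
    RegistryEntry("churn-model", Origin.CUSTOM, "data-science", ["billing history"], risk_assessed=True),
    RegistryEntry("doc-summarizer", Origin.OPEN_SOURCE, "", ["internal documents"]),
]

print(unassessed(registry))  # ['support-chatbot', 'doc-summarizer']
print(unowned(registry))     # ['doc-summarizer']
```

A spreadsheet works just as well at first. The point is that every model has a row and those two lists are reviewed regularly.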

Learn how embedding responsible AI guidelines into your development lifecycle can improve fairness, transparency, and accountability across your AI projects.

2. You’re Waiting on Regulators Instead of Getting Ready for Them

The EU AI Act is here. In the U.S., states are developing their own AI legislation, with more to come. But too many enterprises are still in “wait and see” mode, assuming they can deal with governance after launch. You can’t. Once the rules apply to you, it’s too late to start preparing.

If your model is already in production and you don’t have explainability, documentation, testing procedures, and risk logs, you’re going to have to unwind everything later. That’s expensive. And public.

You don’t need a 400-page framework.

You do need:

- Clear model ownership

- Documented training data lineage

- Audit trails

- Usage guardrails

- A way to explain how the model works, in plain language

It’s not exciting. But it’ll keep your program alive.
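One common pattern for audit trails is a hash-chained, append-only log. Each entry stores the hash of the entry before it, so quietly editing history breaks verification. The minimal Python sketch below uses a hypothetical `AuditTrail` class that keeps entries in memory. A production system would also need durable, access-controlled storage, because a hash chain detects tampering but doesn’t prevent it.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """Stable SHA-256 of an entry (keys sorted so the hash is reproducible)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log; each entry links to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, model: str, actor: str, action: str, detail: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model": model,
            "actor": actor,
            "action": action,
            "detail": detail,
            "prev_hash": prev_hash,
        }
        body["hash"] = _digest(body)  # computed before the "hash" key exists
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry makes this False."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("churn-model", "alice", "approve", "Passed bias review")
trail.record("churn-model", "bob", "deploy", "v1.2 to production")
print(trail.verify())  # True
trail.entries[0]["detail"] = "Skipped bias review"  # simulate tampering
print(trail.verify())  # False
```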

Waiting for regulations to arrive leaves companies unprepared for compliance. One proactive step is to explore the NIST AI Risk Management Framework, a voluntary framework that helps organizations reduce AI risk and align with global standards.

3. Pilots Don’t Equal Progress

We talk to a lot of enterprise leaders. The pattern is the same:

“We’ve got a few GenAI pilots running.”

“They’re promising!”

“But they haven’t scaled yet.”

“We’re still figuring out the production plan.”

Translation: It’s stuck.

Why? Because pilots happen in controlled environments with happy paths and a handful of users. Real deployment means security reviews, change management, training, systems integration, compliance, QA… you get it.

AI doesn’t fail because it’s bad tech. It fails because the organization doesn’t know how to operationalize it.

That’s why this is the step where you need AI lifecycle governance: defined stages, clear checkpoints, and accountability at each one, from concept to production to ongoing monitoring.
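Lifecycle governance can be expressed as a simple stage gate: a model advances only when every checkpoint for the next stage has a named approver. The Python sketch below is illustrative only. The stage names, check names, and `ModelLifecycle` class are hypothetical placeholders that you would adapt to your own policy.

```python
from dataclasses import dataclass, field

# Hypothetical stages and checkpoints; replace with your own governance policy.
STAGES = ["concept", "development", "validation", "production", "monitoring"]
REQUIRED_CHECKS = {
    "development": {"use_case_approved", "owner_assigned"},
    "validation": {"data_lineage_documented"},
    "production": {"security_review", "risk_assessment", "explainability_notes"},
    "monitoring": {"drift_alerts_configured"},
}


@dataclass
class ModelLifecycle:
    name: str
    stage: str = "concept"
    signoffs: dict = field(default_factory=dict)  # check name -> approver

    def sign_off(self, check: str, approver: str) -> None:
        """Record who is accountable for a checkpoint."""
        self.signoffs[check] = approver

    def next_stage(self):
        idx = STAGES.index(self.stage)
        return STAGES[idx + 1] if idx + 1 < len(STAGES) else None

    def missing_checks(self) -> list:
        """Checkpoints still unsigned for the next stage (sorted for stable output)."""
        nxt = self.next_stage()
        if nxt is None:
            return []
        return sorted(REQUIRED_CHECKS.get(nxt, set()) - set(self.signoffs))

    def promote(self) -> str:
        """Advance one stage only if every required checkpoint is signed off."""
        nxt = self.next_stage()
        if nxt is None:
            raise ValueError(f"{self.name} is already in the final stage")
        missing = self.missing_checks()
        if missing:
            raise PermissionError(f"{self.name} cannot enter {nxt}: missing {missing}")
        self.stage = nxt
        return nxt


bot = ModelLifecycle("support-chatbot")
bot.sign_off("use_case_approved", "head-of-support")
bot.sign_off("owner_assigned", "cto")
print(bot.promote())         # development
print(bot.missing_checks())  # ['data_lineage_documented']
```

A gate that raises an error, rather than quietly logging a warning, is a deliberate design choice. It makes skipping a checkpoint an explicit decision that somebody has to own.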

4. Nobody Knows What “Good” Looks Like

You’re probably not measuring success, just accuracy. If your AI model did a better job today than yesterday, would you know?

Most companies don’t have a handle on AI performance outside of model accuracy. But business impact? Operational value? Compliance risk? That’s foggy. And that’s a problem.

We’ve seen companies deploy AI for underwriting, sales prioritization, even internal automation, and then fail to measure whether it’s actually helping.

Want to know if it’s working? Ask:

- Are decisions being made faster and more accurately?

- Are humans trusting the model, or second-guessing it?

- What happens when the model is wrong? Who catches it?

- Would anyone notice if we turned it off?

Without those answers, you’re building a black box no one can manage.
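Once AI-assisted decisions are logged, several of these questions become measurable. The sketch below assumes a hypothetical log schema (`Decision`) that records what the model recommended, what the human ultimately decided, how long the decision took, and whether a later review found the model wrong. From that log it computes four numbers: an override rate (are humans second-guessing the model?), a speedup against a pre-AI baseline, the model’s error rate, and the share of those errors that a human caught. “Would anyone notice if we turned it off?” still requires a conversation with the business.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Decision:
    """One logged AI-assisted decision (hypothetical schema)."""
    model_said: str           # the model's recommendation
    human_final: str          # what the reviewer actually decided
    seconds_to_decide: float
    model_wrong: bool         # set by a later outcome review
    caught: bool = False      # True if a human stopped a wrong recommendation


def value_report(decisions: list, baseline_seconds: float) -> dict:
    """Business-facing metrics from a decision log, compared with a pre-AI baseline."""
    n = len(decisions)
    overrides = sum(d.model_said != d.human_final for d in decisions)
    wrong = [d for d in decisions if d.model_wrong]
    return {
        "override_rate": overrides / n,
        "median_speedup": baseline_seconds / median(d.seconds_to_decide for d in decisions),
        "model_error_rate": len(wrong) / n,
        # share of the model's mistakes that a human caught before they took effect
        "errors_caught_rate": sum(d.caught for d in wrong) / len(wrong) if wrong else 1.0,
    }


log = [
    Decision("approve", "approve", 40, model_wrong=False),
    Decision("approve", "deny", 95, model_wrong=True, caught=True),
    Decision("deny", "deny", 30, model_wrong=False),
    Decision("approve", "approve", 50, model_wrong=True),
]
report = value_report(log, baseline_seconds=120)
print(report)
```

In this toy log, one in four recommendations was overridden and decisions ran about 2.7x faster than the 120-second baseline. Only half of the model’s mistakes were caught, and that is the number a risk team would chase.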

5. Your Models Are Drifting

AI models decay. It’s not dramatic, just slow and subtle.

A few months pass, and suddenly, predictions get weirder. The context shifts. The data changes. The model doesn’t know. You don’t know.

That’s model drift. And it kills trust. Changes in consumer behavior, market conditions, and other external factors can slowly erode a model’s predictive power, often without immediate warning.

Consequently, organizations risk making decisions based on outdated insights, which can impact revenue, compliance, and customer trust.

To learn how to detect, prevent, and manage model drift, and to explore real-world strategies for keeping AI reliable, take a deeper look at our Model Drift Deep Dive.

If you’re not retraining regularly, not validating your outputs, and not watching for change over time, your “great” model from six months ago might now be spreading garbage.

Solution?

- Watch for drift in the same way you monitor security events.

- Set up retraining checkpoints based on real-world triggers.

- Log outputs and feedback (not just inputs).

- And yes, include humans in the loop.
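This article doesn’t prescribe a drift metric. As one illustration, here is a Python sketch that uses the Population Stability Index (PSI), a widely used measure of how far a feature’s live distribution has moved from its training-time reference. The `needs_retraining` helper and the 0.25 threshold are assumptions. Cutoffs of 0.1 and 0.25 are commonly cited rules of thumb, not a standard, and should be tuned per model.

```python
import math


def psi(reference: list, live: list, bins: int = 10) -> float:
    """Population Stability Index of one numeric feature: live data vs. a reference sample.

    Uses equal-width bins over the reference range; live values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1
        # floor at a tiny share so empty bins don't cause log(0) or division by zero
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


RETRAIN_THRESHOLD = 0.25  # assumption: a commonly cited rule of thumb, tune per model


def needs_retraining(reference: list, live: list) -> bool:
    """Treat a PSI above the threshold as a retraining trigger."""
    return psi(reference, live) > RETRAIN_THRESHOLD


reference = [x / 100 for x in range(1000)]           # 0.00 .. 9.99
live_stable = [x / 100 for x in range(0, 1000, 2)]   # same range, half the rows
live_shifted = [5 + x / 200 for x in range(1000)]    # mass moved to 5.0 .. 9.995
print(needs_retraining(reference, live_stable))   # False
print(needs_retraining(reference, live_shifted))  # True
```

Running a check like this on a schedule, and routing a `True` result to a human reviewer rather than straight to an automated retrain, puts the “retraining checkpoints” and “humans in the loop” bullets into practice together.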

6. Your Teams Work in Silos

IT builds the infrastructure. Data science builds the model. Legal asks questions after it’s live. Finance wonders what happened to the budget.

That’s the norm. And it’s a recipe for AI projects that technically work but never go anywhere.

Cross-functional teams are imperative here. AI governance means bringing all stakeholders into the same conversation early, and keeping them there.

That includes:

- A shared review board

- Clear escalation paths

- Regular working sessions between risk, tech, and business teams

You’re not building a model. You’re building a system. And that system needs buy-in from more than engineers.

7. You Can’t Show ROI, So AI Feels Like a Cost Center

Everyone’s busy hiring ML engineers. But who’s thinking about AI ethics? Who’s checking explainability? Who spots risk before it becomes a problem?

Most companies are underinvesting in exactly these areas, and finance is starting to ask the hard questions. They’ve signed off on licensing, cloud credits, and new hires. Now they want to know:

“What are we actually getting from this?”

Many AI projects can’t answer that (not because they aren’t valuable, but because the value was never defined). The smartest teams build ROI into AI from day one:

- Pick KPIs that matter (time saved, errors reduced, revenue lifted)

- Test AI against human performance

- Track adoption, not just deployment
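Testing AI against human performance can start as plain arithmetic. The sketch below compares two monthly costs for the same task: doing it entirely with people, and doing it with AI plus partial human review. Every parameter name and number is a hypothetical input. The comparison also assumes the AI output meets the same quality bar as the human work, which you should verify separately. This is a generic illustration, not a description of any vendor’s ROI tool.

```python
def cost_comparison(tasks_per_month: int,
                    human_minutes_per_task: float,
                    hourly_rate: float,
                    ai_cost_per_task: float,
                    review_share: float,
                    review_minutes: float,
                    fixed_monthly_cost: float) -> dict:
    """Monthly cost of a task done fully by people vs. by AI with partial human review.

    All inputs are hypothetical; hourly_rate should be a fully loaded labor cost.
    """
    human_cost = tasks_per_month * human_minutes_per_task / 60 * hourly_rate
    # people still review a share of AI outputs, so that time counts against AI
    review_cost = tasks_per_month * review_share * review_minutes / 60 * hourly_rate
    ai_cost = tasks_per_month * ai_cost_per_task + review_cost + fixed_monthly_cost
    savings = human_cost - ai_cost
    return {
        "human_cost": human_cost,
        "ai_cost": ai_cost,
        "monthly_savings": savings,
        "roi": savings / ai_cost,  # net savings per dollar spent on the AI workflow
    }


baseline = dict(tasks_per_month=10_000, human_minutes_per_task=6, hourly_rate=50,
                ai_cost_per_task=0.05, review_minutes=3, fixed_monthly_cost=4_500)

# simple sensitivity analysis: how does ROI hold up if humans must review more outputs?
for share in (0.2, 0.5, 0.8):
    result = cost_comparison(review_share=share, **baseline)
    print(f"review {share:.0%}: savings ${result['monthly_savings']:,.0f}, ROI {result['roi']:.2f}")
```

With these inputs and a 20% review share, the AI workflow costs $10,000 a month against $50,000 of human time, an ROI of 4.0. At an 80% review share, the ROI falls to 1.0. That swing is why the review burden belongs in the business case from day one.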

💡 Did you know? To help you answer that question, Lumenova AI offers an AI ROI & Cost Analysis accelerator that quantifies the real value of AI adoption and usage. We do that by:

- Inputting baseline data into the platform’s ROI module to establish human cost.

- Comparing AI vs. human costs.

- Configuring savings dashboards and sensitivity analysis.

- Defining patterns and templates of approved AI architectures.

- Building business cases for scaling or retiring AI.

Ready to make data-driven decisions, based on the measurable economic value of AI? Ask us about it!

Final Thought

If your AI doesn’t move fast, stay compliant, and prove its value, well… it’s not helping. It’s just racking up cloud bills and creating liability.

Success doesn’t come from deploying more models. It comes from building an operating model where governance, compliance, and business value are part of the process, not barriers to it.

Lumenova AI helps you get there (from visibility to policy enforcement to monitoring) with tools built for real-world AI adoption, at enterprise scale.

Ask Yourself (or Your Team):

- Do we have a clear inventory of our AI models and owners?

- If a regulator called tomorrow, are we ready?

- Would the business notice if we turned off our AI tools?

If not, you’ve got work to do. We can help. Request a custom demo for your team.