Responsible AI isn’t a barrier to innovation. It’s the discipline that makes innovation stick.

AI is almost everywhere now. It helps choose which videos we see and which resumes get noticed, and it even helps schedule appointments with doctors and process loan applications. It’s present in schools, hospitals, banks, stores, and even on your phone. With AI now nearly ubiquitous, one concern keeps coming up: what happens if the AI gets things wrong?

What if it picks the wrong person? Leaves someone out? Shares something private? That’s when things go from “cool tech” to real-world problems and consequences. And that’s why more people are starting to ask a better question:

How do we make sure AI does the right thing?

This is where Responsible AI (RAI) steps in. It’s not just a rulebook for developers. It’s a way for businesses, governments, schools, and everyday people to ensure AI works for them.

In this article, we’ll break down:

- What RAI really looks like in the real world

- Why it’s such a hot topic right now

- The core values and systems that keep AI on track

- Simple steps anyone can take to keep AI working the right way

What Responsible AI Really Means (And Why It Matters in 2025)

RAI is a simple idea with a big meaning: it’s about making sure AI systems are used in ways that are fair, safe, and respectful to people – not just powerful, but thoughtful.

It’s about asking, “Should we?”, not just, “Can we?”

AI is good at patterns. It finds trends in data and makes decisions based on what it learns. But what if the data is biased? What if the system makes choices that affect real people, and nobody knows why?

Let’s look at some real examples from 2025:

- Tenant Screening Bias: In 2025, a lawsuit highlighted issues with AI-driven tenant screening systems. A Massachusetts woman was denied an apartment due to a low score from an AI tool, despite having a solid rental history. The AI system disproportionately scored minority renters using housing vouchers lower than other applicants, leading to discrimination claims. The case settled with the company agreeing to cease using such scoring systems for five years.

- School Surveillance Concerns: A Texas school district faced scrutiny over using AI-powered surveillance tools to monitor student activities on school-issued devices. Concerns were raised about the privacy implications and potential for these systems to erroneously flag student behavior, impacting students’ privacy and right to self-expression.

- Insurance Chatbot Data Breach: An insurance company’s AI-powered chatbot inadvertently shared customer details with unauthorized individuals during live chats. This design flaw compromised customer trust and highlighted the need for stringent data security measures in AI implementations.

These cases show how AI can go off track, even when nobody intends it to. That’s why RAI is growing fast. In 2025, it’s not just something tech teams care about. It’s also showing up in boardrooms and government meetings.

RAI means paying attention to questions like:

- Who might be left out?

- Is the system treating everyone fairly?

- Can we explain how the AI made that choice?

- Who’s watching the AI to make sure it’s working?

- What do we do if it causes harm?

It’s not just about following laws (though those are knocking at the door, especially in Europe). It’s about addressing problems before they have the chance to materialize. That’s why RAI is no longer optional, but imperative.

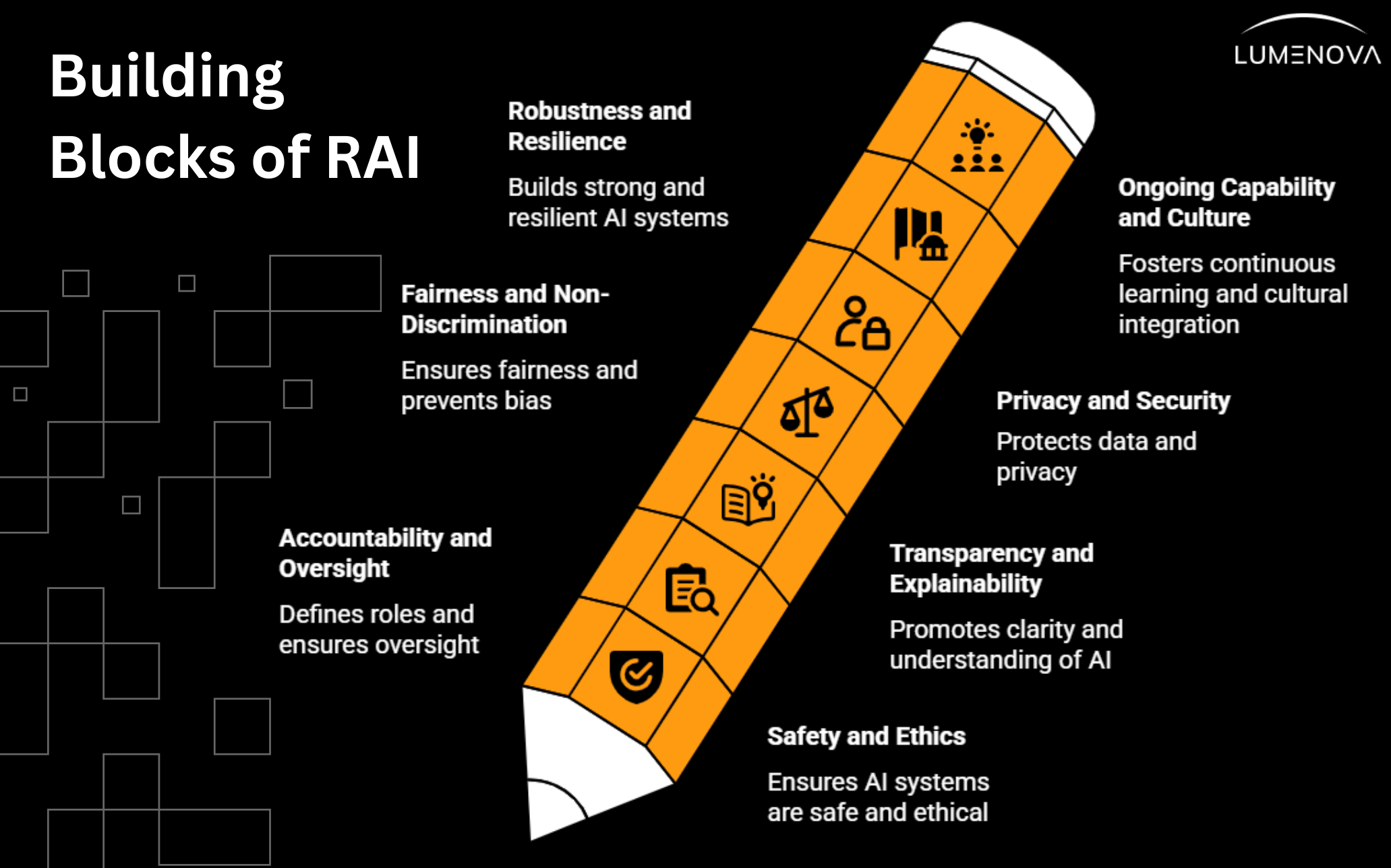

Principles Behind Responsible AI

RAI works best when guided by clear, human-centric principles. You can think of these as the rules that keep AI honest, fair, and safe to use in real life.

Let’s break down several of the most important ones:

1. Fairness and Non-Discrimination

AI should treat everyone equally. That means it shouldn’t favor one group of people over another (whether by age, gender, language, or place of residence). If an AI tool gives better scores to certain names or zip codes, that’s unfair. RAI looks out for bias and ensures it’s fixed before people get hurt.

2. Transparency and Explainability

People have a right to know when AI is being used and what it’s doing. If an AI helps decide who gets a job interview or qualifies for a benefit, there should be a clear explanation of how it works. No guessing. No hiding behind “black box” systems.

3. Accountability and Oversight

A human should always be in charge of the AI, not vice versa. If something goes wrong, a person (or team) needs to step in, take responsibility, and make it right. “The system made a mistake” is not an excuse.

4. Privacy and Security

AI systems often use personal or sensitive data that must be stringently protected. RAI ensures private information is kept safe, only used with permission, and never shared without consent. It’s like locking up a diary and guarding the key.

5. Safety

Before an AI system is used in real life, it should be rigorously tested. Think of it like test-driving a car before letting someone drive it on a busy road. Good AI needs guardrails to keep people and systems safe.

Want to dive deeper into how Responsible AI can create real business value? Explore The Benefits of Responsible AI for Today’s Businesses and see how you can lead with trust, performance, and purpose.

Core Mechanisms: The Systems That Make Responsible AI Real

RAI doesn’t happen by accident. It’s not something you tackle at the end of a project or hand off to the compliance team after deployment. It’s built through intentional systems, aligned with RAI principles, and carried through every phase of design, development, and use.

These mechanisms aren’t theoretical. They are the practices and protocols organizations implement to ensure their AI systems stay safe and effective. Some are technical, some operational, and some are cultural. Together, they form the foundation of real-world RAI.

Let’s take a closer look, grouped by the principles they’re designed to uphold.

Fairness and Non-Discrimination

1. Bias Audits

Auditing for AI bias means regularly checking how a model performs across different groups. This includes examining the data, the outcomes, and the unintended patterns that can emerge. Independent audits are especially valuable for building trust.
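To make the idea concrete, here is a minimal Python sketch of one common audit check: comparing approval rates across groups. The record layout, the group names, and the 0.8 cut-off (a rule of thumb sometimes called the four-fifths rule) are assumptions chosen for illustration, not a prescribed standard, and a real audit would look at many more metrics.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, approved) pairs. This layout is
    hypothetical and chosen only for the example.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity
    return min(rates.values()) / highest

# Made-up audit data: ten decisions per group.
records = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold, not a legal test
print(rates, round(ratio, 2), needs_review)
```

In this toy data, group_b is approved half as often as group_a, so the check flags the system for a closer look. A flag like this is a prompt for investigation, not proof of discrimination.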

2. User Rights and Disclosures

People have the right to know when AI is used in decisions that affect them. They should also be able to request an explanation, opt out of automated information processing, or file a complaint when things go wrong. These rights need to be clearly communicated and actionable.

3. Clear Labelling and Communication

Whether it’s AI-generated content or decisions, transparency requires plain language. Users should not have to guess what’s AI and what isn’t, or what role it played in a given outcome.

Transparency and Explainability

4. Lifecycle Documentation

Every stage of the AI lifecycle, from data inputs to system purpose, should be well documented. This improves internal alignment, enables external accountability, and simplifies regulatory compliance.

5. Model Cards and Surrogate Models

Model cards are structured reports that describe how a model was built, what data it used, and where its limits are. Surrogate models help explain decisions made by complex systems by mimicking them with simpler, more interpretable logic.
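A model card does not need special tooling to get started. The Python sketch below stores one as a small data structure that can be exported as JSON. The field names and the example values are hypothetical; teams typically adapt the fields to their own review process.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    """A minimal, illustrative model card. Field names are assumptions."""
    name: str
    intended_use: str
    training_data: str
    known_limitations: list = field(default_factory=list)
    evaluation: dict = field(default_factory=dict)

    def to_json(self):
        # asdict() converts the dataclass (including nested lists/dicts)
        # into plain Python types that json can serialize.
        return json.dumps(asdict(self), indent=2)

card = ModelCard(
    name="loan-screening-demo",
    intended_use="Rank applications for manual review; not for automatic denial.",
    training_data="Hypothetical historical applications, 2018 to 2023.",
    known_limitations=["Not evaluated on applicants under 21"],
    evaluation={"accuracy": 0.91, "approval_rate_ratio": 0.85},
)
print(card.to_json())
```

Keeping the card next to the model code, and updating it with each release, makes it easier to answer questions from auditors or affected users later.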

6. Tools for Interpretability

Techniques like attention maps, SHAP (SHapley Additive exPlanations), and LIME (Local Interpretable Model-Agnostic Explanations) help teams understand how a model arrived at a decision. They’re not just for data scientists. These tools can be used to communicate with affected users, regulators, or leadership in plain terms.
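To show the idea behind SHAP without depending on any library, the sketch below computes exact Shapley values for a tiny, made-up scoring function in plain Python. It is not the SHAP package and not how production tools work internally (they rely on approximations, because the exact calculation grows exponentially with the number of features). The scoring function, feature names, and baseline are all assumptions for illustration.

```python
from itertools import permutations

def shapley_values(predict, instance, baseline):
    """Exact Shapley values, averaged over every feature ordering.

    Starting from `baseline`, features are switched to their `instance`
    values one at a time; each feature is credited with the change in
    the prediction it causes. Only practical for a handful of features.
    """
    n = len(instance)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]
            value = predict(current)
            contributions[i] += value - previous
            previous = value
    return [c / len(orderings) for c in contributions]

def toy_score(features):
    # Hypothetical linear score: income helps, debt hurts.
    income, debt, years_at_address = features
    return 0.5 * income - 0.8 * debt + 0.1 * years_at_address

instance = [4.0, 1.0, 3.0]   # the applicant being explained
baseline = [2.0, 2.0, 2.0]   # an assumed "typical" applicant
values = shapley_values(toy_score, instance, baseline)
print([round(v, 3) for v in values])
```

The three values add up to the difference between the applicant's score and the baseline score, which is what lets you say in plain terms how much each factor moved the result.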

Accountability and Oversight

7. Defined Roles and Responsibilities

Everyone working with AI should know their role. Clarity on who is responsible for what, from model development to risk review, helps prevent gaps in oversight and speeds up decision-making when problems arise.

8. Human and Expert Oversight

AI systems that make meaningful decisions about people should never operate without human oversight. These systems should maintain a human-in-the-loop approach, ensuring that individuals are actively involved in validating results, assessing edge cases, and confirming that outcomes align with the organization’s intent and values.

9. Incident Response and Corrective Action

Mistakes will happen. What matters is how quickly and transparently an organization can respond. Responsible teams prepare for incidents in advance, document what went wrong, and implement improvements that prevent recurrence.

Privacy and Security

10. Data Minimization and Purpose Limitation

Only collect what’s necessary, and only use it for the reason it was collected. This is a core principle in nearly every privacy regulation and an essential part of building user trust.
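One simple way to enforce this in software is an allow-list of fields per approved purpose. The Python sketch below assumes hypothetical purposes and field names; anything not explicitly approved is dropped before the data reaches a model or a log.

```python
# Hypothetical mapping of approved purposes to the fields each may use.
ALLOWED_FIELDS = {
    "appointment_scheduling": {"patient_id", "preferred_time"},
    "billing": {"patient_id", "insurance_number"},
}

def minimize(record, purpose):
    """Return only the fields approved for `purpose`.

    Unknown purposes are rejected rather than silently allowed.
    """
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"No approved purpose named {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}

record = {
    "patient_id": "p-001",
    "preferred_time": "09:30",
    "insurance_number": "ins-123",
    "diagnosis": "example only",
}
print(minimize(record, "appointment_scheduling"))
```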

11. Modern Privacy Techniques

Techniques like differential privacy, federated learning, and homomorphic encryption allow organizations to extract insights without compromising individual data. They help reduce risk without limiting capability.
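As a small illustration of differential privacy, the Python sketch below adds Laplace noise to a count so that no single person's presence can be confidently inferred from the published number. The data, the `epsilon` value, and the function names are assumptions for the example; production systems should use a vetted library and track the total privacy budget.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean
    # `scale` follows a Laplace distribution centered on zero.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the items matching `predicate`.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon. A smaller
    epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

ages = [34, 71, 68, 45, 80, 29]  # made-up data
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0)
print(round(noisy, 2))  # varies from run to run; the true count is 3
```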

12. Access Controls and Breach Response

Not everyone needs full access to every AI system. Strong access controls limit exposure and reduce risk. A tested response plan makes it easier to contain the damage and respond to legal and regulatory requirements in case of a breach.
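At its simplest, an access control is a lookup that denies by default. The roles and actions in this Python sketch are hypothetical; real deployments usually delegate this to an identity provider or the platform's own permission system.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read_predictions"},
    "analyst": {"read_predictions", "read_training_data"},
    "admin": {"read_predictions", "read_training_data", "deploy_model"},
}

def is_allowed(role, action):
    """Deny by default: unknown roles or actions get no access."""
    return action in ROLE_PERMISSIONS.get(role, set())

print(is_allowed("analyst", "read_training_data"))
print(is_allowed("analyst", "deploy_model"))
```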

13. Automated Threat and Anomaly Detection

AI systems should include automated mechanisms to detect threats and anomalies in real time. These tools help identify unusual patterns, potential intrusions, or misuse, enabling rapid response before risks escalate. Incorporating such detection supports both security and operational continuity.
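A basic version of anomaly detection can be sketched in a few lines of Python: compare new measurements against the historical average and flag anything unusually far away. The metric (requests per minute), the numbers, and the threshold of three standard deviations are assumptions for illustration; real monitoring tools use more robust methods.

```python
from statistics import mean, stdev

def find_anomalies(history, recent, threshold=3.0):
    """Return values in `recent` that sit far from the historical mean.

    "Far" means more than `threshold` sample standard deviations away.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in history: anything different is unusual.
        return [x for x in recent if x != mu]
    return [x for x in recent if abs(x - mu) / sigma > threshold]

# Made-up requests-per-minute figures for a chatbot endpoint.
history = [100, 102, 98, 101, 99, 100, 103, 97]
recent = [101, 99, 140, 104]
print(find_anomalies(history, recent))
```

Here only the spike to 140 is flagged; the other recent values fall within normal variation.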

Safety and Ethics

14. Risk and Impact Assessments

No AI system is without risk. Responsible organizations regularly assess what could go wrong, how likely it is, and who could be affected. This isn’t a one-time event. It should happen before deployment, after updates, and on a regular schedule, especially for high-impact systems that could affect human health, rights, or safety.

15. Recall Protocols and Fail-Safes

When an AI system causes harm or veers off course, you need a plan to intervene. Recall protocols define how to pause, update, or decommission a system. Fail-safes allow humans to override decisions, shut systems down, or prevent cascading errors in complex environments.
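In code, a fail-safe can be as simple as a wrapper that routes decisions to a person when the model is switched off or keeps failing. The class name, the error limit, and the routing labels in this Python sketch are hypothetical.

```python
class GuardedModel:
    """Wrap a prediction function with a manual and automatic off switch."""

    def __init__(self, predict, max_errors=3):
        self._predict = predict
        self._max_errors = max_errors
        self._errors = 0
        self.enabled = True

    def disable(self):
        """Manual kill switch: a human pauses automated decisions."""
        self.enabled = False

    def decide(self, features):
        if not self.enabled:
            return {"decision": None, "route": "human_review"}
        try:
            decision = self._predict(features)
        except Exception:
            # Count the failure and trip the switch after repeated errors
            # so one fault does not cascade into many bad outcomes.
            self._errors += 1
            if self._errors >= self._max_errors:
                self.enabled = False
            return {"decision": None, "route": "human_review"}
        return {"decision": decision, "route": "automated"}

model = GuardedModel(lambda features: "approve")
print(model.decide({"income": 4.0}))
model.disable()
print(model.decide({"income": 4.0}))
```

The design choice worth noting is that the wrapper never guesses: when it cannot trust the model, it hands the case to a human instead of returning a default decision.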

16. AI Governance Frameworks

Good governance connects ethics, compliance, operations, and business strategy. A strong framework doesn’t just outline values. It defines how decisions are made, how risk is managed, and how AI stays aligned with organizational goals over time.

Robustness and Resilience

17. Red Teaming and Stress Testing

AI systems should be tested under pressure. Red teams simulate adversarial attacks or unusual edge cases to expose weak points before real harm occurs. These teams often include people from different disciplines to bring diverse perspectives to the table.

18. Uncertainty Estimation and Confidence Scoring

Some AI systems can now flag when they’re unsure about a decision or output. Confidence scores help humans step in when a model is uncertain, reducing the likelihood of decisions based on overconfident but incorrect outputs.
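Here is a minimal Python sketch of confidence-based routing: the model's raw scores are turned into probabilities, and anything below a chosen confidence level goes to a person. The labels and the 0.8 threshold are assumptions, and softmax probabilities are often poorly calibrated in practice, so treat this as an illustration of the routing logic rather than a reliable uncertainty measure.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_prediction(scores, labels, min_confidence=0.8):
    """Return the top label, or defer to a human if confidence is low."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    confidence = probs[best]
    if confidence < min_confidence:
        return {"label": None, "confidence": confidence, "route": "human_review"}
    return {"label": labels[best], "confidence": confidence, "route": "automated"}

labels = ["approve", "decline"]
print(route_prediction([4.0, 0.0], labels)["route"])  # clear-cut case
print(route_prediction([0.2, 0.0], labels)["route"])  # close call
```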

19. Scenario Planning and Crisis Simulation

Organizations that rely heavily on AI should rehearse what happens when it fails. Running simulations of AI failures (whether technical or ethical) helps prepare teams to respond quickly and thoughtfully when real problems arise.

Ongoing Capability and Culture

20. Upskilling and Skills Assessments

RAI relies on people who understand it. Training programs and skills assessments help ensure that staff know how to use, evaluate, and monitor the systems they work with. It’s about giving people the context and confidence to use AI responsibly.

21. Awareness Campaigns and Communication

RAI isn’t just a technical goal. It’s a cultural one. The most successful organizations ensure that people across the business understand why this work matters and how it connects to their role, their team, and the organization’s larger mission.

22. External Partnerships

You don’t have to figure everything out on your own. Working with academics, industry peers, civil society groups, and regulators helps you stay current and hold your systems to a higher standard.

23. Anonymous Reporting and Whistleblower Protection

Establishing anonymous risk and incident reporting channels enables employees to safely raise concerns about AI misuse, ethical breaches, or system vulnerabilities. Whistleblower protections are essential to ensure that individuals feel empowered to report without fear of retaliation. These mechanisms strengthen transparency and reinforce a culture of accountability.

Why RAI Is a Smart Business Move (Not Just a Moral One)

There’s no question that RAI is the right thing to do. However, the most innovative organizations also see it as a competitive edge. When done well, RAI doesn’t slow progress. It sharpens it. It helps you move faster with fewer surprises, better results, and stronger relationships.

Here’s why the business case is growing clearer by the day.

1. Trust Builds Everything

Trust isn’t abstract. It shows up in retention, brand reputation, and user loyalty. People notice when your AI systems are explainable, fair, and responsive. Customers stay longer. Partners feel more confident. Regulators give you more room to operate. And when something goes wrong, as it eventually will, how you respond is everything. RAI earns trust before you need it, and protects it when it matters most.

2. Avoiding Headlines (And Legal Trouble)

AI-related lawsuits, data breaches, and algorithmic failures are becoming increasingly common, and they carry real costs: financial, operational, and reputational. Irresponsible use of AI can spark public backlash or regulatory penalties that take years to recover from. Building with responsibility upfront is far cheaper than dealing with the consequences later.

3. It’s Becoming a Market Requirement

RAI is showing up in procurement documents, due diligence checklists, and customer contracts. Organizations want to know that their vendors and partners are thinking seriously about fairness, privacy, and accountability. If you can show that your systems meet high ethical and operational standards, it’s not just a differentiator. It’s a door-opener.

4. Regulations Are Moving Quickly

From the EU AI Act to U.S. executive orders, the regulatory environment is evolving fast. Companies that treat RAI as a future problem will be left scrambling. The ones that get ahead of the curve will be better prepared to comply, adapt, and even influence the direction of new rules. Being proactive today makes future compliance a lot easier and far less disruptive.

5. Better Design Means Better Outcomes

The best part? RAI isn’t just safer. It works better. Thoughtful design leads to more inclusive models, stable systems, and fewer unexpected failures. You get solutions that generalize well, align with real-world needs, and improve over time, not just on paper, but also in practice.

22 Strategic Questions for Leaders Implementing AI

RAI doesn’t begin with models or metrics. It begins with governance. And governance begins with thoughtful, structured, and specific questions.

These aren’t abstract concerns or compliance formalities. They are the questions that define your organization’s readiness to deploy AI systems with confidence and accountability. They help uncover gaps, surface risks, and inform better decisions throughout the lifecycle of AI development and adoption.

You don’t need all the answers immediately. However, a mature RAI program knows precisely where to look, who to ask, and when to act.

Accountability

- Who holds formal accountability for this system’s outcomes, and is that role clearly understood across teams?

- Do we have a structured governance process to review and reassess the system at key stages in its lifecycle?

Fairness & Non-Discrimination

- Have we clearly identified the intended beneficiaries of this system, and those it might disadvantage?

- Are we systematically evaluating model performance across demographic groups, edge cases, and underrepresented segments?

- Does the training data reflect the people, environments, and decisions this system will touch?

- Have we considered the accessibility and usability of the system across diverse user groups and contexts?

Transparency and Explainability

- Can we provide a clear, non-technical explanation of how the model works and what influences its decisions?

- Are end-users or affected individuals informed when AI plays a role in shaping outcomes that affect them?

- What assumptions, constraints, and limitations are embedded in the model, and how are they tracked over time?

Privacy, Data Use, and Security

- Are we limiting data collection to what is strictly necessary for this use case?

- Have we been transparent about how data is used, stored, and protected (internally and externally)?

- What controls are in place to prevent unauthorized access, model misuse, or data leakage?

Human Oversight

- In what scenarios are humans expected to intervene, review, or override AI-generated decisions?

- Can individuals challenge, appeal, or request human review of an outcome that affects them materially?

Risk Management and Resilience

- What is the worst plausible outcome this system could trigger, and do we have mitigation strategies?

- Have we conducted adversarial testing, scenario planning, or red-teaming to identify points of failure or misuse?

- If an incident occurs, who is notified, how is it escalated, and what recovery actions are predefined?

- What is the protocol if this system causes harm, fails unexpectedly, or is no longer aligned with our risk appetite?

Long-Term Implications

- How could this system shift operational workflows, decision authority, or stakeholder dynamics over time?

- Are we monitoring post-deployment performance and outcomes across different contexts and cohorts?

- Would we feel confident defending this system’s design, oversight, and impact (internally, publicly, and ethically)?

- Could this system introduce or amplify systemic risks across industries, critical infrastructure, or society, primarily through scale, interdependence, or automation?

These are not only questions for the build phase. They belong in board-level conversations, product roadmaps, risk reviews, and regulatory engagements. Organizations that take them seriously will not only avoid harm. They’ll build systems that are more durable, effective, and aligned with the complexity of the real world.

Conclusion

AI isn’t a futuristic concept anymore. It’s operational. And that means the way we build and manage these systems matters more than ever. If you’re in charge of AI, risk, compliance, or tech strategy, this is the work you should be focusing on. It’s not just about protecting your organization. It’s about creating systems that are fair, clear, and able to stand up to real-world pressure: models that deliver value in a way you can explain, defend, and improve.

Want to See What Responsible AI Looks Like in Practice?

If you’re thinking, “Okay, we’re ready to go deeper with this,” we’d love to show you how. Lumenova’s Responsible AI Platform helps organizations put the right structures around their AI, so you can manage risk, document decisions, catch issues early, and meet regulatory expectations without slowing your team down.

In a quick demo, we’ll walk you through how our RAI platform can help you:

- Identify and manage risk across your AI portfolio

- Monitor bias, fairness, and performance automatically

- Stay aligned with frameworks like the EU AI Act and NIST AI RMF

Book a demo, and let’s take the pressure off your teams while helping you build AI that works and holds up under scrutiny.

Frequently Asked Questions

What is Responsible AI?

Responsible AI refers to the practice of designing, developing, and deploying AI systems in ways that are ethical, transparent, fair, and aligned with human values. It ensures that AI systems are safe, explainable, accountable, and used to benefit individuals and society, not just organizations or algorithms.

What is one key principle of Responsible AI?

One key principle of Responsible AI is Fairness. This means AI systems should not produce biased or discriminatory outcomes. Fairness ensures that people are treated equitably, regardless of factors like race, gender, age, or background, and that the data and decisions behind AI are inclusive and representative.

Why is Responsible AI important for organizations?

Responsible AI practices reduce risk, build trust, and support long-term innovation. They help organizations stay compliant with emerging regulations, avoid reputational damage, and create AI systems that perform reliably in the real world. Organizations can scale with confidence by embedding ethics and governance into AI workflows.

What is a responsibility gap in AI?

A responsibility gap happens when it’s unclear who is accountable for the actions or outcomes of an AI system. This can occur when AI decisions are automated, opaque, or made without clear oversight. Responsible AI aims to close that gap by defining ownership, decision boundaries, and human oversight at every stage of the AI lifecycle.

Which AI risk can Responsible AI help mitigate?

Responsible AI can help mitigate algorithmic bias (one of the most common and harmful risks in AI). By auditing systems, improving data quality, and embedding fairness checks, organizations can reduce discriminatory outcomes and ensure AI decisions are accurate and equitable across different groups.