AI Audits

What is an AI Audit?

AI Audits are systematic evaluations of AI systems to assess compliance with ethical, legal, and technical standards, focusing on fairness, transparency, security, and performance. As AI adoption expands, organizations must implement AI auditing to assess AI-driven decisions and mitigate risks associated with AI bias, security vulnerabilities, and regulatory non-compliance.

The Importance of AI Auditing

The purpose of AI auditing is to ensure AI models operate as intended. Without proper evaluation, AI can generate biased results, compromise data security, and violate privacy regulations. AI auditing services provide businesses with tools to monitor AI’s decision-making processes and performance, assessing whether systems align with ethical standards and legal requirements.

Key Components of AI Auditing

AI audits encompass various elements to assess AI models effectively:

- AI Bias Audit: Detecting and mitigating biases in AI algorithms to prevent discrimination.

- AI Security Audit: Evaluating AI models for vulnerabilities that could expose sensitive data or compromise performance.

- AI Performance Audit: Assessing the efficiency, accuracy, and scalability of AI systems.

- Regulatory Compliance Audit: Ensuring AI systems adhere to applicable laws such as the EU's GDPR and California's CCPA, as well as emerging AI governance regulations.
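To make the bias audit component concrete, here is a minimal sketch of one common check: comparing selection rates across groups. The group labels, the decisions, and the 0.8 cut-off (the "four-fifths" rule of thumb) are illustrative assumptions, not requirements stated in this article, and a real audit would normally use several fairness metrics.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the share of positive decisions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups, decisions):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive decision
    return min(rates.values()) / highest


# Hypothetical audit sample: group label and model decision per applicant.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

ratio = disparate_impact_ratio(groups, decisions)
flagged = ratio < 0.8  # assumed threshold; set per policy or legal advice
print(f"disparate impact ratio: {ratio:.2f}, flagged: {flagged}")
```

A ratio well below the chosen threshold is a signal to investigate further, not proof of discrimination on its own.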

AI in Auditing: Transforming the Audit Industry

AI in auditing is changing traditional audit practices by automating risk detection, fraud analysis, and financial assessments. Companies now use AI audit software to work more efficiently, identify irregularities, and improve compliance reporting. AI for auditing also enables near-real-time data analysis, which can reduce manual errors and improve audit accuracy.

AI Auditing Frameworks and Best Practices

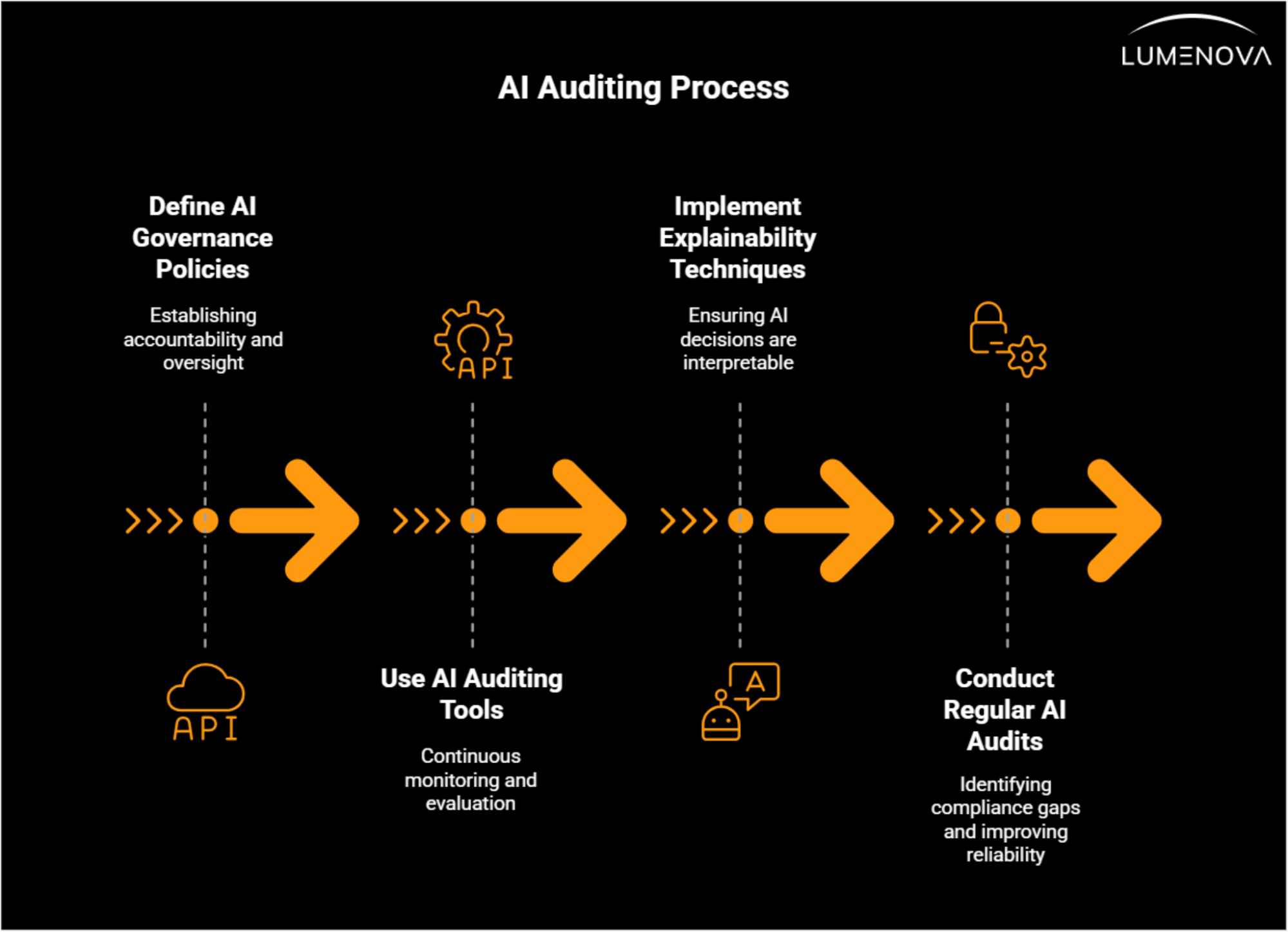

Organizations adopting AI must follow structured AI auditing frameworks to maintain transparency and accountability. Best practices for auditing AI systems include:

- Defining AI governance policies to establish AI accountability and oversight.

- Using AI auditing tools for continuous monitoring and evaluation, pre- and post-deployment.

- Implementing explainability techniques to ensure AI decisions are interpretable.

- Scheduling regular AI audits to identify compliance gaps and improve AI reliability.
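As one illustration of continuous monitoring after deployment, the sketch below computes a Population Stability Index (PSI) between a baseline sample of model scores and a more recent one. PSI is a technique chosen here for illustration, not one this article prescribes. The bin count, the floor used to avoid taking the logarithm of zero, and the 0.25 alert level are assumptions based on common rules of thumb.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of scores."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical scores: a baseline at validation time and a shifted recent sample.
baseline = [i / 100 for i in range(100)]
recent = [x + 0.3 for x in baseline]

psi = population_stability_index(baseline, recent)
drift_alert = psi > 0.25  # assumed alert level; tune per model and risk appetite
print(f"PSI: {psi:.2f}, drift alert: {drift_alert}")
```

Running a check like this on a schedule, and logging the result, gives auditors a record of when a model's inputs or outputs started to move away from what was validated.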

AI Audits in Internal and External Auditing

AI is transforming both internal and external auditing functions. For internal auditing, companies can utilize AI to analyze vast datasets, detect anomalies, and streamline compliance workflows. By contrast, AI auditing companies provide external AI auditing services to businesses, ensuring adherence to evolving regulatory standards. AI and audit teams work together to establish robust AI governance models that foster transparency.
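The anomaly detection mentioned above can be as simple as a robust outlier test. The following sketch flags transactions whose modified z-score, based on the median absolute deviation, exceeds a cut-off. The transaction amounts are made up, and the 3.5 cut-off is a commonly cited default rather than a value from this article. Production audit tools typically use richer models.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    mad = median([abs(x - med) for x in amounts])  # median absolute deviation
    if mad == 0:
        return []  # no spread around the median, so nothing can be scored
    return [
        i
        for i, x in enumerate(amounts)
        if abs(0.6745 * (x - med) / mad) > threshold
    ]


# Hypothetical ledger entries; one is far outside the usual range.
amounts = [120.0, 135.5, 128.0, 131.2, 9800.0, 126.4, 133.9]
print(flag_anomalies(amounts))
```

Flagged entries would then go to a human auditor for review, which keeps the automated step as a filter rather than a final judgment.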

AI Auditing Tools and Software

The rise of AI audit software has enabled organizations to automate compliance checks and improve risk assessment. Leading AI auditing tools integrate machine learning algorithms to enhance fraud detection, financial audits, and security analysis. These tools help businesses ensure their AI systems remain accountable and perform optimally.

AI Website Audits

Beyond financial and operational audits, companies conduct AI website audits to assess AI-driven content generation, SEO compliance, and security vulnerabilities. Auditing AI-powered websites ensures adherence to search engine guidelines and improves user experience by eliminating AI-generated errors.

Conclusion

The demand for AI auditing will continue to grow. Organizations must prioritize auditing AI systems to enhance reliability, prevent bias, and maintain regulatory compliance. Whether through AI auditing services, AI bias audits, or automated AI audit tools, businesses must establish strong AI governance practices to ensure ethical and responsible AI use.

Frequently Asked Questions

An AI Audit is a systematic evaluation of AI systems to assess compliance with ethical, legal, and technical standards. It focuses on fairness, transparency, security, and performance. Conducting AI audits is essential to ensure AI systems operate as intended while mitigating risks related to bias, security, and regulatory non-compliance.

The frequency of AI Audits depends on factors such as the complexity of AI systems, regulatory requirements, and the rate of model updates. Regular audits help maintain system integrity and detect potential issues early, ensuring continued compliance and optimal performance.

AI Audits typically include four main components: AI Bias Audit to detect and mitigate biases, AI Security Audit to evaluate vulnerabilities, AI Performance Audit to assess efficiency and accuracy, and Regulatory Compliance Audit to ensure adherence to laws such as GDPR and CCPA.

AI Audits enhance decision-making by ensuring AI systems remain fair, transparent, and accurate. They help identify biases in AI-driven decisions, improve the interpretability of AI outputs, and ensure compliance with relevant regulations, resulting in more reliable and trustworthy AI-assisted decision-making.

Widely used AI audit frameworks include the NIST AI Risk Management Framework (AI RMF), the ISO/IEC 42001 standard for AI management systems (published in 2023), and assessments based on the OECD AI Principles. Practical toolkits range from open-source options (Google’s Model Cards and What-If Tool, Microsoft’s Responsible AI Dashboard, Arthur Bench) to commercial platforms such as IBM AI FactSheets, Fiddler AI, and Holistic AI. Teams pick a mix of these frameworks and tools according to model type, regulatory scope, and in-house validation expertise.

AI Audits play a critical role in ensuring compliance with data protection regulations such as GDPR and CCPA. They help identify privacy risks, evaluate data handling practices, and confirm that AI systems process sensitive data without violating legal requirements. This proactive approach reduces the risk of penalties and strengthens customer trust.

Regular AI Audits provide multiple business benefits, including improved AI system performance, enhanced risk management, increased stakeholder confidence, and stronger alignment with ethical and regulatory standards. They can also reveal opportunities for innovation and efficiency improvements in AI-driven processes.