Controllable AI

What is Controllable AI

Controllable AI refers to artificial intelligence systems that operate under human oversight and intervention, ensuring predictable behavior and preventing unintended or harmful consequences, especially in high-stakes applications.

As AI advances, keeping a human in the loop is crucial to aligning current and future systems with human values and ethical standards. AI control keeps systems operating safely within predefined boundaries and prevents scenarios in which an AI escapes or undermines human oversight, behaves unpredictably, pursues hidden objectives, or causes irreparable harm.

Why Controlling AI is Essential

Without effective oversight, AI systems can pose serious risks, including misinformation, bias, and security threats. Consider the following figures and incidents:

- 80% of cybersecurity executives believe AI-driven cyber threats are outpacing security measures, underscoring the need for AI control mechanisms.

- AI-powered drones in conflict zones have a 70-80% success rate in hitting targets, raising concerns about the risks and impacts of autonomous weapons on modern warfare.

- The 2012 Knight Capital trading-algorithm glitch is a clear example of a “loss-of-control” event. A routine software update accidentally activated dormant code on some (but not all) of the firm’s high-frequency trading servers. Within 45 minutes the algorithms bought and sold erratically, racking up about $440 million in losses and forcing Knight Capital to seek an emergency bailout. Like the 2010 Flash Crash, the incident shows how split-second, autonomous decision loops can outrun human intervention. It prompted regulators to mandate circuit breakers and “kill switches” so that trading bots can be paused or shut down when they go off the rails.

These examples highlight the urgent need to control AI systems so that unintended consequences are prevented while the benefits are preserved. Who controls AI technology, and how it can be controlled effectively, are key questions driving global discussions.
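The circuit breakers and kill switches mentioned in the Knight Capital example can be illustrated with a minimal sketch. It is not how any real exchange or trading firm implements them: the `CircuitBreaker` class, the `max_loss` limit, and the `run_trades` loop are hypothetical names chosen for illustration, and each trade is reduced to a single profit-or-loss number.

```python
from dataclasses import dataclass


@dataclass
class CircuitBreaker:
    """Halts an automated loop once cumulative losses reach a limit (hypothetical design)."""

    max_loss: float               # assumed loss limit, in account currency
    cumulative_loss: float = 0.0
    tripped: bool = False

    def record(self, pnl: float) -> None:
        """Record one trade's profit or loss; trip the breaker if the limit is reached."""
        if pnl < 0:
            self.cumulative_loss += -pnl
        if self.cumulative_loss >= self.max_loss:
            self.tripped = True

    def kill(self) -> None:
        """Manual kill switch: a human operator can stop the loop at any time."""
        self.tripped = True


def run_trades(pnl_stream, breaker: CircuitBreaker) -> int:
    """Process trades until the stream ends or the breaker trips; return trades executed."""
    executed = 0
    for pnl in pnl_stream:
        if breaker.tripped:
            break  # check before acting, so no trade runs after a halt
        breaker.record(pnl)
        executed += 1
    return executed
```

With `max_loss=100.0` and trade results `[-40.0, 10.0, -70.0, -500.0]`, the loop executes three trades and halts before the fourth, because the third trade pushes cumulative losses to 110. Checking the breaker before each action, rather than after, is the design choice that keeps the human-set limit ahead of the automated loop.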

Challenges in AI Control

Implementing robust AI control systems raises several challenges, including:

- Capability Control: As AI becomes more sophisticated, it can find ways to bypass human control mechanisms.

- AI Hallucinations: AI-generated misinformation can mislead users, leading to flawed decisions and stressing the importance of AI validation and authentication.

- Autonomous Weaponization: AI in military applications could lead to unintended consequences (e.g., civilian casualties), dehumanizing warfare while making it extremely difficult to distinguish “just” from “unjust” acts of war.

How to Keep AI Under Control

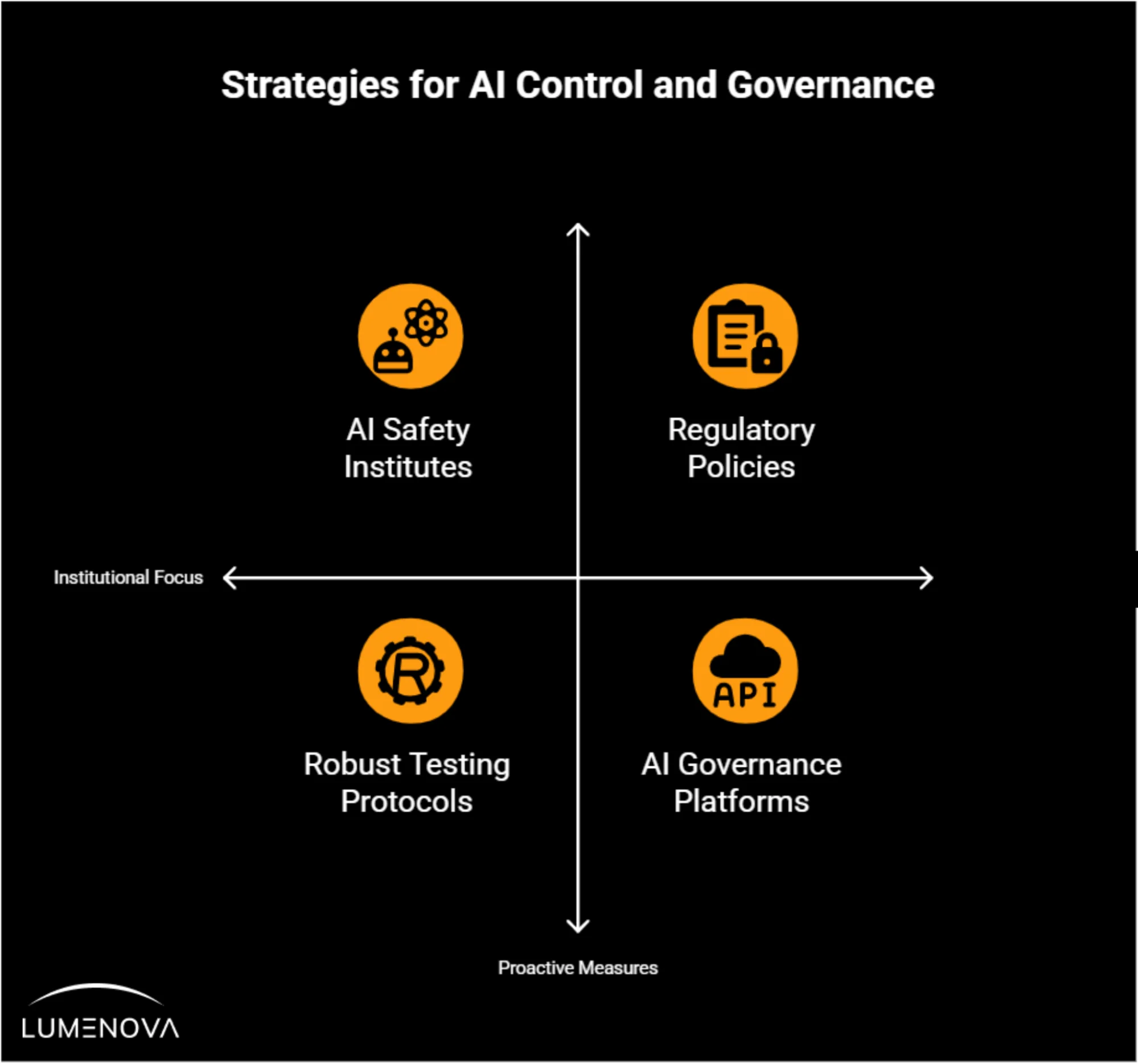

Governments, organizations, and researchers are implementing strategies to ensure controllable AI remains a priority:

- AI Safety Institutes: Institutions like the UK’s AI Safety Institute are actively researching ways to mitigate AI control problems and ensure human control over AI.

- AI Governance Platforms: Organizations rely on AI governance platforms to enforce transparency, compliance, and responsible AI use. An example is our RAI Platform, which delivers Governance, Risk Management, and Compliance solutions, bridging the gap between technical innovation and business oversight to ensure AI remains safe and trustworthy.

- Robust Testing Protocols: AI systems are being stress-tested to identify vulnerabilities before and after deployment, preventing out-of-control AI situations.

- Regulatory Policies: Global regulations are emerging to prevent AI misuse in cyberattacks, bioweapon development, and misinformation, raising the question of not only how AI is controlled but also who or what controls it.

The Future of Controllable AI

Human oversight is crucial to ensuring AI systems align with ethical, social, and operational guidelines. The key to AI’s future lies in balancing innovation with responsible control, allowing for progress without compromising safety. Current debate focuses on who should govern advanced AI, how humans can retain meaningful control, and which oversight frameworks best balance rapid innovation with safety.

By implementing strong governance, ethical frameworks, and real-time monitoring, AI can remain a powerful tool under human control rather than an unpredictable force.

Frequently Asked Questions

AI control is the ability to keep a system operating within boundaries set by humans. As models grow more capable, reliable control layers (policies, kill switches, monitoring) prevent them from drifting into actions their designers never intended, which is crucial in high-stakes domains like finance, transport, and healthcare.

AI now makes or accelerates many decisions, but full autonomy is still rare; most systems run inside human-review loops. The risk is that, as automation deepens, humans may be tempted to relax those loops. Strong governance frameworks (risk registers, audit trails, and real-time override mechanisms) ensure people stay the final authority.
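A human-review loop with an audit trail can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not a reference implementation: the `decide` function, the `RISK_THRESHOLD` value, the `ask_human` callback, and the list-based `audit_log` are all hypothetical, and a production system would calibrate the threshold and write to tamper-resistant storage.

```python
import json
import time
from typing import Callable

RISK_THRESHOLD = 0.7  # assumed cut-off; a real system would calibrate this


def decide(action: str, risk_score: float,
           ask_human: Callable[[str, float], bool],
           audit_log: list) -> bool:
    """Approve low-risk actions automatically; route the rest to a human reviewer."""
    if risk_score < RISK_THRESHOLD:
        approved, decided_by = True, "auto"
    else:
        # The human reviewer has the final say on anything at or above the threshold.
        approved, decided_by = ask_human(action, risk_score), "human"
    # Append one JSON record per decision so every outcome can be audited later.
    audit_log.append(json.dumps({
        "time": time.time(),
        "action": action,
        "risk_score": risk_score,
        "approved": approved,
        "decided_by": decided_by,
    }))
    return approved
```

Passing the reviewer in as a callback keeps the override path explicit: the automated branch can never approve a high-risk action on its own, and every decision, automatic or human, leaves a record.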

Yes, but it’s a trade-off. Bringing multiple stakeholders into rule-setting (open development guidelines, transparent decision logs, ethics boards) spreads responsibility and reduces single-point failure. While more “moving parts” introduce extra complexity and new failure paths, diversified oversight makes it far harder for any single glitch, goal-drift, or actor to push the system outside agreed boundaries.

Generative AI won’t “hijack” your work, because the output mirrors the guidance you provide. Control comes from deliberate use: begin with a clear brief (audience, tone, structure), craft specific prompts and follow-ups to keep the model on target, and fact-check every claim. Treat the draft as raw material you refine into your own voice, so that you remain fully accountable for the final text.

The question of who controls AI development is multifaceted, involving technology companies, research institutions, and governments. This reinforces the need for comprehensive AI control systems that include technical safeguards, ethical guidelines, and legal frameworks. Maintaining a balanced human-AI control relationship requires ongoing vigilance and adaptive governance.

Controlling the output of generative AI systems is essential to ensure accuracy, safety, and ethical use. Unchecked AI outputs can spread misinformation, generate harmful or biased content, and violate privacy or intellectual property rights. By implementing governance, risk management, and compliance measures, organizations can align AI outputs with regulatory standards and ethical guidelines. This helps maintain trust, prevent misuse, and ensure AI serves its intended purpose responsibly.