April 8, 2025

How to Build an AI Use Policy to Safeguard Against AI Risk


Let’s start with a “what if” scenario. You’re leading a team that’s just started using a powerful AI assistant. At first, it’s smooth. Tasks get done faster. People are impressed. Then something odd happens. The AI makes a strange call. Customers start asking why. No one is quite sure how the decision was made.

Now there’s a problem.

The tool isn’t the issue. The real problem is this: no one planned for how AI should be used, tracked, or explained. There were no written guardrails, no shared understanding, and no one designated to keep the system in check. And when that happens, the risks get real. Fast.

Without an AI use policy, organizations can face serious fallout. Think compliance violations, operational failures, customer complaints, and even public backlash. When people don’t understand how decisions are being made (or worse, when those decisions cause harm), it erodes trust. That includes trust from regulators, your customers, and even your own team.

Let’s take the time to walk through what a good policy looks like. Our short guide is divided into five sections to help you understand the whole process:

- What an AI Use Policy is (and who needs one)

- The core elements every policy should include

- How to build the policy, step by step

- Best practices from the field

- How a platform like Lumenova AI can support the process

What Is an AI Use Policy, and Who Needs One?

An AI use policy is a set of written guidelines that explain how your organization will use AI. It covers what is permitted, what is restricted, and what must be reviewed, tracked, reported, and documented. A good policy is simple enough to understand and implement but thorough enough to manage risk.

Any organization that uses AI in decision-making, data analysis, automation, or customer interactions should have one. This includes:

- Financial institutions (banks, lenders, insurers)

- Consulting and professional services firms

- Technology companies

- Healthcare providers

- Government agencies

- Manufacturing and logistics companies

If AI tools are part of how your business runs, this policy is not optional. It protects your brand, keeps you compliant, and ensures your team uses AI safely and responsibly.

It’s also a leadership tool. Chief AI Officers, Risk Officers, Data Governance leads, and Compliance teams use it to align AI strategy with business goals, legal requirements, and ethical expectations.

Core Parts of an AI Use Policy

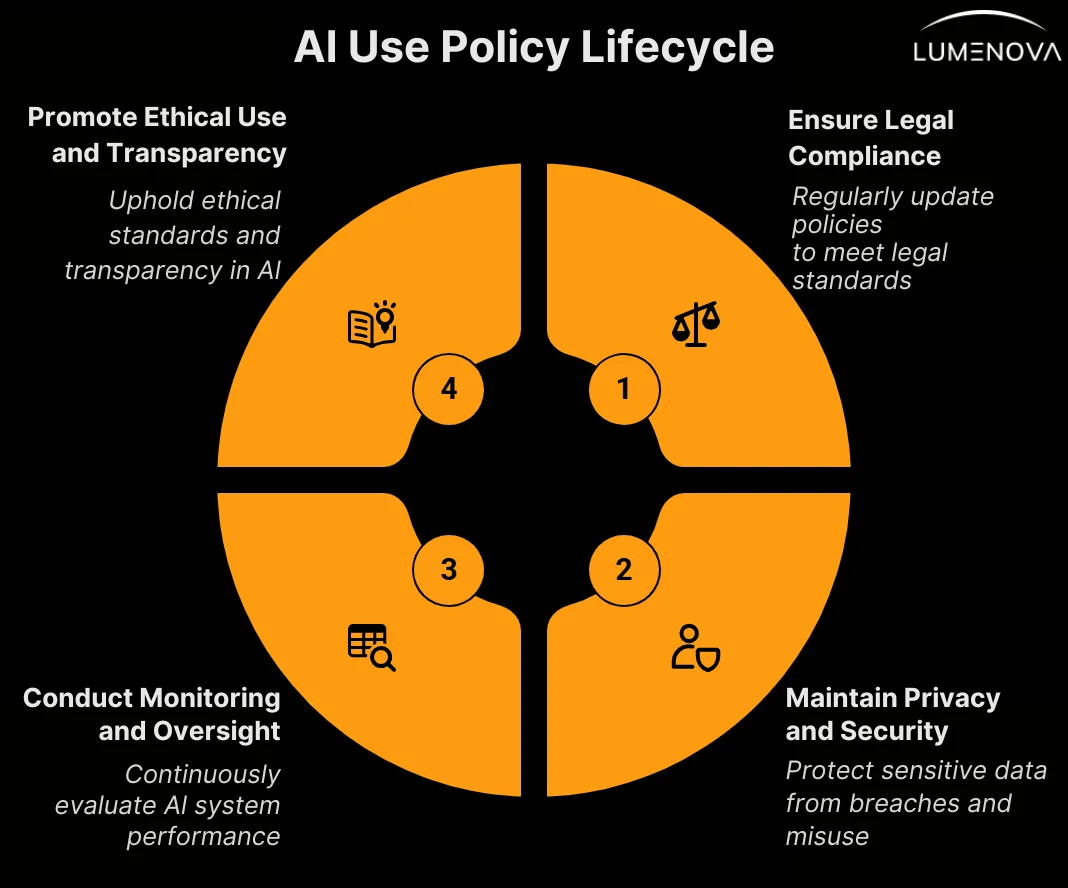

A policy doesn’t have to be long, but it does have to be clear. The most effective ones are built around four pillars:

1. Legal Compliance

Your policy must reflect the laws and rules that apply to your sector and geography. This includes:

- Data privacy laws (e.g., GDPR, CCPA)

- Sector-specific rules (e.g., HIPAA, FINRA)

- Regional AI regulations (e.g., EU AI Act)

You need to explain:

- Who monitors legal changes

- How those changes are built into your AI lifecycle

- What happens if a system is found to be non-compliant

2. Privacy and Security

AI systems work with data (often personal, sensitive, or regulated data). The policy should define:

- How data is collected, stored, shared, and processed

- Data characteristics (e.g., structure, completeness, relevance, accuracy)

- The purpose for which data is collected (e.g., targeted advertising, sales automation)

- Who has access to what

- What data can be used to train models

- What happens if there is a data breach

Without clear rules here, you’re exposed to risk both from attackers and from internal misuse.
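Some teams turn data rules like these into simple checks that run before a training job starts. The Python sketch below is one illustration, not a reference implementation. It assumes a hypothetical classification scheme (`public`, `internal`, `personal`, `sensitive`) and a hypothetical rule that personal data may only be reused for the purposes it was collected for. The names `Dataset` and `may_train_on` are made up for this example.

```python
from dataclasses import dataclass

# Hypothetical classification levels; your policy defines its own.
TRAINABLE_CLASSIFICATIONS = {"public", "internal", "personal"}  # "sensitive" is excluded


@dataclass(frozen=True)
class Dataset:
    name: str
    classification: str            # "public", "internal", "personal" or "sensitive"
    collected_for: frozenset[str]  # purposes stated when the data was collected


def may_train_on(dataset: Dataset, purpose: str) -> tuple[bool, str]:
    """Return (allowed, reason) for training a model on `dataset` for `purpose`."""
    if dataset.classification not in TRAINABLE_CLASSIFICATIONS:
        return False, f"{dataset.classification} data is excluded from model training"
    # Hypothetical rule: personal data may only be reused for its original purposes.
    if dataset.classification == "personal" and purpose not in dataset.collected_for:
        return False, f"'{purpose}' is not a purpose this data was collected for"
    return True, "allowed"


crm = Dataset("crm_contacts", "personal", frozenset({"sales automation"}))
print(may_train_on(crm, "sales automation"))      # (True, 'allowed')
print(may_train_on(crm, "targeted advertising"))  # (False, "'targeted advertising' is not a purpose this data was collected for")
```

Returning a reason alongside the yes/no answer makes each decision easy to log, which supports the documentation requirements described elsewhere in the policy.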

3. Monitoring and Oversight

AI systems evolve. They need to be checked regularly. Your policy should say:

- How often models are reviewed

- What metrics are tracked (accuracy, AI bias, model drift)

- How decisions are documented

- When and how models are retrained

- What triggers a human review

Ongoing oversight is how you catch small issues before they cause real harm.
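One way to make "what triggers a human review" concrete is to list measurable thresholds in the policy. The Python sketch below shows the idea. The metric names, the use of a population stability index for drift, and every threshold value are assumptions for illustration. Replace them with whatever your policy actually defines.

```python
from dataclasses import dataclass

# Hypothetical thresholds; each organization sets its own values in the policy.
MAX_ACCURACY_DROP = 0.05  # absolute drop versus the accuracy recorded at approval time
MAX_DRIFT = 0.2           # e.g. population stability index on key inputs
MAX_OUTCOME_GAP = 0.1     # e.g. difference in approval rates between groups


@dataclass
class ModelMetrics:
    accuracy: float
    baseline_accuracy: float
    drift_score: float
    outcome_gap: float


def review_triggers(m: ModelMetrics) -> list[str]:
    """Return the reasons a model needs human review; an empty list means none."""
    reasons = []
    if m.baseline_accuracy - m.accuracy > MAX_ACCURACY_DROP:
        reasons.append("accuracy dropped beyond tolerance")
    if m.drift_score > MAX_DRIFT:
        reasons.append("input drift above threshold")
    if m.outcome_gap > MAX_OUTCOME_GAP:
        reasons.append("outcome gap between groups above threshold")
    return reasons


latest = ModelMetrics(accuracy=0.86, baseline_accuracy=0.90, drift_score=0.25, outcome_gap=0.04)
print(review_triggers(latest))  # ['input drift above threshold']
```

Returning every triggered reason, rather than stopping at the first one, gives the reviewer the full picture in one pass.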

Want to explore what effective AI oversight really looks like in practice? Check out our guide, “The Strategic Necessity of Human Oversight in AI Systems.” It’s packed with insights to help your organization lead with responsibility.

4. Ethical Use and Transparency

AI isn’t just a technical tool. It reflects your organization’s values. The policy should describe:

- What ethical principles you follow (e.g., fairness, explainability)

- How you make AI decisions clear and understandable to users

- How people can question or appeal those decisions

How to Build an AI Use Policy in 7 Steps

If you don’t have a policy yet, you’re not alone. Many companies are just now writing their first. Here’s how we recommend getting started:

Step 1: Build a Core Team

Form a small team from legal, compliance, risk, IT, data science, and business operations. This working group will:

- Set the scope

- Draft the policy

- Test it against real systems

- Manage updates over time

Step 2: Map Your Current AI Use

Before writing anything, list every AI system in your organization. For each one, answer:

- What does it do?

- Who owns it?

- What data does it use?

- What decisions does it make or influence?

- What risks and impacts does it pose?

- What risk management and oversight protocols are required?

This shows you where the real risk is and helps you prioritize your work.
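An inventory is easier to keep current when every entry answers the same questions. Here is a minimal Python sketch that assumes a hypothetical three-level risk tier. The `AISystem` record mirrors the questions above, and `prioritize` simply sorts entries so high-risk systems come first. The example systems are invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical three-level scale; a lower number means "handle first".
RISK_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class AISystem:
    name: str
    purpose: str             # What does it do?
    owner: str               # Who owns it?
    data_sources: list[str]  # What data does it use?
    decisions: str           # What decisions does it make or influence?
    risk_tier: str           # What risks and impacts does it pose? ("high", "medium", "low")
    controls: list[str] = field(default_factory=list)  # Required oversight protocols


def prioritize(inventory: list[AISystem]) -> list[AISystem]:
    """Return the inventory ordered so the highest-risk systems come first."""
    return sorted(inventory, key=lambda s: RISK_ORDER[s.risk_tier])


inventory = [
    AISystem("support-chatbot", "Answers customer FAQs", "Customer Ops",
             ["help-center articles"], "Routes tickets", "low"),
    AISystem("credit-scoring", "Scores loan applications", "Lending",
             ["application forms", "credit bureau data"], "Approves or declines loans",
             "high", ["human review of declines", "quarterly bias audit"]),
]
print([s.name for s in prioritize(inventory)])  # ['credit-scoring', 'support-chatbot']
```

A spreadsheet with the same columns works just as well. What matters is that every system is described the same way, so gaps stand out.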

Step 3: Set Clear Objectives

Your AI policy should serve a purpose beyond compliance. Define why you’re using AI in the first place. Examples:

- Speed up internal workflows

- Improve decision accuracy

- Enhance customer experience

- Cut operational costs

Your policy should protect these benefits while preventing unwanted side effects.

Step 4: Identify Your Principles

Start by anchoring your policy to established Responsible AI (RAI) principles. These help ensure your AI use supports not just business goals, but also safety, accountability, and fairness.

You don’t need to adopt every RAI principle out there, just the ones that make sense for your organization and how your AI systems are used. Common ones include:

- Human oversight

- Fair outcomes

- Transparency

- Privacy by design

These values will shape how you write rules, handle AI risk, and communicate AI use (both inside your organization and with the outside world).

Step 5: Draft the Policy

Now you write. Include:

- A short intro explaining the purpose of the policy

- Definitions for key terms (like “AI system” or “high-risk model”)

- Rules for model development, testing, and deployment

- Steps for ongoing monitoring, review, and documentation

- Reporting lines and stakeholder responsibilities

Keep the tone simple. Use plain language. Make it readable for both engineers and business users.

Step 6: Test and Review

Once it’s drafted, test it against two or three real use cases. Ask:

- Does this policy help the team act safely?

- Are the rules clear?

- Is anything missing?

Get feedback, adjust, and prepare it for rollout.

Step 7: Roll It Out and Train Teams

Don’t just send a PDF. Host town halls. Run workshops. Add training to onboarding. Make sure:

- Everyone knows where to find the policy

- People understand why it matters

- Teams know how to apply it to their work

Best Practices

The strongest AI policies have a few things in common:

- Short and clear. 10 pages or fewer, written in plain English.

- Owned and updated. Each section has a named owner. Reviews happen once a year.

- Tied to real tools. Policy links to templates, checklists, and workflows people actually use.

- Visible. Posted in your internal knowledge base, not buried in a compliance folder.

- Tested. Used in real AI projects to make sure it works.

- Adaptable. Can be revised quickly as regulations, tools, and use cases change.
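The "owned and updated" practice can be checked automatically. This Python sketch assumes each policy section records a named owner and a last-review date, and it uses a 365-day interval to stand in for the annual review. `PolicySection` and `sections_needing_attention` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

REVIEW_INTERVAL = timedelta(days=365)  # stands in for "reviews happen once a year"


@dataclass
class PolicySection:
    title: str
    owner: Optional[str]  # named owner; None means nobody is assigned
    last_reviewed: date


def sections_needing_attention(sections: list[PolicySection], today: date) -> list[str]:
    """List sections that lack a named owner or are past their annual review."""
    issues = []
    for s in sections:
        if not s.owner:
            issues.append(f"{s.title}: no named owner")
        if today - s.last_reviewed > REVIEW_INTERVAL:
            issues.append(f"{s.title}: review overdue")
    return issues


sections = [
    PolicySection("Data use", "Privacy lead", date(2024, 1, 10)),
    PolicySection("Monitoring", None, date(2025, 3, 1)),
]
print(sections_needing_attention(sections, today=date(2025, 4, 8)))
# ['Data use: review overdue', 'Monitoring: no named owner']
```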

And remember, a policy that lives only on paper is no policy at all.

How Lumenova AI Helps

Writing the policy is just the first step. The harder part is living by it. That is where AI governance platforms such as Lumenova AI come in.

We help organizations:

- Map AI systems across departments

- Assign ownership and review cycles

- Monitor model performance over time

- Track regulatory compliance

- Generate audit-ready reports

- Monitor approval workflows based on risk

It doesn’t just store your policy. It helps enforce it day to day. That means fewer surprises, stronger oversight, and faster responses when things change.

Conclusion

AI is no longer a future concern. It’s here, in your tools, your decisions, and your products. The risks are real, but so are the opportunities. An AI use policy helps you stay in control. It gives your teams the clarity they need to move fast and remain safe. It builds trust across your company, with your clients, and with your regulators.

And if you don’t have one yet, don’t wait for a problem to show up. The best time to build a policy is before it’s needed. The second-best time is now.

Curious how Lumenova AI can elevate your organization’s approach to AI governance? Book a personalized demo to see our platform in action, explore features aligned with your needs, and get answers to your most pressing questions from our team.

Frequently Asked Questions

What is an AI use policy?

An AI use policy is a targeted set of rules that governs how AI systems are selected, deployed, monitored, and retired inside an organization. It sits beneath the broader AI governance framework and focuses specifically on usage protocols (permitted applications, prohibited practices, data-handling rules, human-in-the-loop requirements, and incident-reporting lines). By clarifying these guardrails up front, the policy prevents ad hoc experimentation, helps teams meet legal and ethical obligations (e.g., GDPR, EU AI Act), and provides a transparent basis for ongoing oversight and risk management.

Who needs an AI use policy?

Any organization that deploys AI (regardless of sector or impact level) should adopt an AI use policy to ensure responsible, transparent, and accountable practice.

What should an AI use policy include?

An effective AI use policy is built around four key pillars: legal compliance, data privacy and security, monitoring and oversight, and ethical use with transparency. It should explain how AI systems comply with laws, how sensitive data is handled, how models are monitored over time, and how ethical principles like fairness and explainability are upheld in practice.

How do you build an AI use policy?

Building a policy involves seven steps: assemble a core team, map current AI use, define clear objectives, identify responsible AI principles, draft the policy, test it against real scenarios, and roll it out with training and support. This process ensures the policy is practical, understandable, and aligned with real business needs and regulatory expectations.

How does Lumenova AI help with AI use policies?

Lumenova AI helps operationalize AI use policies by mapping AI systems, assigning accountability, monitoring performance, tracking compliance, and generating audit-ready reports. It transforms static policies into living frameworks that teams can apply in daily workflows, ensuring consistent oversight and faster response when risks or changes arise.