February 3, 2026

What Effective Generative AI Governance Looks Like in 2026

Contents

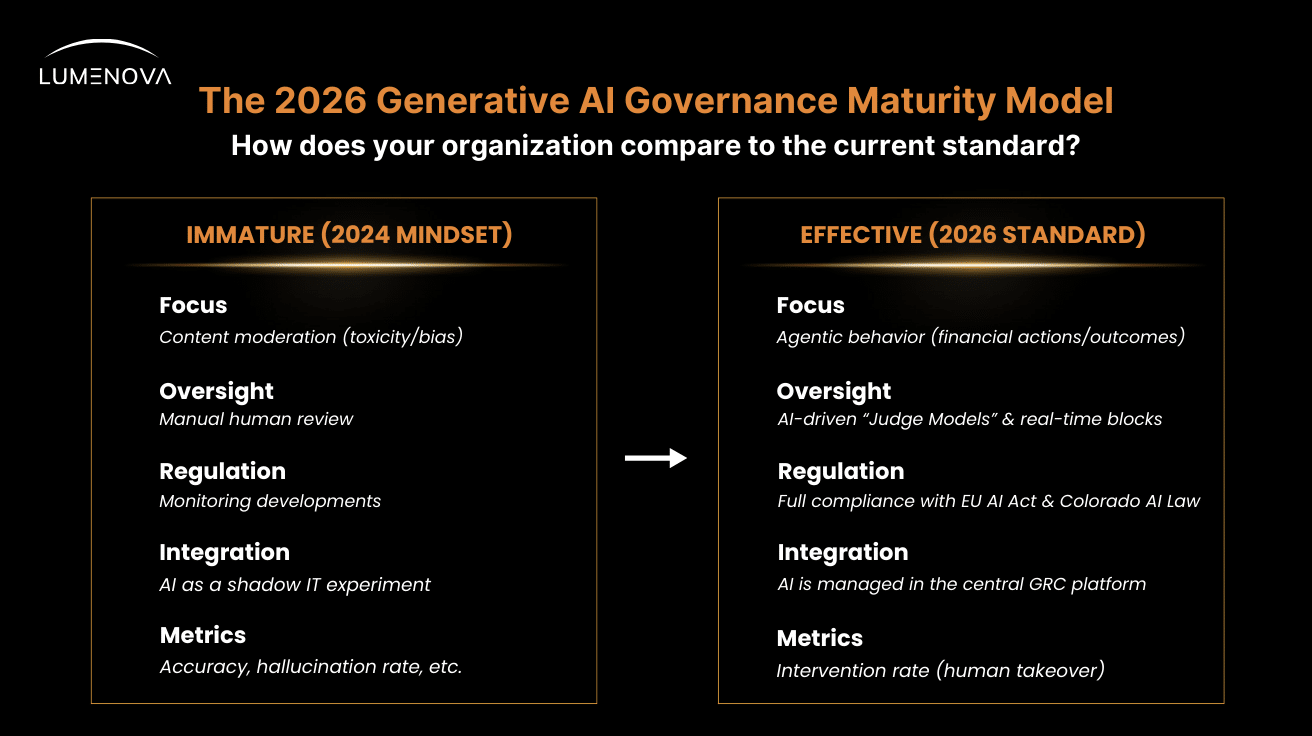

If 2024 was the year of unchecked experimentation, and 2025 was the year of “compliance scrambles”, 2026 has officially ushered in the era of operationalized trust.

For leaders in finance, insurance, and highly regulated sectors, the definition of effective generative AI governance has fundamentally shifted. It is no longer a bureaucratic brake designed to slow down innovation; it is the engine that enables it.

In our State of AI 2025 overview, we identified the critical pivot point where AI ceased to be a discretionary tool and became technological infrastructure. We noted that governance was moving from “check-the-box” exercises to continuous, auditable management practices.

Now, as we enter 2026, that prediction has solidified into hard reality. The “pilot purgatory” is empty. Banks are deploying autonomous wealth managers, insurers are automating claims via agents, and hedge funds are synthesizing alpha – all powered by governance frameworks that have evolved as rapidly as the models themselves.

Here is what effective generative AI governance looks like today, and how leading organizations are separating themselves from the laggards.

Generative AI Governance as “Credit Rating”

Two years ago, governance was a back-office function. Today, it is a front-office asset. In the institutional markets of 2026, a firm’s AI governance maturity is directly correlated with its ability to win business and secure capital.

Institutional clients now treat AI governance certifications (such as ISO/IEC 42001, the international standard for AI management systems) like credit ratings. Due diligence questionnaires demand proof of model lineage, training data attribution, and hallucination rates. If a firm cannot prove the safety lineage of its AI agents, it risks losing the mandate. In this environment, governance isn’t just about avoiding fines; it’s about preserving the “trust premium” required to operate in high-stakes markets.
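To make “proof of model lineage” concrete, here is a minimal Python sketch of a tamper-evident lineage record. The `LineageRecord` fields, model names, and metric values are hypothetical illustrations, not a questionnaire standard; the idea is simply that each model version stores the SHA-256 fingerprint of its predecessor, so any after-the-fact edit to an earlier record breaks the chain.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical evidence record for one deployed model version."""
    model_id: str
    base_model: str                      # upstream model this version derives from
    training_datasets: tuple[str, ...]   # dataset identifiers used in training/fine-tuning
    hallucination_rate: float            # measured on an internal eval set (0.0 to 1.0)
    parent_fingerprint: str = ""         # fingerprint of the previous version, "" for the first

    def fingerprint(self) -> str:
        """SHA-256 over a canonical JSON encoding, so any field edit changes the hash."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def verify_chain(records: list[LineageRecord]) -> bool:
    """Check that each record points at the fingerprint of the one before it."""
    if not records or records[0].parent_fingerprint != "":
        return False
    return all(curr.parent_fingerprint == prev.fingerprint()
               for prev, curr in zip(records, records[1:]))


# Illustrative values only.
v1 = LineageRecord("claims-agent-v1", "base-llm", ("claims-2024",), 0.031)
v2 = LineageRecord("claims-agent-v2", "base-llm", ("claims-2024", "claims-2025"), 0.024,
                   parent_fingerprint=v1.fingerprint())
print(verify_chain([v1, v2]))  # True
```

Quietly editing `v1` (for example, lowering its reported hallucination rate) changes its fingerprint, so `verify_chain` would then return `False` for the stored chain.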

The Rise of Guardrail Latency and the Judge Model

The old model of Human-in-the-Loop – where a human reviews every chat log – is operationally impossible at 2026 scale. Effective governance has shifted to continuous assurance powered by automated Generative AI guardrails.

These guardrails are no longer just passive filters; they are active, intelligent defense layers. Leading engineering teams now budget for guardrail latency – the 300–500 milliseconds required for a specialized “Judge Model” to audit an agent’s output before it is executed.
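A latency budget only helps if it is enforced. The sketch below assumes the Judge Model is reached through an async call; the `judge` coroutine is a hypothetical stand-in with a simulated delay and a toy rule. The verdict must arrive within the budget (500 ms, the upper end of the range above), and if it does not, the output is escalated rather than released.

```python
import asyncio

GUARDRAIL_BUDGET_S = 0.5  # upper end of the 300-500 ms guardrail budget


async def judge(draft: str) -> bool:
    """Hypothetical stand-in for a Judge Model call; True means 'approve'."""
    await asyncio.sleep(0.05)  # simulated inference time
    return "guaranteed returns" not in draft.lower()


async def release_with_budget(draft: str, budget_s: float = GUARDRAIL_BUDGET_S) -> str:
    """Run the judge under a hard time budget and fail closed on timeout."""
    try:
        approved = await asyncio.wait_for(judge(draft), timeout=budget_s)
    except asyncio.TimeoutError:
        return "escalate"  # no verdict in time: never release unchecked output
    return "release" if approved else "block"


print(asyncio.run(release_with_budget("Your claim is approved.")))                 # release
print(asyncio.run(release_with_budget("Your claim is approved.", budget_s=0.01)))  # escalate
```

Failing closed is the key design choice: a slow or unavailable judge degrades throughput, not safety.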

For example, in an insurance context:

- The Primary Model drafts a claim denial letter.

- The Judge Model instantly scans the draft against unfair claims settlement practices regulations and internal policy.

- The Result: If aggressive language or bias is detected, the Judge Model blocks the output and routes it to a human compliance officer.

This automated oversight allows enterprises to deploy Generative AI guardrails that manage risk in real time, intercepting hallucinations and policy violations without bottlenecking workflows.
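The routing logic of the claims example can be sketched in a few lines. This is an illustration under a loud simplification: a real Judge Model would be an LLM or trained classifier, whereas here a hypothetical keyword list (`FLAGGED_PHRASES`) stands in for it. What the sketch does show is the control flow: the primary model's draft is either sent or held in a compliance review queue, never both.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical, illustrative phrases; a real judge would score tone and bias with a model.
FLAGGED_PHRASES = ("you people", "obviously fraudulent", "we will never pay")


@dataclass
class Verdict:
    action: str         # "send" or "route_to_compliance"
    reasons: list[str]  # which checks fired


def judge_draft(draft: str) -> Verdict:
    """Screen a primary-model draft; any hit blocks it."""
    text = draft.lower()
    hits = [p for p in FLAGGED_PHRASES if p in text]
    return Verdict("route_to_compliance", hits) if hits else Verdict("send", [])


def handle_denial_letter(draft: str, review_queue: list[str]) -> Optional[str]:
    """Return the letter if it may be sent; otherwise queue it for a compliance officer."""
    if judge_draft(draft).action == "send":
        return draft
    review_queue.append(draft)
    return None


queue: list[str] = []
ok = handle_denial_letter("Your claim is denied under section 4.2 of your policy.", queue)
bad = handle_denial_letter("This claim is obviously fraudulent.", queue)
print(ok is not None, bad is None, len(queue))  # True True 1
```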

Governing Agentic AI: Permissions and Kill Switches

The most critical evolution in 2026 is the shift from governing content (what the AI says) to governing behavior (what the AI does). We are no longer just worried about a chatbot being rude; we are worried about a financial agent executing a bad trade or approving a fraudulent loan.

Effective governance for agentic AI relies on two non-negotiable mechanisms:

Tiered Action Allowances

Just as employees have tiered authority, AI agents operate under strict action allowances, for example:

- Tier 3 (Low Risk): An agent can draft emails and answer FAQs.

- Tier 2 (Mid Risk): A procurement agent can execute purchases up to $500 autonomously.

- Tier 1 (Critical): Any transaction over $10,000 requires a cryptographic “human key”—a digital approval from a verified supervisor.
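The tiers above translate naturally into a default-deny authorization check. This is a minimal sketch with hypothetical action names. Two assumptions are worth flagging. First, the example tiers leave the $500-$10,000 band unspecified, so the sketch simply escalates it to human review. Second, the “human key” is modeled with an HMAC over the exact transaction ID and amount using a shared secret; a production system would more likely use an asymmetric signature bound to the supervisor’s identity.

```python
import hashlib
import hmac
from decimal import Decimal
from typing import Optional

AUTONOMOUS_LIMIT = Decimal("500")       # Tier 2 ceiling for autonomous purchases
HUMAN_KEY_THRESHOLD = Decimal("10000")  # Tier 1: above this, a signed approval is required
SUPERVISOR_SECRET = b"demo-secret-do-not-use"  # assumption: held by the approval service


def sign_approval(transaction_id: str, amount: Decimal, secret: bytes = SUPERVISOR_SECRET) -> str:
    """What a supervisor's approval tool might produce for one specific transaction."""
    message = f"{transaction_id}:{amount}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authorize(action: str, amount: Decimal = Decimal("0"), transaction_id: str = "",
              human_key: Optional[str] = None) -> str:
    """Return 'allow', 'escalate', or 'deny' for one proposed agent action."""
    if action in ("draft_email", "answer_faq"):  # Tier 3: low risk
        return "allow"
    if action != "purchase":
        return "deny"                            # unknown actions are refused by default
    if amount <= AUTONOMOUS_LIMIT:               # Tier 2: autonomous purchase
        return "allow"
    if amount <= HUMAN_KEY_THRESHOLD:
        return "escalate"                        # unspecified band: assume human review
    if human_key is None:                        # Tier 1 without a key
        return "escalate"
    expected = sign_approval(transaction_id, amount)
    return "allow" if hmac.compare_digest(expected, human_key) else "deny"


key = sign_approval("txn-42", Decimal("25000"))
print(authorize("purchase", Decimal("120")))                   # allow
print(authorize("purchase", Decimal("25000"), "txn-42"))       # escalate
print(authorize("purchase", Decimal("25000"), "txn-42", key))  # allow
print(authorize("purchase", Decimal("26000"), "txn-42", key))  # deny
```

Because the signature covers both the transaction ID and the amount, a key approved for one trade cannot be replayed for a larger one.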

The Kill Switch Protocol

Every autonomous system in 2026 must have a hard-coded “kill switch”. If an investment agent begins to drift outside its risk parameters (e.g., violating concentration limits), the governance layer must be able to sever its API access instantly, independent of the model’s own logic.
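One way to keep the kill switch independent of the model’s own logic is to never hand the agent its API credentials at all. In this minimal sketch (the `KillSwitchGateway` class, its concentration check, and the ticker names are hypothetical), every order passes through a gateway. A risk monitor running outside the model trips a thread-safe flag, and the gateway exposes no method to clear it.

```python
import threading
from typing import Callable


class KillSwitchGateway:
    """Hypothetical gateway between an agent and its trading API."""

    def __init__(self, send_order: Callable[[str, float], str], max_position_weight: float):
        self._send_order = send_order           # the real API call, injected and hidden from the agent
        self._max_weight = max_position_weight  # e.g. 0.10 = 10% concentration limit
        self._killed = threading.Event()        # safe to set from a separate monitor thread

    def check_risk(self, portfolio_weights: dict[str, float]) -> None:
        """Called by the risk monitor, not the model: trip on any concentration breach."""
        if any(w > self._max_weight for w in portfolio_weights.values()):
            self._killed.set()

    def execute(self, ticker: str, quantity: float) -> str:
        """The only path the agent has to the API; refused once the switch is tripped."""
        if self._killed.is_set():
            raise PermissionError("kill switch engaged: API access revoked")
        return self._send_order(ticker, quantity)


gateway = KillSwitchGateway(lambda t, q: f"filled {q} {t}", max_position_weight=0.10)
print(gateway.execute("ACME", 100))  # filled 100 ACME
gateway.check_risk({"ACME": 0.18, "BETA": 0.05})
try:
    gateway.execute("ACME", 50)
except PermissionError as err:
    print(err)  # kill switch engaged: API access revoked
```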

The Sovereign Cloud Reality

The dream of a borderless global AI infrastructure has been replaced by the reality of data sovereignty. To comply with the EU AI Act, most of whose obligations apply from August 2, 2026, global firms have bifurcated their infrastructure.

- EU Instances: Operations in Frankfurt or Paris run on sovereign clouds where data never crosses the Atlantic and high-risk deployments are backed by Fundamental Rights Impact Assessments (FRIA).

- US Instances: Operations in New York or San Francisco are optimized for the specific liability frameworks of state-level regulations like the Colorado AI Act and California SB 53.

Effective governance in 2026 means maintaining this complex compliance layer – one that is robust enough to satisfy EU regulators yet flexible enough to adapt to the speed of US innovation.
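At the request level, this bifurcation often comes down to a routing rule with no cross-region fallback. The sketch below is a simplified illustration, not legal logic: the endpoints are placeholders, the EU country set is abbreviated, everything non-EU defaults to the US stack, and the FRIA gate is a hypothetical policy check for high-risk EU use cases (for example, credit scoring or life and health insurance pricing).

```python
from dataclasses import dataclass

EU_ENDPOINT = "https://eu.example.internal/agent"  # placeholder for a Frankfurt/Paris sovereign stack
US_ENDPOINT = "https://us.example.internal/agent"  # placeholder for a New York/San Francisco stack
EU_COUNTRIES = {"DE", "FR", "IE", "IT", "NL", "ES"}  # abbreviated for the sketch


@dataclass
class Request:
    customer_country: str  # ISO 3166-1 alpha-2 code of the data subject
    high_risk: bool        # whether the use case is classed as high-risk
    fria_on_file: bool     # whether a Fundamental Rights Impact Assessment exists


def route(req: Request) -> str:
    """Pick the jurisdiction-specific stack; EU requests never fall back to the US stack."""
    if req.customer_country in EU_COUNTRIES:
        if req.high_risk and not req.fria_on_file:
            raise PermissionError("high-risk EU use case blocked: no FRIA on file")
        return EU_ENDPOINT
    return US_ENDPOINT


print(route(Request("DE", high_risk=True, fria_on_file=True)))  # https://eu.example.internal/agent
```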

The Cultural Shift: Move Fast and Prove Things

Perhaps the biggest change is cultural. The “Move Fast and Break Things” era is dead. The mantra for 2026 is “Move Fast and Prove Things”.

Compliance teams have evolved beyond lawyers to include Model Risk Analysts and Prompt Engineers. The role of the AI Risk Officer has become as central to the C-suite as the CISO. In this culture, the ability to innovate is predicated on the ability to document that innovation.

Our Conclusion

As we look toward the future, the question for leaders is no longer “Can we use AI?” but “Can we trust our AI to act on our behalf?”

The winners in 2026 aren’t just the companies with the smartest models. They are the companies with the smartest guardrails – because in a regulated world, control is the ultimate competitive advantage.

Is your organization ready for the August 2026 EU AI Act deadline?

Contact Lumenova AI today to automate your governance journey.