January 8, 2026

Is Your Existing AI Governance Enough? Why GenAI Demands a New Framework

The enterprise AI landscape has undergone a seismic shift. For the better part of a decade, artificial intelligence in a business context largely meant predictive analytics – forecasting churn, optimizing supply chains, or detecting fraud. These systems were complex, but they were generally contained.

Then came Generative AI.

Suddenly, AI wasn’t just analyzing numbers; it was writing code, drafting legal contracts, and interacting directly with customers. The democratization of these powerful tools has outpaced organizational control, creating a “shadow AI” environment where employees use unvetted tools to speed up their workflows, often because they are expected to deliver faster. While the productivity gains are undeniable, the risks can be severe: data leakage, legal liability, and reputational damage.

To bridge the gap between innovation and security, organizations must move beyond traditional IT policies and adopt a dedicated Gen AI governance framework. This isn’t just about compliance; it’s about creating a safe harbor where innovation can thrive without sinking the ship.

The Shift: Traditional Predictive AI vs. Generative AI

To understand why our old rulebooks don’t work, we first need to know how the game has changed. Traditional AI (predictive) and Generative AI are fundamentally different technologies requiring different oversight mechanisms.

Predictive AI is typically discriminative. It looks at historical data to classify input or predict future outcomes (e.g., “Is this email spam?” or “What will sales look like in Q3?”). It is often deterministic and easier to validate against ground truth.

Generative AI, on the other hand, is creative. It generates entirely new data – text, images, code – that resembles the training data but doesn’t replicate it exactly. It is probabilistic, meaning the same prompt can yield different answers at different times.
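To make “probabilistic” concrete, here is a minimal sketch of how sampling produces different answers from the same input. The toy scores in `TOY_LOGITS` and the `sample_token` helper are illustrative assumptions, not any vendor’s API; real models choose among tens of thousands of tokens, but the principle is the same.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick one token from toy scores; temperature 0 means greedy (argmax)."""
    if temperature == 0:
        return max(logits, key=logits.get)
    # Softmax with temperature: higher temperature flattens the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = [math.exp(s - peak) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]

# Hypothetical scores a model might assign to three candidate next words.
TOY_LOGITS = {"approve": 2.0, "review": 1.5, "reject": 0.5}

rng = random.Random(0)
greedy = {sample_token(TOY_LOGITS, 0, rng) for _ in range(200)}
sampled = {sample_token(TOY_LOGITS, 1.0, rng) for _ in range(200)}
```

With greedy decoding the set of answers contains a single word; with sampling it contains several. That variability is what makes validating GenAI against a single “ground truth” answer harder.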

For a deeper dive into these technical distinctions, Zapier provides an excellent breakdown of Generative AI vs. Predictive AI.

This shift from analysis to creation is precisely why legacy governance models are failing.

Where Traditional Governance Frameworks Fall Short

Most existing IT and data governance frameworks were built for a deterministic world. They focus heavily on access control (who can see the data?) and data lineage (where did the data come from?). These controls remain important, but the frameworks were never designed for systems that create content, reason probabilistically, and interact directly with humans. As a result, they fail to address the unique behaviors of Large Language Models (LLMs):

- The “black box” & explainability: In traditional modeling, you could often trace the decision path of a decision tree or regression model. With GenAI, explaining why a model produced a specific hallucination or biased response is notoriously difficult (check out our team’s AI jailbreak experiments for proof!). Traditional frameworks also rarely account for non-deterministic outputs where “right” and “wrong” are subjective.

- Output monitoring vs. input security: Traditional security focuses on preventing malicious inputs (SQL injection). GenAI introduces the risk of prompt injection (tricking the AI into ignoring its rules) and requires intense monitoring of the output. A standard firewall cannot detect if your AI chatbot just promised a customer a $1 product or used hate speech.

- User input risks (IP leakage): In a traditional app, a user queries a database. With some public GenAI models, the user’s query may be retained and used as training data for future model versions. Employees pasting proprietary code or sensitive meeting notes into public LLMs is a massive data leakage vector that standard DLP (Data Loss Prevention) tools often miss.
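As a rough illustration of what output monitoring could look like, the sketch below scans a chatbot reply for two problems before it reaches the customer: a quoted price below an allowed floor and the presence of blocked phrases. The `check_reply` function, the price pattern, and the thresholds are hypothetical; a production system would likely combine rules like these with a dedicated moderation model.

```python
import re

# Matches dollar amounts such as $1, $19.99 or $1,200.
PRICE_PATTERN = re.compile(r"\$\s?(\d+(?:,\d{3})*(?:\.\d{1,2})?)")

def check_reply(reply, min_price, blocked_terms):
    """Return a list of policy violations found in a model reply."""
    violations = []
    for match in PRICE_PATTERN.finditer(reply):
        price = float(match.group(1).replace(",", ""))
        if price < min_price:
            violations.append(f"price below floor: ${price:.2f}")
    lowered = reply.lower()
    for term in blocked_terms:
        if term.lower() in lowered:
            violations.append(f"blocked term: {term}")
    return violations

risky = check_reply("Sure, you can have the laptop for $1.", 50.0, ["guaranteed refund"])
safe = check_reply("Our plan costs $1,200 per year.", 50.0, ["guaranteed refund"])
```

Here `risky` contains one violation and `safe` is empty. A reply with violations would be held back or routed to a human rather than sent.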

Navigating the Evolving Regulatory Landscape

A robust Gen AI governance framework must now account for a complex web of emerging regulations. The “wait and see” approach is no longer viable as regulators and standards bodies have moved from theory to practice.

1. The EU AI Act

The EU AI Act is the world’s first comprehensive AI law. Crucially for GenAI, it imposes specific obligations on General Purpose AI (GPAI) models.

- Transparency: Providers must maintain detailed technical documentation and comply with copyright laws.

- Training data: Providers must publish a sufficiently detailed summary of the content used to train the model.

- High-risk classification: If your GenAI system is used in critical areas (e.g., HR recruitment, credit scoring, medical advice), it falls under “High Risk” and faces strict conformity assessments, human oversight requirements, and quality management obligations.

2. ISO/IEC 42001

Published in late 2023, ISO/IEC 42001 is the global standard for AI Management Systems (AIMS). Think of it as “ISO 27001 for AI.”

- It doesn’t tell you how to build a model, but how to manage the organization building it.

- It requires a “Plan-Do-Check-Act” cycle specifically for AI, mandating that leadership demonstrates commitment, risks are continuously assessed, and controls are implemented to mitigate those risks (including GenAI-specific risks like bias).

3. NIST AI Risk Management Framework (AI RMF)

The NIST AI RMF is voluntary but highly influential, especially in the US. It breaks governance into four functions: Govern, Map, Measure, and Manage.

- For GenAI, NIST emphasizes the “Measure” function – requiring organizations to quantitatively and qualitatively assess validity, reliability, safety, and explainability before deployment.

- It specifically highlights the need to manage “socio-technical” risks, acknowledging that GenAI affects people and society (e.g., deepfakes, bias) in ways traditional code does not.

The Business Risks of Governance Gaps

Failing to implement a specialized Gen AI governance framework exposes the organization to risks that go far beyond a “computer glitch.”

- Intellectual property (IP) leakage: When employees use public GenAI tools for work, they may inadvertently hand over trade secrets to the model provider. Samsung, for instance, famously restricted GenAI use after engineers leaked proprietary code to ChatGPT.

- Brand reputation damage: GenAI hallucinations are confident but wrong. Air Canada was held liable when its chatbot invented a refund policy that didn’t exist. Without governance rails, your AI is a loose cannon representing your brand.

- Regulatory fines: Non-compliance with the EU AI Act can lead to fines of up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious violations.

- Shadow AI & IT sprawl: Without a sanctioned framework, business units are bound to procure their own AI tools. This leads to fragmented data silos, unvetted vendors, and a complete lack of visibility for the CISO.
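To put the fine ceiling in perspective, the short calculation below applies the top-tier rule of €35 million or 7% of global annual turnover, whichever is higher. The `max_fine_eur` helper and the example turnover figures are illustrative assumptions; lower tiers apply to other violations, and actual penalties are set case by case.

```python
FIXED_CAP_EUR = 35_000_000
TURNOVER_PERCENT = 7

def max_fine_eur(global_turnover_eur):
    """Upper bound of the top-tier fine: the higher of the two ceilings."""
    turnover_based = global_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)

large_company = max_fine_eur(2_000_000_000)  # turnover of EUR 2 billion
small_company = max_fine_eur(100_000_000)    # turnover of EUR 100 million
```

For the larger company the turnover-based ceiling (€140 million) applies; for the smaller one the fixed €35 million ceiling is the higher figure.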

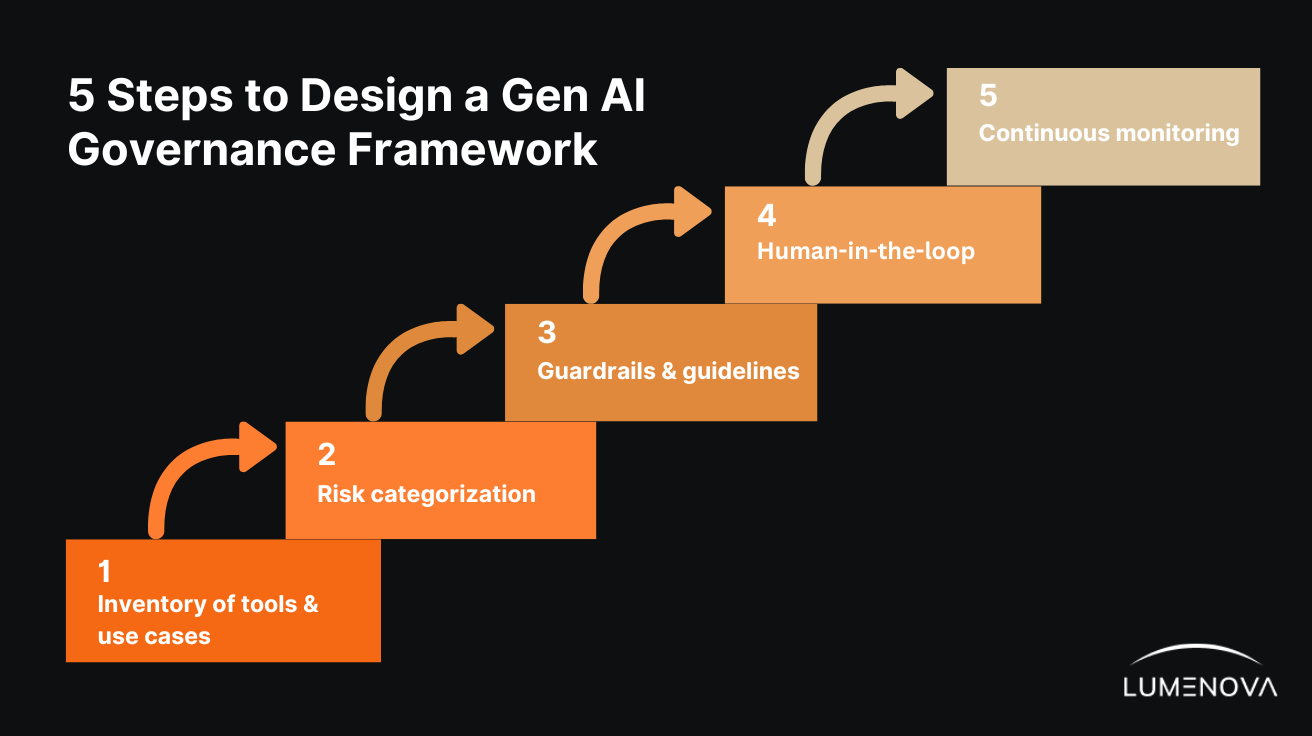

A Roadmap for Evolving Your AI Governance

To move from chaos to control, organizations need a roadmap that evolves their existing governance into a Gen AI governance framework.

Phase 1: Discovery & Inventory

You cannot govern what you cannot see.

- Scan the network: Identify which AI tools are currently being accessed by employees.

- Register use cases: Create a mandatory registry for all GenAI projects. Ask: Is this internal-facing or customer-facing? Does it use PII (Personally Identifiable Information)?
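A use case registry does not need to start as a heavyweight system. The sketch below shows one possible shape, assuming a hypothetical `UseCase` record that captures the two questions above (customer-facing? uses PII?) plus a name and owner.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class UseCase:
    name: str
    owner: str
    customer_facing: bool
    uses_pii: bool

class UseCaseRegistry:
    """In-memory registry; a real one would sit on a database or GRC tool."""

    def __init__(self):
        self._entries = {}

    def register(self, use_case):
        if use_case.name in self._entries:
            raise ValueError(f"use case already registered: {use_case.name}")
        self._entries[use_case.name] = use_case

    def needing_review(self):
        """Names of use cases that are customer-facing or touch PII."""
        return sorted(
            uc.name for uc in self._entries.values()
            if uc.customer_facing or uc.uses_pii
        )

registry = UseCaseRegistry()
registry.register(UseCase("support-chatbot", "customer-care", True, True))
registry.register(UseCase("meeting-summarizer", "operations", False, False))
```

Calling `registry.needing_review()` here returns only the support chatbot, which gives the governance team a first shortlist to examine.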

Phase 2: Risk Categorization

Not all GenAI is equal. A tool generating marketing copy has a different risk profile than one summarizing medical records.

- Tier your risks: Adopt a tiered approach (e.g., Low, Medium, High, Unacceptable).

- Align with regulations: Map these tiers to the EU AI Act’s risk categories to ensure future compliance.
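One way to make tiering repeatable is to encode the rules so every registered use case is classified the same way. The sketch below is illustrative only: the domain labels and the `assign_tier` rules are assumptions chosen to mirror the examples in this article, not a legal classification under the EU AI Act.

```python
from enum import Enum

class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"

# Hypothetical domain labels; real mappings need legal review.
PROHIBITED_DOMAINS = {"social_scoring"}
HIGH_RISK_DOMAINS = {"hr_recruitment", "credit_scoring", "medical"}

def assign_tier(domain, customer_facing, uses_pii):
    """Assign a risk tier, checking the most severe rules first."""
    if domain in PROHIBITED_DOMAINS:
        return Tier.UNACCEPTABLE
    if domain in HIGH_RISK_DOMAINS:
        return Tier.HIGH
    if customer_facing or uses_pii:
        return Tier.MEDIUM
    return Tier.LOW

marketing_copy = assign_tier("marketing", customer_facing=False, uses_pii=False)
medical_summary = assign_tier("medical", customer_facing=False, uses_pii=True)
```

In this sketch the marketing copy tool lands in the low tier and the medical records summarizer in the high tier, matching the contrast drawn above.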

Phase 3: Guardrails & Technology

Policy is not enough; you need technical enforcement.

- Implement an AI gateway: Distinct from a standard firewall, an AI gateway sits between your users and the LLMs. It can anonymize PII before it leaves your environment and block harmful content from coming back in.

- Prompt engineering guidelines: Standardize system prompts to reduce hallucinations.
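The anonymization step of an AI gateway can be pictured as a filter applied to every prompt before it leaves your environment. The sketch below is a simplified assumption: it masks only email addresses and US-style phone numbers with regular expressions, whereas real gateways typically rely on broader PII detection.

```python
import re

EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

def redact_pii(prompt):
    """Replace emails and phone numbers with placeholders before sending."""
    prompt = EMAIL_PATTERN.sub("[EMAIL]", prompt)
    prompt = PHONE_PATTERN.sub("[PHONE]", prompt)
    return prompt

outbound = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
```

After redaction, `outbound` reads `Contact [EMAIL] or [PHONE].`, so the external model never sees the original identifiers.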

Phase 4: Human-in-the-Loop (HITL)

For high-stakes use cases, the AI should never be the final decision-maker.

- Mandate review: Governance policy should dictate that all AI-generated code, legal documents, or financial advice must be reviewed by a qualified human.

- Feedback loops: Use human corrections to fine-tune the models and improve future performance.
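A review mandate is easier to enforce when the release path itself checks for sign-off. The sketch below assumes a hypothetical `Draft` record and a set of content types that always need a human reviewer, mirroring the examples above.

```python
from dataclasses import dataclass
from typing import Optional

# Content types that policy says must always be reviewed by a human.
REVIEW_REQUIRED = {"code", "legal", "financial_advice"}

@dataclass
class Draft:
    content_type: str
    text: str
    approved_by: Optional[str] = None

def can_release(draft):
    """High-stakes drafts are releasable only once a human has approved them."""
    if draft.content_type in REVIEW_REQUIRED:
        return draft.approved_by is not None
    return True

contract = Draft("legal", "Draft supplier agreement ...")
blocked = can_release(contract)
contract.approved_by = "reviewer@legal"
released = can_release(contract)
```

The contract is blocked until a named reviewer is recorded, which also leaves an audit trail of who approved what.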

Phase 5: Continuous Monitoring

GenAI models “drift.” A model that is safe today might bypass its guardrails tomorrow after an update.

- Automated evaluation: Unlike uptime monitoring, this tracks the quality of the output. Are hallucination rates increasing? Is the tone shifting?

- Red teaming: Regularly task “red teams” with trying to break your AI (jailbreaking) so that you find vulnerabilities before bad actors do.
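Quality monitoring can begin with something as simple as a rolling rate over recent evaluated responses. The sketch below assumes each response has already been labeled as hallucinated or not (by a human reviewer or an automated evaluator); the `HallucinationMonitor` class, window size, and threshold are hypothetical.

```python
from collections import deque

class HallucinationMonitor:
    """Tracks the hallucination rate over the most recent evaluations."""

    def __init__(self, window, threshold):
        self.results = deque(maxlen=window)  # old results fall off automatically
        self.threshold = threshold

    def record(self, hallucinated):
        self.results.append(bool(hallucinated))

    def rate(self):
        if not self.results:
            return 0.0
        return sum(self.results) / len(self.results)

    def should_alert(self):
        # Alert only once the window is full, to avoid noisy early readings.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.rate() > self.threshold

monitor = HallucinationMonitor(window=4, threshold=0.25)
for outcome in [False, False, False, True]:
    monitor.record(outcome)
alert_before = monitor.should_alert()
monitor.record(True)
alert_after = monitor.should_alert()
```

The first four results sit exactly at the threshold, so no alert fires; one more hallucination pushes the rolling rate to 50% and triggers it. The same pattern could track tone or refusal rates.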

For further information on creating a governance framework aligned with your organization’s risk tolerance, read this article next.

Conclusion

Generative AI is not merely a trend; it is the new operating system for business. However, the speed of adoption cannot come at the expense of safety and trust. By implementing a dedicated Gen AI governance framework, leaders can do more than just avoid fines – they can build a foundation of confidence. When your employees know the guardrails are solid, they drive faster. When your customers know their data is safe, they engage deeper. Governance, ultimately, is not a brake; it is the steering wheel that allows you to drive at full speed.

Navigating this complex landscape of evolving regulations and technical risks doesn’t have to be a solo journey. At Lumenova AI, we specialize in helping enterprises bridge the gap between innovation and compliance.

Ready to build a governance strategy that fits your unique organization?

Book a demo with Lumenova AI today for a dedicated consultation. Let us show you how to develop a Gen AI governance framework tailored to your specific internal needs, ensuring you can harness the full power of AI responsibly and securely.