July 8, 2025

Implementing Responsible AI Governance in Modern Enterprises


Your AI is up and running now, sorting through job apps, catching fraud, and making predictions. You did the hard part: building and deploying it. But now? Now the real questions show up.

Like:

“So… why did the model make that decision?”

“Can we explain this to our legal team?”

“Wait, who’s actually responsible for this thing?”

See, that’s governance. It’s not just rules or red tape. It’s how we keep AI fair, safe, and explainable before something breaks. And honestly? A lot of teams are realizing they need it.

And that’s why this article outlines how responsible AI governance works in practice and why it’s the foundation for trust, resilience, and sustainable innovation.

What Is Responsible AI Governance?

Put simply, responsible AI governance is the system of rules, processes, and accountability that ensures AI is used safely, ethically, and legally.

It goes beyond data science and model accuracy. It asks:

- Who decides where and how AI is applied?

- How are risks identified and mitigated?

- Are decisions explainable (and by whom)?

- Who is responsible if something goes wrong?

Effective enterprise AI governance includes:

- Clear policies

- Risk and impact assessments

- Transparency requirements

- Bias mitigation protocols

- Human oversight

- Continuous monitoring

In short, governance ensures that AI doesn’t just “work” — it works responsibly.

Why This Is Now a C-Suite Priority

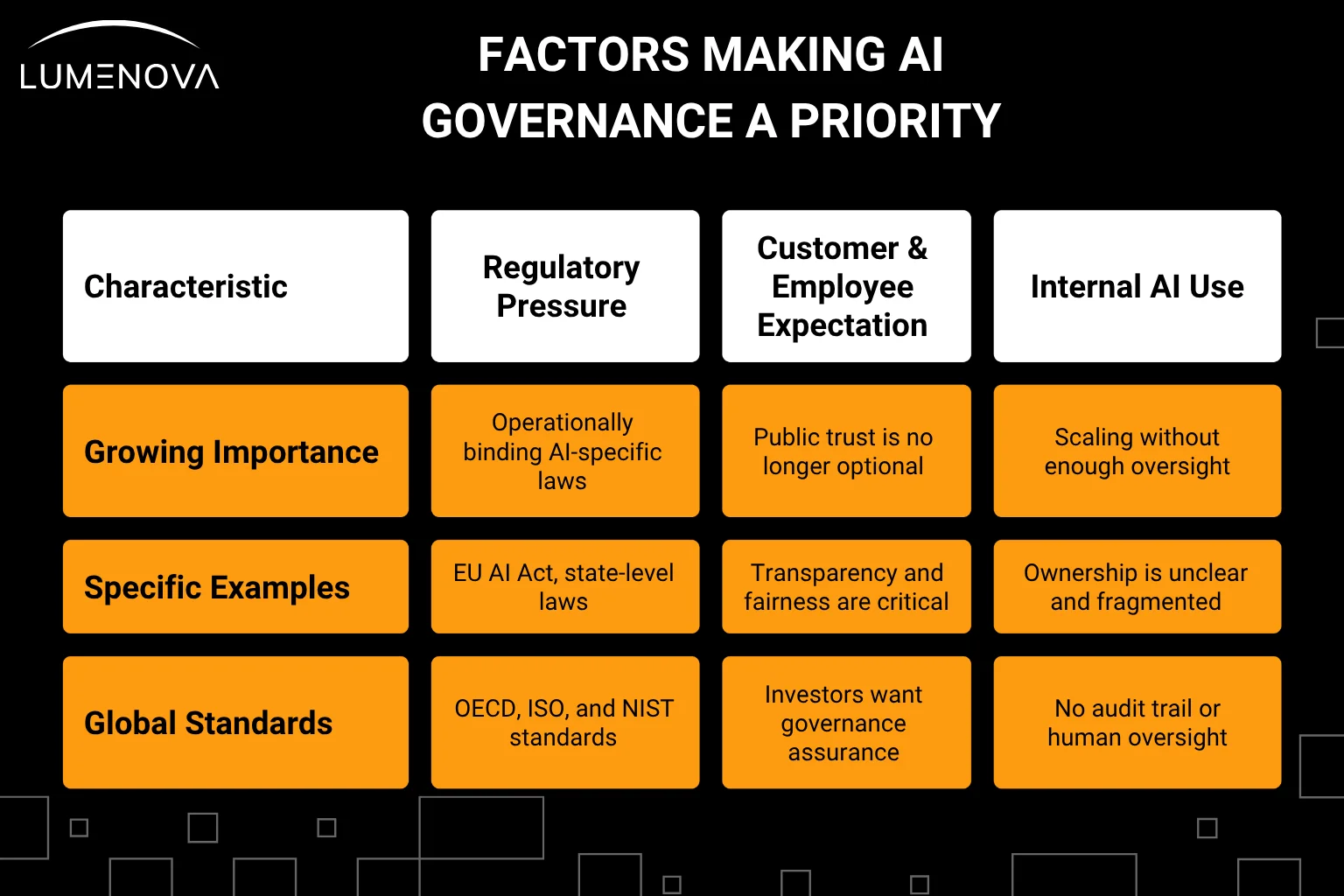

Three factors have made responsible AI governance a board-level concern:

1. Regulatory pressure is real and growing

AI-specific laws are becoming operationally binding:

- The European Union has finalized the EU AI Act, which introduces detailed rules based on system risk levels, including prohibited applications.

- In the United States, state and local laws such as the California AI Transparency Act and the New York City Bias Audit Law are in effect or in progress.

- Other countries, including Canada, the UK, and Brazil, are advancing similar frameworks.

In parallel, global bodies such as the OECD, ISO, and NIST are setting widely adopted standards. Enterprises must navigate overlapping jurisdictions, prepare for audits, and demonstrate proactive risk management.

2. Customers and employees expect ethical AI

Public trust is no longer optional. Consumers want to know how AI impacts their lives. Employees want to know how it impacts their work. Investors want assurance that governance is in place.

Research published in 2025 suggests that trust remains cautious: people continue to worry about bias, data misuse, and lack of accountability.

- A KPMG/University of Melbourne global study (2025) spanning 48,000+ respondents across 47 countries found:

  - 58% of people view AI as untrustworthy, even as 83% believe it brings benefits.

  - Only 29% of U.S. consumers believe current AI regulations are adequate, with 72% saying more regulation is needed. Moreover, 81% indicate they’d trust AI more if proper laws and oversight existed.

- According to 2025 consumer surveys, 62% of consumers would trust a brand more if it were transparent about its AI usage.

- IBM’s 2025 projections for enterprise AI highlight that nearly 45% of organizations cite concerns about data accuracy or bias as a major adoption barrier.

That said, to earn consumer, employee, and investor trust in 2025, organizations must invest in transparency, ethical design, bias mitigation, clear communication, and robust governance frameworks.

3. Internal AI use is scaling without enough oversight

AI is being embedded into existing workflows, often via third-party APIs, vendor platforms, or automated decision systems. In many organizations, ownership is unclear. Governance responsibilities are fragmented. There may be no audit trail, no human in the loop, and no formal accountability.

Responsible AI governance addresses these gaps. It aligns data, compliance, and technology teams, sets clear controls, and allows businesses to scale AI without scaling exposure.

What Responsible AI Governance Looks Like

Organizations that take AI governance seriously tend to focus on five interconnected principles:

Fairness and Bias Mitigation

AI systems must not discriminate across race, gender, age, or other protected attributes. This means identifying and correcting both direct and indirect bias in data and model outcomes. Tools like IBM's AI Fairness 360 and Aequitas help measure and reduce these risks.
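To make "measuring bias" concrete, here is a minimal sketch of one common check, the disparate impact ratio, in plain Python. It does not call either toolkit named above. The function names, group labels, and sample decisions are all hypothetical, and the 0.8 cutoff is the "four-fifths" rule of thumb from U.S. employment guidance, not a universal legal standard.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable decision (e.g. "advance to interview") and 0 otherwise.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records, privileged, unprivileged):
    """Selection rate of the unprivileged group divided by the privileged group's."""
    rates = selection_rates(records)
    return rates[unprivileged] / rates[privileged]


# Hypothetical screening decisions: group A is selected 60% of the time,
# group B 30% of the time.
decisions = (
    [("A", 1)] * 60 + [("A", 0)] * 40 +
    [("B", 1)] * 30 + [("B", 0)] * 70
)

ratio = disparate_impact_ratio(decisions, privileged="A", unprivileged="B")
needs_review = ratio < 0.8  # assumed threshold (four-fifths rule of thumb)

print(f"disparate impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A single ratio like this is a starting point for a conversation, not a verdict. In practice, teams usually look at several metrics and at how the groups were defined before deciding what to correct.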

Transparency and Explainability

AI models should not be black boxes. Stakeholders must be able to understand how decisions are made, what factors drive them, and where uncertainty exists. Techniques like SHAP and LIME help teams interpret model behavior and communicate outcomes to business and compliance audiences.
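The idea behind SHAP-style attributions is easiest to see on a linear model. If features are treated as independent, each feature's contribution is its weight times how far that feature sits from its average value, and the contributions add up to the gap between this prediction and the average prediction. The sketch below works that through in plain Python without the SHAP or LIME libraries. The model, weights, averages, and applicant are invented for illustration.

```python
def predict(weights, bias, x):
    """Linear model: bias plus the weighted sum of the features."""
    return bias + sum(weights[name] * x[name] for name in weights)


def linear_contributions(weights, x, background_means):
    """Per-feature attribution for a linear model with independent features:
    weight * (feature value - average feature value)."""
    return {
        name: weights[name] * (x[name] - background_means[name])
        for name in weights
    }


# Hypothetical scoring model and data (not taken from any real system).
weights = {"income_k": 0.5, "debt_ratio": -40.0, "years_employed": 2.0}
bias = 10.0
background_means = {"income_k": 60.0, "debt_ratio": 0.3, "years_employed": 5.0}
applicant = {"income_k": 48.0, "debt_ratio": 0.5, "years_employed": 2.0}

contributions = linear_contributions(weights, applicant, background_means)
baseline = predict(weights, bias, background_means)  # score of the "average" applicant
score = predict(weights, bias, applicant)

# List the features from most to least influential for this applicant.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.1f}")
print(f"baseline {baseline:.1f} + contributions = score {score:.1f}")
```

That additive breakdown is what makes the output usable outside the data science team. A compliance reviewer can see which factors pushed a score up or down, and by how much, without reading the model code.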

Privacy and Data Protection

Data used to train AI models must be securely managed, purpose-limited, and compliant with regulations like GDPR and CCPA. Responsible governance includes controls for consent, access, retention, and minimization of personal data.

Accountability and Oversight

There must be clear ownership of every AI system. Governance assigns roles and responsibilities across teams (from data science to legal), ensuring models are reviewed, approved, monitored, and, when necessary, retired.

Human-Centered Design

AI should support human goals, not replace them. This means engaging affected stakeholders early, conducting impact assessments, and integrating ethical review into the model lifecycle.

Why It’s Harder Than It Looks

Even the most well-intentioned organizations struggle with responsible AI governance. Common barriers include:

- Ethics washing: Companies publish high-level AI principles but do not follow through with implementation.

- Fragmented ownership: Legal, data, risk, and engineering teams may operate in silos, leading to gaps in review and monitoring.

- Limited resources: Smaller organizations lack the tooling, expertise, or bandwidth to develop governance programs from scratch.

- Cultural resistance: Employees worry AI may replace them or violate their privacy, leading to low trust and adoption.

Addressing these issues requires more than policies. It requires tools, coordination, and a governance framework that is operational, not theoretical.

From Strategy to Execution: Implementing AI Governance That Scales

In a previous article, we explored how AI has moved from experiment to infrastructure, and what that shift means for enterprise governance. We outlined the case for Responsible AI, discussed its critical components, and shared a roadmap to help organizations establish oversight that aligns with legal, ethical, and business demands. (Read more: Enterprise AI Governance: Ensuring Trust and Compliance)

Now we focus on what comes next: implementation.

Governance strategy without execution is just intent. Leading organizations are now asking a different question: How do we operationalize AI governance (across every business unit, model, and lifecycle phase) without slowing innovation or burdening our teams?

This article offers a detailed framework to help you do exactly that. We’ll show how to move from high-level principles to day-to-day accountability, and how Lumenova AI supports that process at scale.

Why Implementation Matters More Than Ever

AI systems don’t remain static. They evolve with data, context, and user behavior. Governance, therefore, cannot be treated as a compliance checkbox. It must be operationalized as an ongoing system that guides how AI is designed, deployed, monitored, and improved.

What’s at stake?

- Legal exposure under new and emerging AI regulations

- Loss of stakeholder trust when systems behave unexpectedly

- Business disruption from flawed, biased, or opaque models

Even well-intentioned companies can fall short if governance mechanisms aren’t embedded across departments, roles, and workflows. That’s why implementation isn’t just about rules, but also about capability.

A Practical Framework for Implementing AI Governance

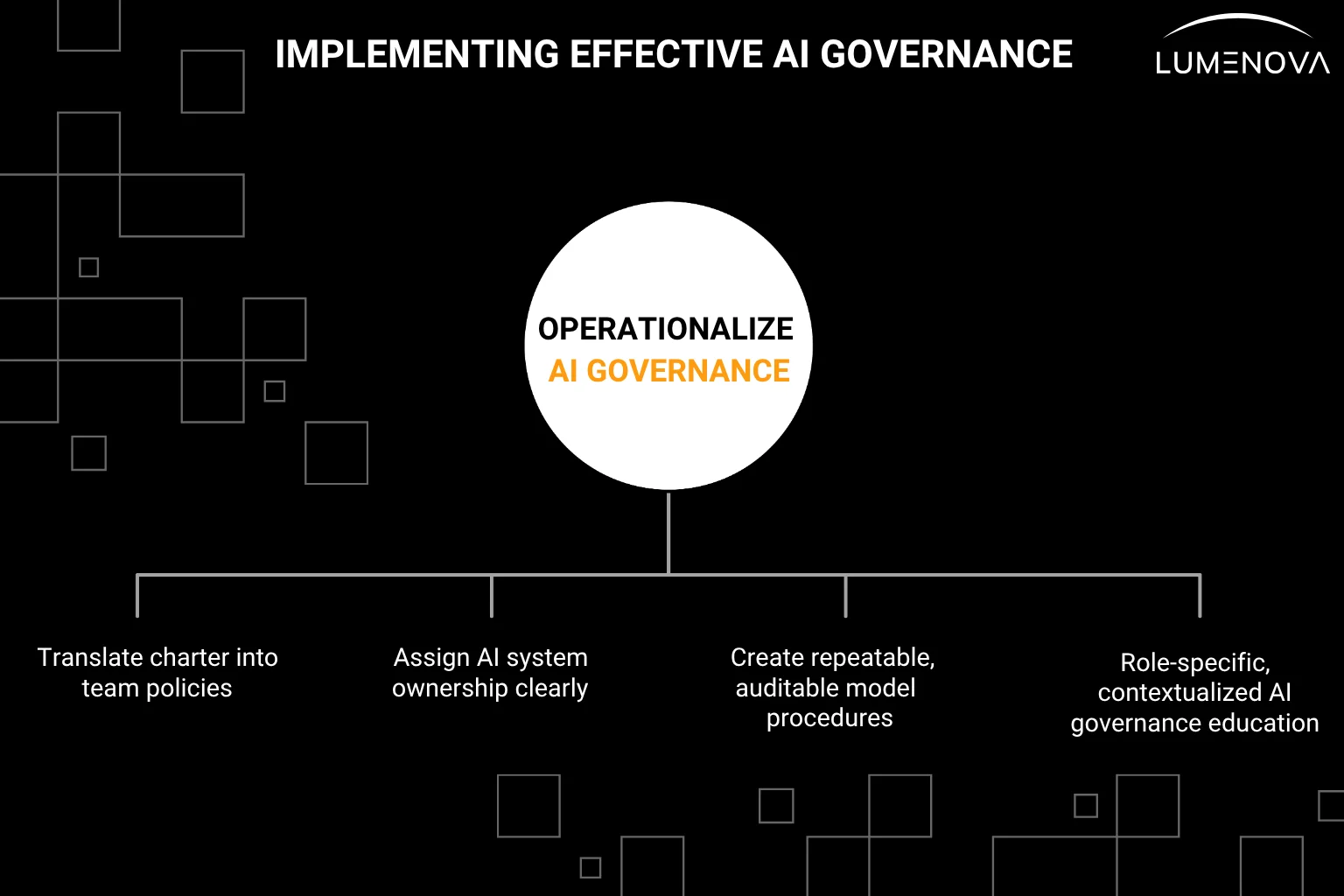

In another article, The Pathway to AI Governance, we outlined four foundational steps: define your program, assign responsibilities, create policies, and educate your people. These remain essential, but here we expand them into operational tactics that move organizations from strategy to impact.

Step 1: Activate Your Governance Charter

If your organization has already defined its AI governance mission and scope, the next step is to operationalize that vision.

- Translate your charter into team-level policies and project-specific workflows.

- Use the same language across legal, tech, and business teams to avoid misinterpretation.

- Map AI governance to broader enterprise risk and compliance efforts.

Our advice: Treat the charter as a living document. Schedule quarterly reviews to align it with regulatory updates and lessons learned from audits or model incidents.

Step 2: Embed Roles Within Real Workflows

Most companies define governance roles on paper, but few successfully embed them into their operating model.

To close the gap:

- Assign AI system ownership at the model level (from development to monitoring)

- Ensure cross-functional review boards meet regularly to assess risk and policy adherence

- Equip application owners with tooling that supports compliance (not just checklists)

Every AI model should have a named accountable executive. No exceptions.
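One lightweight way to enforce the "no exceptions" rule is to check the model inventory automatically. The sketch below assumes a simple in-memory registry. The `ModelRecord` fields, model names, and owner titles are hypothetical and would map onto whatever inventory or platform you already use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelRecord:
    """One entry in a (hypothetical) AI model inventory."""
    name: str
    accountable_executive: Optional[str]
    technical_owner: Optional[str]


def ownership_gaps(registry):
    """Return {model name: [missing ownership fields]} for incomplete records."""
    required = ("accountable_executive", "technical_owner")
    gaps = {}
    for record in registry:
        missing = [attr for attr in required if not getattr(record, attr)]
        if missing:
            gaps[record.name] = missing
    return gaps


# Hypothetical inventory: the second model has no accountable executive yet.
registry = [
    ModelRecord("fraud-scoring-v3", "Chief Risk Officer", "payments-ml-team"),
    ModelRecord("resume-screener-v1", None, "talent-analytics"),
]

gaps = ownership_gaps(registry)
for model_name, missing in gaps.items():
    print(f"{model_name} is missing: {', '.join(missing)}")
```

Run as part of a release pipeline or a scheduled job, a check like this turns ownership from a policy statement into something that blocks a deployment when it is missing.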

Step 3: Translate Policies into Repeatable Procedures

Effective governance relies on procedures that are both repeatable and auditable. These must go beyond broad principles and reflect how teams actually build and use AI.

Your operational procedures should include:

- A model intake process that flags risk levels before deployment

- Guidelines for documentation, including data lineage and performance metrics

- Testing protocols for bias, explainability, robustness, and security

- Review cycles to ensure continuous monitoring and retraining
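As an illustration of the first item, a model intake process can start as a short questionnaire that maps answers to a risk tier and a list of required checks. The sketch below is one possible shape, not a regulatory classification. The questions, weights, thresholds, and check names are assumptions made for the example and do not reproduce the EU AI Act's risk categories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntakeForm:
    """Answers collected before a model is approved for deployment."""
    model_name: str
    affects_individuals: bool    # e.g. hiring, credit, or fraud decisions about people
    uses_personal_data: bool
    fully_automated: bool        # no human review before a decision takes effect
    third_party_component: bool  # relies on a vendor model or external API


# Assumed weights and thresholds; tune these to your own risk appetite.
WEIGHTS = {
    "affects_individuals": 3,
    "fully_automated": 2,
    "uses_personal_data": 1,
    "third_party_component": 1,
}

CHECKS_BY_TIER = {
    "low": ["documentation"],
    "medium": ["documentation", "bias testing", "security review"],
    "high": [
        "documentation", "bias testing", "explainability report",
        "robustness testing", "security review", "review board sign-off",
    ],
}


def risk_tier(form):
    """Sum the weights of every 'yes' answer and map the total to a tier."""
    score = sum(weight for attr, weight in WEIGHTS.items() if getattr(form, attr))
    if score >= 5:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


screener = IntakeForm("resume-screener-v1", True, True, True, False)
summarizer = IntakeForm("log-summarizer", False, False, False, True)

for form in (screener, summarizer):
    tier = risk_tier(form)
    print(f"{form.model_name}: {tier} risk, required checks: {CHECKS_BY_TIER[tier]}")
```

Keeping the weights and check lists in data, not buried in code, makes the procedure easy to audit and to update when policies change.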

At Lumenova AI, we’ve seen that translating policies into platform-based processes significantly reduces the risk of policy drift.

Step 4: Educate With Context, Not Just Content

Training programs are often too generic. Effective education is role-specific and contextualized.

- Legal and risk teams need to understand the technical implications of model behavior.

- Engineers need to see how their decisions connect to governance goals.

- Business leaders need metrics that link AI risk to strategic priorities.

Consider embedding learning checkpoints into project milestones (e.g., model launch gates or quarterly reviews). Make governance part of delivery, not a sideline responsibility.

Beyond Frameworks: The Future of AI Governance Is Operational

What we’re seeing across leading organizations is a realization: responsible AI governance cannot live in static documents or quarterly review decks. It must be practiced: embedded into workflows, reinforced through systems, and aligned to the pace of both technological change and regulatory pressure.

At this stage of AI maturity, implementation is not just about internal controls or policy enforcement. It’s about building an operating model that allows AI to scale safely, ethically, and compliantly, without slowing innovation or overburdening teams.

Enterprises that get this right don’t treat AI governance as a siloed function. They design it into the model lifecycle. They ensure data scientists, product managers, compliance leaders, and executives are working from a shared understanding of risk, value, and responsibility. They automate the right things, but never remove human judgment from the loop.

They understand that trust is a measurable business asset, and governance is how it’s earned.

Where We Go From Here

The landscape is only getting more complex. The EU AI Act will reshape global regulatory expectations. U.S. state-level policies are expanding in scope and enforcement. Industry-specific standards are tightening. And public scrutiny (from customers, employees, investors, and the media) continues to rise.

AI governance is no longer a back-office task. It is a board-level issue, a brand differentiator, and increasingly, a determinant of operational resilience.

For enterprise leaders, the question is not whether to implement responsible AI governance — it’s how quickly and effectively it can be done.

At Lumenova AI, we help organizations bridge that gap between principle and practice. Our platform was purpose-built to operationalize governance at scale: assigning ownership, automating evaluations, tracking compliance, and providing visibility across the entire AI portfolio.

Because governing AI well is no longer just about avoiding harm. It’s about enabling AI to deliver on its full promise: confidently, accountably, and at enterprise scale.

Reflective Questions for Leaders

- How well is your organization translating AI principles into operational controls?

- Can you confidently explain your AI risk posture to a regulator, a board member, or a customer?

- What infrastructure do you have in place to ensure your models remain compliant, explainable, and aligned with your business values over time?

If those answers aren’t clear (or if you’re ready to move from theory to execution and build an AI governance framework), we’re here to help. Book a demo to see how our platform enables governance to become part of your enterprise DNA, a foundation for responsible, scalable AI.