September 16, 2025

Responsible AI Governance in Healthcare: Who Signs Off?

The pace of AI adoption in healthcare has been remarkable. From diagnostic algorithms that match or outperform radiologists on specific tasks to predictive models that anticipate patient deterioration, AI is changing how care is delivered. But with great innovation comes an even greater responsibility: ensuring these technologies serve patients ethically, safely, and equitably.

That is where responsible AI governance becomes essential. It serves as a comprehensive framework for accountability, transparency, and stakeholder inclusion, not just a policy checklist. Many healthcare leaders are now asking an important question: Who signs off on this? Who holds responsibility when AI produces harmful outcomes or delivers the right results for the wrong reasons?

Setting the Stage: AI Is Reshaping Healthcare (Fast)

AI is no longer waiting in the wings. It has stepped into the room, pulling up a chair beside our doctors, quietly shaping the choices that can mean everything.

We’re seeing:

- AI diagnostic tools embedded in radiology, pathology, and dermatology workflows

- Predictive analytics that forecast everything from disease progression to hospital readmission

- Natural language processing (NLP) that transforms electronic health record (EHR) interactions

But this acceleration comes with inherent risks: algorithmic bias, opaque decision-making, unequal access, and, of course, questions of legal and ethical responsibility.

This is why responsible AI governance is non-negotiable.

To understand how unchecked AI bias impacts medical decisions (and how governance can help), read The Hidden Dangers of AI Bias in Healthcare Decision‑Making.

What Is Responsible AI Governance in Healthcare?

At its core, responsible AI governance means having the right structures, policies, and people in place to oversee AI systems across their lifecycle (from development to deployment to continuous monitoring).

It requires asking questions like:

- Is this AI system fair and unbiased?

- Is it explainable to both doctors and patients?

- Is it compliant with current healthcare regulations?

- Who’s accountable when the AI fails, or when it succeeds at the wrong goal?

This governance model should include clinical stakeholders, technologists, compliance officers, patient advocates, and legal experts. Without these checks and balances, the promise of AI can quickly become a liability.

For a structured, step‑by‑step guide to implementing governance across an AI lifecycle, explore How to Build an Artificial Intelligence Governance Framework.

Why the “Sign-Off” Matters

In any regulated sector, someone always signs on the dotted line. In healthcare, that accountability usually falls on a mix of:

- Chief Medical Officers (CMOs)

- Chief Information Officers (CIOs)

- Clinical Governance Boards

- AI Ethics Committees

- Regulatory and Compliance Teams

But AI complicates things. Unlike a drug trial, an AI system isn’t static. It evolves. It learns. So, who signs off when it changes? Is it still safe? Still fair?

What’s needed is not a one-time approval, but an iterative sign-off process that adapts as the AI system does.

Building Blocks of a Responsible AI Governance Framework

So, what does a solid governance framework actually include? Here are the foundational elements that forward-thinking healthcare organizations are embracing:

1. Clarity and Comprehensibility

Governance must be readable not just by lawyers and engineers, but also by clinicians and patients. Policies should be written in plain language and describe how decisions are made, who is involved, and what the expectations are.

2. Transparency and Documentation

Stakeholders deserve to know how the AI system works (what data it was trained on, what assumptions were made, and what its limitations are). Transparency builds trust, especially in clinical settings.

3. Ethical Oversight

An internal ethics board or review committee should be involved in the lifecycle of any AI system. This group should represent clinical, technical, legal, and community perspectives.

4. Regulatory Alignment

Any responsible framework must be aligned with HIPAA, GDPR, and AI-specific rules such as the EU AI Act and FDA guidance on AI-enabled medical devices. Governance without compliance is just a theory.

5. Adaptive Monitoring

AI systems evolve. Model drift, changes in input data, or system upgrades can all alter performance. A responsible governance framework includes real-time monitoring and clear escalation paths for when things go wrong.
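One way to make drift monitoring concrete is a periodic distribution check on a model input or output score. The sketch below uses the Population Stability Index (PSI), a common drift statistic; it is an illustration, not a method described in this article. The function names, the 10-bin default, and the 0.2 escalation threshold are all assumptions chosen for the example, and any real threshold would need to be set and validated by the governance team.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a current sample of one numeric value."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread; PSI is undefined")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def needs_escalation(psi: float, threshold: float = 0.2) -> bool:
    # Assumption: 0.2 is an illustrative cut-off, not a clinical standard.
    return psi > threshold
```

A scheduled job could compute this for each monitored feature and route any value over the threshold to the escalation path the framework defines.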

So, Who Signs Off?

Here’s how different roles typically contribute to the governance and approval process:

| Role | Responsibility |
| --- | --- |
| Data Scientists | Validate model performance, fairness, and explainability |
| Clinical Leaders (e.g., CMOs) | Ensure clinical appropriateness and ethical alignment |
| Compliance & Risk Officers | Confirm regulatory adherence and audit readiness |
| Patient Advocates | Represent patient concerns and health equity impacts |
| IT Leaders (e.g., CIOs) | Manage system integration, data privacy, and infrastructure safety |

Instead of one person signing off, it’s a distributed accountability model, one that requires coordination and shared ownership.
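As a rough illustration of distributed accountability, the sketch below models a sign-off record that is only complete once every required role has approved a specific model version. The role identifiers and the `SignOffRecord` class are hypothetical names invented for this example; they mirror the roles listed above but do not come from any particular governance tool.

```python
from dataclasses import dataclass, field

# Hypothetical role identifiers, one per role in the governance process.
REQUIRED_ROLES = frozenset(
    {"data_science", "clinical_lead", "compliance", "patient_advocate", "it_lead"}
)


@dataclass
class SignOffRecord:
    model_version: str
    approvals: dict = field(default_factory=dict)  # role -> approver name

    def approve(self, role: str, approver: str) -> None:
        if role not in REQUIRED_ROLES:
            raise ValueError(f"unknown role: {role}")
        self.approvals[role] = approver

    def missing_roles(self) -> set:
        return set(REQUIRED_ROLES) - set(self.approvals)

    def is_complete(self) -> bool:
        # Deployment should be blocked until no role is missing.
        return not self.missing_roles()
```

Tying the record to a model version means a retrained model starts with an empty set of approvals rather than inheriting the old ones.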

The Role of Iterative Review Mechanisms

Sign-off is a cycle.

As AI systems evolve, so should governance. Regular review intervals are essential, especially when:

- Models are retrained or updated

- Input data changes significantly

- New patient populations are added

- Regulations evolve

A continuous improvement loop (supported by clear documentation, logging, and retrospective audits) ensures the system stays safe, ethical, and effective over time.
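The review triggers listed above can be expressed as a simple comparison between the state that was last approved and the current state. This is a minimal sketch under stated assumptions: `ModelState`, its fields, and the 180-day maximum interval are hypothetical, and a schema version is used here only as a stand-in for "input data changes significantly", which in practice would need a statistical check.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ModelState:
    model_version: str
    data_schema_version: str      # proxy for significant input data changes
    patient_populations: frozenset
    regulation_snapshot: str      # e.g. label of the last reviewed rule set
    last_review: date


def review_reasons(
    approved: ModelState,
    current: ModelState,
    today: date,
    max_interval: timedelta = timedelta(days=180),  # assumed review cadence
) -> list:
    """Return the reasons a new sign-off is needed; empty if none apply."""
    reasons = []
    if current.model_version != approved.model_version:
        reasons.append("model retrained or updated")
    if current.data_schema_version != approved.data_schema_version:
        reasons.append("input data changed")
    if current.patient_populations - approved.patient_populations:
        reasons.append("new patient population added")
    if current.regulation_snapshot != approved.regulation_snapshot:
        reasons.append("regulations changed")
    if today - approved.last_review > max_interval:
        reasons.append("scheduled review interval elapsed")
    return reasons
```

Logging the returned reasons alongside each review gives retrospective audits a record of why a sign-off was reopened.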

Real-World Lessons: What Implementation Looks Like

Case Study 1: Ambient AI Scribes at The Permanente Medical Group

- Deployment scope & outcomes: Between October 16, 2023, and December 28, 2024, TPMG rolled out ambient AI scribes across 17 Northern California centers. The rollout involved 7,260 physicians and saved approximately 15,000 hours of documentation time, with over 2.5 million uses in a year.

- Ethics & patient privacy governance: The system is voluntary, requires patient consent, doesn’t retain raw audio, and adheres to privacy safeguards overseen by ethics committees.

- Pilot testing and clinician feedback loops: TPMG executed a 10‑week responsible‑AI pilot in early 2024, gathering clinician insights through a QA feedback loop that included over 1,000 physicians.

- Post‑deployment monitoring by IT/ops teams: Continuous evaluation encompassed accuracy, usage metrics, and clinician sentiment, monitored by quality assurance and clinical‑ops teams.

How did they manage governance?

- Ethics committees signed off on patient privacy standards.

- Clinicians participated in pilot testing and feedback loops.

- IT teams monitored system behavior post-deployment.

Case Study 2: Radiology AI Tools and Career Anxiety

In contrast, a survey of 3,666 radiology residents in China found mixed feelings about AI, with anxiety about its impact associated with work stress and career competency. While most acknowledged its utility, many feared job displacement and questioned their career paths.

This example illustrates a governance blind spot: stakeholder sentiment. Successful governance must account not just for outcomes, but for the human impact across professions.

The Ethical Minefield: Bias, Privacy, and AI Literacy

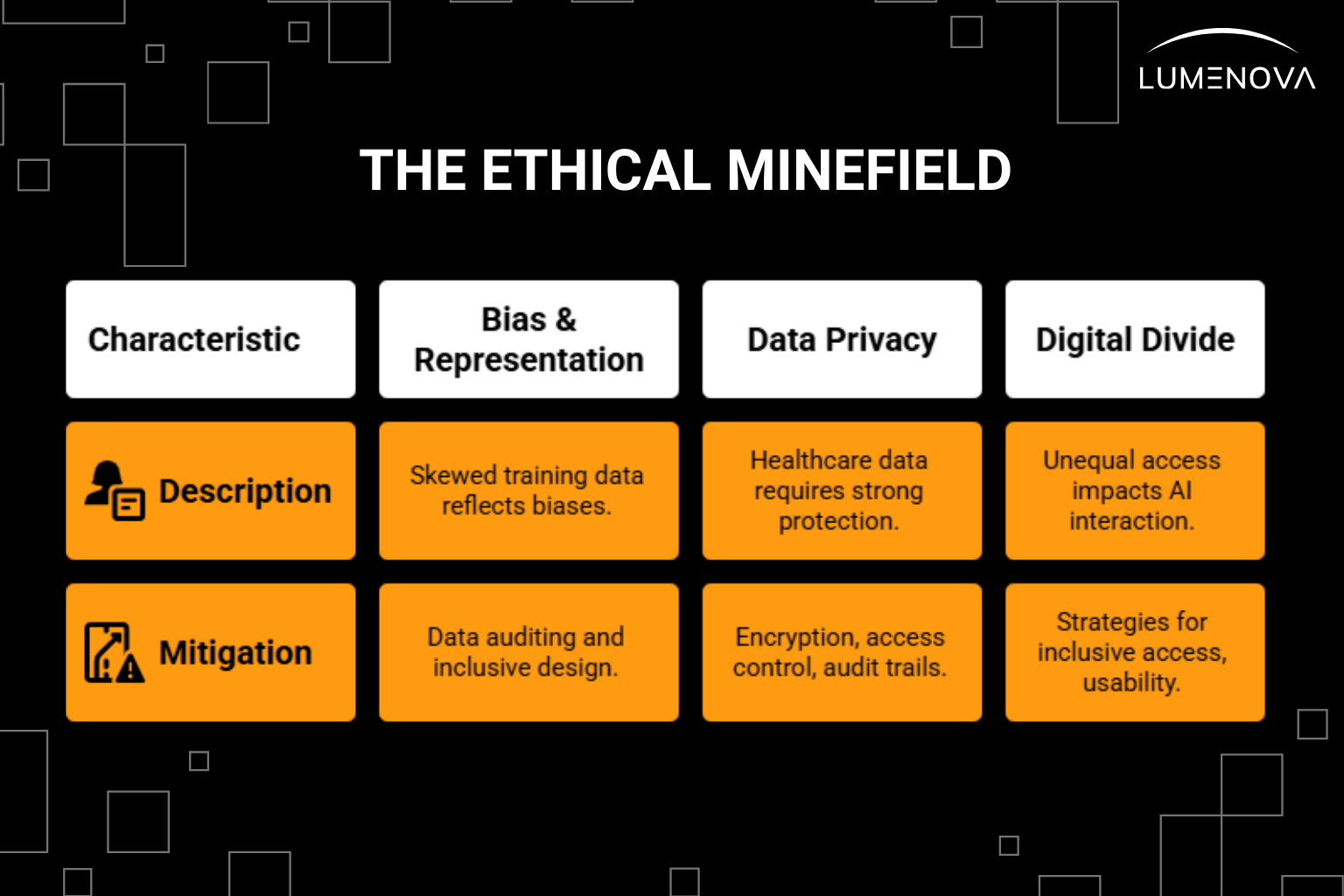

Despite best intentions, AI systems can fail, or worse, reinforce existing inequities. Here are the top governance challenges we see:

1. Bias and Misrepresentation

If your training data is skewed toward one demographic, your model will reflect those biases. That could mean poorer outcomes for underrepresented patients. Mitigating bias requires intentional data auditing and inclusive design.
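A basic data audit can start by comparing a performance metric across demographic groups. The sketch below computes sensitivity (true positive rate) per group and the largest gap between groups. It is one possible check among many, and the function names and the `(group, y_true, y_pred)` record layout are assumptions made for the example.

```python
from collections import defaultdict
from typing import Iterable, Tuple


def sensitivity_by_group(records: Iterable[Tuple[str, int, int]]) -> dict:
    """records: (group, y_true, y_pred) with binary labels 0 or 1."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    # Groups with no positive cases are omitted (sensitivity is undefined).
    return {g: true_positives[g] / positives[g] for g in positives}


def max_sensitivity_gap(rates: dict) -> float:
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())
```

A large gap does not by itself prove the model is unfair, but it tells reviewers where to look first.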

2. Data Privacy

Healthcare data is among the most sensitive in the world. Responsible AI platforms must support a comprehensive set of safeguards, including but not limited to:

- End-to-end encryption

- Access controls

- Audit trails

- Clear patient consent policies

- Data anonymization and de-identification

- Regular security risk assessments

- Compliance with healthcare data regulations

- Secure data storage and backup procedures

3. Digital Divide

Not all patients interact with AI in the same way. Some may lack the digital literacy or access needed to benefit. Governance must include strategies for inclusive access and usability.

Navigating the Regulatory Landscape

Regulation is catching up, fast. The EU AI Act, which entered into force in August 2024 and phases in its obligations over the following years, introduces a tiered risk framework for AI systems, with many healthcare applications falling into the high-risk category. Meanwhile, in the U.S., the FDA continues to expand its role in regulating AI-enabled software as a medical device (SaMD).

To stay ahead, organizations should:

- Implement policy enforcement checks during AI development

- Maintain version-controlled documentation

- Conduct regular internal audits against regulatory benchmarks

Being proactive is far cheaper (and safer) than facing non-compliance penalties down the line.
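A policy enforcement check during development can be as simple as refusing to release a model whose documentation is incomplete. The sketch below is a hypothetical pre-release gate: the field names in `REQUIRED_FIELDS` are assumptions for illustration, not requirements taken from any regulation.

```python
# Assumed documentation fields; a real list would come from the
# organization's own policy and applicable regulations.
REQUIRED_FIELDS = (
    "intended_use",
    "training_data_summary",
    "known_limitations",
    "fairness_evaluation",
    "approved_by",
)


def is_blank(value) -> bool:
    if value is None:
        return True
    if isinstance(value, str):
        return not value.strip()
    if isinstance(value, (list, tuple, dict, set)):
        return len(value) == 0
    return False


def documentation_gaps(model_card: dict) -> list:
    """Return the required fields that are missing or empty."""
    return [name for name in REQUIRED_FIELDS if is_blank(model_card.get(name))]
```

Run in a build pipeline, a non-empty result would fail the release, and the model card itself can live in version control next to the model code.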

What Comes Next? A Call for Adaptive Governance

Looking ahead, AI governance in healthcare must evolve in step with technology. That means:

- Developing AI-specific governance frameworks, not just retrofitting existing policies.

- Fostering multi-stakeholder collaboration (patients, providers, technologists, regulators).

- Embedding governance into daily workflows, not isolating it to compliance reviews.

- Incorporating public dialogue, especially in communities most affected by healthcare disparities.

Healthcare doesn’t need a thousand new rules. It needs a clear, transparent process for ethical oversight that evolves alongside the technology.

Final Thoughts: Accountability Is the New Innovation

AI can do incredible things for healthcare, but only if we build the right guardrails. Innovation without accountability is dangerous. But accountability without innovation is stagnation.

Responsible AI governance gives us a path forward. One where patients benefit from smarter care, clinicians feel supported (not replaced), and regulators have the clarity they need to protect public trust.

And the sign-off? It is rooted in a culture of shared responsibility, with every stakeholder committed to doing the right thing at every step. Regulators and the public expect a clear point of accountability. Effective governance balances distributed responsibility inside the organization with external accountability through a designated leader representing its commitments.

For example, the PPTO case study in Canada describes an AI governance committee with many roles that still designates a single executive and clinician leader to be externally responsible. In the same way, the AMA Governance toolkit emphasizes that while teams share work internally, executive leadership must have clearly assigned accountability.

Call to Action

At Lumenova AI, we help healthcare organizations put governance into practice. Our RAI platform supports real-time AI monitoring, stakeholder collaboration, compliance automation, and full audit readiness, so that your innovations don’t just work: they’re accountable, transparent, and trustworthy.

Talk to our team to learn how we can support your AI governance strategy from concept to compliance.

Reflective Questions

- Who in your organization currently “signs off” on AI decisions, and is that still the right person?

- Are patients and clinicians actively involved in your AI governance process?

- How often do you review and revise the ethical assumptions behind your AI tools?

Frequently Asked Questions

What is responsible AI governance in healthcare?

Responsible AI governance in healthcare refers to the policies, processes, and oversight mechanisms that ensure AI systems are implemented ethically, safely, and in compliance with regulations. It involves engaging stakeholders, monitoring performance, and prioritizing transparency and fairness throughout the AI lifecycle.

Who signs off on AI systems in healthcare?

There is no single signer. Sign-off typically involves a collaborative group that may include clinical leaders, compliance officers, data scientists, patient advocates, and IT leadership. Each plays a role in ensuring the AI system is clinically sound, legally compliant, and ethically aligned.

Why is continuous monitoring necessary after deployment?

AI models can change over time due to updates or shifts in data (a phenomenon called model drift). Continuous monitoring ensures the system remains accurate, fair, and aligned with ethical standards even after deployment.

How can organizations prevent bias in healthcare AI?

Organizations prevent bias by auditing datasets, involving diverse stakeholders in design, testing for fairness across demographic groups, and regularly reviewing AI outputs to detect and correct any disparities in performance.

What role do regulators play in AI governance?

Regulatory bodies set standards and frameworks to ensure AI is used safely and fairly. They monitor compliance, issue rules and guidance (such as the EU AI Act or FDA guidance), and help enforce accountability through audits and penalties when necessary.