July 29, 2025

One Huge Risk of AI in Healthcare That Shouldn’t Be Overlooked


Artificial intelligence is redefining healthcare, from clinical decision support to operational efficiencies and precision medicine. Yet beneath the surface of these advancements lies a critical and underappreciated threat: AI bias in healthcare.

Bias is not just a technical glitch. It is a systemic risk that can quietly but profoundly impact clinical outcomes, patient safety, regulatory exposure, and the overall trust in healthcare systems that use AI. For executives navigating the adoption of AI technologies, understanding and mitigating algorithmic bias is not optional. Healthcare AI governance is a strategic imperative.

AI Bias in Healthcare: A Hidden Threat with Wide-Ranging Impact

Most healthcare leaders are well-versed in clinical risks, operational risks, and compliance risks. Algorithmic bias sits at the intersection of all three. When overlooked, it can erode confidence in AI tools, expose organizations to regulatory scrutiny, and, most concerning of all, lead to disparities in patient care.

AI systems are trained on data, and healthcare data is rarely neutral. Historical inequalities in diagnosis, treatment access, and health outcomes become baked into the data. By extension, these inequalities are embedded into the models trained on that data. Without intentional safeguards, AI simply learns to replicate and even amplify these disparities.

If you want to explore how these risks manifest across healthcare functions, Lumenova’s article “The Hidden Dangers of AI Bias in Healthcare Decision-Making” offers powerful insights and real-world examples.

1. Clinical Decision Support: Skewed Diagnostics and Treatment

AI-driven diagnostics have shown promise in radiology, oncology, and cardiology. However, when models are trained on datasets that underrepresent certain populations, the results are predictable: misdiagnoses, delayed diagnoses, or suboptimal treatment recommendations for those groups.

For example, skin cancer detection algorithms often underperform on darker skin tones. Cardiovascular risk prediction models may miss early signs in women because historical data has disproportionately focused on male populations.
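One way to surface this kind of gap is to compute sensitivity (the true positive rate) separately for each patient group rather than as a single aggregate. The sketch below is illustrative only: the function name `sensitivity_by_group`, the group labels, and the toy records are assumptions, not data from any real model.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return the true positive rate for each group.

    records: iterable of (group, y_true, y_pred) tuples with 0/1 labels.
    Only groups with at least one positive case appear in the result.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


# Hypothetical toy data: (group, actual diagnosis, model prediction)
records = [
    ("lighter", 1, 1), ("lighter", 1, 1), ("lighter", 1, 1), ("lighter", 1, 0),
    ("darker", 1, 1), ("darker", 1, 0), ("darker", 1, 0), ("darker", 1, 0),
    ("lighter", 0, 0), ("darker", 0, 0),
]

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups suggests the model misses true cases more often for one population, even when its overall accuracy looks acceptable.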

To dive deeper into how these gaps occur and how to address them, Lumenova’s “Fairness and Bias in Machine Learning” explains common pitfalls and concrete mitigation strategies.

2. Operational Efficiencies: Bias in Resource Allocation

AI is increasingly used to streamline hospital operations, from staffing models to predicting patient no-shows. However, if these algorithms inherit biased assumptions, the risk extends beyond the clinical setting.

Imagine an AI system that predicts which patients are likely to miss appointments. If the training data reflects socioeconomic biases, the system might deprioritize outreach or follow-up for those patients. This would perpetuate existing gaps in care.
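A simple check for this scenario compares how often each patient group actually receives outreach and takes the ratio of the lowest rate to the highest. The sketch below uses made-up data and hypothetical group names. The 0.8 threshold borrows the "four-fifths" rule of thumb from US employment-selection guidance, which is an assumption here rather than a healthcare standard.

```python
def outreach_rate(flags):
    """Share of patients in a group who were selected for outreach (0/1 flags)."""
    return sum(flags) / len(flags)


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical outreach decisions driven by a no-show prediction model
outreach = {
    "group_a": [1, 1, 1, 1, 0],
    "group_b": [1, 1, 0, 0, 0],
}

rates = {group: outreach_rate(flags) for group, flags in outreach.items()}
ratio = disparate_impact_ratio(rates)

# Assumed rule-of-thumb threshold; an organization would set its own
needs_review = ratio < 0.8
print(rates, ratio, needs_review)
```

A low ratio does not prove the model is unfair, but it is a signal that outreach decisions deserve a closer look before they widen existing gaps in care.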

For a broader view of bias types across use cases, you can explore “7 Common Types of AI Bias and How They Affect Different Industries,” which includes healthcare-specific examples.

3. Population Health and Preventive Care: Incomplete Risk Profiles

Population health initiatives rely on AI to predict risk factors and stratify patient cohorts for preventive interventions. Yet when models are blind to social determinants of health or trained on incomplete datasets, risk profiles can be dangerously misleading.

In practice, this may mean that the patients who most need support are left out of preventive programs, undermining both outcomes and equity. As Lumenova notes in “AI in Healthcare: Redefining Consumer-Centric Care,” truly impactful AI must center on representative, explainable data practices.

4. Regulatory Compliance and ESG Risks

Regulators are taking notice. The FDA in the United States and the EU, through its AI Act, are pushing for transparency, fairness, and accountability in AI-driven healthcare applications. Healthcare organizations deploying biased AI systems could face compliance violations, fines, and mandatory audits.

Moreover, AI bias in healthcare directly intersects with ESG commitments. Demonstrating equitable AI practices is not just about regulatory compliance. It is now integral to corporate governance and stakeholder expectations.

If you want to understand how compliance and bias are connected in real-world scenarios, Lumenova’s “AI in Healthcare Compliance: How to Identify and Manage Risk” is a must-read.

How Healthcare Leaders Can Tackle AI Bias

Identifying bias is not just a technical challenge. It is a governance challenge. Healthcare executives must build a framework for responsible AI that embeds bias detection, mitigation, and continuous monitoring throughout the AI lifecycle.

Lumenova’s article “Top 5 Challenges an AI Governance Platform Solves” outlines how organizations can overcome common barriers like model drift, explainability gaps, and audit limitations.

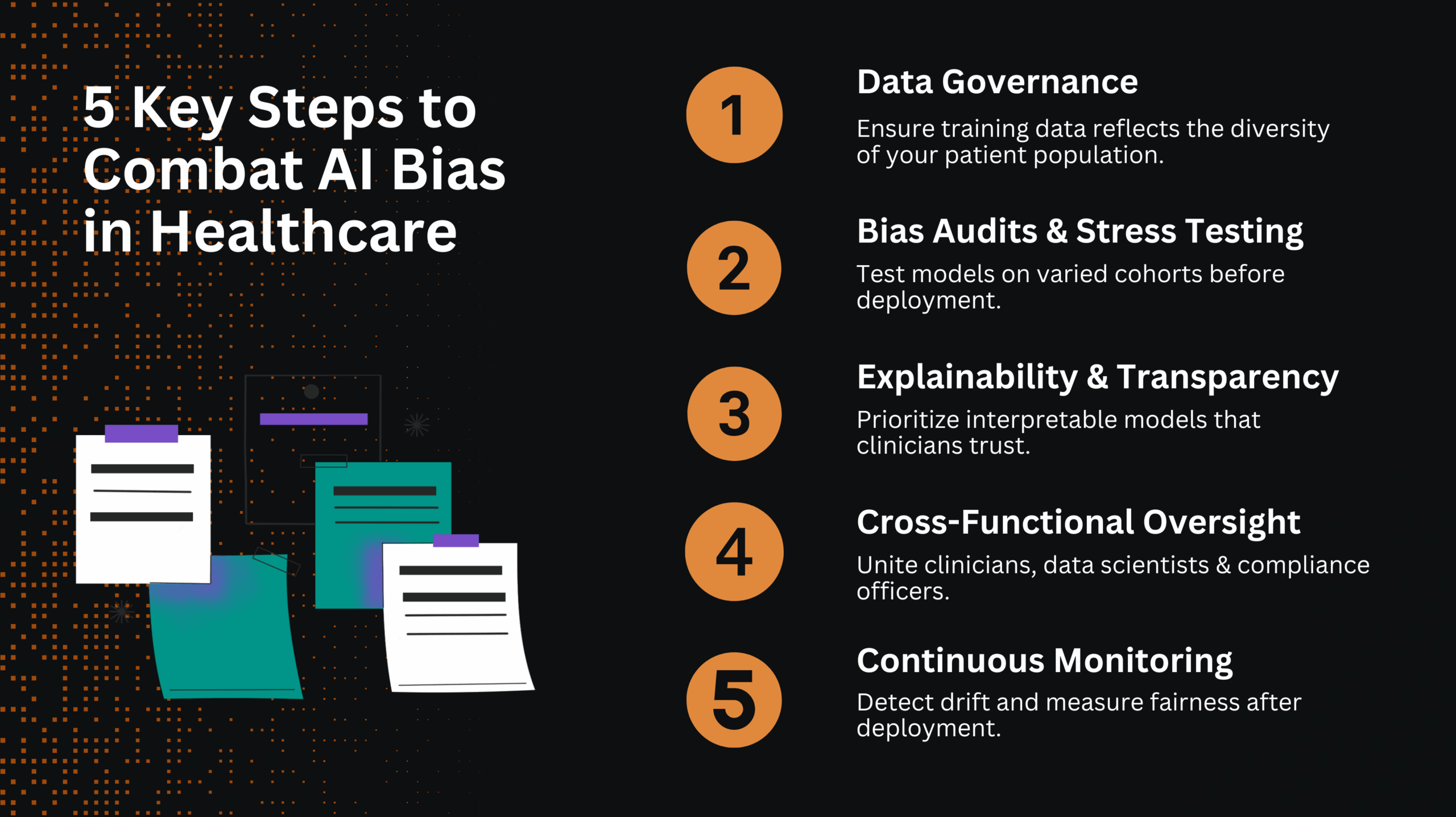

Key steps include:

- Data Governance: Ensure training data reflects the diversity of the patient population.

- Bias Audits and Stress Testing: Evaluate AI models against diverse patient cohorts before deployment.

- Explainability and Transparency: Select models that provide interpretable recommendations for clinicians.

- Cross-Functional Oversight: Engage clinical leaders, data scientists, and compliance officers.

- Continuous Monitoring: Regularly evaluate models to ensure ongoing fairness and accuracy.
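As one illustration of the data governance step above, the sketch below compares each group's share of the training data with its share of the patient population and flags groups that fall short by more than a tolerance. The function name `representation_gaps`, the group labels, the population shares, and the 5-point tolerance are all hypothetical choices made for this example.

```python
from collections import Counter


def representation_gaps(training_groups, population_shares, tolerance=0.05):
    """Return groups whose training share trails their population share.

    training_groups: list of group labels, one per training record.
    population_shares: dict mapping group label to expected share (0 to 1).
    tolerance: shortfall allowed before a group is flagged (assumed value).
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    gaps = {}
    for group, expected in population_shares.items():
        observed = counts.get(group, 0) / total
        shortfall = expected - observed
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps


# Hypothetical training set and patient population
training_groups = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
population_shares = {"A": 0.50, "B": 0.28, "C": 0.22}

gaps = representation_gaps(training_groups, population_shares)
print(gaps)
```

Running the same check on each retraining dataset is one lightweight way to carry the data governance step into continuous monitoring.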

If you’d like to explore how human oversight fits into this framework, “The Strategic Necessity of Human Oversight in AI Systems” provides a compelling rationale.

Healthcare AI Governance with Lumenova AI

At Lumenova AI, we help healthcare organizations navigate the complexities of AI governance, including bias detection and mitigation. Our platform enables leaders to evaluate AI models through a comprehensive risk lens, ensuring that algorithms serve every patient equitably and align with regulatory standards.

Bias in healthcare AI is not just an ethical concern. It is a material risk to patient outcomes, operational integrity, and brand trust. Proactive governance is the only sustainable path forward.

If you are ready to strengthen your AI governance strategy, let’s start the conversation.