June 5, 2025

AI Audit Tools and Scenarios for Insurance: Claims, Underwriting, and More


The widespread adoption of AI in the insurance sector marks a paradigm shift in how insurers approach risk assessment, claims processing, fraud detection, and customer engagement. As AI systems assume increasingly influential roles in these critical functions, robust auditing mechanisms become essential. Rigorous AI auditing is not merely a regulatory obligation but a strategic imperative to ensure compliance, fairness, transparency, and sustained stakeholder trust. This article briefly explores AI audit tools and scenario-based methodologies tailored for the insurance industry, with a particular focus on claims, underwriting, and ancillary domains.

The Imperative of AI Auditing in Insurance

AI technologies have become deeply embedded in the operational fabric of contemporary insurance enterprises. Their main applications in this field include:

- Claims Automation: Machine learning algorithms and computer vision models expedite the claims lifecycle by automating document verification, damage assessment, and payment authorization. For instance, insurers deploy image recognition tools to evaluate vehicle damage, reducing processing times from days to minutes.

- Underwriting: AI-driven risk models analyze vast and heterogeneous datasets, enabling granular risk segmentation and dynamic pricing. Telematics data, social media activity, and IoT sensor feeds are increasingly leveraged to inform underwriting decisions.

- Fraud Detection: Advanced anomaly detection and pattern recognition algorithms facilitate the early identification of fraudulent activities, both at the point of underwriting and during claims adjudication.

- Customer Engagement: Natural language processing (NLP) powers chatbots and virtual assistants to deliver personalized, real-time support and policy recommendations.

The adoption of AI in insurance is accompanied by a complex array of regulatory, ethical, and operational risks. Without rigorous auditing, AI systems may perpetuate or even exacerbate biases, generate opaque or inexplicable outcomes, and expose insurers to regulatory sanctions and reputational damage. Traditional model validation is insufficient to address the dynamic and context-dependent nature of AI risks. Scenario-based auditing, which simulates real-world and edge-case situations, is essential for uncovering vulnerabilities and ensuring resilient AI deployment.

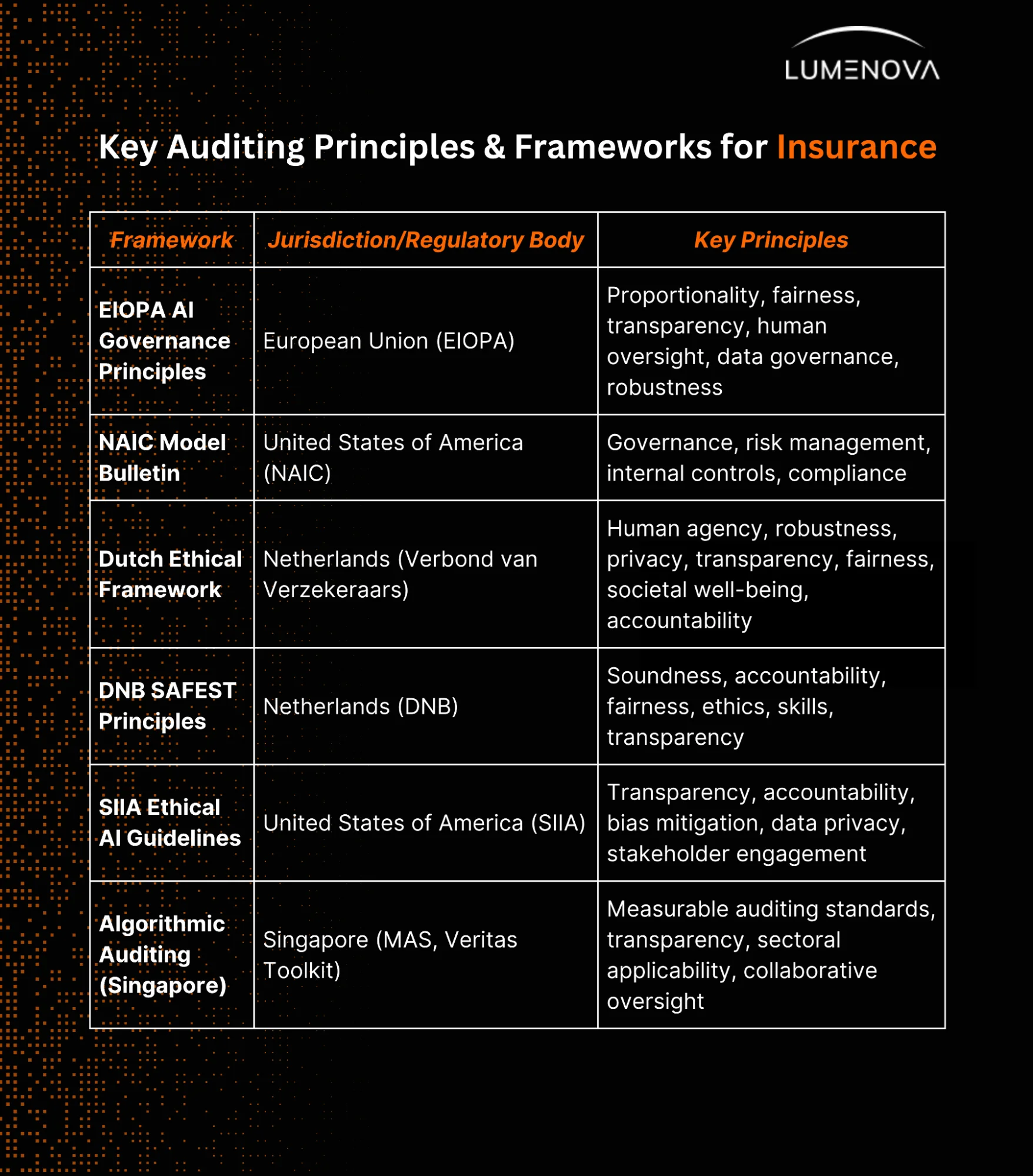

Regulatory bodies such as the European Insurance and Occupational Pensions Authority (EIOPA), the National Association of Insurance Commissioners (NAIC), and the Monetary Authority of Singapore (MAS) have promulgated guidelines emphasizing fairness, explainability, and data privacy. Compliance with these frameworks is non-negotiable.

EIOPA’s AI Governance Principles, the Self Insurance Institute of America (SIIA)’s Ethical AI Guidelines, and the NAIC Model Bulletin all converge on core themes: proportionality of AI governance measures to the impact of the AI use case, fairness, transparency, human oversight, robust data governance, and ongoing monitoring. As AI adoption accelerates, insurers are expected to integrate these principles into their governance structures, supported by internal audits and sector-specific best practices, to ensure ethical, trustworthy, and compliant AI systems.

Key AI Audit Scenarios in Insurance

Claims Algorithms

→ Scenario 1: Bias detection in automated claims approval

Auditors must systematically evaluate claims algorithms for disparate impacts across demographic groups. This includes statistical analysis of approval rates, payout amounts, and denial justifications, stratified by protected characteristics such as age, gender, and ethnicity. Transparency in decision logic and documentation of override mechanisms is critical for regulatory scrutiny.
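
As a rough illustration of the stratified approval-rate analysis described above, the sketch below computes per-group approval rates and the ratio of each rate to the highest one. The toy data, the group labels, and the 0.8 cut-off (borrowed from the "four-fifths" rule of thumb used in other domains) are assumptions for illustration, not a regulatory threshold for insurance.

```python
from collections import defaultdict

def approval_rates(claims):
    """Approval rate per group from (group, approved) records."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in claims:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratios(rates):
    """Ratio of each group's approval rate to the highest group rate."""
    reference = max(rates.values())
    if reference == 0:
        raise ValueError("no approvals in any group; ratios are undefined")
    return {g: r / reference for g, r in rates.items()}

# Hypothetical toy data: group A approves 80 of 100 claims, group B 60 of 100.
claims = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 60 + [("B", False)] * 40
)
rates = approval_rates(claims)
ratios = disparate_impact_ratios(rates)

# Assumed illustrative threshold; an audit team would set its own.
flagged = [g for g, r in ratios.items() if r < 0.8]
print(rates, ratios, flagged)
```

A flagged group is a prompt for further investigation (payout amounts, denial justifications, legitimate risk factors), not proof of unfair treatment on its own.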

→ Scenario 2: Fraud detection model drift

Fraud detection models are susceptible to performance degradation as fraud tactics evolve, a phenomenon known as concept drift. Continuous monitoring and periodic revalidation against novel fraud patterns are required. Auditors should employ adversarial testing and synthetic data generation to assess model robustness.
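
One common monitoring signal is the Population Stability Index (PSI), which compares the distribution of model scores at validation time with a recent window. The sketch below is a minimal version that assumes scores lie in [0, 1] and uses equal-width bins. The 0.2 alert level is a widely quoted rule of thumb rather than a standard, and the sample data is invented. Note that PSI detects a shift in score distribution, which can prompt revalidation; confirming concept drift proper still requires labeled outcomes.

```python
import math

def population_stability_index(baseline, recent, bins=10, eps=1e-6):
    """PSI between two samples of model scores assumed to lie in [0, 1]."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")

    def bin_shares(scores):
        counts = [0] * bins
        for s in scores:
            # Clamp so that a score of exactly 1.0 falls in the last bin.
            counts[min(int(s * bins), bins - 1)] += 1
        # Floor empty bins at eps to keep the logarithm finite.
        return [max(c / len(scores), eps) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

# Hypothetical samples: uniform scores at validation, scores piled into
# the upper half of the range in the recent window.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(baseline_scores, baseline_scores)
psi_shifted = population_stability_index(baseline_scores, recent_scores)
needs_revalidation = psi_shifted > 0.2  # assumed rule-of-thumb alert level
print(psi_same, psi_shifted, needs_revalidation)
```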

Underwriting Models

→ Scenario 3: Fairness in risk scoring

Underwriting models must be audited for unjustified premium differentials among protected groups. This involves reviewing data provenance, feature selection methodologies, and the potential for proxy variables that encode prohibited biases. Statistical parity, equal opportunity, and disparate impact analyses are recommended.
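
Two of the metrics named above can be sketched in a few lines. Here, statistical parity difference is the gap in favorable-decision rates between two groups, and equal opportunity difference is the gap in true positive rates, that is, the favorable-decision rate among applicants who in fact turned out to be low risk. The record layout, the group names, and the toy data are assumptions for illustration.

```python
def rate(values):
    """Share of 1s in a list of 0/1 values (NaN for an empty list)."""
    return sum(values) / len(values) if values else float("nan")

def fairness_gaps(records, group_a, group_b):
    """records: (group, truth, pred) with truth/pred = 1 for 'low risk'."""
    def selected(group):
        return [pred for g, _, pred in records if g == group]

    def selected_among_true_low_risk(group):
        return [pred for g, truth, pred in records if g == group and truth == 1]

    return {
        "statistical_parity_difference":
            rate(selected(group_a)) - rate(selected(group_b)),
        "equal_opportunity_difference":
            rate(selected_among_true_low_risk(group_a))
            - rate(selected_among_true_low_risk(group_b)),
    }

# Hypothetical toy data, 10 applicants per group.
records = (
    [("A", 1, 1)] * 6 + [("A", 1, 0)] * 2 + [("A", 0, 1)] * 1 + [("A", 0, 0)] * 1
    + [("B", 1, 1)] * 4 + [("B", 1, 0)] * 4 + [("B", 0, 0)] * 2
)
gaps = fairness_gaps(records, "A", "B")
print(gaps)
```

Values near zero suggest similar treatment on that metric. The two metrics can disagree, so which one an audit prioritizes is a policy choice that should be documented.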

→ Scenario 4: Explainability in automated underwriting

Explainability is a cornerstone of responsible AI. Underwriting decisions must be interpretable to both regulators and policyholders. Scenario-based testing should include edge cases and exceptions to ensure that automated decisions can be justified and, if necessary, overridden by human underwriters.
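
For a simple linear scoring model, one way to make a decision interpretable is to report each feature's contribution relative to a baseline applicant, sorted by impact. The sketch below assumes such a linear model. The feature names, weights, and baseline values are hypothetical, and more complex models would need a dedicated attribution method instead.

```python
def explain_linear_score(weights, baseline, applicant):
    """Per-feature contribution to the score, relative to a baseline applicant.

    For a linear model the contributions sum exactly to
    score(applicant) - score(baseline).
    """
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    # Largest absolute impact first, so the top entries act as reason codes.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical model and applicants.
weights = {"prior_claims": 0.5, "vehicle_age_years": 0.25, "annual_mileage_k": 0.125}
baseline = {"prior_claims": 0, "vehicle_age_years": 4, "annual_mileage_k": 8}
applicant = {"prior_claims": 2, "vehicle_age_years": 10, "annual_mileage_k": 10}

reasons = explain_linear_score(weights, baseline, applicant)
total_shift = sum(value for _, value in reasons)
print(reasons, total_shift)
```

An auditor can use output like this in edge-case tests to check that the stated reasons match what a human underwriter would consider justifiable.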

Customer Engagement and Personalization

→ Scenario 5: Data privacy and consent management

The use of personal and behavioral data in marketing or policy recommendations requires rigorous auditing for compliance with data protection regulations (e.g., GDPR, CCPA). Auditors should assess consent management workflows, data minimization practices, and the traceability of data usage across AI-driven processes.
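
Traceability of data usage can be tested by reconciling a log of AI-driven data uses against recorded consents. The sketch below assumes a hypothetical consent registry keyed by customer ID and a usage log with a purpose field. Real systems would also need to handle consent timestamps, withdrawal, and legal bases other than consent.

```python
def audit_usage(consents, usage_log):
    """Return usage-log entries whose purpose the customer has not consented to.

    consents:  {customer_id: set of consented purposes}  (hypothetical layout)
    usage_log: list of {"customer_id", "purpose", "system"} dicts
    """
    findings = []
    for entry in usage_log:
        allowed = consents.get(entry["customer_id"], set())
        if entry["purpose"] not in allowed:
            findings.append(entry)
    return findings

# Hypothetical registry and log.
consents = {
    "c-001": {"claims_processing", "marketing"},
    "c-002": {"claims_processing"},
}
usage_log = [
    {"customer_id": "c-001", "purpose": "marketing", "system": "recommender"},
    {"customer_id": "c-002", "purpose": "marketing", "system": "recommender"},
    {"customer_id": "c-003", "purpose": "claims_processing", "system": "triage"},
]
findings = audit_usage(consents, usage_log)
print(findings)
```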

→ Scenario 6: Transparency in chatbots and virtual agents

AI-powered customer engagement tools must be monitored for transparency and accuracy. Auditing should include scenario testing for potential misinformation, escalation protocols for complex queries, and the clarity of AI-human handoffs.

Model Governance and Lifecycle Management

→ Scenario 7: Model validation and performance monitoring

Comprehensive model governance entails regular scenario-based stress testing, particularly under adverse conditions such as economic downturns or catastrophic events. Auditors should document model updates, retraining cycles, and performance metrics, ensuring traceability and accountability throughout the model lifecycle.

Best Practices for Scenario-Based AI Auditing

To realize the full value of AI in insurance, rather than celebrating early wins prematurely, insurers must systematically embed scenario-based auditing into their operational and strategic frameworks. This approach not only ensures compliance and risk mitigation but also drives sustainable value creation across core functions. Drawing on recent industry research and regulatory guidance, several best practices and actionable steps emerge for effective scenario-based AI auditing.

Align auditing with strategic value and real-world impact

AI’s value in insurance is not evenly distributed across all functions; most measurable gains are concentrated in areas such as claims automation, fraud detection, and personalized customer engagement. Auditing efforts should therefore prioritize high-value, high-risk use cases, ensuring that AI delivers real business outcomes, such as efficiency gains, improved accuracy, and enhanced customer experience, while maintaining regulatory compliance and ethical standards. This means moving beyond “showcase” projects and embedding auditing as a continuous, organization-wide discipline.

Adopt a structured, multi-phase audit approach

Leading institutes recommend a phased approach to AI auditing in insurance, comprising the following phases:

- Enable: Establish a clear AI strategy, appoint responsible leaders, and identify priority use cases with significant business and compliance impact. Build foundational AI literacy and ensure regulatory and ethical guidelines are integrated from the outset.

- Embed: Integrate scenario-based auditing into business processes, supported by robust data governance, change management, and continuous monitoring. This phase emphasizes trust, transparency, and security in day-to-day AI operations.

- Evolve: Regularly update audit scenarios to reflect emerging risks, new data sources, and evolving regulatory requirements. Foster a culture of innovation and continuous improvement, leveraging lessons learned to refine both AI models and audit protocols.

Leverage comprehensive audit checklists and frameworks

Some regulatory bodies, such as the European Data Protection Board (EDPB), have published detailed checklists to guide AI audits. These frameworks recommend evaluating AI systems not only on technical grounds but also in terms of social impact, user participation, and recourse mechanisms. Key audit dimensions include:

- Bias and fairness assessment

- Data integrity and quality

- Explainability and transparency

- Security and privacy controls

- Societal and ethical impact

- User engagement and recourse

Integrate human oversight and AI-first leadership

While automation enhances audit efficiency, human judgment remains crucial for interpreting nuanced scenarios and making informed decisions, especially in ambiguous or high-impact cases. AI-first leadership, at both senior and mid levels, ensures that auditing is not a one-off compliance exercise but an ongoing strategic priority, with leaders championing responsible AI adoption and continuous upskilling.

Establish safeguards and controls for AI outputs

Best practices dictate implementing layered controls to validate AI-generated outputs, especially when these inform critical business or regulatory decisions. This may include automated cross-checks, manual expert reviews, and the use of independent validation tools to flag anomalies or inconsistencies.
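
The layering described above can be pictured as a small routing function: automated cross-checks run first, and outputs that pass but exceed a materiality threshold go to a human reviewer. The sketch below applies this to an AI-proposed claim payout. The specific checks, the threshold, and the status labels are assumptions chosen for illustration.

```python
def route_payout(payout, claimed_amount, policy_limit, review_threshold):
    """Route an AI-proposed payout through layered controls.

    Returns (status, reasons) where status is one of
    "blocked", "manual_review", or "auto_approve".
    """
    # Layer 1: automated consistency checks.
    issues = []
    if payout < 0:
        issues.append("negative payout")
    if payout > claimed_amount:
        issues.append("payout exceeds claimed amount")
    if payout > policy_limit:
        issues.append("payout exceeds policy limit")
    if issues:
        return "blocked", issues

    # Layer 2: high-value decisions go to an expert reviewer.
    if payout >= review_threshold:
        return "manual_review", ["high-value decision"]

    # Layer 3: everything else proceeds, subject to later sampling audits.
    return "auto_approve", []

# Hypothetical examples.
small = route_payout(1_200, claimed_amount=1_500, policy_limit=50_000, review_threshold=10_000)
large = route_payout(25_000, claimed_amount=30_000, policy_limit=50_000, review_threshold=10_000)
bad = route_payout(60_000, claimed_amount=55_000, policy_limit=50_000, review_threshold=10_000)
print(small, large, bad)
```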

Challenges and Tools to Address Them

One of the foremost challenges in scenario-based AI auditing is the dynamic nature of AI capabilities. AI models for insurance are evolving rapidly, with new algorithms and data sources, such as telematics, IoT devices, and unstructured data, being integrated into core processes. This constant evolution requires audit methodologies that are not only robust but also highly adaptive, capable of responding to emerging risks, shifting regulatory requirements, and novel use cases. Static or infrequent audits are insufficient; instead, insurers must implement ongoing, iterative audit processes that can keep pace with technological change.

Another critical challenge lies in striking a balance between automation and human oversight. Automated audit tools can process vast datasets, flag anomalies, and run scenario simulations at scale, greatly enhancing efficiency and coverage. However, the complexity and contextual nuances of insurance operations mean that human expertise remains indispensable. Experienced auditors and domain specialists are crucial for interpreting ambiguous findings, making informed judgments in complex cases, and ensuring that ethical considerations are properly addressed. The optimal approach combines the speed and scalability of automation with the discernment and contextual understanding of human reviewers.

The lack of standardization and shared resources also poses a significant hurdle. The insurance industry currently faces a fragmented landscape of audit protocols and scenario libraries, making it difficult to benchmark performance or ensure regulatory harmonization across markets. Developing industry-wide scenario libraries and standardized audit frameworks is crucial for establishing consistent best practices, facilitating regulatory compliance, and enabling meaningful comparisons across organizations.

To address these challenges, insurers are increasingly turning to advanced technological solutions. For organizations seeking a holistic approach, the Lumenova AI platform delivers integrated governance, risk management, and scenario-based audit functionalities tailored specifically to the insurance sector. Such platforms enable insurers to operationalize best practices, maintain regulatory alignment, and build resilient, trustworthy AI systems.

Conclusion

Scenario-based AI auditing is indispensable for ensuring the robustness, fairness, and trustworthiness of AI systems in insurance. As the industry accelerates its adoption of AI, insurers must proactively develop, implement, and continuously refine scenario-based audit programs, guided by evolving regulatory frameworks and best practices. By leveraging advanced audit tools and fostering a culture of responsible AI governance, insurers can not only achieve regulatory compliance but also enhance operational resilience and stakeholder confidence.

To explore how scenario-based AI audit can strengthen your insurance operations, we invite you to request a demo of Lumenova AI – the all-in-one platform for AI governance and risk management in insurance.