July 22, 2025

Explainable AI for Executives: Making the Black Box Accountable


In most organizations, AI no longer lives in labs or on pitch decks. It lives in production, embedded in underwriting workflows, pricing engines, hiring systems, and customer segmentation tools. And while AI may be invisible to the end user, its influence is anything but subtle.

The shift has already happened: AI isn’t just assisting decisions; it’s making them.

And now, a harder question is surfacing across executive teams:

Can we explain what our AI is doing and why?

Not just to regulators. But to our customers, partners, employees, and increasingly, to our boards.

That’s the real promise of explainability. Not as a technical feature, but as a governance foundation. It’s how leadership can validate that their AI aligns with policy, law, and brand values before the headlines, before the audits, and before the harm.

At Lumenova AI, we work with enterprises that have already embraced AI’s upside and now need a clear-eyed strategy for managing its risks. This article offers a grounded look at explainability as an operational asset: how to build it, how to scale it, and how to use it to future-proof your AI governance.

Because the organizations that can explain their systems are the ones that will be trusted to run them.

Why Explainability Has Become a Board-Level Issue

Executives no longer have the luxury of delegating explainability to the data science team alone. Here’s why:

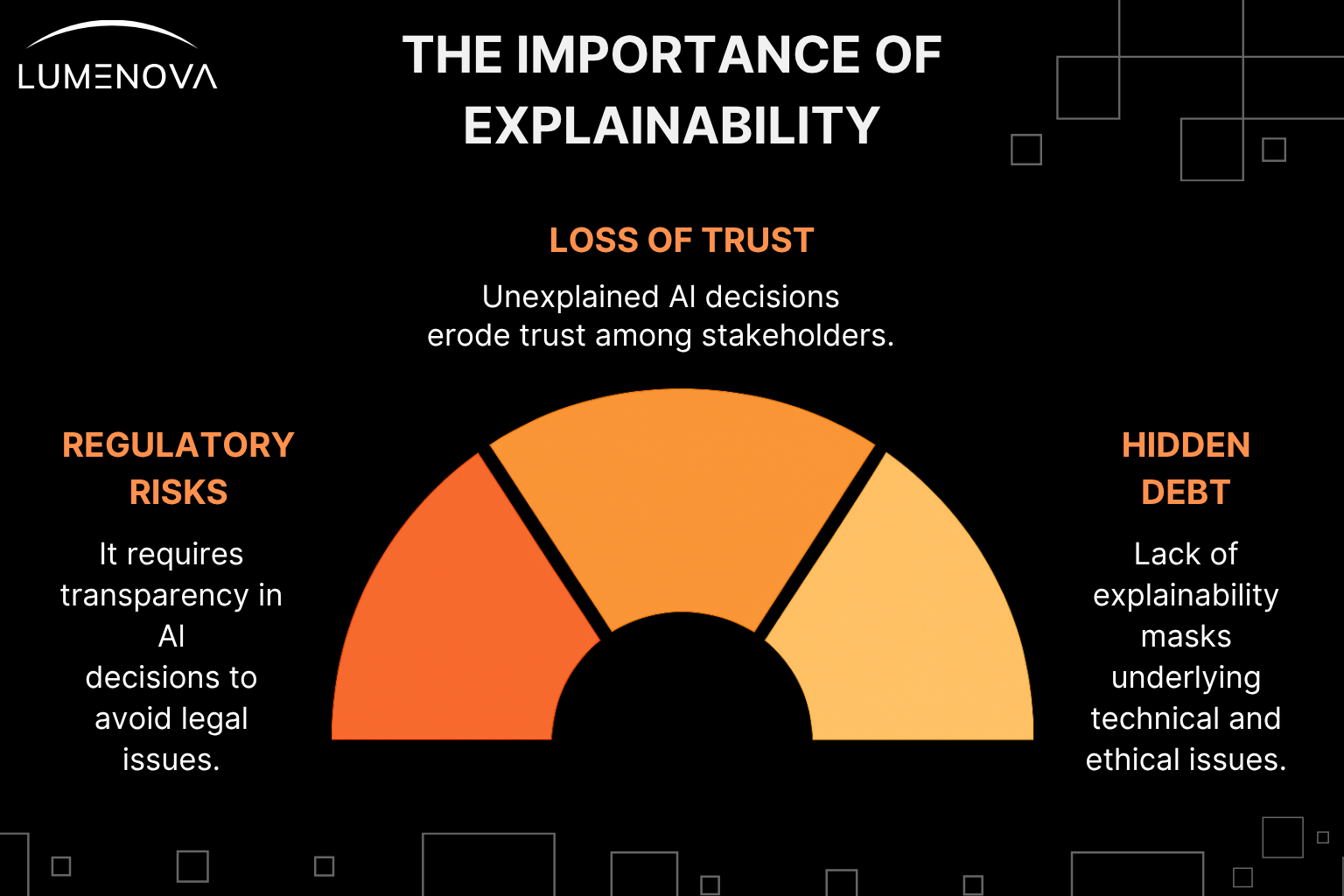

1. Regulatory Risk

New regulations increasingly require organizations to provide meaningful explanations for automated decisions, especially when those decisions affect individuals’ rights, finances, or employment.

- The EU AI Act explicitly requires high-risk systems to be “sufficiently transparent.”

- NYC Local Law 144 requires independent bias audits and candidate notices for automated employment decision tools.

- California and Colorado are advancing AI laws with provisions for transparency and auditability.

- Financial regulators (e.g., the CFPB and the SEC) are paying close attention to AI in credit, lending, and trading.

If your organization can’t explain how its AI works, you may soon be out of compliance (even if the model is accurate).

For a broader perspective on how explainability intersects with evolving AI laws and board-level risk, you may want to explore: Why Explainable AI in Banking and Finance Is Critical for Compliance.

2. Loss of Trust

When AI decisions go unexplained, trust erodes among customers, employees, investors, and partners.

- 75% of consumers say they’re more likely to trust AI if they understand how it works (IBM).

- Financial institutions are seeing explainability become a prerequisite for onboarding AI vendors.

- In hiring and HR, unexplained algorithmic decisions are leading to litigation and public backlash.

3. Hidden Technical and Ethical Debt

Lack of explainability often masks deeper issues: biased data, overfitting, security risks, or models making decisions for the wrong reasons (e.g., treating zip code as a proxy for creditworthiness).

Explainability is how leadership shines light on these blind spots, before regulators or customers do.

What Exactly Is Explainable AI (XAI)?

Explainable AI (XAI) refers to the techniques and processes that make AI decision-making understandable, especially to non-technical users. In practice, explainability helps answer these questions:

- Why did the AI make this decision?

- What data did it rely on?

- Would changing that input have changed the outcome?

- Can we justify the result to regulators or stakeholders?
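The third question above is a counterfactual one. A minimal sketch of how it could be answered, assuming a hypothetical logistic scoring model (the weights, feature names, and 0.5 approval threshold are invented for illustration and do not come from any real system):

```python
import math

# Hypothetical model: weights, bias, and threshold are illustrative assumptions.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.15}
BIAS = -1.9
THRESHOLD = 0.5

def approve_probability(applicant):
    """Logistic score: weighted sum of the inputs passed through a sigmoid."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

def counterfactual(applicant, feature, step, max_steps=100):
    """Move one feature in increments of `step` until the decision flips.

    Returns the first value of `feature` that yields approval, or None
    if no such value is found within `max_steps` increments.
    """
    candidate = dict(applicant)
    for _ in range(max_steps):
        if approve_probability(candidate) >= THRESHOLD:
            return candidate[feature]
        candidate[feature] += step
    return None

applicant = {"income_k": 40.0, "debt_ratio": 0.5, "years_employed": 2.0}
needed_income = counterfactual(applicant, "income_k", step=5.0)
print(f"Approval probability now: {approve_probability(applicant):.2f}")
print(f"Income (in thousands) at which the decision flips: {needed_income}")
```

The output of a search like this is what turns "you were declined" into "you would have been approved at this income level," which is the form of explanation a customer or regulator can act on.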

Explainability doesn’t require giving up performance. It means choosing the right tools and models to balance accuracy, transparency, and risk.

To see how other leaders are operationalizing AI transparency in highly regulated environments, check out Weighing the Benefits and Risks of Artificial Intelligence in Finance.

Models and Methods: The Two Paths to XAI

There are two main ways to achieve XAI, and most enterprises will need a mix of both.

1. Use Interpretable Models Where Possible

These include models like:

- Decision trees

- Logistic regression

- Rule-based systems

These models are simple by design. You can trace how a prediction is made, step by step. They’re ideal for domains where accountability is paramount, such as healthcare eligibility, compliance checks, or employee screening.
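To make "traceable step by step" concrete, here is a small sketch of a rule-based screen that returns its decision together with every test it applied. The feature names and cutoffs are hypothetical and chosen only for illustration:

```python
# Hypothetical screening rules; cutoffs are illustrative, not real policy.
def screen(applicant):
    """Return (decision, trace), where trace lists each test applied, in order."""
    trace = []
    if applicant["debt_ratio"] > 0.45:
        trace.append("debt_ratio > 0.45")
        return "refer to manual review", trace
    trace.append("debt_ratio <= 0.45")
    if applicant["years_employed"] < 1:
        trace.append("years_employed < 1")
        return "decline", trace
    trace.append("years_employed >= 1")
    return "approve", trace

decision, trace = screen({"debt_ratio": 0.30, "years_employed": 3})
print(decision)  # approve
print(trace)     # ['debt_ratio <= 0.45', 'years_employed >= 1']
```

Because the trace is the model, there is nothing to approximate: the explanation given to an auditor is exactly the logic that produced the decision.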

2. Use Post-Hoc Explanation Tools for Complex Models

Modern AI often relies on more complex systems:

- Random forests

- Gradient boosting

- Deep neural networks

- Large language models (LLMs)

These aren’t easily interpretable, but you can analyze them using tools like:

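- SHAP (Shapley values): Attributes each prediction to its input features, showing which inputs mattered most and in which direction.

- LIME: Explains an individual prediction by fitting a simple surrogate model to the complex model’s behavior near that input.

- Counterfactuals: Show the smallest change to the inputs that would have changed the outcome.

- Partial dependence plots: Show how the average prediction changes as one feature varies.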

The key is to integrate these tools early in the development lifecycle, not after deployment.
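For readers who want to see what a Shapley attribution actually computes, the following is a minimal sketch that enumerates every feature coalition exactly. It assumes a hypothetical two-feature scoring function and a single all-zero baseline; production tools typically approximate this calculation, since exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical scoring function with an interaction term (illustrative only).
    return 2.0 * x["income"] + 1.0 * x["tenure"] + 0.5 * x["income"] * x["tenure"]

def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    features = list(instance)
    n = len(features)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return model(x)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_f = value(set(subset) | {f})
                without_f = value(set(subset))
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi

instance = {"income": 3.0, "tenure": 2.0}
baseline = {"income": 0.0, "tenure": 0.0}
phi = shapley_values(model, instance, baseline)
print(phi)  # {'income': 7.5, 'tenure': 3.5}
```

The two attributions sum to 11.0, which is the difference between the model's output for this instance and for the baseline. That additivity is what lets an attribution be read as "how much each input moved this decision."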

Want to go deeper into how interpretable models and post-hoc tools like SHAP are being used in the field? Read AI in Finance: The Promise and Risks of RAG and CAG vs RAG: Which One Drives Better AI Performance?

What Explainability Looks Like in Practice

Financial Services

A bank uses a credit risk model powered by gradient boosting. SHAP analysis reveals that the model heavily penalizes applicants for employment gaps, unintentionally disadvantaging caregivers. The bank adjusts its features and documents the model’s explanations for internal auditors and external regulators.

Healthcare

An AI model is used to prioritize patient intake. Clinicians are skeptical. Using SHAP and counterfactuals, the health system provides explainable dashboards that show which vitals and patient history data drive decisions. Clinician trust improves. So does patient care.

Insurance

An underwriter needs to understand how an AI model flags certain claims as high risk. Using Lumenova AI’s explainability layer during the system’s development, they see that location data was over-influencing decisions. The feature is re-weighted. Bias risk drops. The model is cleared for production.

Why Implementation Often Fails (And How to Fix It)

The Most Common Pitfalls:

- Explainability is an afterthought, added only after regulators ask questions.

- Different teams use different definitions. Legal, compliance, and data science aren’t aligned.

- Dashboards exist, but no one uses them because they don’t answer business-relevant questions.

- Models are explained in code, not language, making them inaccessible to decision-makers.

What Strong Implementation Looks Like:

- A defined explainability policy, aligned with regulatory and business priorities.

- Tooling that translates model insights into plain language, not just plots.

- Training across teams, especially legal and business units, on how to read and question model outputs.

- Embedded processes, such as mandatory explainability checks during model approval or retraining events.
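The second item above, translating model insights into plain language, can be sketched in a few lines. This is an illustrative assumption about what such tooling might do, not a description of any specific product; the function name and the input format (a mapping of feature names to signed contributions) are hypothetical:

```python
def explain_in_words(decision, contributions, top_n=2):
    """Turn signed feature contributions into one sentence naming the top drivers."""
    # Rank features by the size of their effect, regardless of direction.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for name, value in ranked[:top_n]:
        direction = "raised" if value > 0 else "lowered"
        parts.append(f"{name.replace('_', ' ')} {direction} the score")
    return f"Decision: {decision}. Main drivers: " + "; ".join(parts) + "."

contributions = {"employment_gap": -1.2, "income": 0.8, "tenure": 0.1}
sentence = explain_in_words("declined", contributions)
print(sentence)
# Decision: declined. Main drivers: employment gap lowered the score; income raised the score.
```

A sentence like this is what a compliance officer or business owner can question directly, which a feature-importance plot rarely invites.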

If you’re looking to align technical teams with legal and business leadership on responsible AI practices, Overreliance on AI: Addressing Automation Bias Today offers a helpful framework.

How We Help Executives Operationalize Explainability

At Lumenova AI, explainability is a core feature of our Responsible AI platform, not a plugin.

We help you:

- Automatically generate explanations at both global (model-level) and local (decision-level) resolution.

- Create auditable reports for compliance reviews, stakeholder communications, and internal oversight.

- Monitor explanations over time so that if a model starts drifting or changing behavior, you can see it.
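As a rough illustration of the last point, monitoring explanations can be as simple as comparing how much each feature contributes in a reference period against a recent one. The sketch below assumes per-decision attributions are already available as dictionaries; the function names, the sample numbers, and the 0.10 tolerance are hypothetical choices, not a description of Lumenova AI's implementation:

```python
def attribution_share(rows):
    """Share of total absolute attribution carried by each feature.

    Assumes `rows` is non-empty and has at least one non-zero attribution.
    """
    totals = {}
    for row in rows:
        for feature, value in row.items():
            totals[feature] = totals.get(feature, 0.0) + abs(value)
    grand_total = sum(totals.values())
    return {feature: total / grand_total for feature, total in totals.items()}

def drifted_features(reference_rows, current_rows, tolerance=0.10):
    """Features whose attribution share moved by more than `tolerance`."""
    ref = attribution_share(reference_rows)
    cur = attribution_share(current_rows)
    return sorted(f for f in ref if abs(cur.get(f, 0.0) - ref[f]) > tolerance)

# Illustrative per-decision attributions from two time windows.
reference = [
    {"income": 0.6, "location": 0.1, "tenure": 0.3},
    {"income": -0.5, "location": 0.2, "tenure": 0.3},
]
current = [
    {"income": 0.2, "location": 0.6, "tenure": 0.3},
    {"income": -0.2, "location": 0.6, "tenure": 0.3},
]
flagged = drifted_features(reference, current)
print(flagged)  # ['income', 'location']
```

Here the model's accuracy could be unchanged while its reasoning has shifted toward location, which is the kind of change an accuracy-only monitor would miss.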

We don’t just show you what the model did; we help you understand why it matters, who’s affected, and what to do next.

Final Reflections: Explainability Is Leadership

Explainability is not a “data science feature.” It’s a leadership responsibility.

It’s how you demonstrate accountability, protect the organization from blind risk, and align AI use with your brand values and public expectations.

We believe the companies that will succeed with AI aren’t the ones that build the most complex models. They’re the ones that can justify and explain their decisions.

That’s what builds trust. And trust is what turns AI from a liability into a long-term asset.

Want to see how explainability can be integrated into your AI governance strategy? Book a demo to explore how Lumenova AI makes transparency operational, without slowing innovation.

Executive Discussion Starters

- Which of our AI models directly affect customers, employees, or compliance exposure?

- Can we explain how those models make decisions to a regulator, board, or customer?

- Do our teams have a shared understanding of what explainability means and how it’s implemented?

- Is our explainability capability built into our workflows or added only when issues arise?

To understand how explainability, ethics, and trust converge in AI governance, explore Ethics of Generative AI in Finance.