November 25, 2025

How to Choose the Right AI Monitoring Tool for Your Enterprise


We get it. Choosing any platform, app, or tool has become incredibly difficult. There are so many options that even the most experienced teams can feel overwhelmed. AI monitoring tools are no different. From traditional ML dashboards to new GenAI observability platforms, everything starts to look similar when you are comparing features on paper.

That is exactly why we want to make things easier for you and your teams. In this quick guide, we walk you through what truly matters when evaluating AI monitoring solutions, such as clarity, transparency, and risk awareness, and help you understand which type of tool is more likely to support your enterprise, especially if you operate in a regulated or high-risk environment.

Before we get into features, let’s address a common misunderstanding. Most teams still assume that monitoring AI models is the same as monitoring any other software system. It is not, and once you see the difference, the tool selection process becomes much clearer.

Insights: For an in-depth analysis of the features you need in an AI governance platform, please check our article.

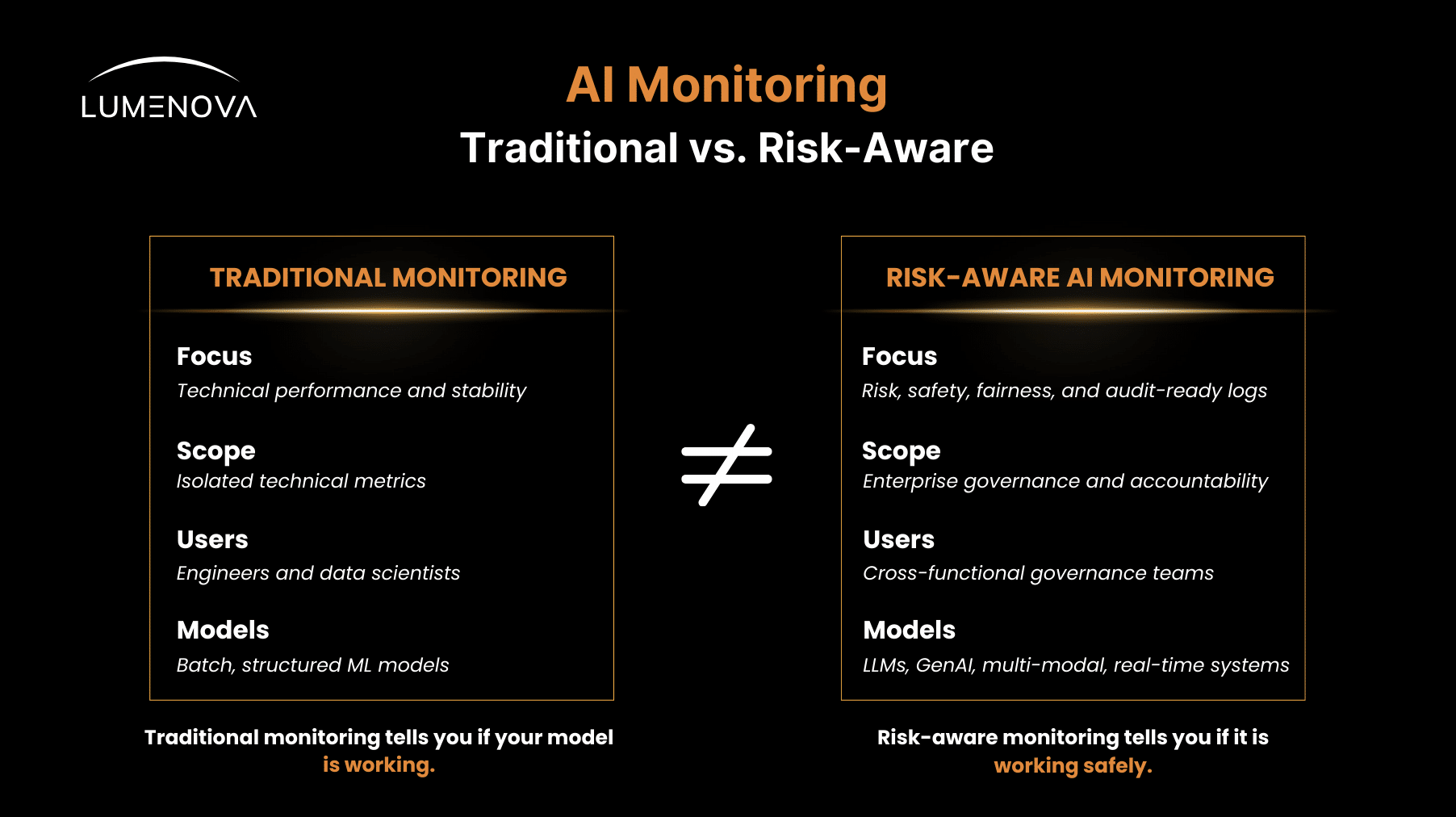

Traditional Model Monitoring ≠ Risk-Aware AI Monitoring

Traditional monitoring tools were designed for a world where models made predictions on structured datasets and stayed relatively stable over time. Those tools still have value, but they were never meant to support the complexity of today’s AI systems, particularly generative models, agentic frameworks, or multi-modal pipelines.

To make this distinction clear, here is how the two approaches compare:

Traditional monitoring answers the question: Is the model working as expected?

Risk-aware monitoring answers a much more critical one: Is the model working safely, fairly, and in a way we can defend in front of regulators or customers?

Many general-purpose ML monitoring tools stop at performance metrics, which means they fall short the moment your organization needs risk governance, regulatory reporting, or external validation. If accountability matters to your team, this gap becomes impossible to ignore.

This is exactly where Lumenova AI offers an advantage. Let’s break down the capabilities that actually matter for enterprises.

Key Features That Matter for Large Enterprises Using AI

1. Model Drift and Concept Drift Detection

Even the best AI model will change over time. Sometimes the data pipeline shifts quietly, user behavior evolves, or external events disrupt patterns overnight. Drift is a reality, and every enterprise needs a reliable way to catch it early.

That is why we suggest looking for a monitoring tool that can:

- Detect data drift, when input data starts to differ from the training set.

- Detect concept drift, when real-world relationships change.

- Prioritize drift findings based on risk impact, not just technical variance.

This helps both technical and non-technical teams stay aligned when a model must be reviewed or retrained.
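To make data drift concrete, here is a minimal sketch of one common drift statistic, the Population Stability Index (PSI), computed for a single numeric feature. It is an illustration under our own assumptions, not a description of how any particular platform works: the function name, the equal-width binning, and the small floor used for empty bins are all choices made for this example. It covers data drift only; concept drift usually requires comparing predictions against ground-truth outcomes.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference sample (e.g. training data) and a live sample.

    Hypothetical helper for illustration; bins are equal-width and derived
    from the reference sample only.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Live values outside the reference range fall into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty (assumed value).
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_shares(expected)
    a = bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently quoted rule of thumb treats a PSI below 0.1 as little change and above 0.25 as a significant shift, but those cut-offs are conventions, and a risk-aware tool would weigh them against the impact of the affected model.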

2. Real-Time Alerts

In an enterprise environment, delayed alerts create real operational risk. A model might suddenly produce harmful or non-compliant outputs: denying a loan application for the wrong reasons, generating sensitive information in a customer conversation, leaking confidential data, or giving inaccurate medical guidance. When that happens, you cannot afford to uncover the issue weeks later in a dashboard.

The right platform should immediately notify the right person with:

- The context surrounding the issue

- The affected model and version

- A clear explanation of why the alert was triggered

- Suggested next steps if available

Real-time visibility is essential when your AI models influence decisions, customer interactions, or regulated workflows.
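As a rough illustration of what such a notification could carry, the sketch below models an alert as a small data structure with the four elements listed above. The class name `ModelAlert` and its field names are hypothetical and chosen for this example; real platforms define their own schemas.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelAlert:
    """Hypothetical alert payload; field names are assumptions for illustration."""

    model_name: str
    model_version: str
    reason: str  # plain-language explanation of why the alert was triggered
    context: dict  # details surrounding the issue (segment, metric values, ...)
    suggested_next_steps: list = field(default_factory=list)
    triggered_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Serialize so the alert can be forwarded to email, chat, or ticketing.
        return json.dumps(asdict(self), indent=2)
```

Keeping the explanation and next steps inside the payload means the person who receives the alert does not have to open a dashboard to understand what happened.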

3. Bias and Fairness Monitoring

Fairness is no longer something you check once at the end of a model’s development. It is an ongoing responsibility.

A monitoring tool should help you evaluate:

- Differential performance across demographic groups

- Shifts in fairness metrics over time

- Patterns that could signal discriminatory behavior

This is particularly important in industries such as finance, insurance, HR technology, and healthcare, as decisions in these sectors directly impact people’s livelihoods and access to essential services. A small shift in model behavior can unintentionally disadvantage certain groups, even if the model was fair on day one.

For example, a lending model that drifts could start approving loans unevenly across demographic groups. A health triage system might begin recommending different care levels for similar symptoms. An HR screening tool could filter out qualified applicants from specific backgrounds due to subtle data changes. These issues are not only harmful to individuals, they also create legal exposure, regulatory scrutiny, and reputational damage for the organization.

This is why fairness monitoring should be a fundamental part of responsible AI governance.
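One simple way to track the lending example above is to compare approval rates across groups and watch the gap between the highest and lowest rate over time. The sketch below shows that calculation, often called the demographic parity difference. The function names and the `(group, approved)` record layout are assumptions for this example, and a single metric like this is a starting point rather than a full fairness assessment.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(records: Iterable[Tuple[str, bool]]) -> float:
    """Difference between the highest and lowest group approval rate."""
    rates = selection_rates(list(records))
    return max(rates.values()) - min(rates.values())
```

Computing the gap on a rolling window (for example, weekly) makes it possible to see whether a model that was balanced at launch is drifting apart across groups.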

4. Prompt and Output Logging for LLMs

Most traditional ML monitoring systems were never built for LLMs or GenAI. They struggle with unstructured content, long text, images, or multi-step agent actions.

You want a platform that captures:

- Every user prompt

- Every generated output

- Metadata such as user ID, timestamp, and model version

- Intermediate system messages if available

- Reruns or fallback actions

Without this type of logging, you cannot perform investigations, conduct audits, or understand how your generative model handled a specific case.
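To show what a single log entry might look like, here is a minimal sketch that builds one record per LLM interaction and serializes it as a JSON line. The function names and field names are hypothetical and chosen for this example; an actual platform would add its own fields, access controls, and redaction rules for sensitive content.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import List, Optional


def build_log_record(
    user_id: str,
    model_version: str,
    prompt: str,
    output: str,
    system_messages: Optional[List[str]] = None,
    is_rerun: bool = False,
) -> dict:
    """Assemble one audit record for a prompt/output pair (illustrative schema)."""
    return {
        "record_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model_version": model_version,
        "prompt": prompt,
        "output": output,
        "system_messages": system_messages or [],
        "is_rerun": is_rerun,  # marks reruns or fallback actions
    }


def to_jsonl_line(record: dict) -> str:
    # One JSON object per line keeps logs append-only and easy to search.
    return json.dumps(record, ensure_ascii=False)
```

Each line can then be appended to durable storage, so an investigator can later reconstruct exactly what a user asked and what the model returned.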

Another essential capability in modern monitoring is explainability. Executives must be able to ask, “Why did the model make this decision?” and get a clear answer. For a deeper dive into this topic, take a look at our piece Explainable AI for Executives: Making the Black Box Accountable.

5. Integrated Risk Scoring

This is one of the areas where Lumenova AI shines. Most tools stop at technical metrics, which forces non-technical teams to interpret drift curves, anomaly alerts, or fairness charts without the right context.

Risk scoring solves this problem by translating technical signals into a clear risk level that anyone can understand.

Instead of saying:

“The model experienced a 12.7 percent input distribution shift and a 4.5 percent performance decay.”

You can say:

“This model has moved from a low-risk state to a moderate-risk state because of data drift and declining stability.”

This improves communication with leadership, legal teams, compliance officers, and internal auditors.
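The translation from metrics to a risk level can be sketched in a few lines. The example below is purely illustrative and is not Lumenova AI's scoring method: the signal names, the cut-off percentages, and the "worst signal wins" rule are all assumptions made so that the numbers quoted above map to a moderate-risk result.

```python
from typing import Dict

LEVELS = ["low", "moderate", "high"]

# Hypothetical cut-offs in percent: (moderate_at, high_at) per signal.
THRESHOLDS = {
    "input_shift_pct": (10.0, 25.0),
    "performance_decay_pct": (3.0, 8.0),
}


def signal_level(name: str, value: float) -> int:
    """Return 0 (low), 1 (moderate), or 2 (high) for one signal."""
    moderate_at, high_at = THRESHOLDS[name]
    if value >= high_at:
        return 2
    if value >= moderate_at:
        return 1
    return 0


def risk_summary(signals: Dict[str, float]) -> dict:
    """Overall level is the worst individual signal; drivers explain why."""
    per_signal = {name: signal_level(name, v) for name, v in signals.items()}
    worst = max(per_signal.values())
    drivers = sorted(n for n, lvl in per_signal.items() if lvl == worst and worst > 0)
    return {"level": LEVELS[worst], "drivers": drivers}
```

With these assumed thresholds, `risk_summary({"input_shift_pct": 12.7, "performance_decay_pct": 4.5})` reports a moderate level driven by both signals, which is the kind of statement a compliance officer can act on without reading a drift curve.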

Red Flags: When a Monitoring Tool Isn’t Ready for Regulated AI

If you are comparing vendors, here are the signs that should immediately raise concern. They suggest the tool may have been built for experimentation, not enterprise governance.

1. No Logging of Unstructured Inputs or Outputs

If a monitoring platform cannot capture prompts, generated text, images, or multi-modal data, it is not ready for GenAI governance. Enterprises are moving toward conversational agents, automated workflows, and content generation. You need full visibility into what went in and what came out.

2. No Integration With Risk Frameworks or Reporting Pipelines

Monitoring cannot live in a silo. You should be able to link findings directly to your:

- Internal risk registers

- Compliance dashboards

- Audit preparation processes

- Governance reviews

If the monitoring tool sits on an island, you will spend more time exporting CSV files than managing risk.

3. No Transparency Into How Risk Thresholds or Anomalies Are Calculated

Opaque systems create compliance gaps. If a vendor cannot explain how it calculates anomalies or why an alert was triggered, you will not be able to defend that monitoring strategy in an audit.

Transparency is not a luxury anymore. It is a requirement.
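To illustrate what a transparent calculation looks like, here is a small sketch of an anomaly check that returns its own working alongside the verdict. It uses a plain z-score against a historical baseline, which is only one of many possible methods; the function name, the default threshold of 3.0, and the returned field names are assumptions for this example.

```python
import statistics
from typing import Sequence


def explain_anomaly(
    history: Sequence[float], value: float, z_threshold: float = 3.0
) -> dict:
    """Flag a value as anomalous and report exactly how that was decided."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        raise ValueError("history has no variation; z-score is undefined")
    z = (value - mean) / stdev
    return {
        "is_anomaly": abs(z) > z_threshold,
        "method": "z-score against historical baseline",
        "baseline_mean": mean,
        "baseline_stdev": stdev,
        "z_score": z,
        "threshold": z_threshold,
    }
```

Because the method, baseline, and threshold travel with every result, an auditor can verify why a given alert fired without relying on the vendor's word.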

4. Lack of User Access Tracking or Explainability for Outputs

Enterprises need to know:

- Who used the model

- What they asked

- How the model responded

- Why the model responded that way

Without explainability and user tracking, you cannot investigate incidents or demonstrate accountability.

For more details on how guardrails and platform-level governance operate, see our article Using AI Governance Platforms to Automate Gen AI Guardrails.

Conclusion

AI systems evolve rapidly, and your governance needs must evolve accordingly. Lumenova AI provides a single platform for implementing guardrails across your entire AI landscape, from legacy ML models to LLMs and agentic systems. The goal is simple: deploy AI safely, confidently, and in compliance with every requirement that matters to your organization.

If you are ready to move beyond basic dashboards and adopt a monitoring solution built for regulated, high-stakes AI, Lumenova AI might be the right choice for you. Schedule a personalized demo and let us show you how Lumenova AI turns technical signals into a clear, board-ready risk register that strengthens your entire AI governance program.