AI is moving quickly, but the structures around it, like safety, accountability, and fairness, haven’t caught up in most organizations. That’s why the idea of Responsible AI (RAI) exists. It’s not about slowing down innovation. It’s about making sure the tools we build (and rely on) don’t create problems that slip under the radar and can’t be undone once AI is deployed. Responsible AI principles are essential to guiding the ethical development and use of AI.

This article is for businesses that are serious about using AI responsibly. We’ll define Responsible AI and explain its core principles, then show you how to put them into practice.

What Is Responsible AI?

RAI means building and using AI in ways that are safe, fair, and honest. It’s about making sure these systems help people, and don’t cause unintended harm. To be responsible, an AI system must be governed. It should respect people’s rights, protect their data, avoid unfair treatment, and be easy to check and fix if something goes wrong.

RAI is the bridge for companies to build trust, stay ready for regulations, and avoid costly mistakes. It gives leaders confidence that their AI tools are doing what they’re supposed to do, and doing it the right way.

To learn more about what Responsible AI really means in practice (and why it matters so much in 2025), check out our latest article: What Is Responsible AI (RAI)?

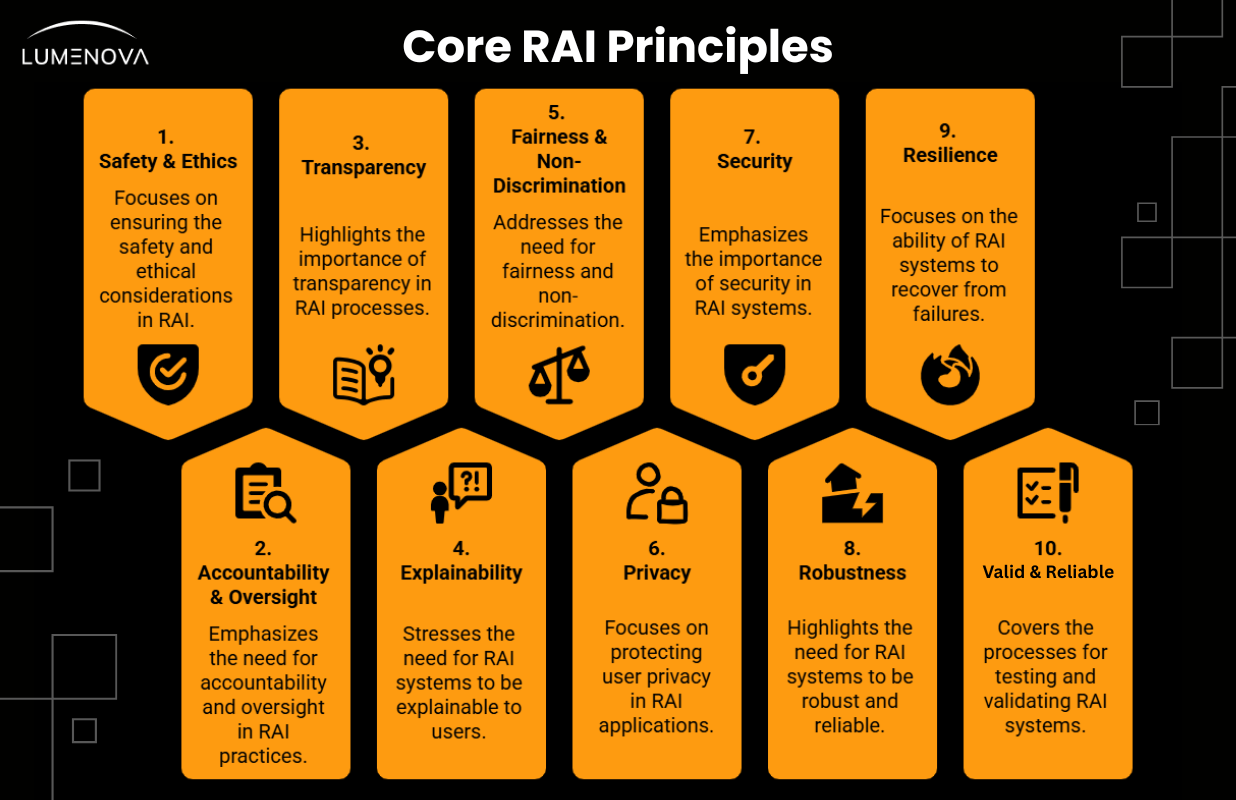

Up next are 10 key principles that form the foundation of Responsible AI, with explanations of what each principle means and why it matters.

The 10 Core Principles of RAI

1. Safety & Ethics

AI should never be used in ways that cause harm, whether intended or unintended. This means ensuring that systems are safe before they are used in real life. For example, if a hospital uses AI to suggest cancer treatments, the system must be tested to verify that it won’t discriminate against certain patients.

Ethical use of AI also means thinking about what AI should and shouldn’t do. If AI is used in schools to grade students, it should not favor some students over others according to their writing style or background. It should focus on what really matters: the actual learning process.

To stay safe and ethical, AI tools should follow clear rules. These rules might come from a company’s internal policy or from government laws. The goal is to protect people and do what is best.

2. Accountability & Oversight

There should always be a person or team in charge of the AI system. If something goes wrong, we need to know who is responsible. For example, if a company uses AI in its hiring process, someone must be able to explain why a particular hiring decision was made.

Oversight concerns monitoring AI performance. Let’s say a city uses AI for traffic lights. A team should monitor it regularly to make sure it’s working well and not causing traffic jams or accidents.

Good oversight also means keeping good records. If a mistake happens, teams can look back and understand what went wrong. This makes it easier to fix the issue and prevent it from happening again.
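To make the record-keeping idea concrete, here is a minimal sketch of a decision log. It assumes a simple append-only file with one JSON entry per line. The `DecisionRecord` fields, the function names, and the file layout are all illustrative choices, not a standard; a real system would pick fields that match its own review process.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One logged AI decision (all field names are illustrative)."""
    system: str          # which AI system produced the decision
    owner: str           # person or team accountable for it
    inputs: dict         # the inputs the system saw
    output: str          # what the system decided
    model_version: str   # which version was running
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(path: str, record: DecisionRecord) -> None:
    """Append the record as one JSON line so earlier entries are never overwritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


def load_records(path: str) -> list[dict]:
    """Read every logged decision back for review."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

With a log like this, a reviewer can call `load_records` after an incident and see which system, owner, inputs, and model version were behind each decision.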

Read The Strategic Necessity of Human Oversight in AI Systems to learn how organizations apply human checks in AI systems. The article outlines the risks of using AI without monitoring and shows why human review is important.

3. Transparency

People should know when AI is being used. For example, if a student interacts with a chatbot tutor, they should be aware, from the very first interaction, that it’s not a human tutor. Hiding this information can compromise human autonomy and agency.

Transparency also means sharing how the AI system contributes to a process or outcome. If an online store uses AI to adjust prices based on who’s shopping, customers should be told what role AI plays in this process (e.g., personalized pricing). This helps people make informed and honest choices, rather than choices shaped by subtle psychological nudging or profiling.

Lastly, transparency builds trust. When people understand how a system works, they are more likely to feel comfortable using it. This is key for adoption in high-impact environments like schools, banks, and hospitals.

4. Explainability (XAI)

AI should be able to explain its decisions in simple language. Imagine a person applies for a loan and is denied. The bank’s AI system should be able to give a clear reason, like “low income” or “high existing debt.”
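One simple way to picture this is a decision function that always returns its reasons along with the outcome. The sketch below is a rule-based stand-in, not how a real credit model works. The thresholds, the function name `review_loan`, and the two reason labels (taken from the example above) are assumptions made for illustration.

```python
# Hypothetical thresholds; a real lender would set these by policy.
MIN_ANNUAL_INCOME = 30_000
MAX_DEBT_TO_INCOME = 0.40


def review_loan(annual_income: float, monthly_debt: float) -> tuple[bool, list[str]]:
    """Return (approved, reasons) so every denial carries plain-language reasons."""
    reasons = []
    if annual_income < MIN_ANNUAL_INCOME:
        reasons.append("low income")

    # Debt-to-income: share of monthly income already committed to debt payments.
    monthly_income = annual_income / 12
    if monthly_debt > 0 and (
        monthly_income <= 0 or monthly_debt / monthly_income > MAX_DEBT_TO_INCOME
    ):
        reasons.append("high existing debt")

    return (len(reasons) == 0, reasons)
```

For instance, `review_loan(48_000, 2_000)` denies the application and returns `["high existing debt"]`, which the bank can pass on to the applicant in plain words.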

This is important in hiring too. If an AI system rejects a job application, the company should be able to clearly articulate the reasons for rejection. Maybe the applicant’s experience didn’t match the job description. Whatever the reason, it must be clear and accessible.

XAI also helps teams improve AI. If a teacher doesn’t understand why an AI is grading student essays a certain way, it’s hard to make the system better. Simple explanations lead to better decisions.

Interpretability goes a step further by making the internal logic and decision pathways of AI systems understandable. While explainability communicates the reasons behind a decision in plain terms, interpretability reveals how the system actually arrived at that decision internally. This is crucial for uncovering hidden biases, debugging unexpected behavior, and ensuring that the system aligns with human values.

5. Fairness & Non-Discrimination

AI should treat everyone fairly. For example, if a company uses AI to screen job candidates, it should not exhibit gender, race, socioeconomic, political, or cultural preferences that perpetuate discriminatory outcomes.

Biased training data is a major cause of unfairness in AI. If a facial recognition system is trained mostly on light-skinned faces, it is likely to perform worse on darker-skinned faces.

To make AI fair, companies must test their systems on many different groups while ensuring they’re training on high-quality, representative data. This helps catch problems early and fix them. Fair AI means better decisions (and outcomes) for everyone.
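Testing across groups can start very simply: compare how often each group receives a positive outcome. The sketch below assumes outcomes are available as `(group, selected)` pairs. The function names and the sample data are made up for illustration, and the 0.8 cutoff mentioned afterward is one common rule of thumb, not a universal standard.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs -> {group: share selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up screening results: 6 of 10 selected in group A, 3 of 10 in group B.
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
```

Here group B is selected half as often as group A. A team using a 0.8 cutoff would flag this system for a closer look at its training data and features. A single ratio does not prove or rule out discrimination, so it works best as an early warning.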

6. Privacy

AI systems must protect private and sensitive information. For example, a health app that uses AI to give fitness tips should not share a user’s weight or medical history without permission.

In schools, if AI is used to monitor student progress, all acquired data should be stored securely and parents should know what data is being collected, how it’s used, and why.

Privacy also means giving people control. Users should be able to delete their data or stop the AI from collecting it. This keeps people in charge of their own information.

7. Security

AI needs strong security to ensure systems remain safe, reliable, and uncorrupted. For example, if AI controls security cameras in a bank, someone hacking into the system could shut down safety alerts.

Companies should implement strong access controls and fail-safes. This includes password protection, multi-factor authentication, and automated alerts for suspicious activity.

Also, AI should be regularly assessed for novel or emerging vulnerabilities. As hackers get smarter, security must improve too. Safe systems protect both people and businesses.

To learn more, visit the Privacy & Security page. The posts there explain why privacy is essential and how to protect personal information when using AI.

8. Robustness

AI should perform reliably across changing or novel environments. If a weather app uses AI to give forecasts, it should still work during storms or power outages.

If an AI system is used in emergency services to dispatch ambulances, it should continue functioning reliably during high-demand periods or unexpected system loads. This means testing it in different situations to make sure it won’t fail.

Robust AI is reliable. People can count on it to do the job without breaking. This is important for tools used every day, like voice assistants or online maps.

9. Resilience

Sometimes systems fail, but AI should bounce back. For example, if an AI in a smart home stops working, it should be able to restart and still remember settings.

Resilient AI also means backup plans. If a hospital’s AI stops tracking patient data, doctors need another way to access it right away.
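A backup plan can also be built into the software itself. The sketch below wraps an AI-backed function so that callers automatically get an answer from a backup source when the primary one fails. Every name here (`with_fallback`, `ai_patient_summary`, `manual_record_lookup`) is hypothetical, and the outage is simulated.

```python
def with_fallback(primary, fallback, on_failure=None):
    """Wrap `primary` so callers get `fallback`'s answer if `primary` raises."""
    def call(*args, **kwargs):
        try:
            return primary(*args, **kwargs)
        except Exception as exc:  # broad on purpose: any failure triggers the backup
            if on_failure is not None:
                on_failure(exc)  # e.g. record the failure so someone can investigate
            return fallback(*args, **kwargs)
    return call


def ai_patient_summary(patient_id):
    raise ConnectionError("AI service unavailable")  # simulate an outage


def manual_record_lookup(patient_id):
    return f"record for {patient_id} from the backup system"


failures = []
get_summary = with_fallback(ai_patient_summary, manual_record_lookup, failures.append)
print(get_summary("patient-001"))
```

The caller still gets the patient record, and the captured error in `failures` gives the team something to investigate once the immediate need is met.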

Resilience helps in emergencies too. If AI is used in emergency response, like fire detection or flood alerts, it must keep going even during chaos.

Resilience also includes protection against adversarial attacks (such as attempts to manipulate or crash the system). AI should be designed to detect, withstand, and recover from these types of disruptions without compromising safety or integrity.

10. Valid & Reliable

Before AI is put to use, it should be tested carefully. This is like checking a bridge before people drive over it. If a factory uses AI to sort products, the system should be tested to avoid costly mistakes.

Testing means trying the AI in different cases to see what works and what doesn’t. A school might test an AI homework helper with students at different grade levels to make sure it’s accurate.

As highlighted by NIST’s work on AI Test, Evaluation, Validation, and Verification (TEVV), the process of making AI valid and reliable is ongoing. AI should be checked often to catch any new problems. This helps keep the system strong, fair, and helpful.
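As a rough illustration of what checking often can look like, the sketch below compares a system’s recent accuracy against the accuracy measured when it was first approved. The function names, the sample data, and the 5-point tolerance are assumptions for this example. This is not a procedure taken from NIST, and real monitoring would usually track more than one metric.

```python
def accuracy(pairs):
    """pairs: list of (predicted, actual) -> fraction predicted correctly."""
    if not pairs:
        raise ValueError("need at least one labeled example")
    return sum(predicted == actual for predicted, actual in pairs) / len(pairs)


def needs_review(baseline_accuracy, recent_pairs, max_drop=0.05):
    """True when recent accuracy has fallen more than `max_drop` below baseline."""
    return baseline_accuracy - accuracy(recent_pairs) > max_drop


# Made-up recent results: 8 correct predictions out of 10.
recent = [("ok", "ok")] * 8 + [("ok", "defect")] * 2
flag = needs_review(baseline_accuracy=0.90, recent_pairs=recent)
```

In this example the system was approved at 90% accuracy but is now at 80%, so `flag` is `True` and the team would re-test before continuing to rely on it.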

Conclusion

Now, knowing the RAI principles is great, but what really counts is what you do with them. That’s where most teams get stuck. You need tools to check if your AI is doing the right thing. You need people who know what questions to ask. And you need a clear plan if something doesn’t work the way it should.

Here’s one more thing: every AI system is different. If you’re using AI to write emails, that’s one thing. If you’re using it to make hiring or medical decisions, that’s something else. The higher the stakes, the more careful you need to be. So, how you apply these principles has to match your situation.

Lumenova AI helps with that. Request a demo today to see how our platform helps you keep your AI systems clear, safe, and aligned with your goals. We’ll walk you through how to stay in control, reduce risk, and build the kind of AI your team and your customers can trust.

Frequently Asked Questions

The 10 RAI principles (Safety & Ethics, Accountability, Transparency, Explainability, Fairness, Privacy, Security, Robustness, Resilience, and Valid & Reliable) provide a framework to build AI systems that are trustworthy, compliant, and risk-aware. These principles help businesses reduce legal exposure and boost user trust.

The fairness principle ensures AI systems are tested against diverse demographic data to avoid discrimination. It emphasizes equal treatment and demands high-quality, representative datasets, preventing biased outcomes in hiring, lending, or healthcare applications.

Validity and reliability ensure that AI systems consistently produce accurate, dependable results that reflect real-world conditions and intended use. In RAI, this means decisions and outcomes are trustworthy, repeatable, and fair (helping organizations avoid errors, build user confidence, and meet regulatory standards).

Explainability (XAI) allows stakeholders to understand AI decisions in plain language, which is vital for accountability and trust. Whether rejecting a loan or grading an essay, users must know why the decision was made, aligning with ethical and legal standards.

Businesses can implement RAI principles by assigning clear oversight roles, regularly testing AI systems, ensuring data privacy, and using platforms like Lumenova AI to automate governance, auditability, and risk management aligned with RAI standards.