
Artificial intelligence promises efficiency, innovation, and competitive advantage. But many executives wonder: Will investing in responsible AI slow us down or drain resources? The business reality is clear. Far from being a drag on performance, a responsible AI framework can deliver measurable value. By reducing risks, improving trust, and unlocking efficiencies, it often pays for itself many times over.

The Cost of Non-Compliance

Compliance with regulations such as the EU AI Act, GDPR, and sector-specific rules is not optional. Non-compliance can result in fines worth millions and damage to brand reputation. A responsible AI framework helps companies stay ahead of evolving requirements, protecting both financial and brand equity. In regulated industries such as finance, healthcare, and insurance, avoiding penalties and litigation alone represents significant cost savings.

Research shows that organizations with proactive compliance see fewer last-minute audits and a smoother regulatory process, avoiding costly penalties and legal fees. Waiting to fix issues post-deployment is at least 30% more expensive than building compliance upfront.

We explored this in detail in our article on AI governance frameworks, where proactive compliance measures proved to be a cornerstone for reducing legal and operational risks. Companies that build compliance into their AI strategy from the start often discover it costs far less than trying to fix issues later.

Efficiency Through Structure

Responsible AI brings structure to the way systems are designed, tested, and deployed, minimizing wasted effort on failed initiatives and avoiding costly rework. By setting clear governance standards, organizations streamline decision-making across technical, legal, and business teams, reducing delays and accelerating time to market.

Investing early in AI governance also lowers the costs of compliance monitoring by automating checks that would otherwise require manual oversight, freeing up resources for innovation. At the same time, it strengthens security by preventing threats like model poisoning – when attackers corrupt training data – and adversarial attacks that manipulate outputs. Left unchecked, both can cause significant financial and reputational harm.
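As a rough illustration of what "automating checks" can mean in practice, the sketch below runs a few governance checks against a model inventory record. The `ModelRecord` fields, the check names, and the 180-day review window are assumptions made for this example, not requirements taken from any regulation or product.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Assumed policy threshold for this sketch: bias reviews older than this are stale.
MAX_REVIEW_AGE_DAYS = 180


@dataclass
class ModelRecord:
    """Hypothetical inventory entry for one deployed model."""
    name: str
    owner: str = ""
    risk_tier: str = ""  # e.g. "high", "limited", "minimal" (illustrative labels)
    last_bias_review: Optional[date] = None
    training_data_documented: bool = False


def failed_checks(record: ModelRecord, today: date) -> List[str]:
    """Return a human-readable list of the governance checks this record fails."""
    failures = []
    if not record.owner:
        failures.append("missing owner")
    if not record.risk_tier:
        failures.append("missing risk tier")
    if not record.training_data_documented:
        failures.append("training data undocumented")
    if record.last_bias_review is None:
        failures.append("no bias review on file")
    elif (today - record.last_bias_review).days > MAX_REVIEW_AGE_DAYS:
        failures.append("bias review out of date")
    return failures


record = ModelRecord(
    name="credit-scorer",
    owner="risk-team",
    risk_tier="high",
    last_bias_review=date(2024, 1, 1),
    training_data_documented=True,
)
print(failed_checks(record, today=date(2025, 1, 1)))
```

Run on a schedule across the whole model inventory, a check like this surfaces gaps without someone manually auditing each record.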

As highlighted in our article on preventing AI data breaches, early detection and strong guardrails greatly reduce the likelihood of poisoning, manipulation, or regulatory exposure. Embedding these disciplines ensures that resources are allocated more effectively while safeguarding sensitive assets.

Building Customer Trust

Trust is a critical economic driver. Customers, partners, and investors prefer companies that can prove their AI systems are safe, explainable, and fair. Explainable AI (XAI) provides the transparency needed to build confidence. It satisfies regulators and reassures customers who want to understand how decisions are made, reducing friction and supporting long-term loyalty.

According to a 2025 IDC report, more than 75% of organizations adopting responsible AI practices report improved customer trust and brand reputation. In sectors like finance, explainable credit scoring models help comply with regulations and foster customer acceptance of AI-driven decisions.
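To make the credit scoring example concrete, here is a minimal sketch of one simple form of explainability: a linear score whose result can be broken down into per-feature contributions. The feature names, weights, and base score are invented for illustration and do not represent a real scoring model.

```python
# Hypothetical weights for an illustrative linear credit score.
WEIGHTS = {
    "income_k": 0.5,           # points per 1,000 of annual income
    "years_employed": 2.0,     # points per year in current employment
    "missed_payments": -15.0,  # points per missed payment
}
BASE_SCORE = 600.0  # assumed starting score


def explain_score(applicant):
    """Return the score and each feature's contribution, largest impact first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASE_SCORE + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


applicant = {"income_k": 60, "years_employed": 5, "missed_payments": 3}
score, reasons = explain_score(applicant)
print(score)
for feature, points in reasons:
    print(f"{feature}: {points:+.1f}")
```

Because every point in the score traces back to a named input, the same breakdown can support both a customer-facing explanation and a regulator-facing audit trail. More complex models generally need dedicated attribution methods to produce a comparable breakdown.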

Long-Term Value Creation

The upfront investment in responsible AI not only reduces risks but also boosts operational efficiency and sustainable growth. Organizations that embed responsibility from the start avoid costly crises and rushed compliance efforts, freeing them to innovate confidently.

A BCG study found that companies integrating responsible AI capabilities before scaling AI deployment are 28% less likely to face costly failures, demonstrating how responsibility strengthens business resilience. The insurance and finance industries are already benefiting by detecting fraud more effectively and improving risk assessments while maintaining stakeholder trust.

What Makes a Good Responsible AI Framework?

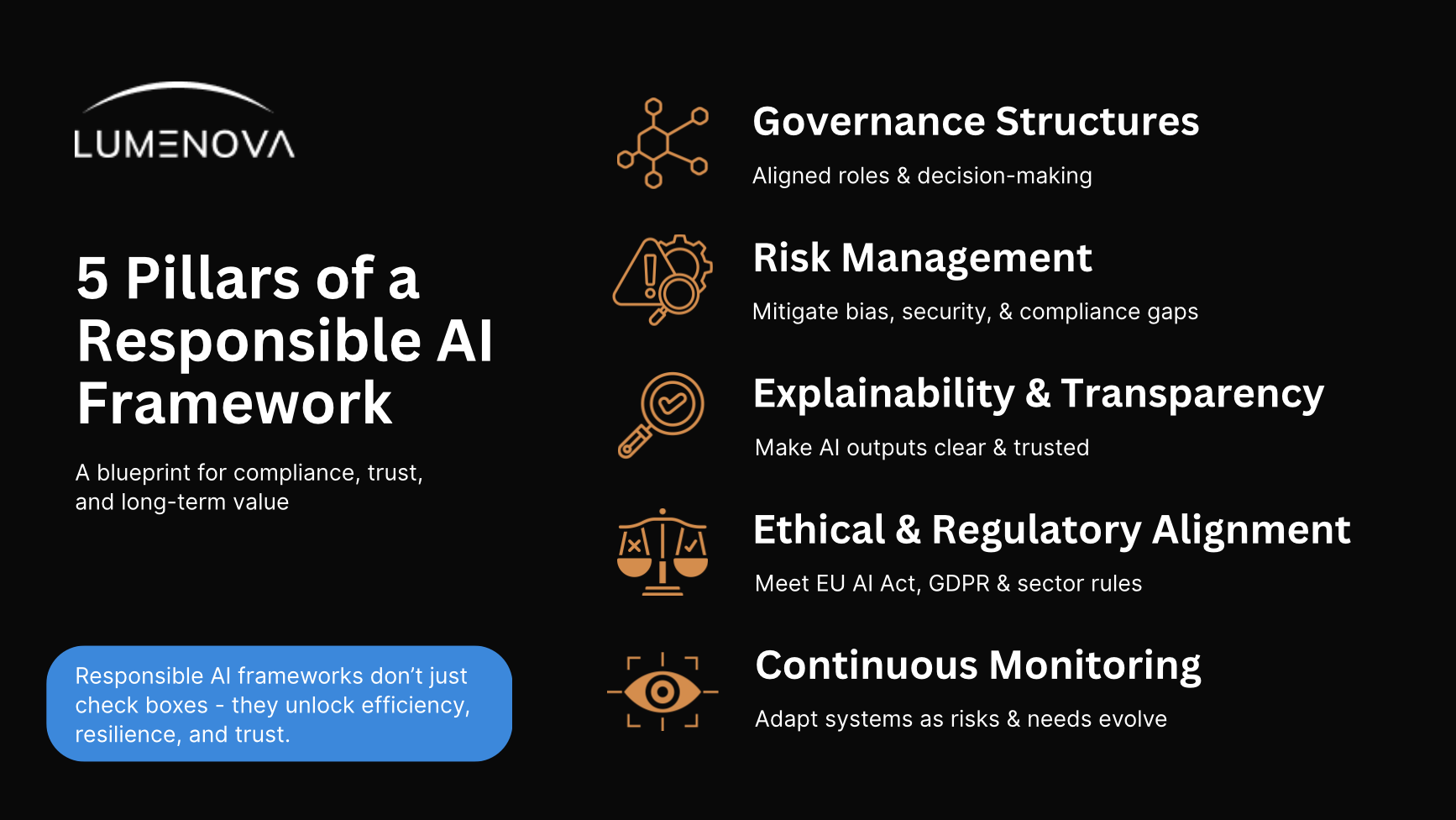

A strong responsible AI framework goes beyond compliance. It creates a foundation for governance, efficiency, and trust. The most effective frameworks typically include:

- Clear Governance Structures: Defined roles and responsibilities across technical, legal, and business teams, ensuring faster, more aligned decision-making.

- Risk Management Protocols: Processes for identifying and mitigating risks like bias, security vulnerabilities, and compliance gaps.

- Explainability & Transparency: Practices and tools that make AI outputs understandable, supporting both customer confidence and regulatory requirements.

- Ethical & Regulatory Alignment: Built-in safeguards to meet evolving standards such as the EU AI Act, GDPR, and industry-specific regulations.

- Continuous Monitoring & Improvement: Ongoing evaluation of systems, adapting as new risks and business needs emerge.

These elements ensure AI systems are not only compliant but also resilient, trustworthy, and adaptable, turning responsibility into a long-term business advantage.
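Continuous monitoring, the last element in the list above, can be sketched as a drift check that compares the distribution of a model input or score at training time with what is seen in production. The example uses the population stability index (PSI), a common drift measure; the bucket proportions and the 0.1 review threshold are assumptions for illustration, and thresholds in practice are a policy choice.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two lists of bucket proportions (each list should sum to 1)."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of buckets")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp to a small positive value so empty buckets do not break the log.
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def needs_review(expected, actual, threshold=0.1):
    """Flag the model for review when drift exceeds the assumed threshold."""
    return population_stability_index(expected, actual) > threshold


baseline = [0.5, 0.5]    # illustrative share of applicants per score bucket at training time
production = [0.7, 0.3]  # illustrative share observed in production
print(needs_review(baseline, production))
```

A flagged result does not mean the model is wrong. It is a signal that the inputs have shifted enough to warrant a human look.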

Taking Action: Next Steps for Organizations

- Evaluate your current AI governance maturity and risk exposure to identify gaps and opportunities.

- Establish cross-functional teams spanning technology, legal, compliance, and business functions to integrate responsible AI practices.

- Pilot explainability initiatives to enhance transparency and build stakeholder trust.

- Embed responsible AI principles and governance early in AI project lifecycles to unlock innovation while controlling risks.

Final Thoughts

The economics of responsible AI are compelling. It reduces exposure to fines, litigation, and security risks; enhances efficiency through governance; builds lasting customer trust; and positions organizations for long-term growth. Embracing responsible AI means investing in resilience, agility, and competitive strength.

Ready to unlock the full economic potential of responsible AI for your organization?

Book a personalized demo with Lumenova to see how our responsible AI framework can drive compliance, efficiency, and trust, helping you innovate confidently while managing risk. Or schedule a free consultation with one of our AI governance experts to discuss your unique challenges and build a roadmap for lasting business value.