September 24, 2025

Pros and Cons of Implementing the NIST AI Risk Management Framework

Everyone’s eager to boast about their shiny new AI deployments, the two letters dominating every boardroom slide. But fewer want to face the messier reality: models drift, bias spikes, regulators come knocking. According to the National Institute of Standards and Technology (NIST), that is exactly where risk lives. It is why NIST built the AI Risk Management Framework (AI RMF), a voluntary framework designed to help organizations identify, assess, and mitigate AI-related risks. Like any governance model, adopting it brings both advantages and challenges that executives must weigh carefully.

The Benefits of NIST AI RMF

Stronger Risk Mitigation

The NIST AI RMF provides a structured way to evaluate potential AI harms before they occur. By following its four key functions (govern, map, measure, and manage), organizations can proactively identify bias, security gaps, and unintended consequences. This results in fewer compliance surprises, stronger ethical safeguards, and reduced exposure to reputational or financial damage. It also helps teams catch problems early, when they are less costly and easier to fix.
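One way to make the four functions concrete is a simple risk register that tags each recorded activity with the function it supports. The sketch below is illustrative only: the `RiskEntry` fields and the `uncovered_functions` helper are assumptions made for this example, not something the framework defines.

```python
from dataclasses import dataclass
from enum import Enum


class Function(Enum):
    """The four core functions named by the NIST AI RMF."""
    GOVERN = "govern"
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"


@dataclass
class RiskEntry:
    # Hypothetical record layout; adapt the fields to your own register.
    system: str
    function: Function
    description: str
    owner: str


def uncovered_functions(entries, system):
    """Return the functions with no recorded activity for the given system."""
    covered = {e.function for e in entries if e.system == system}
    return [f for f in Function if f not in covered]


register = [
    RiskEntry("loan model", Function.GOVERN, "AI use policy approved", "compliance"),
    RiskEntry("loan model", Function.MAP, "Affected applicant groups identified", "product"),
]

# Functions that still need attention for this system.
gaps = uncovered_functions(register, "loan model")
```

A check like this does not say whether the recorded work is adequate. It only flags functions where nothing has been recorded yet, which is often a useful first signal.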

Alignment with Global Standards

Although the framework was developed in the US, its concepts map well onto international requirements such as the EU AI Act and ISO/IEC standards (for example, ISO/IEC 42001 for AI management systems). For multinational companies, implementing the NIST AI RMF can help bridge regulatory regimes, making it easier to demonstrate accountability to diverse stakeholders.

Crucially, the framework does not just meet today’s compliance needs; it encourages organizations to take a proactive stance on AI risk management and governance. By embedding monitoring, evaluation, and transparency into everyday practices, companies can anticipate issues before they escalate into regulatory breaches or reputational harm.

This proactive approach not only reduces compliance complexity but also positions organizations as global leaders in responsible AI (RAI) adoption. In a business environment where regulators, investors, and customers increasingly demand proof of trustworthy AI, the NIST AI RMF becomes both a defensive shield and a competitive advantage.

Enhanced Trust and Transparency

Clear documentation, testing protocols, and monitoring requirements help organizations communicate more openly about how their AI systems function. The NIST AI RMF formalizes these practices, providing a roadmap for demonstrating fairness, reliability, and accountability. This visibility is increasingly demanded by customers, investors, and regulators alike, particularly in sectors like healthcare and finance, where decisions affect livelihoods. Transparency is essential to building trust.

Stronger Organizational Culture

One often overlooked benefit of the framework is cultural. Implementing the NIST AI RMF requires collaboration between technical, legal, compliance, and business teams. This cross-functional approach fosters a culture of responsibility and continuous improvement. Over time, organizations that adopt the framework report stronger internal alignment and clearer decision-making processes.

Typical Challenges and Pitfalls in Implementation

- Mid-Sized Tech Firm Documentation Hurdles: Fast-moving companies may struggle to document bias assessments and risk controls adequately, causing friction between engineering and compliance teams.

- Healthcare Data Privacy Complexities: Hospitals that must maintain patient confidentiality may find that balancing it against transparency demands delays AI system rollouts, largely because of consent and audit trail requirements.

- Finance Resource Constraints: Smaller banks lacking in-house AI risk expertise may face stalled efforts due to costly consultancy needs and conflicting priorities between compliance and innovation teams.

Deepening Resource and Expertise Insights

Effective adoption requires diverse resources:

- Personnel & Expertise: Roles such as AI ethicists, risk managers, compliance officers, and data scientists trained in AI risk management, safety, and fairness are critical.

- Training & Education: Continuous learning on AI governance standards and internal protocols is essential.

- Consultancy: Smaller firms often engage specialists for gap analysis and adoption strategy.

- Technology Tools: Risk mapping, documentation, and monitoring solutions reduce manual effort and strengthen ongoing evaluations.

Budgets and timeframes can vary, and initial adoption can take several months, with ongoing staffing needed for sustained governance.

Sector-Specific Applications

The risks and regulatory pressures associated with AI vary significantly across industries, shaped by the nature of the data involved, operational environment and deployment scope, the potential impact of AI decisions, and sector-specific compliance requirements. Understanding how the NIST AI RMF applies within different contexts helps organizations target their governance efforts more effectively. The following highlights illustrate how various sectors can tailor the framework to address their unique challenges and operational realities.

- Healthcare: Patient safety and privacy dominate, with regulatory mandates like HIPAA governing patient data and clinical use calling for rigorous validation and explainability measures.

- Finance & Insurance: Fraud detection, credit risk, and compliance with financial regulations demand strong transparency and audit capabilities.

- Consumer Technology: Companies balance rapid innovation cycles against user privacy and emerging regulatory pressures.

- Multinational Cross-Sector: Harmonizing compliance across regions and standards demands flexible, robust frameworks.

Innovation and Flexibility

Balancing governance rigor with innovation agility involves:

- Phased Implementation: Focus first on high-risk AI systems, then gradually expand controls.

- Risk-Tiered Oversight: Adjust governance depth based on AI system complexity and impact.

- Cross-Functional Dialogue: Collaborative teams ensure controls evolve without hampering development speed.

- Tailored Framework Adoption: Avoid strict checklists; adapt the framework to fit business needs and risk profiles.
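Phased implementation and risk-tiered oversight can be sketched as a small scoring rule. This is a minimal illustration under stated assumptions: the tier names, the 1 to 5 scales, the thresholds, and the control lists are all hypothetical, and the NIST AI RMF does not prescribe any of them.

```python
from dataclasses import dataclass

# Hypothetical controls per tier, for illustration only.
TIER_CONTROLS = {
    "high": ["bias assessment", "human review", "continuous monitoring", "audit trail"],
    "medium": ["bias assessment", "periodic monitoring"],
    "low": ["basic documentation"],
}


@dataclass
class AISystem:
    name: str
    impact: int      # assumed scale: 1 (minor) to 5 (affects health, finances, or rights)
    complexity: int  # assumed scale: 1 (simple rules) to 5 (opaque, frequently retrained)


def risk_tier(system: AISystem) -> str:
    """Assign a tier from impact and complexity (assumed thresholds)."""
    score = system.impact * system.complexity
    if system.impact >= 4 or score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


def rollout_order(systems):
    """Phase the rollout: highest tier first, then by descending score."""
    rank = {"high": 0, "medium": 1, "low": 2}
    return sorted(systems, key=lambda s: (rank[risk_tier(s)], -s.impact * s.complexity))


systems = [
    AISystem("chat summarizer", impact=2, complexity=2),
    AISystem("credit scoring", impact=5, complexity=3),
    AISystem("churn model", impact=2, complexity=4),
]
ordered_names = [s.name for s in rollout_order(systems)]
```

Here the credit scoring system lands in the high tier because of its impact alone, so it gets the full control set first, while the chat summarizer only needs basic documentation. The actual thresholds should come out of cross-functional discussion rather than from a fixed formula.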

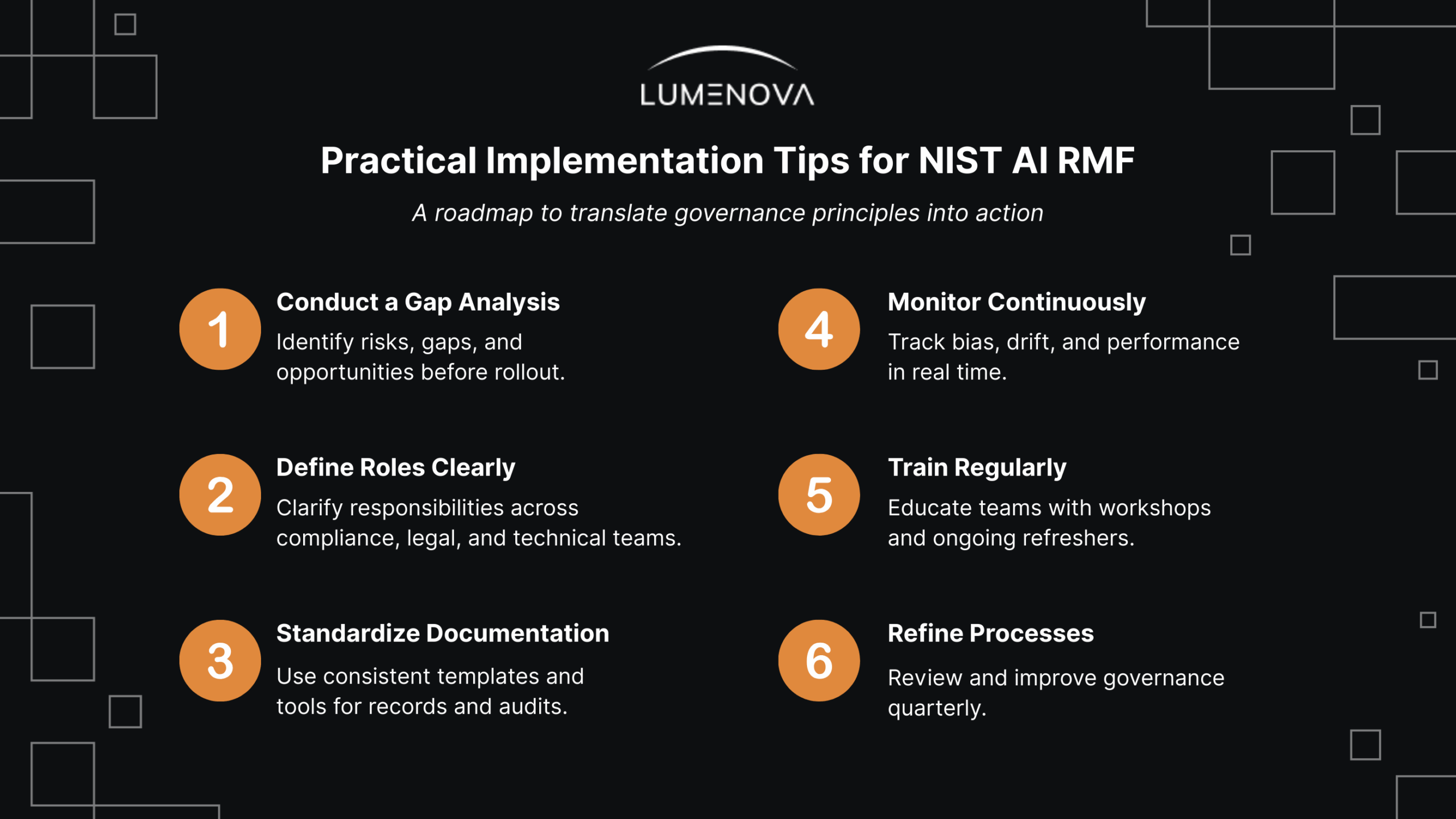

Practical Implementation Tips

While the benefits of adopting the NIST AI Risk Management Framework are clear, organizations frequently seek concrete guidance on how to begin the process and effectively integrate the framework into their existing workflows. Implementing the framework can seem complex, but breaking it down into manageable, actionable steps helps ensure steady progress, early wins, and sustained success.

The following practical tips outline a roadmap for organizations at various stages of adoption, helping teams translate the framework’s principles into day-to-day practices:

- Conduct a thorough assessment and gap analysis.

- Clearly define roles and responsibilities across departments.

- Standardize documentation using templates and tools.

- Establish continuous monitoring for bias and performance drift.

- Provide regular training and workshops.

- Review and refine processes regularly.
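The monitoring tip above can be sketched with two simple checks: a population stability index (PSI) for drift in model scores, and a selection rate gap between groups as a basic bias signal. These metrics are a choice made for this example and are not mandated by the framework. The function names and the alert thresholds (0.2 for PSI, a common rule of thumb, and 0.1 for the gap) are assumptions to tune for each use case.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of model scores."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def selection_rate_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred else 0)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def monitoring_alerts(baseline_scores, recent_scores, predictions, groups,
                      psi_threshold=0.2, gap_threshold=0.1):
    """Return the names of checks that exceed their (assumed) thresholds."""
    alerts = []
    if population_stability_index(baseline_scores, recent_scores) > psi_threshold:
        alerts.append("score drift")
    if selection_rate_gap(predictions, groups) > gap_threshold:
        alerts.append("selection rate gap")
    return alerts
```

Running `monitoring_alerts` on a schedule against a fixed baseline gives teams an early signal to investigate. An alert is a prompt for review, not proof of harm, and a clean result does not rule out other forms of bias.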

Future Trends and Regulatory Outlook

As AI technology grows more sophisticated and spreads into new sectors, the rules and structures that govern it will evolve. Staying ahead of these changes is imperative for organizations aiming to remain compliant, competitive, and trusted by stakeholders. Understanding emerging regulatory trends and anticipating future demands can help businesses not only adapt but also turn AI governance into a strategic advantage.

Below, we highlight key developments shaping the future of AI risk management and the regulatory environment.

- Explainability and transparency demands are intensifying from regulators and consumers.

- AI risk management will increasingly integrate with cybersecurity standards.

- Ethical AI practices and accountability will shape investment and public trust.

- The NIST AI RMF will adapt to emerging AI technologies and risks, making early adoption valuable for resilience.

The Drawbacks of NIST AI RMF

Potential Efficiency Losses

The primary challenge is the additional time and resources required. Formalizing governance processes, documenting risk controls, and running ongoing evaluations can slow product development cycles and delay the release of new features. In the long run, however, AI risk management efforts can support efficiency: guardrails protect organizations, and they also help maintain model performance over time.

Resource and Expertise Demands

Implementing the framework effectively also calls for specialized expertise in AI governance, risk management, and compliance. For larger organizations, these resources may be more accessible. Smaller companies, however, often face steep learning curves and may need to rely on external consultants, increasing costs and adding friction to adoption. At Lumenova, each of our clients gains access to a Forward Deploy Team to help meet these needs.

Incomplete Market Adoption

Another challenge is that the NIST AI RMF is voluntary. Not every organization will choose to adopt it, and some competitors may prioritize speed to market over structured governance. This uneven adoption can create disparities across the industry: companies committed to governance may face higher costs and slower timelines than peers that skip it. To offset these disparities, many forward-thinking organizations position their commitment to governance as a point of differentiation from less cautious competitors.

Risk of Over-Complexity

Finally, there is a risk of making the framework more burdensome than necessary. Attempting to apply the RMF in full, without tailoring it to the specific context of a company’s AI use cases, can result in unnecessary layers of bureaucracy.

Success depends on balancing rigor with practicality.

Balancing Risks and Rewards

The NIST AI RMF isn’t a silver bullet but a valuable tool when thoughtfully applied. It helps reduce risks, aligns with regulations, and builds trust, driving benefits that outweigh short-term efficiency costs. Tailoring the framework to specific organizational contexts enables safer, more sustainable AI innovation. Companies adhering to the NIST AI RMF are positioning themselves for resilience, credibility, and future success in AI.

Lumenova’s RAI Platform helps organizations evaluate, implement, and operationalize AI governance frameworks like the NIST AI RMF. Our work spans industries like healthcare, finance, and technology, where the balance between compliance and innovation is especially critical. We recognize that every organization has its own governance priorities, risk profile, and pace of adoption. That’s why we provide flexible pathways, from helping you integrate the NIST AI RMF into existing processes to creating custom governance structures designed around your specific use cases.

Schedule a consultation with Lumenova AI to explore how your company can adopt the NIST AI RMF effectively.