August 12, 2025

AI Risk Assessment Best Practices: Using the NIST AI RMF for Compliance

AI is shaping loan approvals, automating triage, and deciding which customers get what support. Although 93% of organizations use AI, only 7% have embedded governance frameworks, just 4% feel prepared to support AI at scale, and oversight is often fragmented. Nearly 75% of executives emphasize the need for human oversight to manage legal and reputational risk.

Yet as AI accelerates, governance lags behind, and this lag isn’t theoretical. It’s where operational, reputational, and regulatory risk begins.

The NIST AI Risk Management Framework (AI RMF) offers a solution: a structured, practical approach to identifying, measuring, managing, and governing AI risk across diverse systems and use cases. It provides a blueprint for managing AI risks and also highlights the architecture required to make responsible AI (RAI) operational.

What Is the NIST AI RMF?

The NIST AI RMF is a comprehensive guide developed by the National Institute of Standards and Technology to help organizations identify, evaluate, and mitigate risks associated with AI systems. Rather than being a checklist, the AI RMF outlines a flexible, principles-based structure that integrates into every stage of the AI lifecycle.

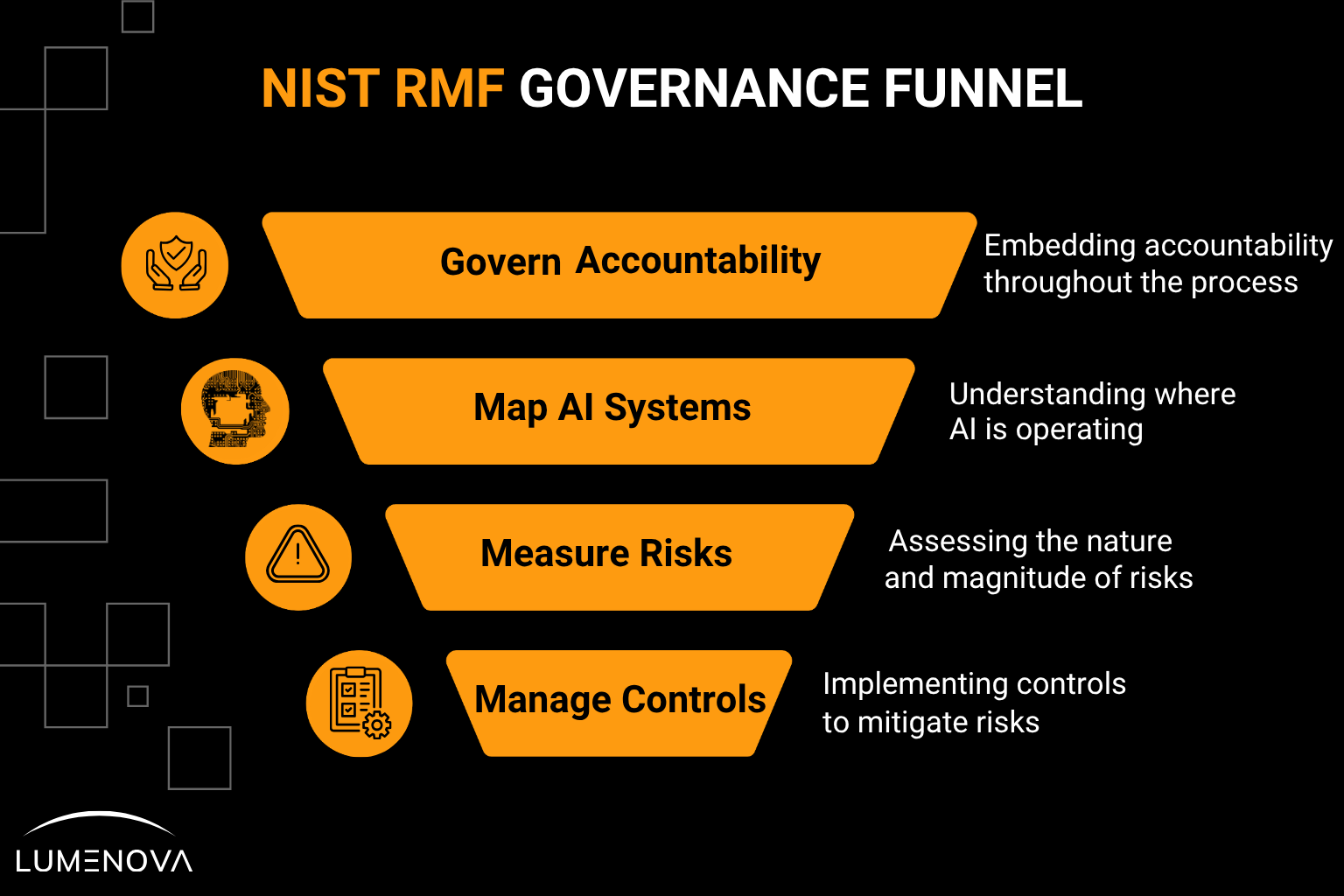

It focuses on four core functions (Govern, Map, Measure, and Manage), which we’ll explore below.

Why AI Risk Has Become a Leadership Imperative

AI risk isn’t only a side effect of exponential innovation and irresponsible human behavior. It’s often also a function of scale without oversight.

Systems introduced with good intent can later introduce serious harm: discrimination, data leakage, manipulation, or opacity in critical decisions. These aren’t isolated events but systemic breakdowns.

The AI RMF gives executive teams a shared language to align on risk tolerance, accountability, and decision rights across legal, risk, product, and engineering teams. It elevates AI governance from a compliance task to a board-level responsibility (one that protects trust and enables resilience).

To better understand how executive leaders can shape responsible AI strategies, explore Explainable AI: What Every Executive Needs to Know, a guide to aligning transparency with business accountability.

The Four Core Functions: What the NIST AI RMF Actually Asks You to Do

The AI RMF outlines four core functions that, when embedded across the AI lifecycle, create a durable governance system:

1. Govern: Is Accountability Embedded (Not Bolted On)?

Too many organizations think of governance as something to address at the end. But the AI RMF positions it as the first step, one that initiates a continuous thread through the entire AI lifecycle.

That means:

- Defining clear ownership for every AI system

- Establishing cross-functional review structures (e.g. an AI ethics committee)

- Aligning on risk tolerance and mitigation thresholds

- Running recurring evaluations of risk metrics and governance health

- Training teams across functions in risk fluency, not just compliance vocabulary

Governance is how organizations maintain continuity, accountability, and alignment as AI systems evolve. It ensures decisions remain consistent with mission, values, and changing market demands.

2. Map: Do You Know Where AI Is Already Operating?

Most organizations don’t have a full inventory of AI systems, especially when AI capabilities are embedded in third-party tools and vendor platforms.

Mapping forces visibility:

- Which systems are using AI (internally or externally)?

- What kind of data do they ingest, leverage, and access (PII, PHI, financial records)?

- What decisions do they influence, and how consequential are their outcomes?

- What regulations might apply (e.g. GDPR, HIPAA, EU AI Act)?

- What intended use cases have been identified?

To see how leading fintechs gain visibility into embedded AI systems and third-party risk, read 5 Must-Have Features in a Fintech AI Risk Management Platform.

3. Measure: What’s the Nature and Magnitude of Risk?

Measurement is where governance matures, from awareness to insight.

Once systems are mapped, organizations must assess how risk manifests:

- Are models exhibiting bias toward protected classes?

- Can you explain how a decision was made, and by what logic?

- Could an adversary manipulate the system’s inputs or outputs?

- How would a stakeholder be affected if the model underperformed?

Poorly performing models, unexplainable outcomes, or unmanaged data flows are signs of weak accountability structures.
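One way to make the first question above measurable is to compare favorable-outcome rates across groups. The sketch below is illustrative only: the function names, group labels, and sample decisions are hypothetical, and the 0.8 review threshold is an assumed rule of thumb rather than anything the AI RMF prescribes.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the model produced the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical decisions: (group label, was the application approved?)
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 42 + [("group_b", False)] * 58
)

ratio = disparate_impact_ratio(decisions)
flagged = ratio < 0.8  # assumed review threshold, not an AI RMF requirement
```

A single ratio is a screening signal, not a verdict. A flagged system would still need the explainability and stakeholder-impact review described in this section.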

Curious how organizations evaluate model risk and bias in production? For a breakdown of real-world risk measurement tools and techniques, dive into two articles: "AI in Finance: The Promise and Risks of RAG" and "CAG vs RAG: Which One Drives Better AI Performance?"

4. Manage: What Controls Actually Mitigate That Risk?

Controls aren’t one-size-fits-all, and not every system needs heavy governance. But every AI system needs some level of responsible intervention.

Examples of risk mitigation mechanisms include:

- Human-in-the-loop for high-impact decisions

- Access controls for sensitive training data

- Model documentation (model cards, datasheets, lineage maps)

- Live monitoring for model drift or performance degradation

- User-facing explainability tools to support transparency

- XAI methods to improve model interpretability and support auditability across internal and regulatory reviews

The value here is alignment, ensuring your risk controls are aligned with your use cases, organizational values and objectives, model complexity, and compliance requirements.
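As a rough illustration of the first control in the list, human-in-the-loop review can be expressed as a routing rule that sits between the model and the final decision. Everything here is an assumption made for the sketch: the `Decision` fields, the tier labels, and the confidence thresholds are hypothetical policy values, not part of the AI RMF.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    system: str
    risk_tier: str     # "low", "medium", or "high" (hypothetical labels)
    confidence: float  # model's confidence in its own output, 0.0 to 1.0


# Assumed policy values; each organization would set its own.
MIN_CONFIDENCE = {"low": 0.0, "medium": 0.75}


def route(decision: Decision) -> str:
    """Return "auto" or "human_review" for a single model decision."""
    if decision.risk_tier == "high":
        return "human_review"  # high-impact decisions always get a reviewer
    threshold = MIN_CONFIDENCE.get(decision.risk_tier)
    if threshold is None:
        return "human_review"  # unknown tier: fail safe
    return "auto" if decision.confidence >= threshold else "human_review"


print(route(Decision("loan_approval", "high", 0.99)))
print(route(Decision("support_triage", "medium", 0.60)))
print(route(Decision("faq_bot", "low", 0.20)))
```

The design choice worth noting is the fail-safe default: a system with a missing or unrecognized tier is sent to a person rather than allowed through.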

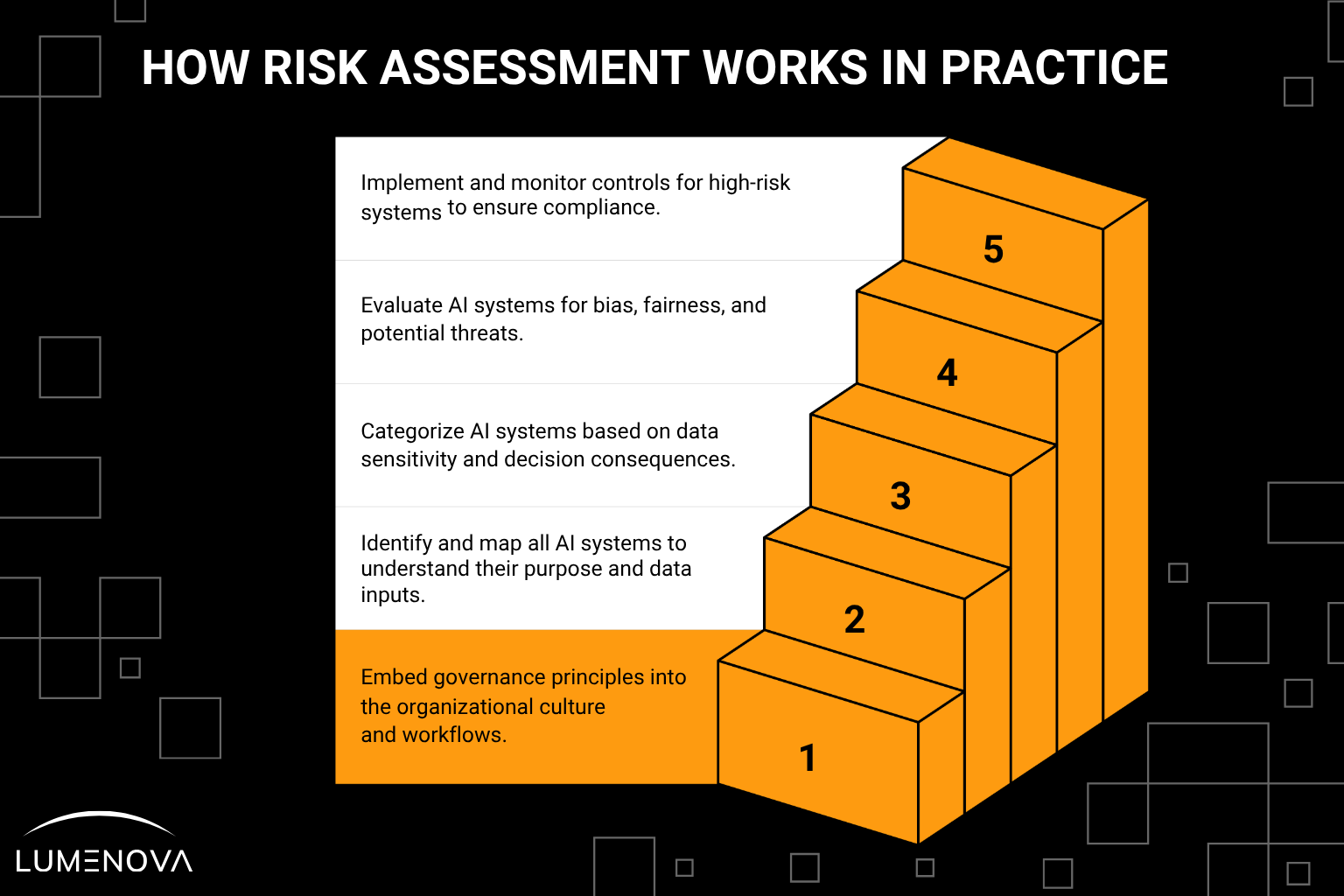

How Risk Assessment Works in Practice: A Step-by-Step Framework

Here’s a practical blueprint that reflects how the most advanced organizations turn AI governance from theory into operational muscle.

Step 1: Operationalize Governance

- Establish quarterly risk reviews

- Embed governance checkpoints throughout the model lifecycle

- Empower AI stewards to escalate risk concerns

- Train your teams in governance principles, not just process steps

Why it matters: Governance must be cultural AND procedural. A compliant team might miss the point. A fluent team won’t.

Step 2: Inventory All AI

Identify every AI system (proprietary, vendor-supplied, or embedded in platforms). Map them to their intended purpose and use, key stakeholders, data characteristics and requirements, and the business functions and/or decisions supported.

Why it matters: You can’t govern what you can’t see. Hidden systems tend to cause the biggest surprises.
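An inventory can start as a simple structured record per system plus a check for missing fields. The sketch below is a minimal illustration: the `AISystemRecord` fields, the `inventory_gaps` helper, and the sample entries are hypothetical, chosen to mirror the attributes listed in this step.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    name: str
    source: str        # "proprietary", "vendor", or "embedded"
    intended_use: str
    owner: str = ""    # accountable person or team
    data_categories: list[str] = field(default_factory=list)   # e.g. "PII"
    decisions_supported: list[str] = field(default_factory=list)


def inventory_gaps(records):
    """Map each system name to the inventory fields still left empty."""
    gaps = {}
    for record in records:
        missing = [
            name
            for name in ("owner", "data_categories", "decisions_supported")
            if not getattr(record, name)
        ]
        if missing:
            gaps[record.name] = missing
    return gaps


# Hypothetical inventory entries.
inventory = [
    AISystemRecord(
        name="credit_scoring",
        source="proprietary",
        intended_use="loan approvals",
        owner="risk-team",
        data_categories=["PII", "financial records"],
        decisions_supported=["approve or decline loan"],
    ),
    AISystemRecord(
        name="support_chatbot",
        source="vendor",
        intended_use="customer support triage",
    ),
]

gaps = inventory_gaps(inventory)
```

Here the vendor chatbot surfaces with no owner, no data categories, and no documented decisions, which is the kind of hidden system this step is meant to catch.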

Step 3: Classify and Tier by Risk

Classify systems based on:

- Data privacy and sensitivity

- Decision consequences

- Regulatory and stakeholder impacts

- Model complexity

- Maintenance and remediation costs

- Safety and security vulnerabilities

- Objective and value alignment

- Explainability and transparency

Use this to assign risk tiers (low, medium, high) and calibrate your controls accordingly.

Why it matters: Not all AI systems are equal, and treating them like they are wastes time, resources, and trust.
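To show how tiering might be made repeatable, the sketch below scores each criterion from 1 (low concern) to 3 (high concern) and derives a tier. The criterion keys, the scoring scale, and the rule itself (any single 3 means high, an average of 1.5 or more means medium) are assumptions for illustration, not a method defined by the AI RMF.

```python
# One key per classification criterion in this step (names are hypothetical).
CRITERIA = (
    "data_sensitivity",
    "decision_consequences",
    "regulatory_impact",
    "model_complexity",
    "remediation_cost",
    "security_exposure",
    "value_misalignment",
    "opacity",
)


def risk_tier(scores: dict[str, int]) -> str:
    """Return "low", "medium", or "high" from per-criterion scores of 1 to 3."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"unscored criteria: {missing}")
    if any(not 1 <= scores[c] <= 3 for c in CRITERIA):
        raise ValueError("scores must be 1, 2, or 3")

    worst = max(scores[c] for c in CRITERIA)
    average = sum(scores[c] for c in CRITERIA) / len(CRITERIA)
    if worst == 3:
        return "high"    # one severe concern is enough to escalate
    if average >= 1.5:
        return "medium"  # several moderate concerns add up
    return "low"


# Hypothetical systems.
chatbot = dict.fromkeys(CRITERIA, 1)
credit_model = {**chatbot, "decision_consequences": 3, "data_sensitivity": 3}
forecasting = {
    **chatbot,
    "data_sensitivity": 2,
    "model_complexity": 2,
    "remediation_cost": 2,
    "opacity": 2,
}

tiers = {
    "chatbot": risk_tier(chatbot),
    "credit_model": risk_tier(credit_model),
    "forecasting": risk_tier(forecasting),
}
```

Escalating on the single worst score, rather than on the average alone, keeps one severe concern from being diluted by several benign ones.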

Step 4: Run Targeted Risk Assessments

Use a mix of technical and human-centered evaluation:

- Fairness and bias audits across demographics

- Explainability assessments (internal and customer-facing)

- Adversarial testing and threat modeling

- Stakeholder mapping and scenario planning

Why it matters: Risk doesn’t only emerge from models. It’s shaped by how AI intersects with real-world systems and users.

Step 5: Apply and Monitor Controls

For high-risk systems:

- Implement review gates before deployment

- Require documentation and justification for key design decisions

- Activate live monitoring for drift, performance degradation, and unusual outputs

Why it matters: Controls lose value when they’re passive or outdated. They should evolve with the system they’re designed to protect.
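Live drift monitoring can take many forms. One common technique, used here purely as an example, is the population stability index (PSI), which compares the distribution of a model input or score at training time with what the system sees in production. The function name, bin count, sample data, and the 0.25 alert threshold are assumptions for this sketch.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a current sample of one feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: training baseline vs. shifted production data.
baseline = [i / 100 for i in range(100)]
shifted = [0.3 + i / 100 for i in range(100)]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
drift_alert = psi_shifted > 0.25  # assumed alert threshold
```

In practice a check like this would run on a schedule for each monitored feature, with alerts feeding the same review gates used before deployment.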

Why the NIST AI RMF Is More Than a Compliance Tool

The AI RMF isn’t just a regulatory hedge. It’s a maturity model, a way to develop institutional discipline as AI becomes part of your core infrastructure.

It helps answer key leadership questions:

- Can we explain how AI makes decisions, and who’s accountable when it fails?

- Are we governing consistently across teams, vendors, and platforms?

- Do we have repeatable practices to identify and mitigate AI risk, and could we communicate them to regulators or customers if asked?

That’s what separates organizations that “use AI” from those that deploy AI responsibly at scale.

Case Studies: How Leading Companies Are Doing It

Workday

Mapped its AI governance practices to the AI RMF, creating alignment across product, legal, and Responsible AI teams. The AI RMF became a reference model for evaluating third-party AI and vendor platforms.

IBM

Integrated AI RMF principles into watsonx governance. Structured adoption in three phases: define, audit, iterate. Its teams use the AI RMF to stay adaptable in a fast-moving regulatory environment.

To see how explainability, bias mitigation, and regulatory prep converge in top AI programs, read Overreliance on AI: Addressing Automation Bias Today.

Where Most Programs Go Wrong

| Pitfall | Insight | Solution |
|---|---|---|
| Blind spots in inventory | Missed systems create hidden risk | Conduct AI discovery audits quarterly |
| Misaligned controls | Over- or under-engineering risk responses | Use tiering to scale governance appropriately |
| Governance as a project | Short-lived compliance pushes lose momentum | Build into workflows, not just policy decks |
| Talent and tooling gaps | Most teams lack AI-specific governance skills | Upskill internally or bring in trusted partners |

Looking Ahead

On July 26, 2024, NIST released a GenAI Profile (NIST AI 600-1) targeting risks tied to LLMs and synthetic media. New sector-specific playbooks (finance, healthcare) and global harmonization efforts (EU AI Act, ISO/IEC 42001) are in motion.

The message is clear: governance cannot be static. The AI RMF’s strength lies in its adaptability, not rigidity.

Want to stay ahead of AI governance trends and GenAI-specific risks? Explore the Ethics of Generative AI in Finance to see how forward-looking firms are adapting.

Key Takeaways for Executive Teams

- AI governance is now a leadership issue, not an operational or technical afterthought.

- The NIST AI RMF provides a mature governance blueprint for strategic risk management.

- Lumenova AI operationalizes this framework, helping organizations implement controls, build visibility, and run AI with integrity.

Looking to update your board on AI risk? Schedule a demo with Lumenova AI to experience how our platform operationalizes the NIST AI RMF. Designed for enterprise scale, our solution offers measurable insights and expert guidance to help you achieve strong AI risk governance and compliance.